Common optimization methods

2022-07-05 05:33:00 【Li Junfeng】

Preface

Training a neural network essentially means finding the parameters that minimize the loss function. This minimum is hard to find because there are so many parameters, so several optimization methods are in common use.

Stochastic gradient descent (SGD)

This is the most classic and most widely used algorithm. It is far better than random search, but it still has some shortcomings.
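The article does not write out the update rule, so here is a minimal sketch of the standard gradient-descent step $W \leftarrow W - \eta \frac{\partial L}{\partial W}$. It assumes parameters and gradients are NumPy arrays stored in dictionaries keyed by name; the class name `SGD` and this layout are illustrative choices, not the author's code. In true stochastic gradient descent the gradient would come from a random mini-batch; here the caller simply supplies it.

```python
import numpy as np


class SGD:
    """Plain gradient-descent step: W <- W - lr * dL/dW."""

    def __init__(self, lr=0.01):
        self.lr = lr  # step size (learning rate), eta

    def update(self, params, grads):
        # Move every parameter a fixed multiple of its gradient downhill (in place).
        for key in params:
            params[key] -= self.lr * grads[key]


# Usage: one step on the toy loss L(w) = w**2, whose gradient is 2w.
params = {"w": np.array([3.0])}
opt = SGD(lr=0.25)
opt.update(params, {"w": 2 * params["w"]})
print(params["w"])
```

With `lr=0.25` the step is $0.25 \cdot 6 = 1.5$, so `w` moves from 3.0 to 1.5.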

Shortcomings

- The result is highly sensitive to the step size (learning rate): if the step is too large, training does not converge; if it is too small, training takes too long and may never converge in practice.

- For some functions the gradient does not point toward the minimum. For example, $z = \frac{x^2}{100} + y^2$ is symmetric about both axes, and its graph is a long, narrow “valley” stretched along the $x$ direction. Away from the $x$-axis its gradient $\left(\frac{x}{50}, 2y\right)$ is dominated by the $y$ component, so it points across the valley rather than toward the minimum at $(0, 0)$.

- Because the gradient often does not point at the minimum, the path toward the optimum zig-zags, and in relatively “flat” regions, where the gradient is small, progress can stall before the optimum is reached.
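To make these shortcomings concrete, here is a small sketch that runs plain gradient descent on $z = \frac{x^2}{100} + y^2$, whose gradient is $\left(\frac{x}{50}, 2y\right)$. The starting point $(-7, 2)$, the step sizes, and the step counts are arbitrary choices for illustration.

```python
def grad(x, y):
    # Gradient of f(x, y) = x**2 / 100 + y**2
    return x / 50, 2 * y


def descend(lr, steps, start=(-7.0, 2.0)):
    """Run plain gradient descent and return every visited point."""
    x, y = start
    path = [(x, y)]
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
        path.append((x, y))
    return path


path = descend(lr=0.9, steps=30)
# Each step multiplies y by 1 - 2 * 0.9 = -0.8, so y flips sign every step: the zig-zag.
print([round(p[1], 3) for p in path[:5]])
# Each step multiplies x by only 1 - 0.9 / 50 = 0.982, so progress along the valley is slow.
print(round(path[-1][0], 2))
# A slightly larger step (each step multiplies y by -1.2) makes y blow up instead of converging.
print(abs(descend(lr=1.1, steps=30)[-1][1]))
```

After 30 steps with `lr=0.9`, $y$ is already tiny while $x$ has only moved from $-7$ to about $-4$, which is exactly the slow, tortuous progress described above.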

AdaGrad

The gradient direction itself is hard to improve, but the step size can still be optimized.

A common approach is learning-rate (step-size) decay. The step size starts relatively large: early in training the parameters are usually far from the optimum, so bigger steps speed things up. As training proceeds, the step size is reduced, because the parameters are now closer to the optimum and an overly large step may overshoot it or fail to converge.
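The article does not specify a decay schedule. As one hypothetical example, the sketch below uses a simple "step decay" schedule $\eta_t = \eta_0 \cdot \text{drop}^{\lfloor t / \text{every} \rfloor}$; the function name `step_decay` and the parameters `drop` and `every` are assumptions chosen for illustration.

```python
def step_decay(lr0, epoch, drop=0.5, every=10):
    """Hypothetical step-decay schedule: multiply lr0 by `drop` once every `every` epochs."""
    return lr0 * drop ** (epoch // every)


# Large steps early (far from the optimum), smaller steps later (closer to it).
for epoch in (0, 9, 10, 25, 40):
    print(epoch, step_decay(0.1, epoch))
```

The rate stays at 0.1 for the first 10 epochs, then halves every 10 epochs after that.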

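AdaGrad makes this decay adaptive and per-parameter, based on the gradients seen so far during training: $h$ accumulates the squared gradients (element-wise), and each parameter's step is scaled by $\frac{1}{\sqrt{h}}$, so parameters that have received large gradients take smaller steps.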

$$
h \leftarrow h + \frac{\partial L}{\partial W} \cdot \frac{\partial L}{\partial W}
$$

$$
W \leftarrow W - \eta \frac{1}{\sqrt{h}} \cdot \frac{\partial L}{\partial W}
$$
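A minimal sketch of the two AdaGrad formulas above, assuming parameters and gradients are NumPy arrays stored in dictionaries keyed by name (class and attribute names are illustrative). The small constant `eps` is an assumption not in the formulas; it is commonly added so the first step never divides by zero.

```python
import numpy as np


class AdaGrad:
    """AdaGrad: each parameter's step shrinks as its squared gradients accumulate."""

    def __init__(self, lr=0.01, eps=1e-7):
        self.lr = lr    # base step size, eta
        self.eps = eps  # assumed small constant to avoid division by zero
        self.h = None   # running sum of squared gradients, one array per parameter

    def update(self, params, grads):
        if self.h is None:
            self.h = {key: np.zeros_like(val) for key, val in params.items()}
        for key in params:
            self.h[key] += grads[key] * grads[key]  # h <- h + g * g (element-wise)
            params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + self.eps)


# Usage: two weights whose gradients differ by a factor of 100.
params = {"w": np.array([1.0, 1.0])}
grads = {"w": np.array([10.0, 0.1])}
opt = AdaGrad(lr=0.1)
steps = []
for _ in range(3):
    before = params["w"].copy()
    opt.update(params, grads)
    steps.append(before - params["w"])
print(steps)
```

Because each gradient is divided by the root of its own accumulated square, both weights move by about `lr` on the first step despite the 100x difference in gradient size, and the steps then shrink like $\frac{1}{\sqrt{t}}$ (about 0.1, 0.071, 0.058).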

Momentum

The name comes from momentum in physics: $p = m \cdot v$. A force $F$ acting for a time $\Delta t$ changes the momentum by the impulse: $F \cdot \Delta t = m \cdot \Delta v$.

To picture the method, imagine a ball on a surface in three-dimensional space that needs to roll down to the lowest point.

For simplicity, take the ball's mass to be 1. Dividing the impulse relation by $\Delta t$ and letting $\Delta t \to 0$ gives $F = m \cdot \frac{dv}{dt} = \frac{dv}{dt}$, so over one unit time step the velocity changes by the applied force: $\Delta v = F$.

Two “forces” act on the ball: the component of gravity caused by the slope at its current position (the gradient), and friction that opposes its motion (so the velocity decays when there is no gradient).

This gives the velocity and position of the ball at the next step, where $\alpha$ is the friction-like decay factor and $\eta$ is the learning rate:

$$
v \leftarrow \alpha \cdot v - \eta \frac{\partial L}{\partial W}
$$

$$
W \leftarrow W + v
$$
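A minimal sketch of the momentum update $v \leftarrow \alpha v - \eta \frac{\partial L}{\partial W}$, $W \leftarrow W + v$, assuming parameters and gradients are NumPy arrays stored in dictionaries keyed by name (class and attribute names are illustrative). The default $\alpha = 0.9$ is a common choice, not a value given in the article.

```python
import numpy as np


class Momentum:
    """Momentum: v <- alpha * v - lr * dL/dW, then W <- W + v."""

    def __init__(self, lr=0.01, alpha=0.9):
        self.lr = lr        # learning rate, eta
        self.alpha = alpha  # "friction": fraction of the velocity kept each step
        self.v = None       # velocity, one array per parameter

    def update(self, params, grads):
        if self.v is None:
            self.v = {key: np.zeros_like(val) for key, val in params.items()}
        for key in params:
            self.v[key] = self.alpha * self.v[key] - self.lr * grads[key]
            params[key] += self.v[key]


# Usage: a constant slope of 1.0, so the ball keeps accelerating downhill.
params = {"w": np.array([0.0])}
opt = Momentum(lr=0.1, alpha=0.9)
for _ in range(3):
    opt.update(params, {"w": np.array([1.0])})
print(params["w"], opt.v["w"])
```

Under a constant gradient the velocity grows from -0.1 to -0.19 to -0.271, approaching $-\frac{\eta}{1 - \alpha} = -1$, so each step is larger than the last, unlike plain gradient descent.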

Advantage

Momentum largely overcomes the problem of gradients that do not point toward the optimum: even when a particular gradient points elsewhere, as long as the ball has built up velocity in the direction of the optimum, it keeps moving toward it.
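A rough comparison on the valley function $z = \frac{x^2}{100} + y^2$, assuming the same start $(-7, 2)$, $\eta = 0.9$ for both methods, $\alpha = 0.9$ for momentum, and 100 steps; all of these settings are arbitrary choices for illustration, not values from the article.

```python
import math


def grad(x, y):
    # Gradient of f(x, y) = x**2 / 100 + y**2
    return x / 50, 2 * y


def run(lr, steps, alpha=None, start=(-7.0, 2.0)):
    """Plain gradient descent if alpha is None, otherwise the momentum update."""
    x, y = start
    vx = vy = 0.0
    for _ in range(steps):
        gx, gy = grad(x, y)
        if alpha is None:
            x, y = x - lr * gx, y - lr * gy
        else:
            vx = alpha * vx - lr * gx  # v <- alpha * v - lr * dL/dW
            vy = alpha * vy - lr * gy
            x, y = x + vx, y + vy      # W <- W + v
    return x, y


gd = run(lr=0.9, steps=100)
mom = run(lr=0.9, steps=100, alpha=0.9)
print("gradient descent distance to minimum:", math.hypot(*gd))
print("momentum distance to minimum:        ", math.hypot(*mom))
```

With these settings plain gradient descent is still more than 1 unit from the minimum at the origin after 100 steps, because it crawls along the shallow $x$ direction, while the momentum run ends within a few hundredths of it: the velocity built up along $x$ keeps carrying the point down the valley.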

边栏推荐

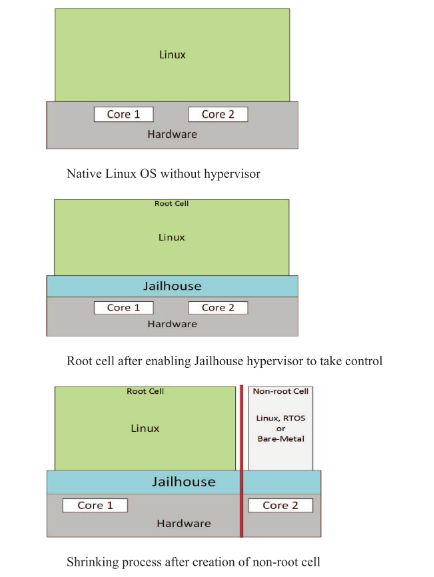

- 【Jailhouse 文章】Look Mum, no VM Exits

- Detailed explanation of expression (csp-j 2021 expr) topic

- 每日一题-搜索二维矩阵ps二维数组的查找

- How can the Solon framework easily obtain the response time of each request?

- 剑指 Offer 58 - II. 左旋转字符串

- AtCoder Grand Contest 013 E - Placing Squares

- Demonstration of using Solon auth authentication framework (simpler authentication framework)

- Using HashMap to realize simple cache

- How many checks does kubedm series-01-preflight have

- Introduction to tools in TF-A

猜你喜欢

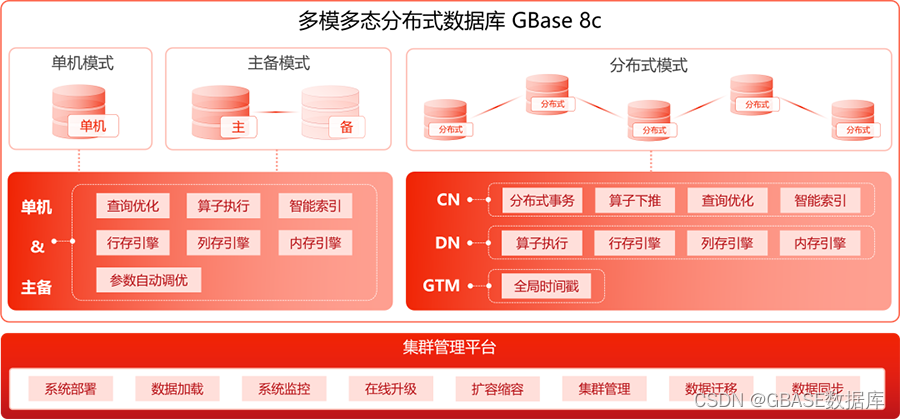

Support multi-mode polymorphic gbase 8C database continuous innovation and heavy upgrade

![[to be continued] [UE4 notes] L3 import resources and project migration](/img/81/6f75f8fbe60e037b45db2037d87bcf.jpg)

[to be continued] [UE4 notes] L3 import resources and project migration

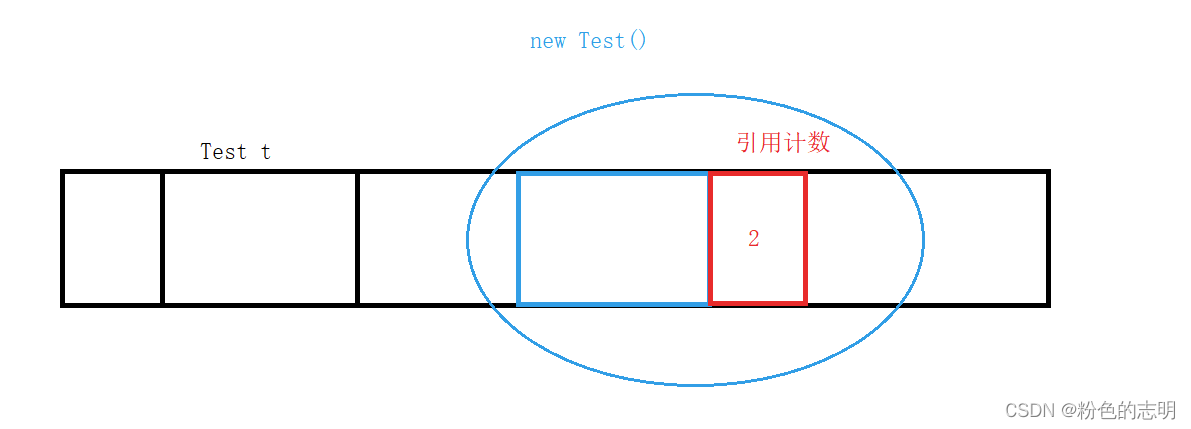

浅谈JVM(面试常考)

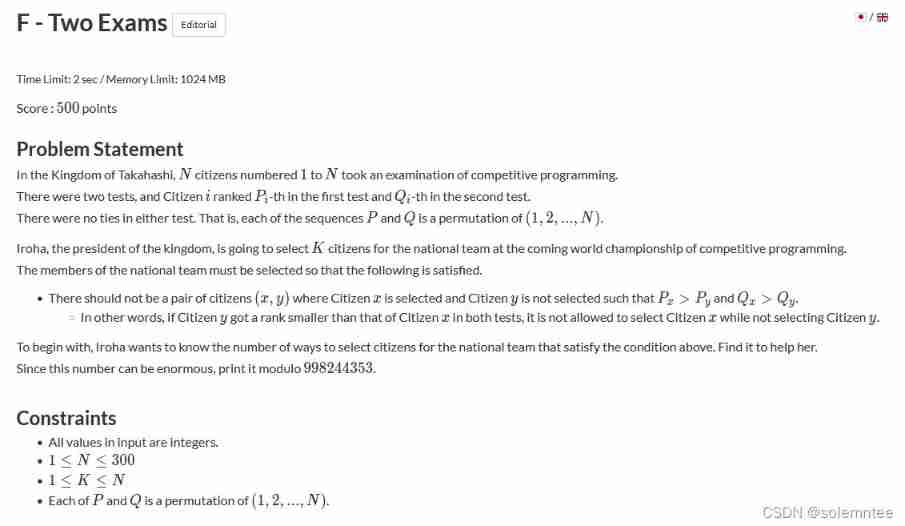

F - Two Exam(AtCoder Beginner Contest 238)

【Jailhouse 文章】Performance measurements for hypervisors on embedded ARM processors

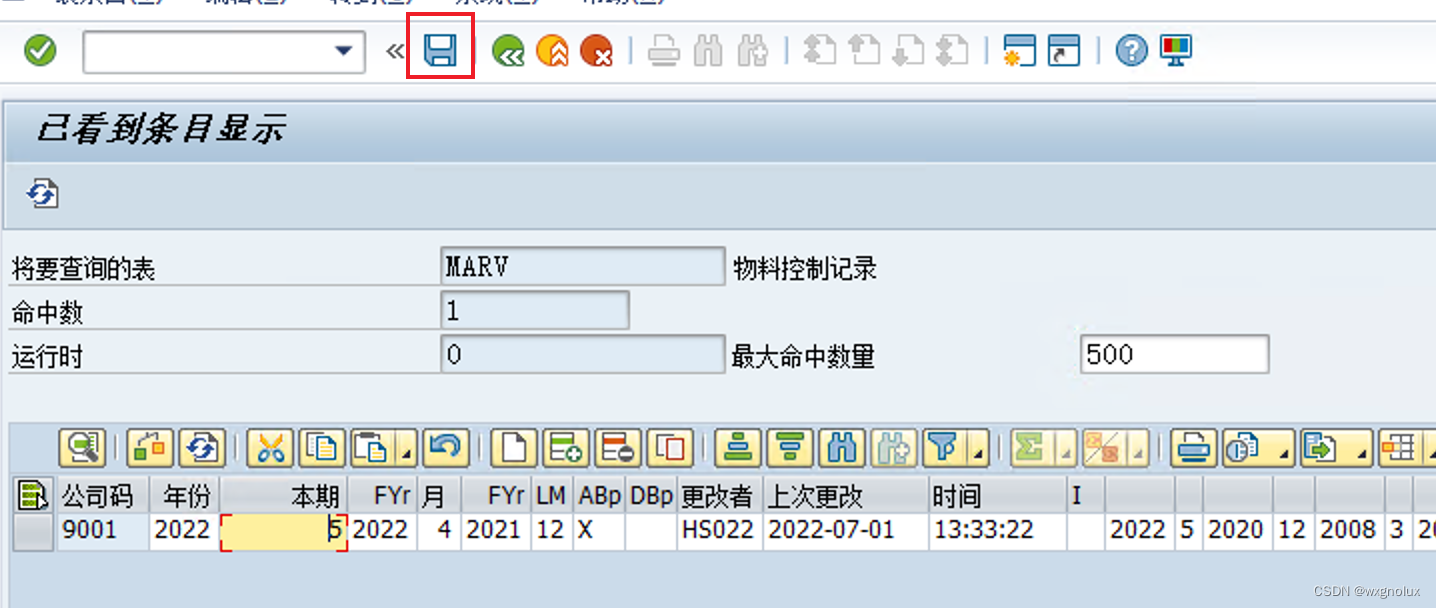

SAP-修改系统表数据的方法

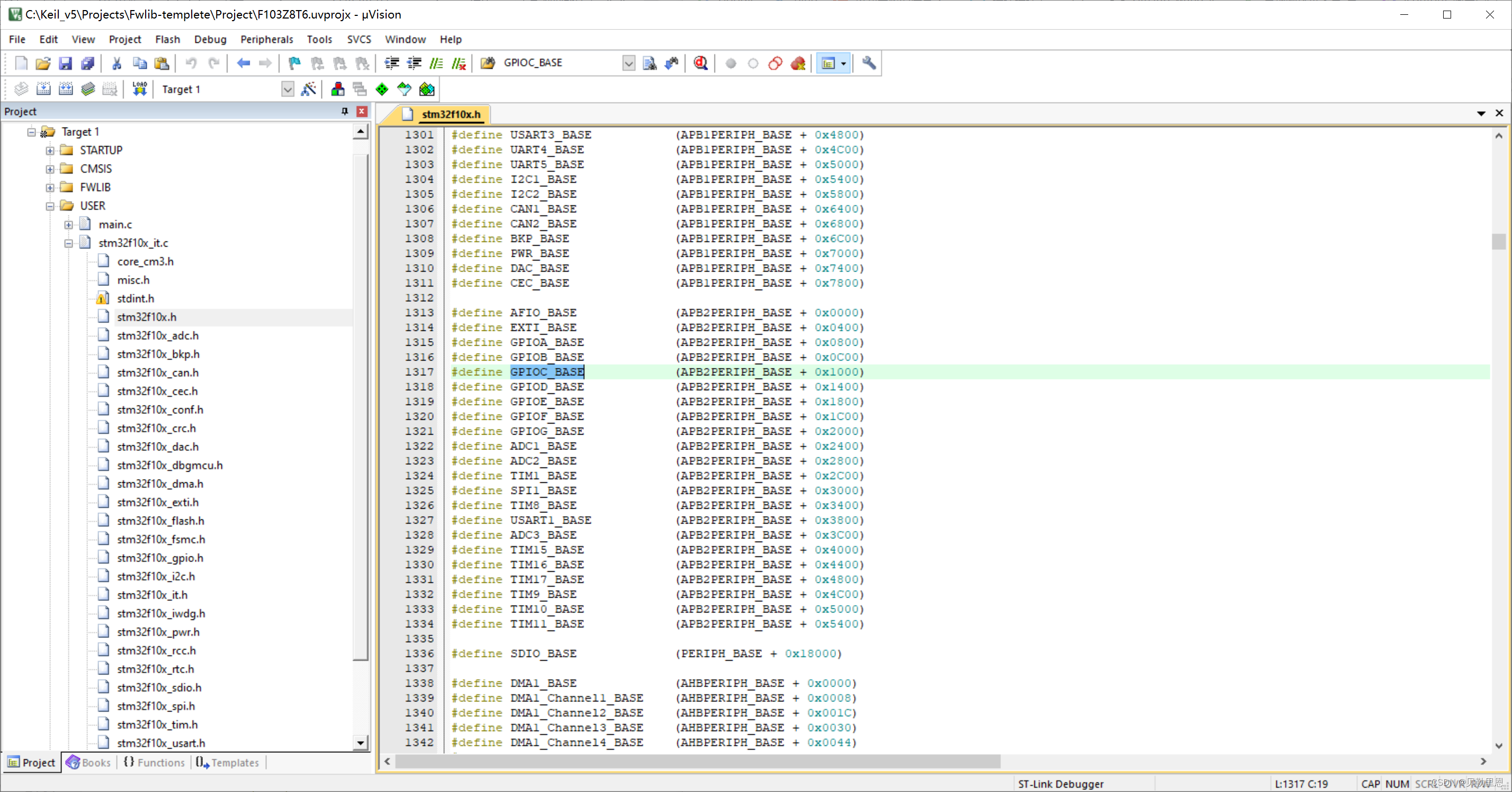

用STM32点个灯

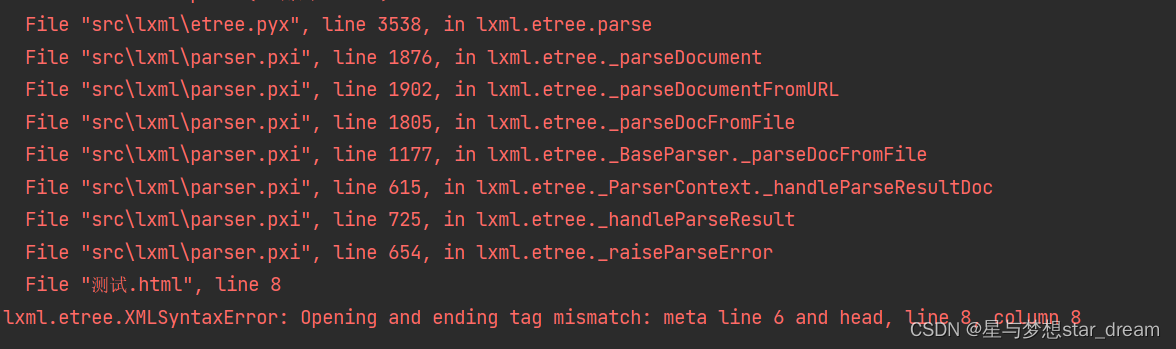

lxml.etree.XMLSyntaxError: Opening and ending tag mismatch: meta line 6 and head, line 8, column 8

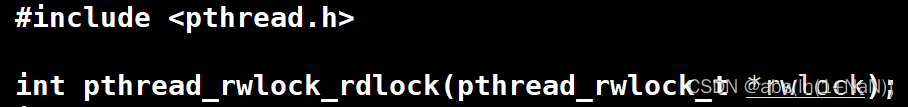

读者写者模型

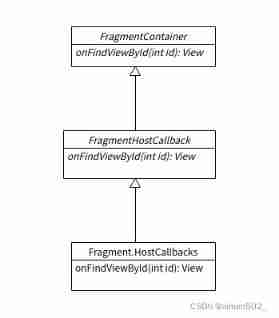

Fragment addition failed error lookup

随机推荐

Gbase database helps the development of digital finance in the Bay Area

动漫评分数据分析与可视化 与 IT行业招聘数据分析与可视化

剑指 Offer 53 - I. 在排序数组中查找数字 I

Sword finger offer 58 - ii Rotate string left

Binary search basis

Pointnet++的改进

Haut OJ 1357: lunch question (I) -- high precision multiplication

从Dijkstra的图灵奖演讲论科技创业者特点

Haut OJ 1401: praise energy

剑指 Offer 53 - II. 0~n-1中缺失的数字

Acwing 4300. Two operations

游戏商城毕业设计

Double pointer Foundation

EOJ 2021.10 E. XOR tree

[to be continued] [UE4 notes] L2 interface introduction

CF1634E Fair Share

To the distance we have been looking for -- film review of "flying house journey"

Developing desktop applications with electron

常见的最优化方法

lxml. etree. XMLSyntaxError: Opening and ending tag mismatch: meta line 6 and head, line 8, column 8