
Understanding disentangling in β-VAE: paper reading notes

2022-07-06 18:46:00, by zeronose


Preface

Paper: Understanding disentangling in β-VAE

Link to the original paper: link

Understanding disentangling in β-VAE is a follow-up paper that builds on β-VAE.

β-VAE left two open problems:

1. β-VAE simply adds a hyperparameter β to the KL term and observes that the model then learns disentangled representations, but it gives no good explanation of why weighting the KL term by β produces disentanglement.

2. β-VAE found that when disentanglement is good, reconstruction is poor, and when reconstruction is good, disentanglement is poor, so the two have to be traded off against each other.

Building on this, Understanding disentangling in β-VAE uses information bottleneck theory to explain why β-VAE disentangles. To address the trade-off between disentanglement and reconstruction, it also proposes its own training method: gradually increasing the information capacity of the latent variables during training.

The original paper also reviews VAE and β-VAE; I won't repeat that here. If you're interested, see my earlier articles:

VAE

β-VAE

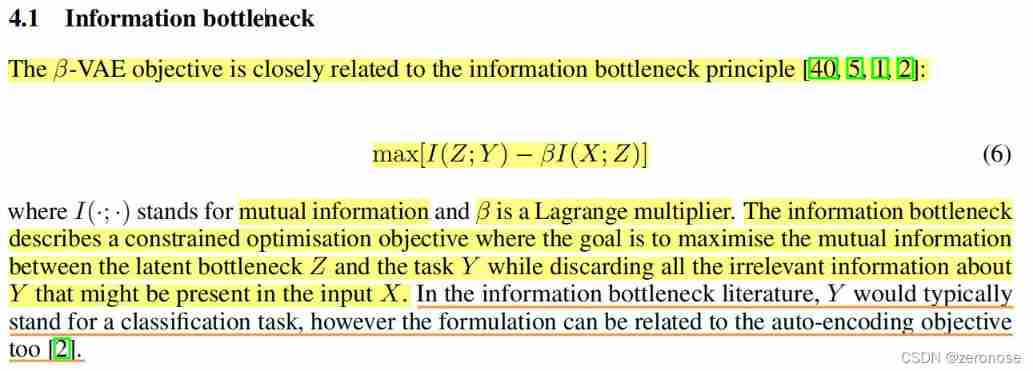

I. What is the information bottleneck?

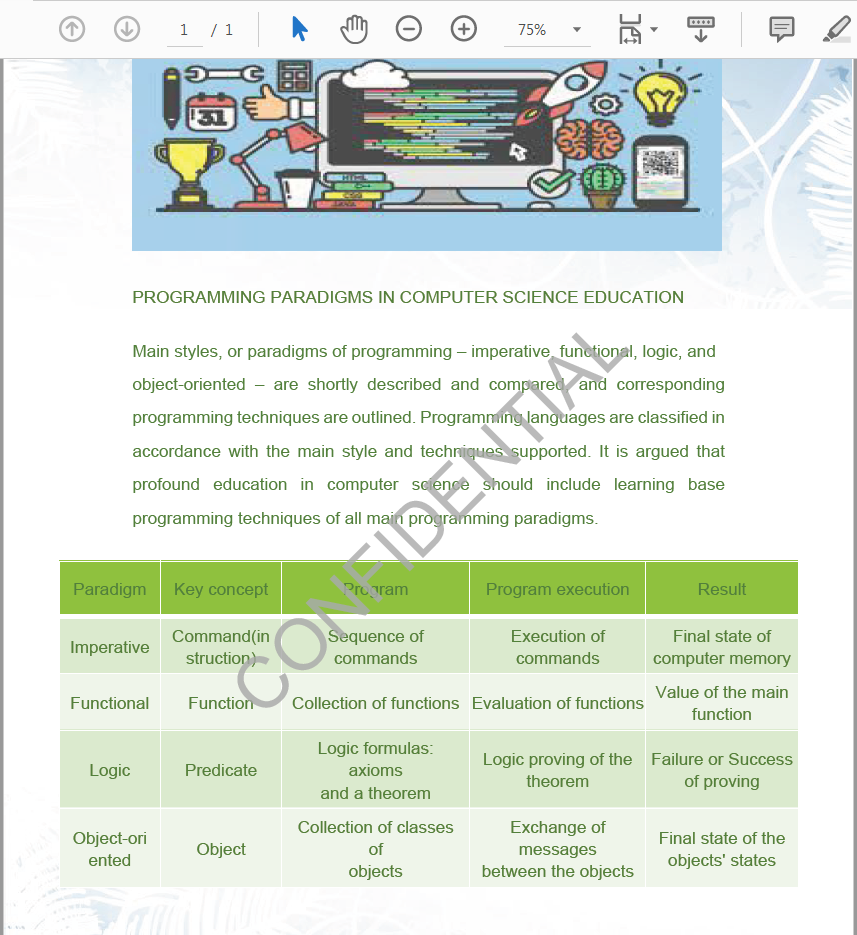

The figure below shows the original paper's explanation of the information bottleneck. In essence, the information bottleneck describes a constrained optimization objective: maximize the mutual information between the latent bottleneck Z and the task Y, while discarding all information in the input X that is irrelevant to Y.

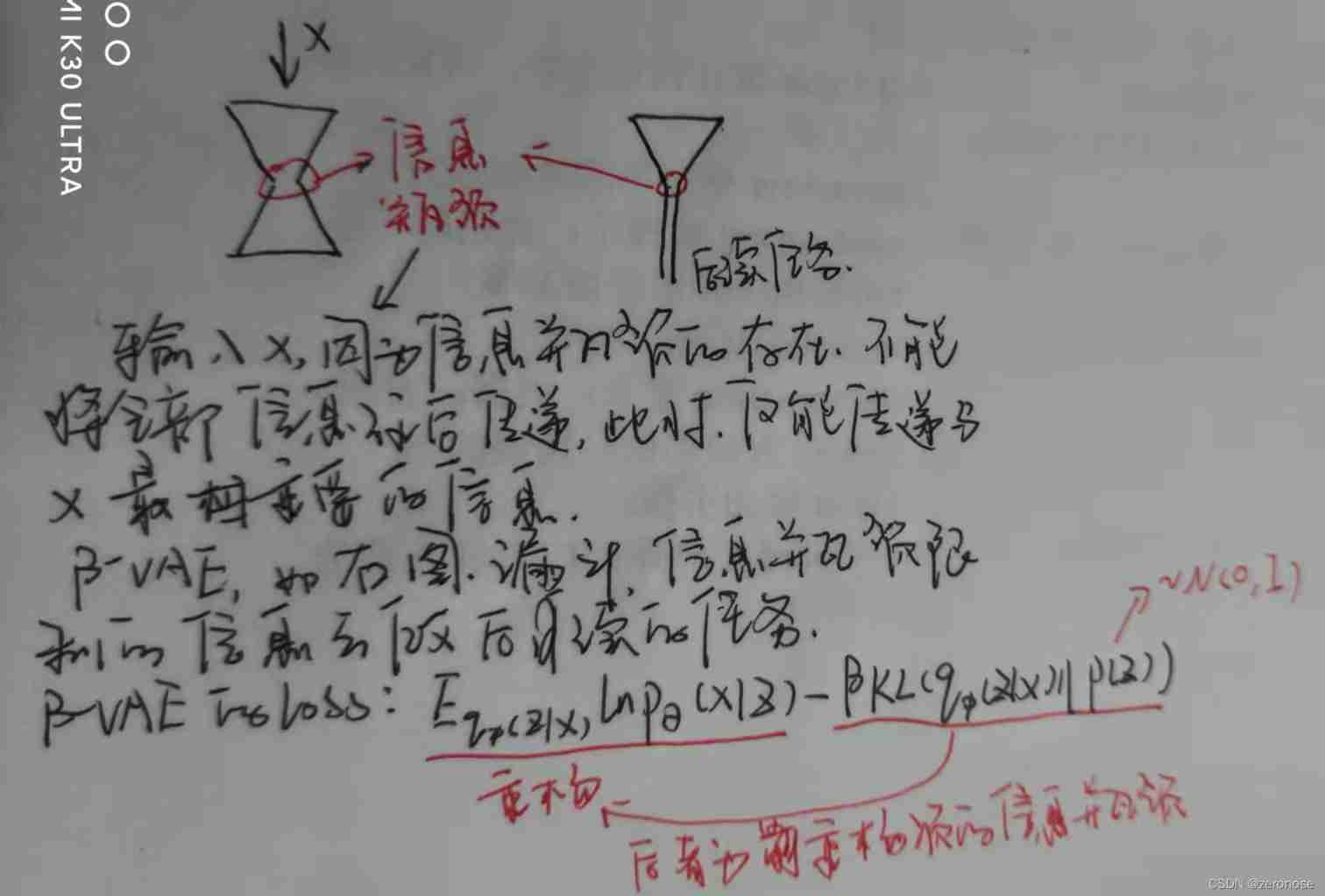
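For reference, the information bottleneck of Tishby et al. is usually written as the Lagrangian below, where the multiplier β sets how strongly the representation Z is compressed relative to how much it keeps about Y. When this is applied to β-VAE, Y is the input x itself (the reconstruction target), and the data-averaged KL term of the β-VAE loss is an upper bound on I(X;Z), which is why it acts as the compression penalty. This is the standard formulation, given here as a summary rather than a transcription of the figure:

$$\max \left[ I(Z;Y) - \beta \, I(X;Z) \right]$$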

Drawing figures is tedious, so please make do with the rough sketch below.

The β-VAE loss function is:

$$\mathcal{L}(\theta, \phi, \beta; x, z) = \mathbb{E}_{q_\phi(z|x)}\left[\ln p_\theta(x|z)\right] - \beta \, D_{KL}\left(q_\phi(z|x) \,\|\, p(z)\right)$$

The first term on the right-hand side is the reconstruction term and the second is the regularization term. The second term acts as an information bottleneck on the first: increasing its weight β pushes q_ϕ(z|x) closer to the prior p(z), which is a standard normal distribution, and this limits how much information about x the latent variable z can carry. As a result, a large β gives good disentanglement but poor reconstruction, while a small β gives poor disentanglement but good reconstruction.
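To make the two terms concrete, here is a minimal pure-Python sketch of the per-sample β-VAE loss, i.e. the negative of the objective above, so it is minimized. It assumes a Bernoulli decoder (pixel values in [0, 1]) and a diagonal Gaussian encoder parameterized by `mu` and `logvar`, for which the KL to the standard normal prior has a closed form. The function names are my own, not from the paper or any library; a real implementation would use a tensor library and average over a mini-batch.

```python
import math


def gaussian_kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over latent dims."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def bernoulli_nll(x, x_prob, eps=1e-7):
    """Negative Bernoulli log-likelihood -ln p(x|z) for targets in [0, 1]."""
    total = 0.0
    for xi, pi in zip(x, x_prob):
        pi = min(max(pi, eps), 1.0 - eps)  # clamp so log() never sees 0
        total -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    return total


def beta_vae_loss(x, x_prob, mu, logvar, beta):
    """Negative beta-VAE objective for one sample.

    x_prob is the decoder output for a single z ~ q(z|x), i.e. a one-sample
    Monte Carlo estimate of the expectation term.
    Returns (total, reconstruction term, KL term).
    """
    recon = bernoulli_nll(x, x_prob)
    kl = gaussian_kl_to_standard_normal(mu, logvar)
    return recon + beta * kl, recon, kl


# Usage: same reconstruction, two different beta values.
x = [1.0, 0.0, 1.0]
x_prob = [0.9, 0.2, 0.8]
mu, logvar = [0.5, -0.3], [-0.2, 0.1]
for beta in (1.0, 4.0):
    total, recon, kl = beta_vae_loss(x, x_prob, mu, logvar, beta)
    print(f"beta={beta}: total={total:.4f} recon={recon:.4f} kl={kl:.4f}")
```

Raising `beta` leaves the reconstruction term unchanged but scales up the penalty on any deviation of q(z|x) from N(0, I), which is the pressure that squeezes information out of z.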

II. New training objective

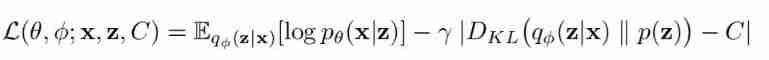

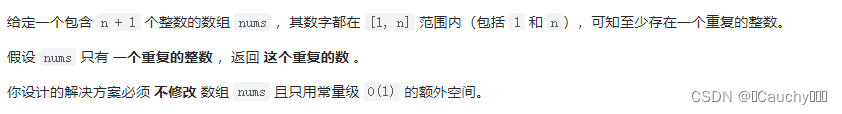

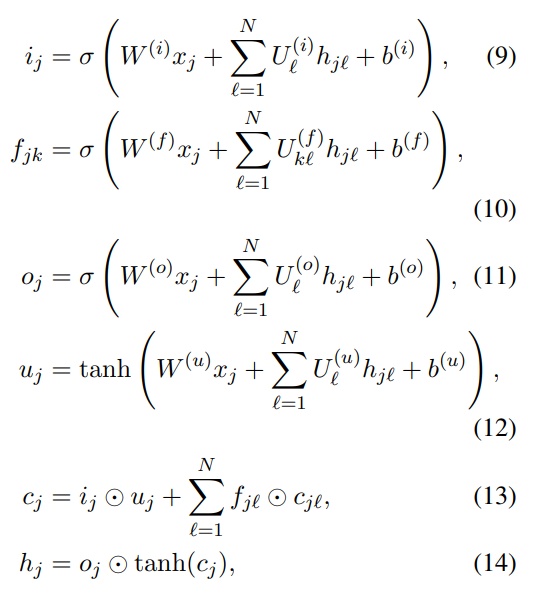

1. Loss function

Here, γ is fixed to a large value (1000) and C is a variable. During training, C is gradually increased from zero to a value large enough to produce high-quality reconstructions.

This training procedure differs from β-VAE's. In β-VAE, β is fixed before training, and changing β means retraining the model. Here, C is a variable parameter that can be read as the information capacity of the latent variables, and it increases gradually from 0 over the course of training.
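For readers who cannot see the screenshots, the capacity-controlled objective from the paper can be written in the same notation as the β-VAE loss. The KL term is no longer pushed towards zero but towards the target capacity C (in nats), and any deviation from C, above or below, is penalized with weight γ:

$$\mathcal{L}(\theta, \phi; x, z, C) = \mathbb{E}_{q_\phi(z|x)}\left[\ln p_\theta(x|z)\right] - \gamma \left| D_{KL}\left(q_\phi(z|x) \,\|\, p(z)\right) - C \right|$$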
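The difference from β-VAE is easiest to see in code. Below is a minimal pure-Python sketch, assuming a linear schedule for C (the notes only say that C grows gradually from zero) and a diagonal Gaussian encoder with a closed-form KL to N(0, I). `gamma=1000` follows the notes; `c_max` and `anneal_steps` are placeholder values chosen for illustration, not settings taken from the paper, and all function names are hypothetical.

```python
import math


def capacity_schedule(step, c_max, anneal_steps):
    """Hypothetical linear schedule: C rises from 0 to c_max (nats) over anneal_steps, then stays fixed."""
    if anneal_steps <= 0:
        return c_max
    return c_max * min(step / anneal_steps, 1.0)


def gaussian_kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over latent dims."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def capacity_loss(recon_nll, mu, logvar, step, gamma=1000.0, c_max=25.0, anneal_steps=100_000):
    """Negative capacity-controlled objective: -ln p(x|z) + gamma * |KL - C|.

    recon_nll is the reconstruction term already computed by the decoder.
    Returns (loss, KL, current C).
    """
    kl = gaussian_kl_to_standard_normal(mu, logvar)
    c = capacity_schedule(step, c_max, anneal_steps)
    return recon_nll + gamma * abs(kl - c), kl, c


# Usage: C is a function of the training step, so no retraining is needed to change it.
for step in (0, 25_000, 50_000, 100_000, 200_000):
    loss, kl, c = capacity_loss(recon_nll=120.0, mu=[0.8, -1.2], logvar=[-1.0, -0.5], step=step)
    print(f"step={step:>7} C={c:5.2f} KL={kl:.3f} loss={loss:.1f}")
```

Because the penalty is |KL − C| rather than the KL itself, the encoder is allowed (in fact pushed) to use up to C nats of information. The paper's intuition is that raising C slowly lets the factors that matter most for reconstruction be encoded first.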

Summary

By controlling how fast the encoding capacity of the latent posterior grows during training, the average KL divergence from the prior is allowed to increase gradually from zero, instead of being held down by the fixed β-weighted KL term of the original β-VAE objective. Compared with the original formulation, this promotes robust learning of disentangled representations combined with better reconstruction fidelity.

边栏推荐

- [Sun Yat sen University] information sharing of postgraduate entrance examination and re examination

- Shangsilicon Valley JUC high concurrency programming learning notes (3) multi thread lock

- 图片缩放中心

- 涂鸦智能在香港双重主板上市:市值112亿港元 年营收3亿美元

- Brief description of SQL optimization problems

- Describe the process of key exchange

- Splay

- 44 colleges and universities were selected! Publicity of distributed intelligent computing project list

- 一种用于夜间和无袖测量血压手臂可穿戴设备【翻译】

- 重磅硬核 | 一文聊透对象在 JVM 中的内存布局,以及内存对齐和压缩指针的原理及应用

猜你喜欢

抽象类与抽象方法

【LeetCode第 300 场周赛】

涂鸦智能在香港双重主板上市:市值112亿港元 年营收3亿美元

44 colleges and universities were selected! Publicity of distributed intelligent computing project list

C#/VB. Net to add text / image watermarks to PDF documents

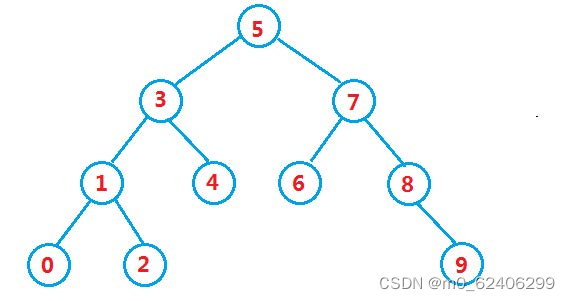

287. Find duplicates

Binary search tree

巨杉数据库首批入选金融信创解决方案!

openmv4 学习笔记1----一键下载、图像处理背景知识、LAB亮度-对比度

Some understandings of tree LSTM and DGL code implementation

随机推荐

Noninvasive and cuff free blood pressure measurement for telemedicine [translation]

SAP Fiori 应用索引大全工具和 SAP Fiori Tools 的使用介绍

Penetration test information collection - App information

Cocos2d Lua 越来越小样本 内存游戏

On AAE

[Sun Yat sen University] information sharing of postgraduate entrance examination and re examination

With the implementation of MapReduce job de emphasis, a variety of output folders

爬虫玩得好,牢饭吃到饱?这3条底线千万不能碰!

287. 寻找重复数

[Matlab] Simulink 同一模块的输入输出的变量不能同名

Interpreting cloud native technology

Deep circulation network long-term blood pressure prediction [translation]

Xu Xiang's wife Ying Ying responded to the "stock review": she wrote it!

测试行业的小伙伴,有问题可以找我哈。菜鸟一枚~

深度循环网络长期血压预测【翻译】

Video based full link Intelligent Cloud? This article explains in detail what Alibaba cloud video cloud "intelligent media production" is

Penetration test information collection - site architecture and construction

Unity资源顺序加载的一个方法

How does crmeb mall system help marketing?

Numerical analysis: least squares and ridge regression (pytoch Implementation)