
Spark standalone cluster: dynamically bringing Worker nodes online and offline

2022-07-06 16:37:00 【Ruo Miaoshen】


(1) Taking a Worker node offline

What I actually did: just shut the machine down……

Either way, its work is naturally handed over to the other nodes.

Of course, the proper way is to log in to the host of the node being taken offline and run the script that stops this machine's Worker:

$> $SPARK_HOME/sbin/stop-worker.sh

Note that there is also a stop-workers.sh, which stops all Workers. Don't mix them up.

Look carefully: one extra s!!!

If you accidentally run the plural-s script on the Master (which certainly has passwordless SSH key login configured to every Worker), then every Worker gets shut down……
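Before running either plural script, it can help to preview exactly which hosts it would reach over SSH. Below is a minimal sketch, assuming the host list is `$SPARK_CONF_DIR/workers` (with `$SPARK_HOME/conf` as the fallback directory) and that comments and blank lines are skipped, roughly the way Spark's `sbin/workers.sh` filters the file. `list_worker_hosts` is a hypothetical helper, not part of Spark.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hosts that start-workers.sh / stop-workers.sh
# would touch. Assumption: hosts are listed one per line in
# $SPARK_CONF_DIR/workers; '#' comments and blank lines are ignored.
list_worker_hosts() {
  local conf_dir="${SPARK_CONF_DIR:-$SPARK_HOME/conf}"
  local hosts_file="$conf_dir/workers"
  if [ ! -f "$hosts_file" ]; then
    echo "no workers file at $hosts_file" >&2
    return 1
  fi
  # Strip comments, then print the first field of every non-empty line.
  sed 's/#.*$//' "$hosts_file" | awk 'NF { print $1 }'
}

# Usage (on the Master): list_worker_hosts
```

If the list is longer than the single node you meant to stop, that is the cue to use the singular `stop-worker.sh` on the node itself instead.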

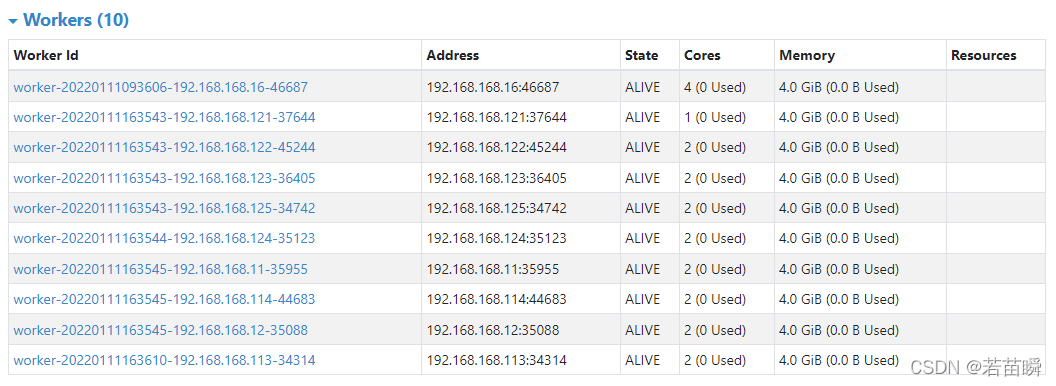

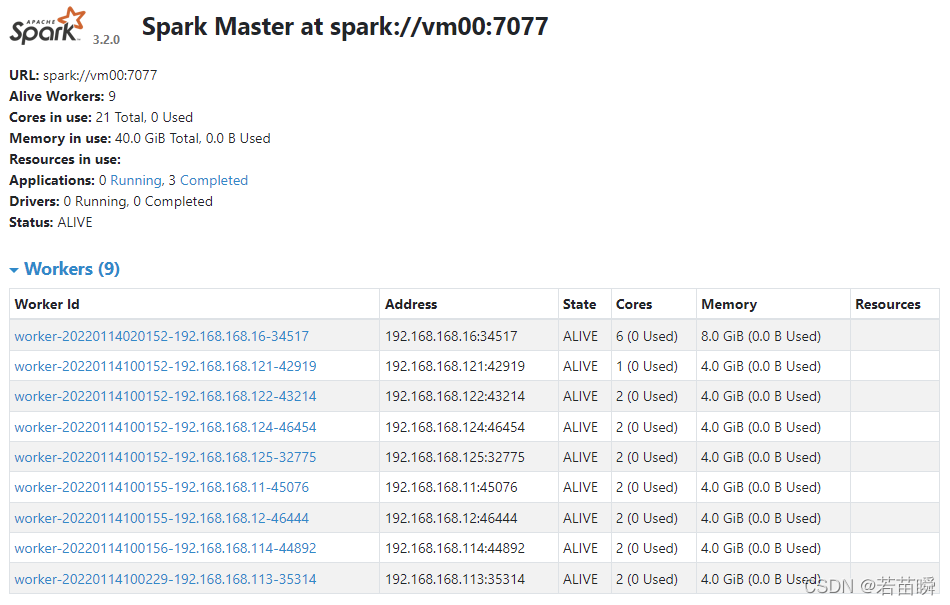

Before shutdown:

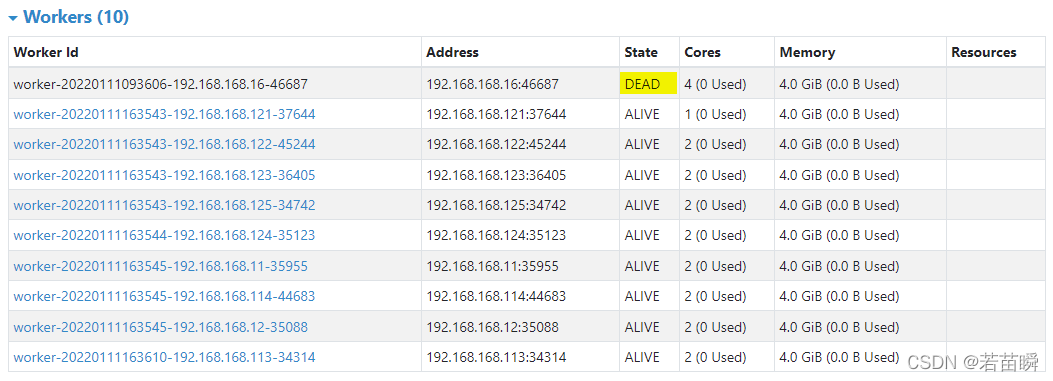

After shutdown:

After a while, the DEAD Worker disappears from the list (presumably the cluster waits for some time to see whether it reconnects).
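On the timing: the Spark standalone documentation describes `spark.dead.worker.persistence` (default 15) as the number of iterations the Master keeps a dead worker's information in the UI, so a DEAD Worker stays visible for (15 + 1) × `spark.worker.timeout` (default 60 s) after its last heartbeat. A tiny sketch of that arithmetic; the function name is hypothetical and the second call uses made-up values.

```shell
#!/usr/bin/env bash
# Sketch: how long a DEAD Worker stays listed on the Master UI, computed as
# (spark.dead.worker.persistence + 1) * spark.worker.timeout (seconds).
dead_worker_visible_seconds() {
  local worker_timeout="${1:-60}"   # spark.worker.timeout, default 60 s
  local persistence="${2:-15}"      # spark.dead.worker.persistence, default 15
  echo $(( (persistence + 1) * worker_timeout ))
}

dead_worker_visible_seconds          # prints 960 (16 minutes with the defaults)
dead_worker_visible_seconds 30 3     # prints 120 (hypothetical shorter settings)
```

Both properties are Master-side settings in the standalone docs (passed, for example, through `SPARK_MASTER_OPTS` in `spark-env.sh`), so shortening the window is a Master configuration change, not something the stopped Worker controls.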

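Instead of refreshing the Master page to compare before and after, the same information can be read from the Master web UI's JSON view. This is a rough sketch, assuming the UI runs on the default port 8080 at `vm00` and that its `/json/` page lists each worker with a `"state"` field such as `ALIVE` or `DEAD`; the helper name is hypothetical, and the exact JSON layout may differ between Spark versions, so a real JSON parser such as `jq` would be more robust than `grep`.

```shell
#!/usr/bin/env bash
# Sketch: count Workers in a given state from the Master's JSON page (stdin).
# Assumption: each worker entry carries "state" : "ALIVE" / "DEAD"; application
# and driver entries use other state values, so ALIVE/DEAD match only workers.
count_workers_in_state() {
  local state="$1"
  grep -Eo "\"state\"[[:space:]]*:[[:space:]]*\"${state}\"" | wc -l | tr -d ' '
}

# Usage against a live Master (hypothetical host/port):
#   curl -s http://vm00:8080/json/ | count_workers_in_state DEAD
```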

(2) Bringing a Worker node online

Log in to the host of the node being brought online, then run the script that starts this machine's Worker and connects it to the Spark Master:

$> $SPARK_HOME/sbin/start-worker.sh spark://vm00:7077

Note that there is also a start-workers.sh script; the difference is the same as above.

Finally, if this is a brand-new Worker, don't forget to edit the workers (or slaves) file in $SPARK_CONF_DIR and add the new Worker's hostname, so that next time the whole cluster can conveniently be started together.
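A minimal sketch of that bookkeeping, assuming one hostname per line in `$SPARK_CONF_DIR/workers`; `add_worker_host` and `remove_worker_host` are hypothetical helper names. The removal helper is the reverse step for a node that is retired for good, so that the next cluster-wide start does not try to bring it back.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to keep the workers file in sync with the cluster.
# Assumption: one hostname per line; matching is exact and whole-line.
workers_file() {
  echo "${SPARK_CONF_DIR:-$SPARK_HOME/conf}/workers"
}

# Append a hostname only if it is not already listed.
add_worker_host() {
  local host="$1" file
  file="$(workers_file)"
  touch "$file"
  if grep -qxF "$host" "$file"; then
    return 0  # already listed, nothing to do
  fi
  # Make sure the file ends with a newline before appending.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  echo "$host" >> "$file"
}

# Drop a retired hostname; rewrite in place to keep the file's permissions.
remove_worker_host() {
  local host="$1" file tmp
  file="$(workers_file)"
  [ -f "$file" ] || return 0
  tmp="$(mktemp)"
  grep -vxF "$host" "$file" > "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Because `add_worker_host` checks before appending, running it every time a node is brought online is harmless.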

By the way, a newly started Worker gets a new ID.
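The new ID is expected: as far as I can tell from Spark's Worker source, the ID has the form `worker-<yyyyMMddHHmmss>-<host>-<port>`, so it embeds the Worker's start time and its port (random by default unless `SPARK_WORKER_PORT` is set). A small sketch that splits such an ID; the function name and the sample ID are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: split a standalone Worker ID of the assumed form
# worker-<yyyyMMddHHmmss>-<host>-<port> into its parts.
parse_worker_id() {
  local id="$1" rest host
  rest="${id#worker-}"   # drop the "worker-" prefix
  host="${rest#*-}"      # drop the leading timestamp...
  host="${host%-*}"      # ...and the trailing port (the host may contain '-')
  printf 'started=%s host=%s port=%s\n' "${rest%%-*}" "$host" "${rest##*-}"
}

parse_worker_id worker-20220706163700-192.168.1.11-35711
# prints: started=20220706163700 host=192.168.1.11 port=35711
```

Stripping the timestamp from the front and the port from the back (rather than splitting on every `-`) keeps hyphenated hostnames such as `node-a` intact.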

Uh…… that's it.

边栏推荐

- 图像处理一百题(1-10)

- Problem - 922D、Robot Vacuum Cleaner - Codeforces

- Research Report on market supply and demand and strategy of China's four flat leadless (QFN) packaging industry

- Acwing - game 55 of the week

- Bidirectional linked list - all operations

- Codeforces Round #803 (Div. 2)A~C

- Installation and configuration of MariaDB

- Codeforces - 1526C1&&C2 - Potions

- pytorch提取骨架(可微)

- Flask框架配置loguru日志库

猜你喜欢

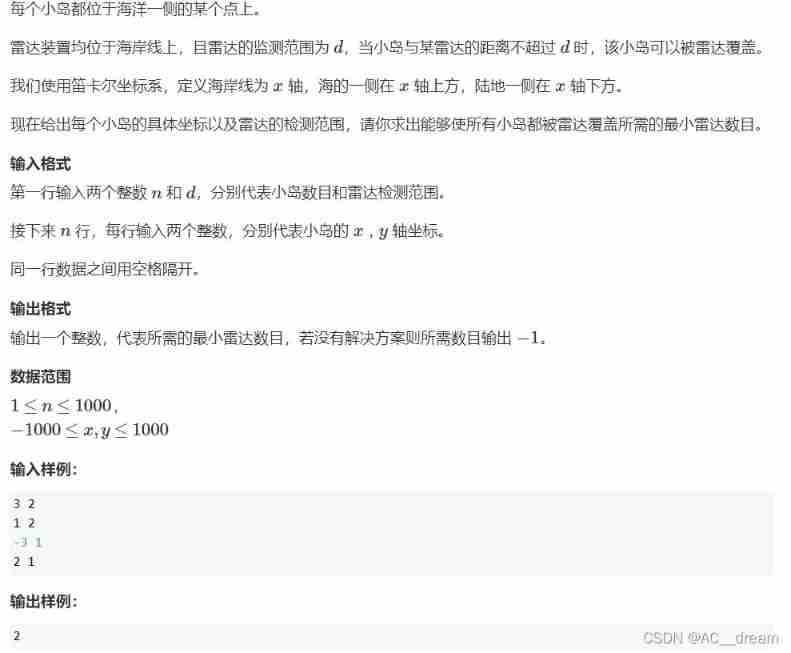

Radar equipment (greedy)

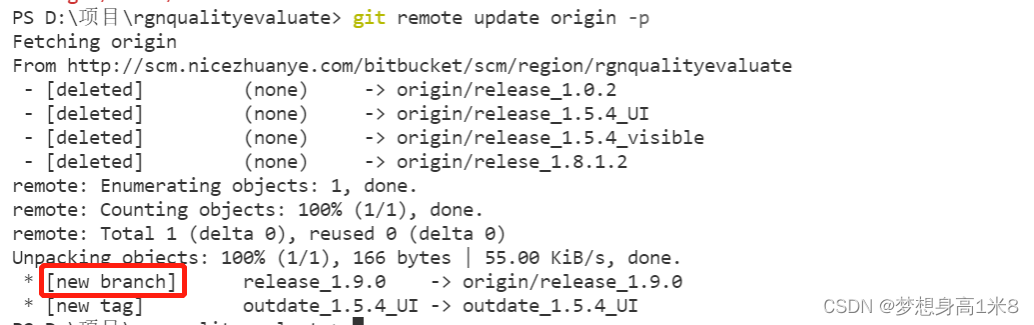

拉取分支失败,fatal: ‘origin/xxx‘ is not a commit and a branch ‘xxx‘ cannot be created from it

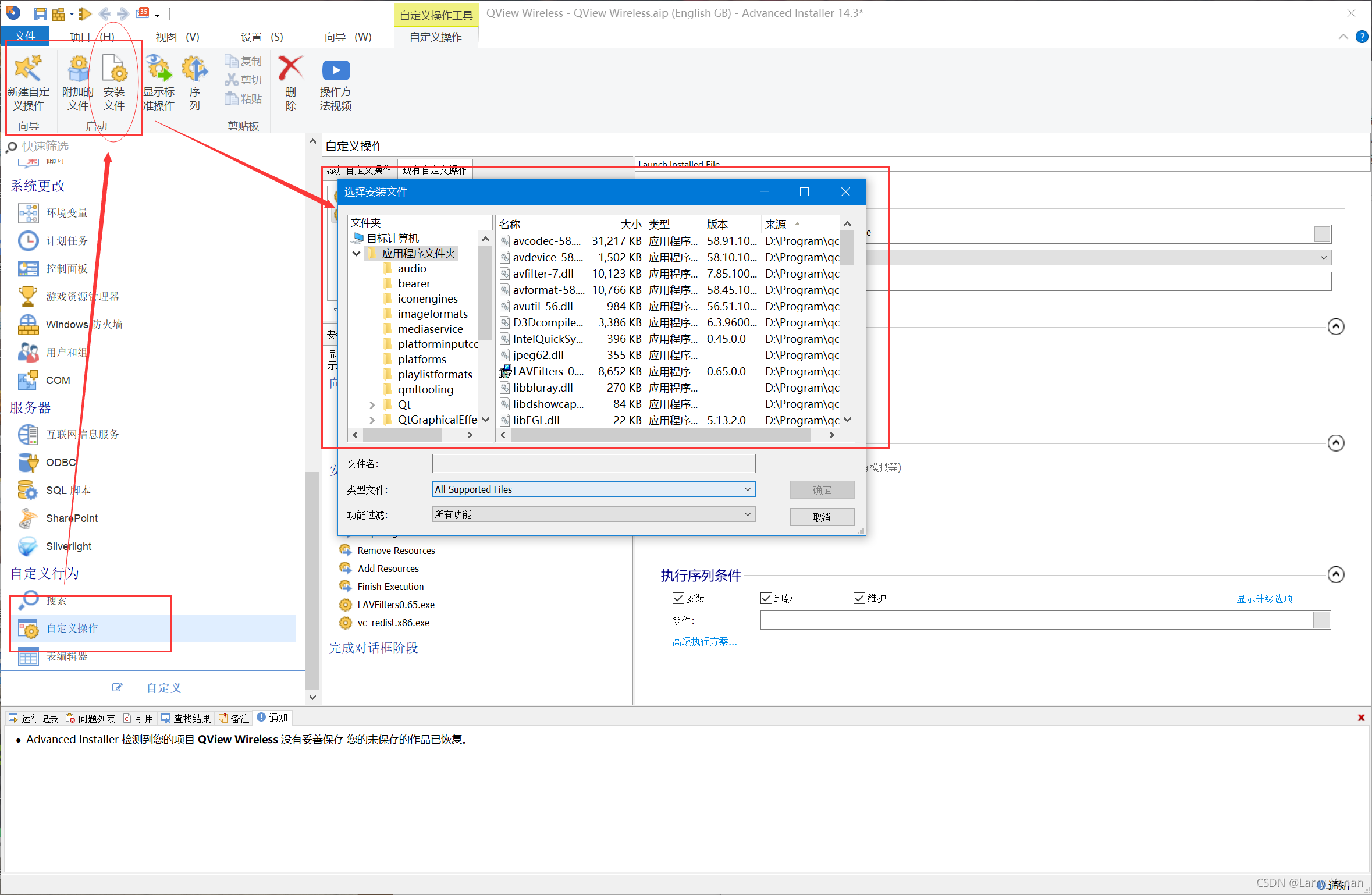

Advancedinstaller安装包自定义操作打开文件

Codeforces Round #799 (Div. 4)A~H

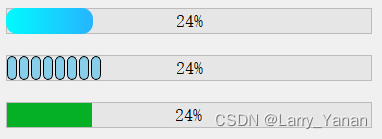

QT implementation window gradually disappears qpropertyanimation+ progress bar

Codeforces round 797 (Div. 3) no f

图像处理一百题(11-20)

Spark独立集群Worker和Executor的概念

QT实现窗口渐变消失QPropertyAnimation+进度条

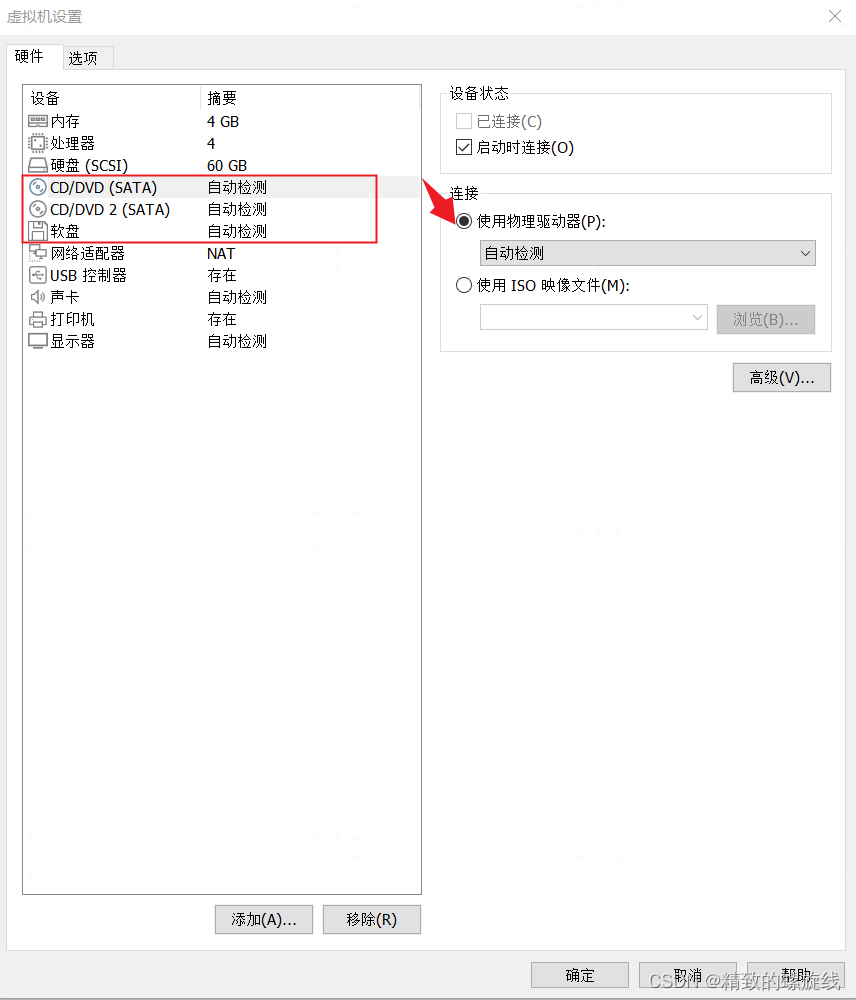

Installation and use of VMware Tools and open VM tools: solve the problems of incomplete screen and unable to transfer files of virtual machines

随机推荐

Codeforces Round #801 (Div. 2)A~C

Tree of life (tree DP)

(POJ - 3258) River hopper (two points)

Installation and use of VMware Tools and open VM tools: solve the problems of incomplete screen and unable to transfer files of virtual machines

js时间函数大全 详细的讲解 -----阿浩博客

Radar equipment (greedy)

input 只能输入数字,限定输入

Market trend report, technological innovation and market forecast of double door and multi door refrigerators in China

Codeforces Round #799 (Div. 4)A~H

第6章 DataNode

Kubernetes集群部署

第7章 __consumer_offsets topic

Some problems encountered in installing pytorch in windows11 CONDA

Chapter 2 shell operation of hfds

(lightoj - 1323) billiard balls (thinking)

Input can only input numbers, limited input

Spark独立集群动态上线下线Worker节点

QT实现窗口渐变消失QPropertyAnimation+进度条

AcWing:第58场周赛

Acwing - game 55 of the week