
Use Dumpling to back up TiDB cluster data to GCS

2022-07-07 04:02:00 【Tianxiang shop】

This document describes how to back up the data of a TiDB cluster on Kubernetes to Google Cloud Storage (GCS). In this document, "backup" always refers to full backup, that is, ad-hoc full backup and scheduled full backup.

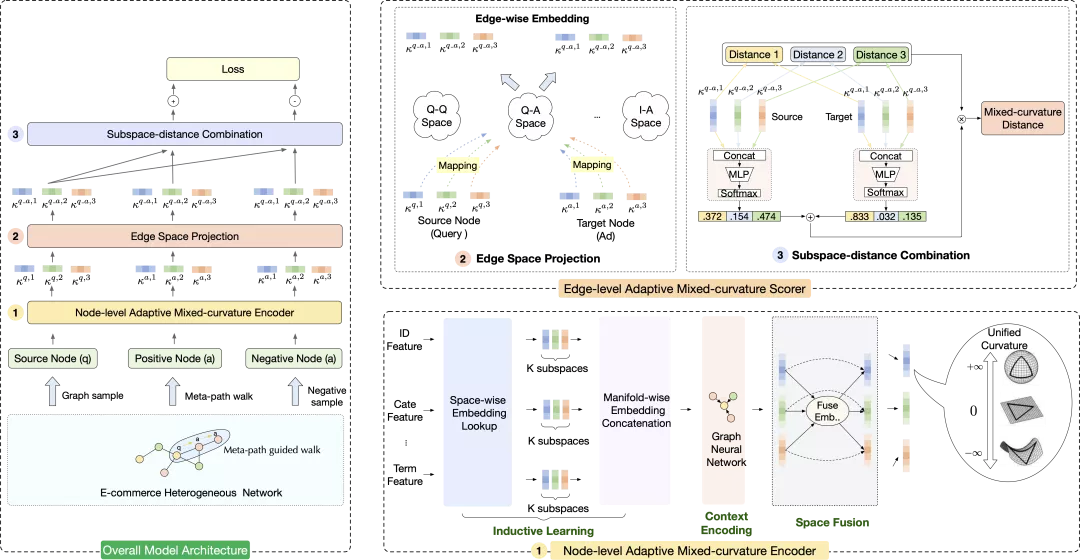

The backup method described in this document is implemented on top of the CustomResourceDefinition (CRD) of TiDB Operator (v1.1 and later). Under the hood, it uses the Dumpling tool to take a logical backup of the cluster, and then uploads the backup data to remote GCS storage.

Dumpling is a data export tool that exports data stored in TiDB or MySQL in SQL or CSV format. It can be used for logical full backup or export.

Usage scenarios

If you need to back up TiDB cluster data to GCS through ad-hoc full backup or scheduled full backup, and you have any of the following requirements, you can use the backup scheme introduced in this document:

- Export data in SQL or CSV format

- Limit the memory used by a single SQL statement

- Export a historical snapshot of TiDB data

Prerequisites

Before you use Dumpling to back up TiDB cluster data to GCS, make sure that the database account used for the backup has the following privileges:

- SELECT and UPDATE privileges on the mysql.tidb table: before and after the backup, the Backup CR needs a database account with these privileges to adjust the GC time

- SELECT

- RELOAD

- LOCK TABLES

- REPLICATION CLIENT
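As a rough illustration of the privilege list above, the following shell sketch prints GRANT statements for a dedicated backup account. The account name `backup_user`, the `%` host pattern, and the helper name `print_backup_grants` are hypothetical and do not come from the original document; review the statements and adapt them to your environment before running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print GRANT statements matching the privilege list.
# The default user name and host pattern are illustrative assumptions.
print_backup_grants() {
  local user="${1:-backup_user}"
  local host="${2:-%}"
  cat <<EOF
GRANT SELECT, RELOAD, LOCK TABLES, REPLICATION CLIENT ON *.* TO '${user}'@'${host}';
GRANT SELECT, UPDATE ON mysql.tidb TO '${user}'@'${host}';
EOF
}
```

The output can be piped into any MySQL-compatible client connected to the TiDB cluster with an account that is allowed to grant privileges.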

Ad-hoc full backup

An ad-hoc full backup is described by creating a Backup custom resource (CR) object. TiDB Operator performs the actual backup according to this Backup object. If an error occurs during the backup, the process is not retried automatically, and you need to handle the error manually.

To better illustrate how backups are used, this document provides the following example. The example backs up the data of the TiDB cluster demo1, which is deployed in the Kubernetes namespace test1. The specific steps are as follows.

Step 1: Prepare the environment for ad-hoc full backup

Download the file backup-rbac.yaml, and execute the following command to create the RBAC resources required for backup in the test1 namespace:

    kubectl apply -f backup-rbac.yaml -n test1

Grant access to the remote storage. Refer to GCS account authorization to authorize access to the GCS remote storage.

Create the backup-demo1-tidb-secret secret, which stores the password of the root account used to access the TiDB cluster:

    kubectl create secret generic backup-demo1-tidb-secret --from-literal=password=${password} --namespace=test1

The first 2 Step : Backup data to GCS

establish

BackupCR, And back up the data to GCS:kubectl apply -f backup-gcs.yamlbackup-gcs.yamlThe contents of the document are as follows :--- apiVersion: pingcap.com/v1alpha1 kind: Backup metadata: name: demo1-backup-gcs namespace: test1 spec: from: host: ${tidb_host} port: ${tidb_port} user: ${tidb_user} secretName: backup-demo1-tidb-secret gcs: secretName: gcs-secret projectId: ${project_id} bucket: ${bucket} # prefix: ${prefix} # location: us-east1 # storageClass: STANDARD_IA # objectAcl: private # bucketAcl: private # dumpling: # options: # - --threads=16 # - --rows=10000 # tableFilter: # - "test.*" storageClassName: local-storage storageSize: 10GiThe above example will TiDB The data of the cluster is fully exported and backed up to GCS.GCS The configuration of the

location、objectAcl、bucketAcl、storageClassItems can be omitted .GCS Storage related configuration reference GCS Storage field introduction .In the example above

.spec.dumplingExpress Dumpling Related configuration , Can be inoptionsField assignment Dumpling Operation parameters of , For details, see Dumpling Using document ; By default, this field can be configured without . When you don't specify Dumpling The configuration of ,optionsThe default values of the fields are as follows :options: - --threads=16 - --rows=10000more

BackupCR For detailed explanation of fields, please refer to Backup CR Field is introduced .Create good

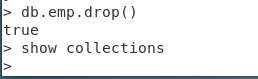

BackupCR after , You can view the backup status through the following command :kubectl get bk -n test1 -owide
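Because a failed ad-hoc backup is not retried automatically, it can help to check the outcome from a script rather than by reading the table output. The sketch below is one possible approach, not the documented procedure: it assumes the Backup CR reports its progress in `.status.conditions` with condition types named `Complete` and `Failed`, and the helper name `backup_state` is hypothetical. Verify the condition names against your TiDB Operator version before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print Complete, Failed, or InProgress for a Backup CR.
# Assumption: the CR exposes conditions of type "Complete" and "Failed"
# whose status is "True" once that state has been reached.
backup_state() {
  local name="$1" ns="$2" cond status
  for cond in Complete Failed; do
    status="$(kubectl get bk "$name" -n "$ns" \
      -o jsonpath="{.status.conditions[?(@.type==\"${cond}\")].status}")"
    if [ "$status" = "True" ]; then
      echo "$cond"
      return 0
    fi
  done
  echo "InProgress"
}
```

For the example in this document, the call would be `backup_state demo1-backup-gcs test1`. A result of Failed indicates that manual handling is needed.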

Scheduled full backup

You can set a backup policy to back up the TiDB cluster on a schedule, and set a retention policy to avoid keeping too many backups. A scheduled full backup is described by a BackupSchedule custom resource (CR) object. A full backup is triggered at each scheduled time point, and under the hood each scheduled full backup is carried out as an ad-hoc full backup. The steps to create a scheduled full backup are as follows:

Step 1: Prepare the environment for scheduled full backup

The same as preparing the environment for ad-hoc full backup.

Step 2: Back up data to GCS on a schedule

Create the BackupSchedule CR to enable scheduled full backup of the TiDB cluster and back up the data to GCS:

    kubectl apply -f backup-schedule-gcs.yaml

The content of backup-schedule-gcs.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: BackupSchedule
    metadata:
      name: demo1-backup-schedule-gcs
      namespace: test1
    spec:
      #maxBackups: 5
      #pause: true
      maxReservedTime: "3h"
      schedule: "*/2 * * * *"
      backupTemplate:
        from:
          host: ${tidb_host}
          port: ${tidb_port}
          user: ${tidb_user}
          secretName: backup-demo1-tidb-secret
        gcs:
          secretName: gcs-secret
          projectId: ${project_id}
          bucket: ${bucket}
          # prefix: ${prefix}
          # location: us-east1
          # storageClass: STANDARD_IA
          # objectAcl: private
          # bucketAcl: private
        # dumpling:
        #  options:
        #  - --threads=16
        #  - --rows=10000
        #  tableFilter:
        #  - "test.*"
        # storageClassName: local-storage
        storageSize: 10Gi

After the scheduled full backup is created, you can view its status with the following command:

    kubectl get bks -n test1 -owide

To list all the backups created by the scheduled full backup:

    kubectl get bk -l tidb.pingcap.com/backup-schedule=demo1-backup-schedule-gcs -n test1

As the above example shows, the backupSchedule configuration consists of two parts: the configuration unique to backupSchedule, and backupTemplate. backupTemplate specifies the configuration related to the cluster and the remote storage, and its fields are the same as those of spec in the Backup CR; for details, refer to Backup CR field introduction. For the configuration items unique to backupSchedule, refer to BackupSchedule CR field introduction.
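To get a feel for how the schedule and the retention window in the example interact, the following back-of-the-envelope sketch estimates how many backups may be retained at once. It assumes that every scheduled run succeeds and that retention is decided by age only (maxBackups stays commented out); the helper name `estimate_retained_backups` is hypothetical.

```shell
#!/usr/bin/env bash
# Rough upper bound: retention window divided by the schedule interval.
# Arguments: interval in minutes, retention window in hours.
estimate_retained_backups() {
  local interval_minutes="$1"
  local reserved_hours="$2"
  echo $(( reserved_hours * 60 / interval_minutes ))
}

# schedule "*/2 * * * *" (every 2 minutes) with maxReservedTime "3h"
estimate_retained_backups 2 3   # prints 90
```

A two-minute interval is convenient for a demonstration, but with full backups it can leave a large number of copies in the bucket, so a longer interval or a smaller retention window is usually worth considering.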

Note

TiDB Operator creates a PVC that is used by both ad-hoc full backup and scheduled full backup. The backup data is first stored in the PV and then uploaded to the remote storage. If you want to delete this PVC after the backup is complete, refer to Delete resources: delete the backup Pod first, and then delete the PVC.

If the backup completes and is uploaded to the remote storage successfully, TiDB Operator automatically deletes the local backup file. If the upload fails, the local backup file is retained.
