
Use BR to back up a TiDB cluster to GCS

2022-07-07 04:02:00 【Tianxiang shop】

This article describes how to back up the data of a TiDB cluster running on Kubernetes to Google Cloud Storage (GCS).

The backup method described here is implemented with the Custom Resource Definitions (CRDs) of TiDB Operator. Under the hood, it uses BR to read the cluster data and then uploads the data to remote GCS storage. BR, short for Backup & Restore, is a command-line tool for distributed backup and restore of TiDB cluster data.

Usage scenarios

If you have the following backup requirements, consider using BR to back up TiDB cluster data to GCS, either as an ad-hoc backup or as a scheduled full backup:

- The data volume is large (more than 1 TB) and the backup needs to be fast.

- You need to back up the data directly as SST files (key-value pairs).

For other backup requirements, see the introduction to backup and restore to choose a suitable backup method.

Note

- BR supports only TiDB v3.1 and later.

- Data backed up with BR can be restored only to TiDB, not to other databases.

Ad-hoc backup

Ad-hoc backup supports both full and incremental backups.

To perform an ad-hoc backup, create a Backup custom resource (CR) object that describes the backup. Once the Backup object is created, TiDB Operator carries out the backup automatically based on it. If an error occurs during the backup, the process is not retried automatically and must be handled manually.

This document uses the example of backing up the data of the TiDB cluster demo1, which is deployed in the Kubernetes namespace test1. The procedure is as follows.

Step 1: Prepare the ad-hoc backup environment

- Download the file backup-rbac.yaml and run the following command to create the RBAC resources required for the backup in the test1 namespace:

      kubectl apply -f backup-rbac.yaml -n test1

- Grant access to the remote storage. See GCS account authorization to authorize access to the GCS remote storage.

- If your TiDB version is earlier than v4.0.8, also complete the following steps. If your TiDB version is v4.0.8 or later, skip them.

  - Make sure that you have the SELECT and UPDATE privileges on the mysql.tidb table of the database to be backed up. These privileges are used to adjust the GC time before and after the backup.

  - Create the backup-demo1-tidb-secret secret, which stores the password of the root account used to access the TiDB cluster:

        kubectl create secret generic backup-demo1-tidb-secret --from-literal=password=<password> --namespace=test1
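As a rough illustration of the preparation steps, the sketch below wraps the commands in a dry-run helper so that they can be reviewed before anything touches the cluster. The `run` helper and the `DRY_RUN` variable are hypothetical names introduced here. The secret name `gcs-secret` comes from the manifests in this article, while the `credentials` key and the `./google-credentials.json` path are assumptions that should be checked against the GCS account authorization guide.

```shell
#!/bin/sh
# Dry-run sketch of Step 1. Commands are printed, not executed,
# unless DRY_RUN=0 is exported beforehand.
NAMESPACE="test1"
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: echo the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# RBAC resources needed by the backup job.
run kubectl apply -f backup-rbac.yaml -n "$NAMESPACE"

# GCS credentials secret referenced by spec.gcs.secretName.
# Assumption: the service account key file is ./google-credentials.json
# and the expected key inside the secret is "credentials".
run kubectl create secret generic gcs-secret \
  --from-file=credentials=./google-credentials.json -n "$NAMESPACE"
```

Running the script as is only prints the two commands prefixed with `+`. Setting `DRY_RUN=0` executes them against the current kubectl context.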

Step 2: Back up data to GCS

Create the Backup CR to back up the data to GCS:

    kubectl apply -f backup-gcs.yaml

The content of backup-gcs.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Backup
    metadata:
      name: demo1-backup-gcs
      namespace: test1
    spec:
      # backupType: full
      # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      from:
        host: ${tidb-host}
        port: ${tidb-port}
        user: ${tidb-user}
        secretName: backup-demo1-tidb-secret
      br:
        cluster: demo1
        clusterNamespace: test1
        # logLevel: info
        # statusAddr: ${status-addr}
        # concurrency: 4
        # rateLimit: 0
        # checksum: true
        # sendCredToTikv: true
        # options:
        # - --lastbackupts=420134118382108673
      gcs:
        projectId: ${project_id}
        secretName: gcs-secret
        bucket: ${bucket}
        prefix: ${prefix}
        # location: us-east1
        # storageClass: STANDARD_IA
        # objectAcl: private

When configuring backup-gcs.yaml, note the following:

- Since TiDB Operator v1.1.6, to perform an incremental backup you only need to specify the timestamp of the last backup with --lastbackupts in spec.br.options. For the restrictions on incremental backups, see Use BR to back up and restore.

- Some parameters in .spec.br are optional, such as logLevel and statusAddr. For a full description of the .spec.br fields, see the BR field introduction.

- Some parameters in spec.gcs are optional, such as location, objectAcl, and storageClass. For the GCS storage configuration, see the GCS storage field introduction.

- If your TiDB version is v4.0.8 or later, BR adjusts the tikv_gc_life_time parameter automatically, so you do not need to configure spec.tikvGCLifeTime or spec.from.

- For more details on the Backup CR fields, see the Backup CR field introduction.
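The value passed to --lastbackupts is a TiDB timestamp (TSO), not a Unix time, which makes it hard to tell at a glance which backup it refers to. The sketch below decodes the example value from the manifest, assuming the usual TSO layout in which the low 18 bits hold a logical counter and the remaining high bits hold the physical time in milliseconds. The variable names are illustrative only.

```shell
#!/bin/sh
# TSO taken from the commented-out --lastbackupts option in backup-gcs.yaml.
tso=420134118382108673

# Assumed layout: high bits = physical time in ms, low 18 bits = logical counter.
physical_ms=$(( tso >> 18 ))
logical=$(( tso & ((1 << 18) - 1) ))
physical_s=$(( physical_ms / 1000 ))

echo "physical_ms=$physical_ms logical=$logical"

# GNU date syntax; on BSD/macOS use: date -u -r "$physical_s"
date -u -d "@$physical_s" '+%Y-%m-%d %H:%M:%S UTC' 2>/dev/null \
  || echo "epoch seconds: $physical_s"
```

For the example value this prints `physical_ms=1602684472588 logical=1`, which corresponds to 14 October 2020 (UTC). A quick check like this helps confirm that the timestamp really belongs to the previous backup before starting an incremental one.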

After the Backup CR is created, TiDB Operator starts the backup automatically based on it. You can check the backup status with the following command:

    kubectl get bk -n test1 -owide

Backup example

Back up all cluster data

Backing up data from a single database

Back up the data of a single table

Use the table library filtering function to back up the data of multiple tables

Scheduled full backup

You can set a backup policy to back up the TiDB cluster on a schedule, together with a retention policy that prevents too many backups from accumulating. A scheduled full backup is described by a custom BackupSchedule CR object. A full backup is triggered at each scheduled time, and under the hood the scheduled full backup is implemented through ad-hoc full backup. The steps to create a scheduled full backup are as follows:

Step 1: Prepare the scheduled full backup environment

Same as preparing the ad-hoc backup environment.

Step 2: Back up data to GCS on a schedule

Create the BackupSchedule CR to enable scheduled full backup of the TiDB cluster and back up the data to GCS:

    kubectl apply -f backup-schedule-gcs.yaml

The content of backup-schedule-gcs.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: BackupSchedule
    metadata:
      name: demo1-backup-schedule-gcs
      namespace: test1
    spec:
      #maxBackups: 5
      #pause: true
      maxReservedTime: "3h"
      schedule: "*/2 * * * *"
      backupTemplate:
        # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
        from:
          host: ${tidb_host}
          port: ${tidb_port}
          user: ${tidb_user}
          secretName: backup-demo1-tidb-secret
        br:
          cluster: demo1
          clusterNamespace: test1
          # logLevel: info
          # statusAddr: ${status-addr}
          # concurrency: 4
          # rateLimit: 0
          # checksum: true
          # sendCredToTikv: true
        gcs:
          secretName: gcs-secret
          projectId: ${project_id}
          bucket: ${bucket}
          prefix: ${prefix}
          # location: us-east1
          # storageClass: STANDARD_IA
          # objectAcl: private

As the backup-schedule-gcs.yaml example shows, the backupSchedule configuration consists of two parts: the configuration unique to backupSchedule, and backupTemplate.

- For details on the configuration items unique to backupSchedule, see the BackupSchedule CR field introduction.

- backupTemplate specifies the configuration related to the cluster and the remote storage. Its fields are the same as those of spec in the Backup CR; see the Backup CR field introduction.
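To get a feel for what the example values of schedule and maxReservedTime imply, the arithmetic below estimates how many backups are started per day and roughly how many exist at any moment. It assumes that a backup is removed once it is older than maxReservedTime and that every run finishes within its interval. The variable names are illustrative only.

```shell
#!/bin/sh
# Values read off backup-schedule-gcs.yaml.
interval_min=2               # schedule: "*/2 * * * *" -> every 2 minutes
reserved_min=$(( 3 * 60 ))   # maxReservedTime: "3h"   -> 180 minutes

# Backups started per day and approximate number kept at steady state.
per_day=$(( 24 * 60 / interval_min ))
retained=$(( reserved_min / interval_min ))

echo "backups started per day: $per_day"
echo "backups retained (approx.): $retained"
```

With the example values this gives 720 full backups per day and about 90 retained at a time, which suggests the two-minute schedule is meant for demonstration rather than for a cluster holding a large data volume.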

After the scheduled full backup is created, check its status with the following command:

    kubectl get bks -n test1 -owide

To list all the backups created by the scheduled full backup, run:

    kubectl get bk -l tidb.pingcap.com/backup-schedule=demo1-backup-schedule-gcs -n test1

边栏推荐

- AVL树插入操作与验证操作的简单实现

- 使用Thread类和Runnable接口实现多线程的区别

- Unity3D在一建筑GL材料可以改变颜色和显示样本

- [leetcode] 450 and 98 (deletion and verification of binary search tree)

- 【mysql】mysql中行排序

- Ggplot facet detail adjustment summary

- GPT-3当一作自己研究自己,已投稿,在线蹲一个同行评议

- SQL injection -day15

- idea gradle lombok 报错集锦

- UltraEdit-32 温馨提示:右协会,取消 bak文件[通俗易懂]

猜你喜欢

【编码字体系列】OpenDyslexic字体

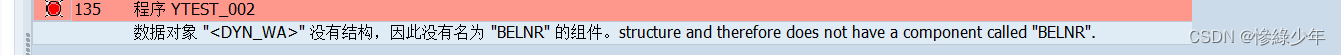

ABAP 动态内表分组循环

2022中青杯C题城市交通思路分析

Antd Comment 递归循环评论

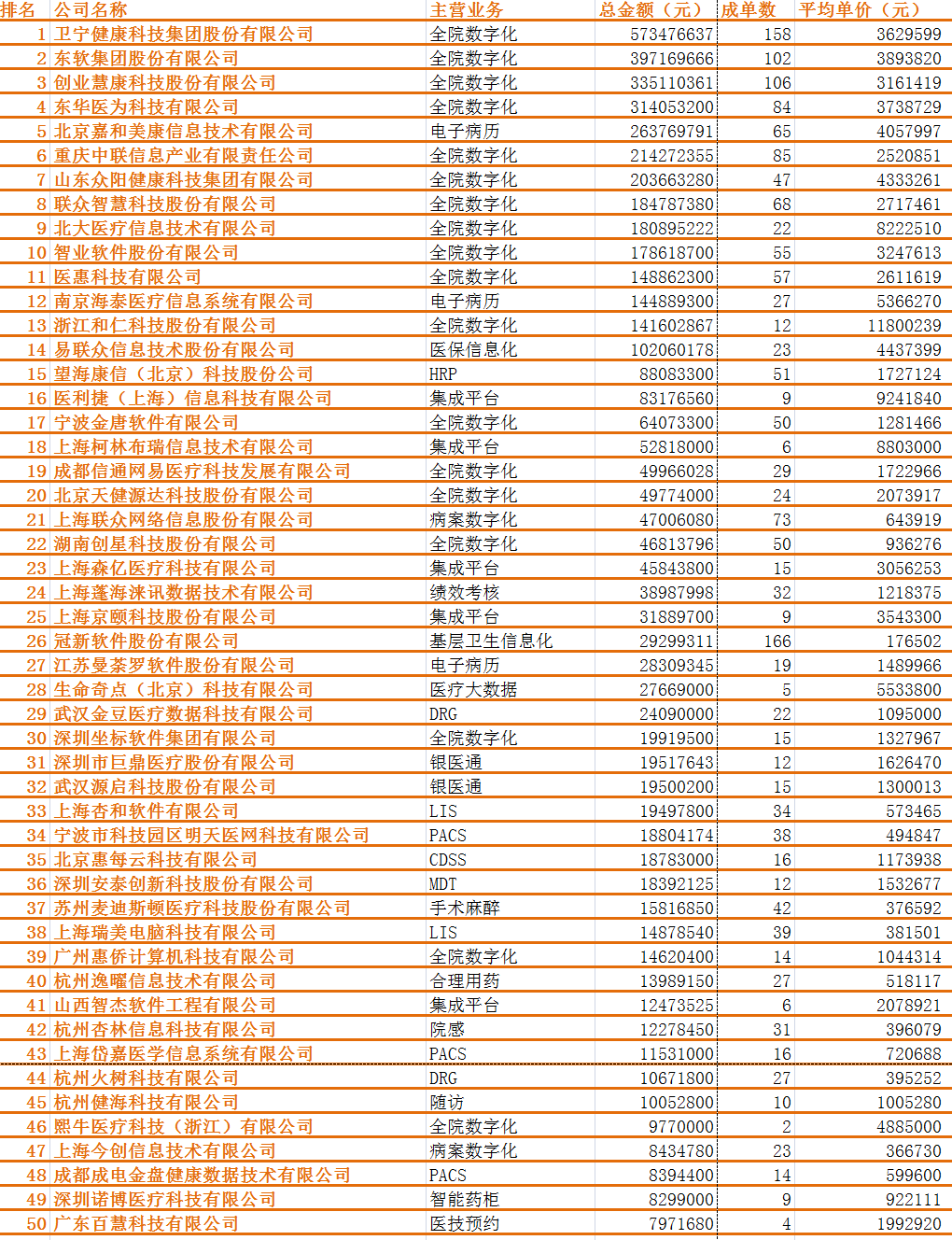

Top 50 hit industry in the first half of 2022

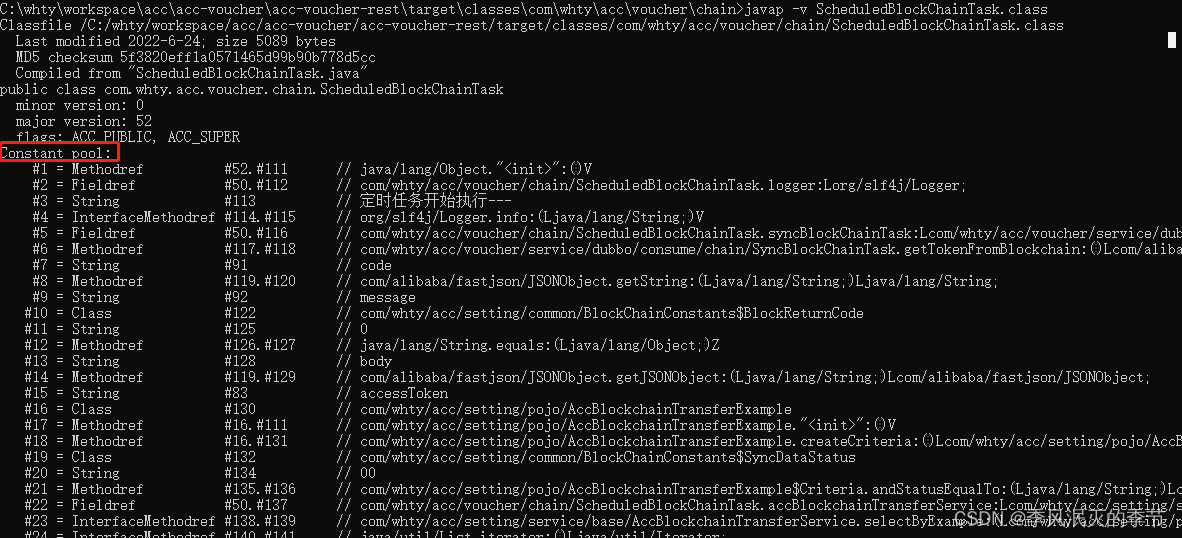

Class常量池与运行时常量池

Implementation steps of docker deploying mysql8

Codeworks 5 questions per day (1700 average) - day 7

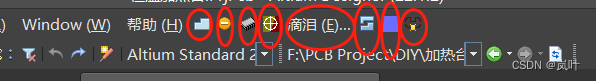

Create commonly used shortcut icons at the top of the ad interface (menu bar)

Confirm the future development route! Digital economy, digital transformation, data This meeting is very important

随机推荐

运算放大器应用汇总1

HW notes (II)

使用 BR 恢复 GCS 上的备份数据

QT thread and other 01 concepts

Implementation steps of docker deploying mysql8

. Net interface can be implemented by default

接口数据安全保证的10种方式

GPT-3当一作自己研究自己,已投稿,在线蹲一个同行评议

Do you choose pandas or SQL for the top 1 of data analysis in your mind?

C task expansion method

[dpdk] dpdk sample source code analysis III: dpdk-l3fwd_ 001

QT 打开文件 使用 QFileDialog 获取文件名称、内容等

Tencent cloud native database tdsql-c was selected into the cloud native product catalog of the Academy of communications and communications

概率论公式

The true face of function pointer in single chip microcomputer and the operation of callback function

Machine learning notes - bird species classification using machine learning

使用Thread类和Runnable接口实现多线程的区别

Delete data in SQL

Construction of Hisilicon universal platform: color space conversion YUV2RGB

再AD 的 界面顶部(菜单栏)创建常用的快捷图标