Logistic regression: the most basic neural network

2022-07-05 07:34:00 【sukhoi27smk】

1. What is logistic regression?

The picture below is a schematic, provided by Andrew Ng, of the structure of a logistic regression algorithm used to recognize cat pictures:

On the left, x0 to x12287 are the inputs, which we call features. They are usually written as a column vector x(i), where i denotes the i-th training sample; when only one sample is discussed, this superscript is omitted for now to avoid confusion -_-|||. In image recognition, the features are usually the pixel values of the picture: putting all the pixel values into one sequence gives the input features. Each feature has its own weight, shown on the lines in the figure as w0 to w12287. We usually also combine all the weights into one column vector W.
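As a small illustration of how pixel values become a feature vector, here is a sketch in NumPy. The 64x64 RGB image size is an assumption (it happens to give 64 * 64 * 3 = 12288 values, matching x0 to x12287), and the random pixels and the scaling to [0, 1] are illustrative choices, not something the text prescribes.

```python
import numpy as np

# Hypothetical 64x64 RGB image with random pixel values in [0, 255].
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3))

# Flatten all pixel values into one column vector of features x0..x12287.
# Dividing by 255 scales them to [0, 1] (a common convention, assumed here).
x = image.reshape(-1, 1) / 255.0

# One weight per feature, stored as a column vector W of the same shape.
W = np.zeros((x.shape[0], 1))

print(x.shape, W.shape)
```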

The circle in the middle can be called a neuron. It receives the inputs from the left, multiplies each by its corresponding weight, and adds an offset term b (a real number), so the total input it finally receives is:

$$
z = W^{T} x + b = w_0 x_0 + w_1 x_1 + \dots + w_{12287} x_{12287} + b
$$

But this is not the final output. Just like a biological neuron, there is an activation function that processes the input and decides whether to output, or how much to output. The activation function of logistic regression is the sigmoid function. Its value lies between 0 and 1; its slope is relatively large in the middle, and very small on both sides, tending to zero far from the origin. It looks like this (remember the function's expression):

$$
\sigma(z) = \frac{1}{1 + e^{-z}}
$$

We use y' to denote the output of this neuron, and σ() to denote the sigmoid function, so we have:

$$
y' = \sigma(W^{T} x + b)
$$
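The computation done by this single neuron can be sketched in a few lines of NumPy, assuming the usual form z = W^T x + b followed by σ(z) = 1 / (1 + e^(-z)). The function names and the tiny 3-feature example are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(W, b, x):
    """Output y' of the single neuron for one sample x (a column vector)."""
    z = (W.T @ x).item() + b   # weighted sum of the inputs plus the offset b
    return sigmoid(z)

# Tiny made-up example with 3 features instead of 12288.
W = np.array([[0.5], [-0.25], [0.1]])
b = 0.2
x = np.array([[1.0], [2.0], [3.0]])
print(forward(W, b, x))
```

Here z works out to 0.5, so the printed value is sigmoid(0.5), a number a little above 0.6.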

This can be seen as the prediction our small model makes from its input. In the case of the figure at the start, it predicts whether the picture is a cat from the pixels of the picture. Correspondingly, every sample x has its own true label y: y = 1 means the picture is a cat, and y = 0 means it is not a cat. We want the model's output y' to be as close to the true label y as possible, so that the model can be used to predict whether a new picture is a cat. Our task, therefore, is to find a set of W and b such that the model predicts y correctly from the given x. Here we can say that as long as the computed y' is greater than 0.5, y' is closer to 1, so we predict "it's a cat"; otherwise we predict "it's not a cat".
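The 0.5 decision rule described above can be written as a tiny helper. The name `predict_label` is hypothetical, and the sample outputs are made-up numbers.

```python
def predict_label(y_hat, threshold=0.5):
    """Turn the model output y' into a label: 1 ("cat") or 0 ("not a cat")."""
    return 1 if y_hat > threshold else 0

for y_hat in (0.92, 0.50, 0.13):
    label = predict_label(y_hat)
    print(y_hat, "cat" if label == 1 else "not a cat")
```

Note that an output of exactly 0.5 falls on the "not a cat" side here, because the rule in the text is "greater than 0.5".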

That is the basic structure of logistic regression.

2. How to learn W and b

As mentioned earlier, we need to learn W and b such that the model's predicted value y' is as close as possible to the true label y, that is, the gap between y' and y is as small as possible. So we can define a loss function to measure the gap between y' and y:

$$
L(y', y) = -\left[ y \log y' + (1 - y) \log (1 - y') \right]
$$

This is in fact the cross-entropy loss function. Cross entropy measures the difference between two distributions; here, it measures the gap between our predicted distribution and the true distribution.

Why is this formula suitable as a loss function? Let's see:

When y=1, L = -log(y'). To make L as small as possible, y' must be as large as possible, so y' = 1;

When y=0, L = -log(1 - y'). To make L as small as possible, y' must be as small as possible, so y' = 0.

So the formula behaves exactly as we expect a loss function to behave, which is why it is suitable as one.
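To check this behaviour numerically, here is a minimal sketch of the cross-entropy loss for a single sample, assuming the standard form L = -[y log y' + (1 - y) log(1 - y')]. The `eps` clipping is an added safeguard against log(0), not part of the formula itself.

```python
import numpy as np

def cross_entropy(y_hat, y, eps=1e-12):
    """Cross-entropy loss for one sample; eps avoids log(0) (an added safeguard)."""
    y_hat = np.clip(y_hat, eps, 1.0 - eps)
    return float(-(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat)))

# y = 1: the loss shrinks as y' approaches 1.
for y_hat in (0.1, 0.5, 0.9, 0.99):
    print("y=1, y'=%.2f, L=%.4f" % (y_hat, cross_entropy(y_hat, 1)))

# y = 0: the loss shrinks as y' approaches 0.
for y_hat in (0.9, 0.5, 0.1, 0.01):
    print("y=0, y'=%.2f, L=%.4f" % (y_hat, cross_entropy(y_hat, 0)))
```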

We know that x represents one set of inputs, that is, the features of one sample. But when we train a model there are many training samples, that is, many x's: x(1), x(2), ..., x(m), m samples in total (m column vectors). They can be written together as a matrix X:

$$
X = \left[ x^{(1)}, x^{(2)}, \dots, x^{(m)} \right]
$$

Correspondingly, we also have m labels:

$$
Y = \left[ y^{(1)}, y^{(2)}, \dots, y^{(m)} \right]
$$

There are also m predictions y' computed by our model:

$$
Y' = \left[ y'^{(1)}, y'^{(2)}, \dots, y'^{(m)} \right]
$$

The loss function we wrote earlier computes the loss of only one sample. But we need to consider the loss over all training samples, so the total loss, averaged over the m samples, can be computed like this:

$$
J(W, b) = \frac{1}{m} \sum_{i=1}^{m} L\left( y'^{(i)}, y^{(i)} \right)
$$
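With the samples stacked as columns of X, the loss over all m samples can be computed in one vectorized pass. The sketch below assumes the cost is the average of the per-sample cross-entropy losses, that X has shape (n, m), and that Y has shape (1, m); the function name `cost` and the toy data are made up.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(W, b, X, Y, eps=1e-12):
    """Average cross-entropy over m samples.

    X has shape (n, m): one column per sample.
    Y has shape (1, m): one label per sample.
    """
    m = X.shape[1]
    Y_hat = sigmoid(W.T @ X + b)              # shape (1, m): all m predictions at once
    Y_hat = np.clip(Y_hat, eps, 1.0 - eps)    # avoid log(0); an added safeguard
    losses = -(Y * np.log(Y_hat) + (1 - Y) * np.log(1 - Y_hat))
    return float(np.sum(losses) / m)

# Made-up data: n = 2 features, m = 4 samples.
X = np.array([[0.0, 1.0, 2.0, 3.0],
              [1.0, 0.0, 1.0, 0.0]])
Y = np.array([[0, 0, 1, 1]])
W = np.zeros((2, 1))
b = 0.0
print(cost(W, b, X, Y))   # with W = 0 and b = 0, every prediction is 0.5
```

With all-zero parameters every prediction is 0.5, so the cost equals log(2) regardless of the labels, which makes a handy sanity check.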

With the total loss function, our learning task can be expressed in one sentence:

"Find the W and b that minimize the loss function."

Minimizing it... easier said than done. Fortunately we have computers, which can do a large number of repeated operations for us, so in neural networks we usually use gradient descent:

Put simply, the method works like this: first pick a random point on the curve, then compute the slope at that point (also called the gradient), then take one step downhill along the gradient. After reaching the new point, repeat the steps above until we reach the lowest point (or some stopping condition we are satisfied with). For example, gradient descent on w means repeating the following step (each repetition is called an iteration):

$$
w := w - \alpha \frac{dJ}{dw}
$$

Here := means "update with the value on the right", α is the learning rate, and dJ/dw is the partial derivative of J with respect to w.
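To see the update rule w := w - α dJ/dw in action, here is a sketch on a deliberately simple one-dimensional function. The function J(w) = (w - 3)^2, the starting point, and the learning rate are all made up for illustration; they are not the logistic regression loss.

```python
def J(w):
    """A toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2

def dJ_dw(w):
    """Derivative (slope) of the toy loss at w."""
    return 2.0 * (w - 3.0)

alpha = 0.1   # learning rate
w = 0.0       # starting point
for i in range(50):               # 50 iterations
    w = w - alpha * dJ_dw(w)      # one step downhill along the gradient

print(w, J(w))
```

Each step shrinks the distance to the minimum by a constant factor, so after 50 iterations w is very close to 3. A learning rate that is too large would instead overshoot and diverge on this function.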

Back to our logistic regression problem: we initialize a set of W and b, choose a learning rate, and specify the number of iterations (that is, how many steps downhill you want the point to take). Then, in each iteration, we compute the gradients of w and b and update w and b. The final W and b are the W and b we have learned. Putting them into our model gives the learned model, which can then be used to predict!
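For reference, when the output is a sigmoid, the loss is cross entropy, and the cost J is the average over the m samples (with X holding one sample per column), the gradients needed in each iteration have a simple closed form. This is the standard result for this setup rather than a formula quoted from the original post; Y' denotes the row vector of the m predictions:

$$
\frac{\partial L}{\partial z} = y' - y, \qquad
\frac{\partial J}{\partial W} = \frac{1}{m} X (Y' - Y)^{T}, \qquad
\frac{\partial J}{\partial b} = \frac{1}{m} \sum_{i=1}^{m} \left( y'^{(i)} - y^{(i)} \right)
$$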

Note that the loss we use here is the loss over all training samples. In practice, updating with the loss of all samples is too slow, while updating with a single sample gives a very large error. So we often choose batches of a certain size, compute the loss within one batch, and then update the parameters.
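One way to split the training set into batches is sketched below. The helper name `iterate_minibatches` is hypothetical, and shuffling the samples before slicing is a common practice assumed here rather than something the text specifies.

```python
import numpy as np

def iterate_minibatches(X, Y, batch_size, rng):
    """Yield (X_batch, Y_batch) pairs covering all m samples once, in random order."""
    m = X.shape[1]
    order = rng.permutation(m)                    # shuffled sample indices
    for start in range(0, m, batch_size):
        idx = order[start:start + batch_size]     # the last batch may be smaller
        yield X[:, idx], Y[:, idx]

rng = np.random.default_rng(0)
X = rng.normal(size=(3, 10))                      # made-up data: 3 features, 10 samples
Y = (rng.random(size=(1, 10)) > 0.5).astype(float)

batches = list(iterate_minibatches(X, Y, batch_size=4, rng=rng))
print([xb.shape for xb, _ in batches])
```

In a training loop, the loss and gradients would be computed on each (X_batch, Y_batch) pair in turn, with one parameter update per batch.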

To sum up :

Logistic regression model: y' = σ(W^T x + b). Remember that the activation function used is the sigmoid function.

Loss function: L(y', y) = -[y log y' + (1 - y) log(1 - y')]. It measures the difference between the predicted value and the true value; the smaller, the better.

We usually compute the total loss of a batch of samples, then update the parameters with gradient descent.

The steps of training the model:

1. Initialize W and b.

2. Specify the learning rate and the number of iterations.

3. In every iteration, compute the gradients for the current W and b (the partial derivatives of J with respect to W and b), then update W and b.

4. When the iterations end, take the learned W and b, plug them into the model to predict, and measure the accuracy on the training set and the test set separately to evaluate the model.
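The training steps above can be put together in a short NumPy sketch. It uses full-batch gradient descent, zero initialization, and the standard gradients for a sigmoid output with cross-entropy loss; the function names, hyperparameter values, and the synthetic two-feature data set are all assumptions for illustration, not the cat-picture setup from the figure.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, Y, learning_rate=0.1, num_iterations=1000):
    """Full-batch gradient descent for logistic regression.

    X: (n, m) features, one column per sample. Y: (1, m) labels in {0, 1}.
    """
    n, m = X.shape
    W = np.zeros((n, 1))                  # step 1: initialize W and b (zeros, by choice)
    b = 0.0
    for _ in range(num_iterations):       # step 2: fixed learning rate and iteration count
        Y_hat = sigmoid(W.T @ X + b)      # predictions, shape (1, m)
        dZ = Y_hat - Y                    # derivative of the loss with respect to z
        dW = (X @ dZ.T) / m               # step 3: gradient of J with respect to W
        db = float(np.sum(dZ)) / m        #         gradient of J with respect to b
        W = W - learning_rate * dW        #         update W and b
        b = b - learning_rate * db
    return W, b

def predict(W, b, X):
    """Label 1 where the model output is greater than 0.5, else 0."""
    return (sigmoid(W.T @ X + b) > 0.5).astype(int)

def accuracy(W, b, X, Y):
    return float(np.mean(predict(W, b, X) == Y))

# Made-up, linearly separable data: the label depends on the sign of x0 + x1.
rng = np.random.default_rng(0)
m = 200
X = rng.normal(size=(2, m))
Y = (X[0:1, :] + X[1:2, :] > 0).astype(int)

X_train, Y_train = X[:, :150], Y[:, :150]
X_test, Y_test = X[:, 150:], Y[:, 150:]

W, b = train(X_train, Y_train)
# step 4: evaluate the learned W and b on the training set and the test set
print("train accuracy:", accuracy(W, b, X_train, Y_train))
print("test accuracy:", accuracy(W, b, X_test, Y_test))
```

Zero initialization works here because there is only one neuron; the learning rate and iteration count would normally be tuned for the data at hand.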

It's so clear (▰˘◡˘▰)

边栏推荐

- Chapter 2: try to implement a simple bean container

- QT small case "addition calculator"

- arcpy. SpatialJoin_ Analysis spatial connection analysis

- Altimeter data knowledge point 2

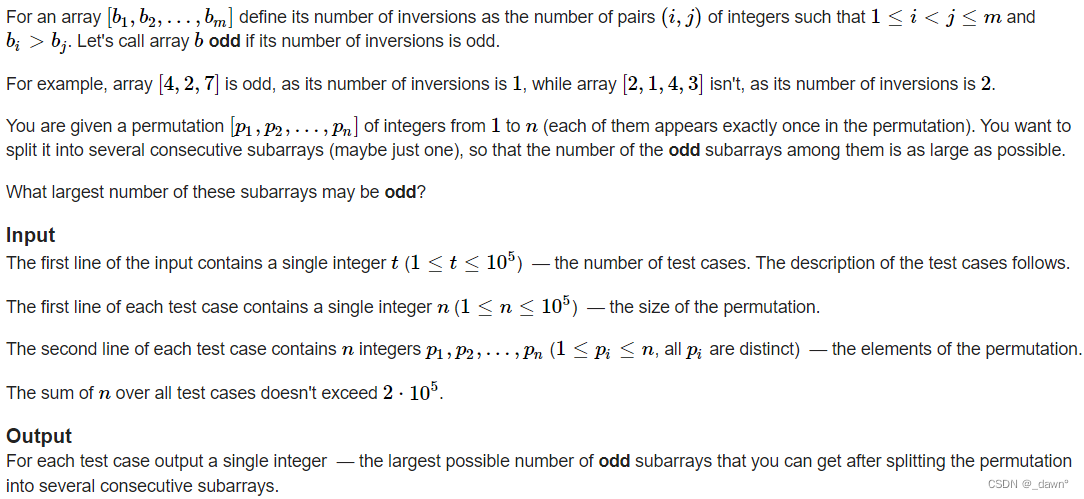

- Daily Practice:Codeforces Round #794 (Div. 2)(A~D)

- 数字孪生实际应用案例-风机篇

- [neo4j] common operations of neo4j cypher and py2neo

- Simple operation with independent keys (hey, a little fancy) (keil5)

- Apple terminal skills

- With the help of Navicat for MySQL software, the data of a database table in different or the same database link is copied to another database table

猜你喜欢

Microservice registry Nacos introduction

Today, share the wonderful and beautiful theme of idea + website address

2022年PMP项目管理考试敏捷知识点(7)

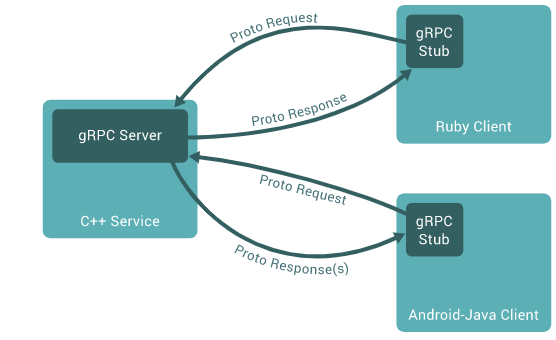

Play with grpc - go deep into concepts and principles

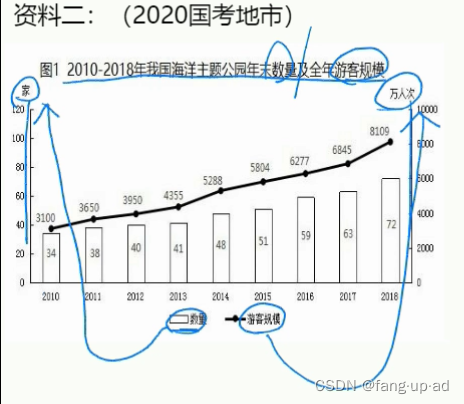

行测--资料分析--fb--高照老师

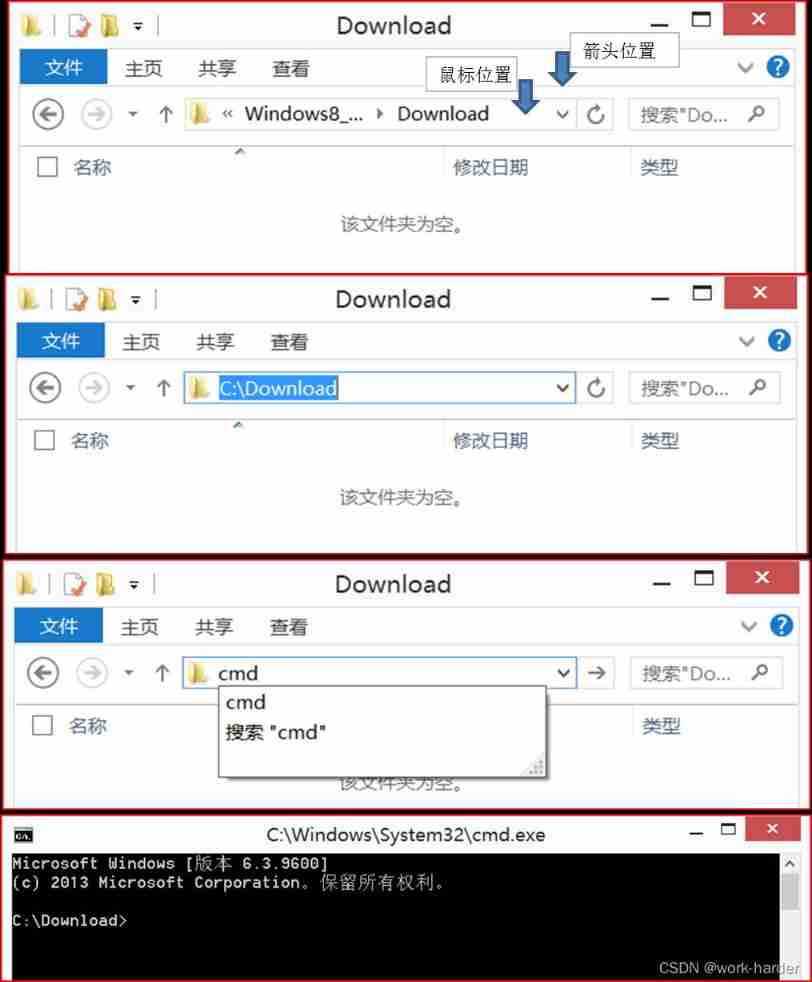

The folder directly enters CMD mode, with the same folder location

Daily Practice:Codeforces Round #794 (Div. 2)(A~D)

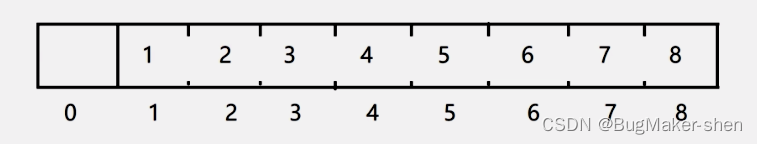

Explanation of parallel search set theory and code implementation

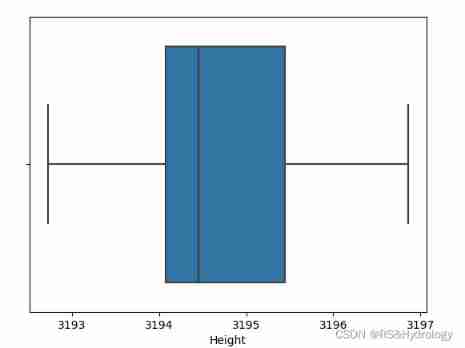

Machine learning Seaborn visualization

![[node] NVM version management tool](/img/26/f13a2451c2f177a86bcb2920936468.png)

[node] NVM version management tool

随机推荐

Process (P) runs, and idle is different from pycharm

Typecho adds Baidu collection (automatic API submission plug-in and crawler protocol)

Light up the running light, rough notes for beginners (1)

Batch convert txt to excel format

arcpy. SpatialJoin_ Analysis spatial connection analysis

Simple operation of running water lamp (keil5)

Matrix keyboard scan (keil5)

剑指 Offer 56 数组中数字出现的次数(异或)

玩转gRPC—深入概念与原理

Pit record of Chmod 2 options in deepin

Idea to view the source code of jar package and some shortcut keys (necessary for reading the source code)

Apple script

[vscode] recommended plug-ins

What if the DataGrid cannot see the table after connecting to the database

What is sodium hydroxide?

UNIX commands often used in work

Clickhouse database installation deployment and remote IP access

苏打粉是什么?

arcgis_ spatialjoin

I 用c I 实现队列