
Overfitting and Regularization

2022-07-05 05:33:00 【Li Junfeng】

Overfitting

Overfitting is a problem we often run into when training neural networks. Simply put, the model's learning capacity is so strong that it memorizes every detail of the training set, noise included. When it then meets the test set, that is, data it has never seen before, it makes obvious errors.
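A toy illustration of this effect, offered as a minimal sketch under made-up assumptions: the data are synthetic (true relation y = 2x + 1, training labels carrying alternating ±0.5 noise), and the hypothetical "memorizer" is a 1-nearest-neighbour lookup that stands in for an over-complex model, since it keeps every training point as a parameter. It is compared with a two-parameter straight line.

```python
def fit_line(xs, ys):
    """Least-squares line y = w*x + b: a model with only two parameters."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    b = my - w * mx
    return lambda x: w * x + b


def fit_memorizer(xs, ys):
    """Hypothetical over-complex model: stores every training pair and
    answers with the label of the nearest stored x (1-nearest-neighbour)."""
    table = list(zip(xs, ys))
    return lambda x: min(table, key=lambda pair: abs(pair[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model on (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic training data: y = 2x + 1 plus alternating +/-0.5 label noise
train_x = [float(i) for i in range(10)]
train_y = [2 * x + 1 + 0.5 * (-1) ** i for i, x in enumerate(train_x)]
# Unseen test inputs between the training points, with noise-free targets
test_x = [i + 0.25 for i in range(9)]
test_y = [2 * x + 1 for x in test_x]

line = fit_line(train_x, train_y)
memo = fit_memorizer(train_x, train_y)
print(f"line:      train {mse(line, train_x, train_y):.3f}  test {mse(line, test_x, test_y):.3f}")
print(f"memorizer: train {mse(memo, train_x, train_y):.3f}  test {mse(memo, test_x, test_y):.3f}")
```

The memorizer reaches zero training error but a test MSE of about 0.444, because it reproduces the noise it memorized; the line has a higher training MSE (about 0.242) yet a test MSE of only about 0.006.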

Causes

The most fundamental cause: too many parameters (the model is too complex).

Other causes:

- The test set and the training set follow different distributions

- The training set is too small

Solutions

Each of these causes suggests a countermeasure:

- Reduce model complexity, most commonly through regularization.

- Enlarge the training set (collect more data or use data augmentation).
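One common way to enlarge a training set without collecting new data is data augmentation. The sketch below is only an illustration: it assumes images are stored as 2D lists of pixel values and that a horizontal flip does not change the label (true for many natural-image tasks, but not, for example, for text or digit recognition). The names `hflip` and `augment` are hypothetical.

```python
def hflip(image):
    """Mirror an image left-to-right; the image is a list of pixel rows."""
    return [list(reversed(row)) for row in image]


def augment(dataset):
    """Return every (image, label) pair plus a horizontally flipped copy.

    Assumes flipping preserves the label, so the training set doubles in size.
    """
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((hflip(image), label))
    return augmented


data = [([[1, 2, 3], [4, 5, 6]], "cat")]
print(augment(data))  # [([[1, 2, 3], [4, 5, 6]], 'cat'), ([[3, 2, 1], [6, 5, 4]], 'cat')]
```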

Norms (Minkowski distance)

Definition

A norm is a function that assigns a length, or size, to every vector in a vector space.

The zero vector, and only the zero vector, has length 0.

$$\lVert x \rVert_p = \left(\sum_{i=1}^{n} \lvert x_i \rvert^p\right)^{\frac{1}{p}}, \quad p \ge 1$$

The Minkowski distance between two vectors $x$ and $y$ is then $\lVert x - y \rVert_p$.
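A direct transcription of the formula, as a minimal sketch that assumes vectors are plain Python sequences of numbers; the function names are illustrative, not from any library.

```python
def p_norm(x, p):
    """Minkowski p-norm (sum |x_i|^p)^(1/p); only a true norm for p >= 1."""
    if p < 1:
        raise ValueError("p must be >= 1 for the formula to define a norm")
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


def minkowski_distance(x, y, p):
    """Minkowski distance: the p-norm of the difference x - y."""
    return p_norm([a - b for a, b in zip(x, y)], p)


print(p_norm([3, 4], 2))                      # 5.0
print(p_norm([1, -2, 3], 1))                  # 6.0
print(minkowski_distance([0, 0], [3, 4], 2))  # 5.0
```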

Properties of norms

- Non-negativity: $\lVert x\rVert \ge 0$

- Homogeneity: $\lVert cx\rVert = \lvert c\rvert \lVert x\rVert$

- Triangle inequality: $\lVert x + y\rVert \le \lVert x\rVert + \lVert y\rVert$
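The three properties can be spot-checked numerically. This sketch tests them on a few hand-picked sample vectors (an assumption; a passing check on samples is not a proof) and also shows why the formula needs $p \ge 1$: with $p = 0.5$ the triangle inequality fails, because $\lVert(1,1)\rVert_{0.5} = 4 > \lVert(1,0)\rVert_{0.5} + \lVert(0,1)\rVert_{0.5} = 2$.

```python
import math


def p_norm(x, p):
    """The p-norm formula; deliberately allows p < 1 to show where it breaks."""
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


def check_norm_properties(p, x, y, c):
    """Return (non-negative, homogeneous, triangle) for the p-norm on samples x, y, c."""
    nonneg = p_norm(x, p) >= 0
    homog = math.isclose(p_norm([c * v for v in x], p), abs(c) * p_norm(x, p))
    triangle = p_norm([a + b for a, b in zip(x, y)], p) <= p_norm(x, p) + p_norm(y, p) + 1e-12
    return nonneg, homog, triangle


for p in (1, 2, 3):
    print(p, check_norm_properties(p, [1.0, -2.0, 3.0], [0.5, 4.0, -1.0], -3.0))

# p = 0.5 is not a norm: the triangle inequality is violated
print(0.5, check_norm_properties(0.5, [1.0, 0.0], [0.0, 1.0], 2.0))  # 0.5 (True, True, False)
```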

Common norms

- $L_0$ "norm": the number of non-zero elements (not a true norm, since it is not homogeneous)

- $L_1$ norm: the sum of absolute values

- $L_2$ norm: the Euclidean length (Euclidean distance from the origin)

- $L_\infty$ norm: the largest absolute value among the elements
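The four quantities above, computed for one small vector; a minimal sketch using only the standard library, with illustrative function names.

```python
import math


def l0(x):
    """Number of non-zero entries (not a true norm)."""
    return sum(1 for v in x if v != 0)


def l1(x):
    """Sum of absolute values."""
    return sum(abs(v) for v in x)


def l2(x):
    """Euclidean length."""
    return math.sqrt(sum(v * v for v in x))


def linf(x):
    """Largest absolute value."""
    return max(abs(v) for v in x)


x = [0.0, 3.0, 0.0, -4.0]
print(l0(x), l1(x), l2(x), linf(x))  # 2 7.0 5.0 4.0
```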

Regularization

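Regularization adds a norm of the model's parameters to the objective function as a penalty term, that is, it minimizes $L(\theta) + \lambda \lVert \theta \rVert$, where $\lambda > 0$ sets the strength of the penalty. The larger a parameter, the larger the norm and therefore the penalty, so minimizing the objective pushes many parameters toward smaller values.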
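The shrinking effect is easy to see in the simplest case. This sketch assumes a one-weight linear model $y \approx wx$ with no bias, squared-error loss and an $L_2$ penalty: minimizing $\sum_i (y_i - w x_i)^2 + \lambda w^2$ and setting the derivative to zero gives $w^* = \sum_i x_i y_i / (\sum_i x_i^2 + \lambda)$. The data are made-up toy values.

```python
def ridge_weight(xs, ys, lam):
    """Minimiser of sum((y - w*x)**2) + lam * w**2 for a single weight w.

    Derivative: -2*sum(x*(y - w*x)) + 2*lam*w = 0  =>  w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]  # toy inputs
ys = [2.1, 3.9, 6.2, 7.8]  # toy targets, roughly y = 2x
for lam in (0.0, 1.0, 10.0, 100.0):
    print(lam, round(ridge_weight(xs, ys, lam), 3))
```

As $\lambda$ grows the weight moves steadily toward zero, which is exactly the "larger penalty, smaller parameters" behaviour described above.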

The smaller a parameter, the smaller its role in the network, i.e., the less it affects the final output. Shrinking parameters therefore makes the model simpler and improves its ability to generalize.

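Regularization also embodies the idea of "survival of the fittest": although many parameters are useful to the model, in the end only the important ones survive (those with larger values and a large influence on the result), while most are suppressed (small values, little influence on the result). The elimination is most literal with the $L_1$ penalty, which drives many parameters exactly to zero; the $L_2$ penalty shrinks parameters but usually does not make them exactly zero.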
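The difference between the two penalties can be shown in a simplified setting. This sketch assumes each weight is regularized independently around a hypothetical unregularized value $z$, i.e. it minimizes $\tfrac12 (w - z)^2 + \lambda \lvert w \rvert$ for $L_1$ and $\tfrac12 (w - z)^2 + \tfrac12 \lambda w^2$ for $L_2$. Both have closed forms: soft-thresholding for $L_1$ and uniform scaling $z / (1 + \lambda)$ for $L_2$.

```python
def l1_shrink(z, lam):
    """Per-weight minimiser of 0.5*(w - z)**2 + lam*|w| (soft-thresholding)."""
    out = []
    for v in z:
        if abs(v) <= lam:
            out.append(0.0)       # small weights are eliminated exactly
        elif v > 0:
            out.append(v - lam)   # large weights survive, shrunk by lam
        else:
            out.append(v + lam)
    return out


def l2_shrink(z, lam):
    """Per-weight minimiser of 0.5*(w - z)**2 + 0.5*lam*w**2: every weight is scaled down."""
    return [v / (1.0 + lam) for v in z]


z = [3.0, 0.2, -0.1, -2.0]  # hypothetical unregularized weights
print(l1_shrink(z, 0.5))    # [2.5, 0.0, 0.0, -1.5]
print(l2_shrink(z, 0.5))    # all four shrink, none becomes exactly 0
```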

边栏推荐

- Palindrome (csp-s-2021-palin) solution

- 2017 USP Try-outs C. Coprimes

- 【Jailhouse 文章】Jailhouse Hypervisor

- Improvement of pointnet++

- Summary of Haut OJ 2021 freshman week

- Graduation project of game mall

- CF1637E Best Pair

- Haut OJ 1352: string of choice

- [to be continued] I believe that everyone has the right to choose their own way of life - written in front of the art column

- kubeadm系列-01-preflight究竟有多少check

猜你喜欢

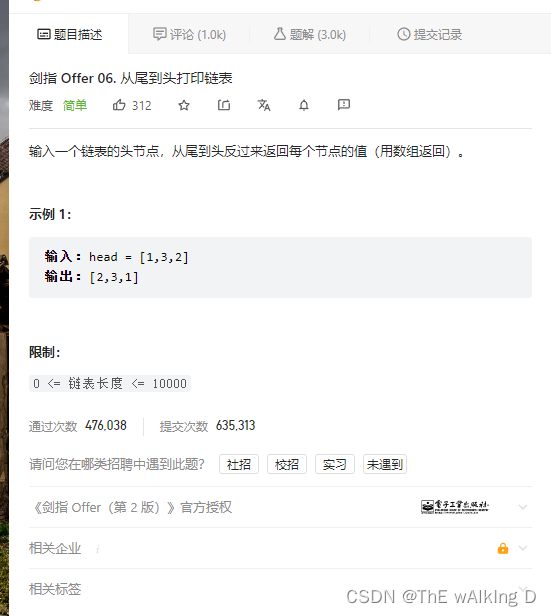

剑指 Offer 06.从头到尾打印链表

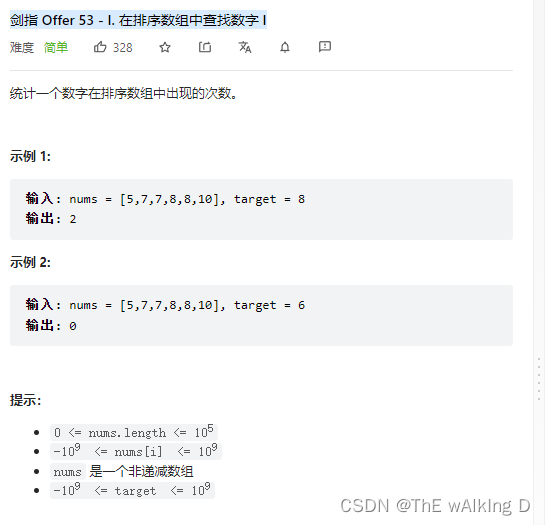

剑指 Offer 53 - I. 在排序数组中查找数字 I

The present is a gift from heaven -- a film review of the journey of the soul

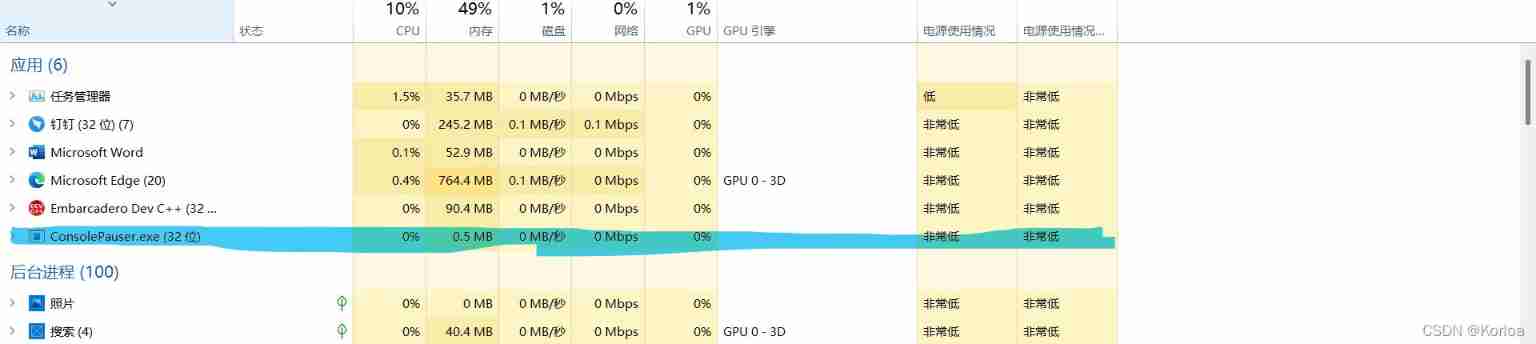

A misunderstanding about the console window

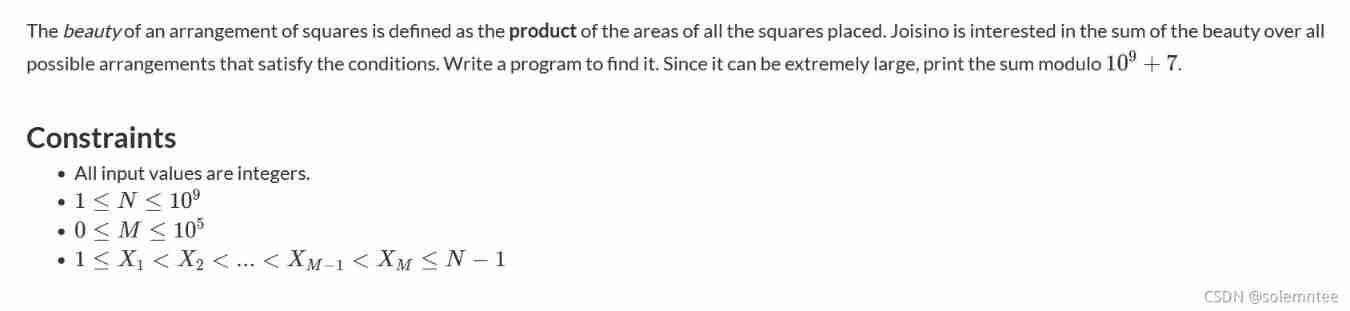

AtCoder Grand Contest 013 E - Placing Squares

Acwing 4300. Two operations

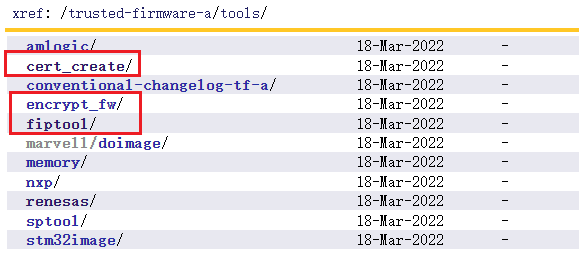

Introduction to tools in TF-A

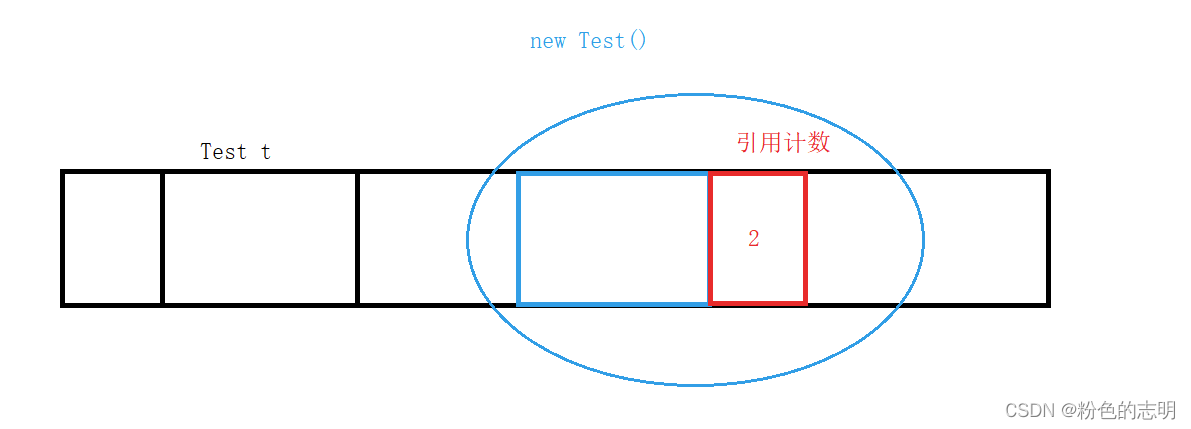

Talking about JVM (frequent interview)

Corridor and bridge distribution (csp-s-2021-t1) popular problem solution

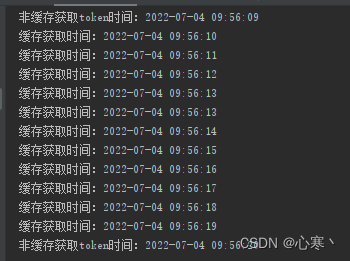

利用HashMap实现简单缓存

随机推荐

sync. Interpretation of mutex source code

Codeforces Round #732 (Div. 2) D. AquaMoon and Chess

Acwing 4301. Truncated sequence

Cluster script of data warehouse project

Detailed explanation of expression (csp-j 2021 expr) topic

Add level control and logger level control of Solon logging plug-in

Alu logic operation unit

卷积神经网络简介

Developing desktop applications with electron

Improvement of pointnet++

The present is a gift from heaven -- a film review of the journey of the soul

[to be continued] [depth first search] 547 Number of provinces

搭建完数据库和网站后.打开app测试时候显示服务器正在维护.

Remote upgrade afraid of cutting beard? Explain FOTA safety upgrade in detail

浅谈JVM(面试常考)

Reflection summary of Haut OJ freshmen on Wednesday

Kubedm series-00-overview

[to be continued] I believe that everyone has the right to choose their own way of life - written in front of the art column

【实战技能】非技术背景经理的技术管理

Haut OJ 1243: simple mathematical problems