
Learning notes on "Dive into Deep Learning" (《动手学深度学习》)

2022-07-05 05:05:00 【fb_ help】


Data manipulation

Automatic differentiation

Marking a variable for differentiation

x = torch.ones(2, 2, requires_grad=True)

Computing the gradient

out.backward()

Here the dependent variable (out) must be a scalar. If it is a tensor, you need to pass backward() a weight tensor with the same shape as the dependent variable. The output is then reduced to a scalar by a weighted sum over all of its elements, and backward() can be called on that.

The reason is easy to see: the elements of the dependent variable have no relationship to one another; they are simply stored together. They can therefore be flattened into a one-dimensional vector and linearly weighted into a scalar l. The advantage is that the gradient no longer depends on the dimensions of the dependent variable: what you get is the gradient of l with respect to the independent variable, whatever the shape of the dependent tensor.
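A minimal sketch of both cases, assuming PyTorch. The function y = 3 * x * x and the weight values in w are made up for illustration and are not from the original notes.

```python
import torch

# Leaf tensor that tracks gradients, as in the notes.
x = torch.ones(2, 2, requires_grad=True)

# Case 1: scalar output, so backward() needs no argument.
out = (3 * x * x).sum()
out.backward()
grad_scalar = x.grad.clone()      # d(out)/dx = 6 * x

# Case 2: tensor output, so pass a weight tensor of the same shape.
x.grad.zero_()                    # gradients accumulate, so reset first
y = 3 * x * x                     # shape (2, 2), not a scalar
w = torch.tensor([[1.0, 0.1], [0.01, 0.001]])  # hypothetical weights
y.backward(w)                     # equivalent to (y * w).sum().backward()
grad_weighted = x.grad.clone()    # w * 6 * x

print(grad_scalar)
print(grad_weighted)
```

The gradient has the shape of x in both cases, which is the point made above: the shape of the dependent variable drops out once it is weighted into the scalar l.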

Linear regression

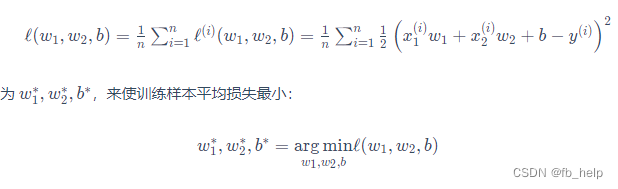

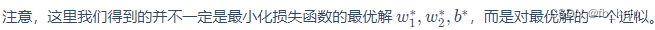

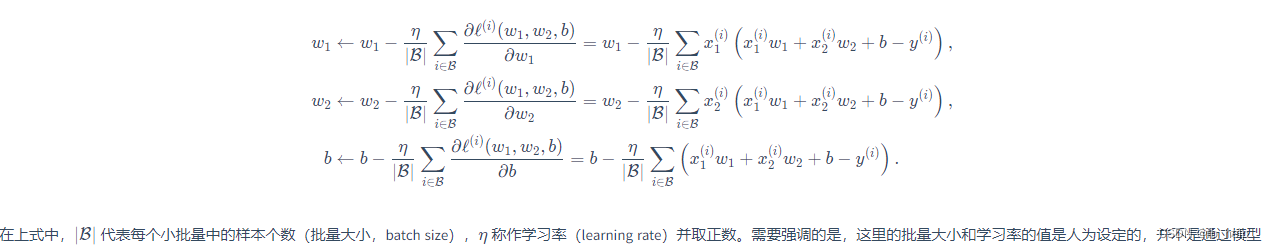

Solving for the parameters with gradient descent

The formula above can be solved analytically.
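As a rough illustration of the analytic route, the sketch below assumes the loss is the usual squared error (the formula images are not reproduced here, so this is an assumption) and solves the normal equations directly. The synthetic data and the values of true_w and true_b are hypothetical.

```python
import torch

torch.manual_seed(0)

# Hypothetical synthetic data: y = X @ true_w + true_b + small noise.
true_w = torch.tensor([2.0, -3.4])
true_b = 4.2
X = torch.randn(1000, 2)
y = X @ true_w + true_b + 0.01 * torch.randn(1000)

# Append a column of ones so the bias is learned as an extra weight.
X_aug = torch.cat([X, torch.ones(1000, 1)], dim=1)

# Normal equations: (X^T X) theta = X^T y, solved without an explicit inverse.
theta = torch.linalg.solve(X_aug.T @ X_aug, X_aug.T @ y)

print(theta)  # should be close to [2.0, -3.4, 4.2]
```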

The parameters of linear regression can also be found by gradient descent. Mini-batch stochastic gradient descent is usually used: the average gradient is computed over a small batch, and the parameters are updated over many batches.

The gradient is also multiplied by a learning rate, which acts as the step size. It can be somewhat larger at first and should get smaller later on.

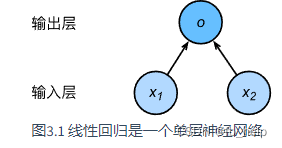
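The update step can be sketched as follows, assuming PyTorch. The helper names sgd and decayed_lr are illustrative, and the inverse-time decay schedule is only one hypothetical way to make the step size shrink; the notes do not specify a schedule.

```python
import torch

def sgd(params, lr, batch_size):
    """One mini-batch SGD step: param <- param - lr * grad / batch_size."""
    with torch.no_grad():
        for p in params:
            p -= lr * p.grad / batch_size
            p.grad.zero_()

def decayed_lr(lr0, epoch, decay=0.1):
    """Hypothetical schedule: larger steps early, smaller steps later."""
    return lr0 / (1 + decay * epoch)

# Tiny check on a single parameter with a loss summed over the batch.
w = torch.tensor([1.0], requires_grad=True)
batch = torch.tensor([1.0, 2.0, 3.0, 4.0])
loss = ((w * batch) ** 2 / 2).sum()
loss.backward()                        # grad = w * sum(batch**2) = 30
sgd([w], lr=0.1, batch_size=len(batch))
print(w)                               # 1 - 0.1 * 30 / 4 = 0.25
```

Dividing by batch_size turns the summed gradient into the batch average, so the learning rate keeps the same meaning when the batch size changes.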

Fully connected layer (dense layer)

Linear regression

- Build simulated data, and decide on the inputs (features), the outputs (labels), and the parameters

- Write a data loader (split the data into batches)

- Build the model function (net), the loss, and the optimization method

- Iterate over epochs to solve for the parameters
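The four steps above can be sketched end to end as follows. This is a minimal version under stated assumptions: the true parameters, noise level, batch size, learning rate, and number of epochs are all hypothetical choices, and the helper names (data_iter, squared_loss, sgd) are illustrative.

```python
import random
import torch

torch.manual_seed(0)
random.seed(0)

# 1. Simulated data: features, labels, and the (hypothetical) true parameters.
true_w, true_b = torch.tensor([2.0, -3.4]), 4.2
features = torch.randn(1000, 2)
labels = features @ true_w + true_b + 0.01 * torch.randn(1000)

# 2. Data loader: yield shuffled mini-batches.
def data_iter(batch_size, features, labels):
    indices = list(range(len(features)))
    random.shuffle(indices)
    for i in range(0, len(indices), batch_size):
        idx = torch.tensor(indices[i:i + batch_size])
        yield features[idx], labels[idx]

# 3. Model function, loss, and optimization method.
w = torch.zeros(2, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

def net(X):
    return X @ w + b

def squared_loss(y_hat, y):
    return (y_hat - y) ** 2 / 2

def sgd(params, lr, batch_size):
    with torch.no_grad():
        for p in params:
            p -= lr * p.grad / batch_size
            p.grad.zero_()

# 4. Iterate over epochs.
lr, num_epochs, batch_size = 0.03, 5, 10
for epoch in range(num_epochs):
    for X, y in data_iter(batch_size, features, labels):
        loss = squared_loss(net(X), y).sum()  # scalar, so backward() works
        loss.backward()
        sgd([w, b], lr, batch_size)

print(w, b)  # should end up near true_w and true_b
```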

Linear regression, concise version

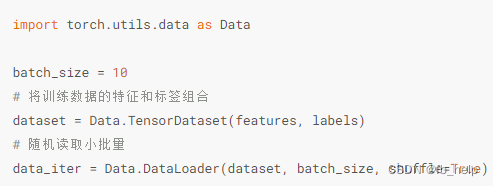

Reading the data

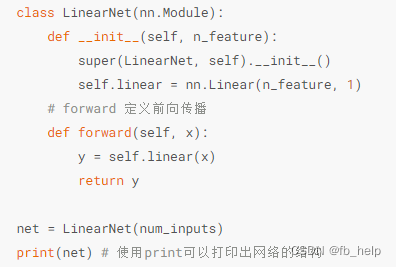

To define your own function, you need to specify the number of parameters and the forward function. The function is really just the relationship between input and output, so you supply how the output is computed from the input, which is the forward function. A neural network represents this functional relationship with a network structure.
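A minimal sketch of such a definition, assuming torch.nn.Module. The class name LinearNet and the n_features argument are illustrative and not taken from the original images.

```python
import torch
from torch import nn

class LinearNet(nn.Module):
    def __init__(self, n_features):
        super().__init__()
        # The layer size fixes the number of parameters: n_features weights + 1 bias.
        self.linear = nn.Linear(n_features, 1)

    def forward(self, x):
        # forward defines how the output is computed from the input.
        return self.linear(x)

net = LinearNet(n_features=2)
X = torch.randn(4, 2)
y_hat = net(X)                 # calling the module runs forward
n_params = sum(p.numel() for p in net.parameters())

print(y_hat.shape)             # torch.Size([4, 1])
print(n_params)                # 3
```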

Building the net with torch

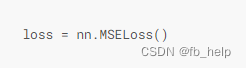

Loss

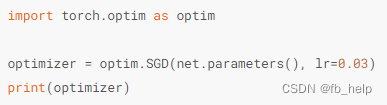

Optimization method
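The original content for this step is not available here, so the following is only a sketch of how an optimizer might be wired into the concise version, assuming torch.optim.SGD with nn.MSELoss and a DataLoader. The data, learning rate, batch size, and epoch count are hypothetical.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical synthetic data, with labels shaped (N, 1) to match the net output.
true_w, true_b = torch.tensor([2.0, -3.4]), 4.2
features = torch.randn(1000, 2)
labels = (features @ true_w + true_b + 0.01 * torch.randn(1000)).reshape(-1, 1)

loader = DataLoader(TensorDataset(features, labels), batch_size=10, shuffle=True)
net = nn.Linear(2, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.03)

for epoch in range(5):
    for X, y in loader:
        optimizer.zero_grad()          # clear gradients from the previous step
        loss = loss_fn(net(X), y)      # mean squared error over the batch
        loss.backward()
        optimizer.step()               # apply the SGD update

print(net.weight.data, net.bias.data)  # should end up near true_w and true_b
```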

边栏推荐

- cocos_ Lua listview loads too much data

- PostgreSQL surpasses mysql, and the salary of "the best programming language in the world" is low

- 《动手学深度学习》学习笔记

- Sixth note

- Common database statements in unity

- MySQL audit log Archive

- Emlog博客主题模板源码简约好看响应式

- Unity card flipping effect

- SQLServer 存储过程传递数组参数

- Unity check whether the two objects have obstacles by ray

猜你喜欢

随机推荐

Basic knowledge points

xss注入

Database under unity

Dotween usage records ----- appendinterval, appendcallback

UE 虚幻引擎,项目结构

Understand encodefloatrgba and decodefloatrgba

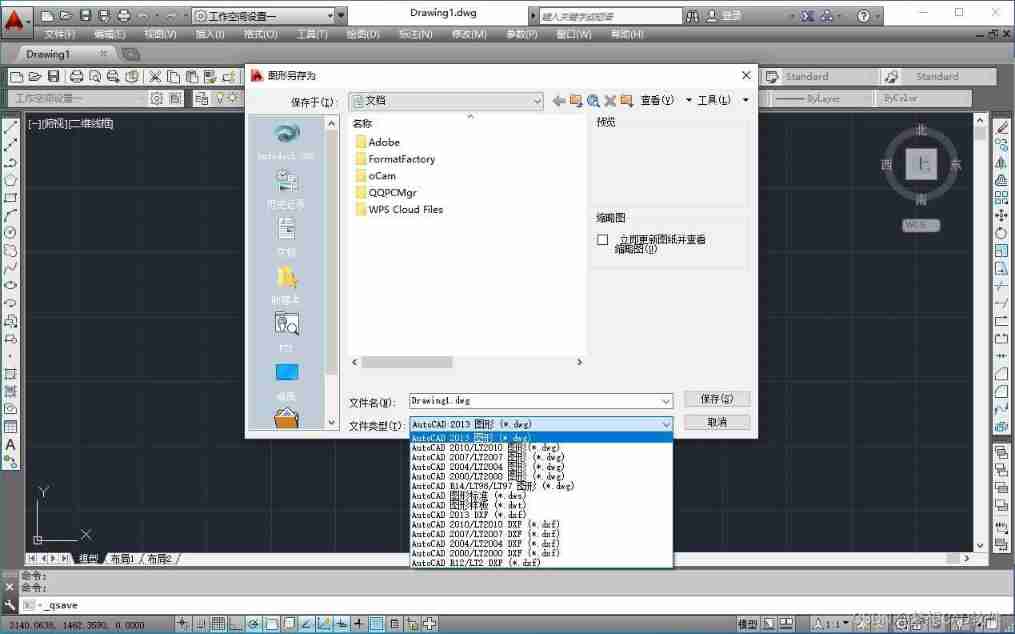

AutoCAD - scaling

mysql审计日志归档

中国艾草行业研究与投资前景预测报告(2022版)

Common database statements in unity

On-off and on-off of quality system construction

775 Div.1 C. Tyler and strings combinatorial mathematics

Redis 排查大 key 的4种方法,优化必备

Unity parallax infinite scrolling background

How much do you know about 3DMAX rendering skills and HDRI light sources? Dry goods sharing

2021-10-29

2022/7/1 learning summary

cocos2dx_ Lua card flip

Sqlserver stored procedures pass array parameters

中国溶聚丁苯橡胶(SSBR)行业研究与预测报告(2022版)