
4. Install and Deploy Spark (Spark on YARN Mode)

2022-07-06 11:30:00 【@Little snail】

Contents

- 4.1 Extract the Spark installation package to the user's home directory
- 4.2 Configure Hadoop environment variables
- 4.3 Verify the Spark installation
- 4.4 Restart the Hadoop cluster (to apply the configuration)
- 4.5 Enter the Spark installation directory
- 4.6 Install and deploy Spark SQL
- 4.6.1 Copy hdfs-site.xml from the Hadoop installation directory to Spark's conf directory
- 4.6.2 Copy hive-site.xml from the Hive installation's conf subdirectory to Spark's conf directory
- 4.6.3 Modify hive-site.xml in Spark's conf directory
- 4.6.4 Copy the MySQL JDBC driver package to Spark's jars subdirectory
- 4.6.5 Restart the Hadoop cluster and verify spark-sql
- 4.6.6 Press Ctrl+D to exit spark-sql
- 4.6.7 If the Hadoop cluster is no longer needed, shut it down

4.1 Extract the Spark installation package to the user's home directory with the following commands:

[[email protected] ~]$ cd /home/zkpk/tgz/spark/

[[email protected] spark]$ tar -xzvf spark-2.1.1-bin-hadoop2.7.tgz -C /home/zkpk/

[[email protected] spark]$ cd

[[email protected] ~]$ cd spark-2.1.1-bin-hadoop2.7/

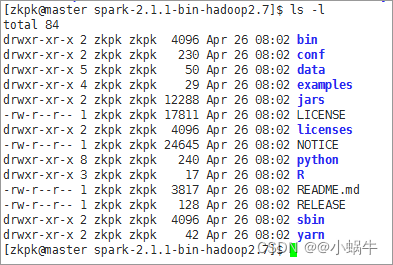

[[email protected] spark-2.1.1-bin-hadoop2.7]$ ls -l

perform ls -l The command will see the content shown in the following picture , These are Spark Included files :
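A quick way to confirm that the archive extracted completely is to check for the launch scripts this guide uses later. The function below is a minimal sketch; its name and the list of checked paths are assumptions based on the Spark 2.1.1 binary layout, not part of the original steps.

```shell
# Hypothetical helper: check that an extracted Spark directory contains the
# launch scripts and the jars/ directory used later in this guide.
check_spark_dist() {
  local dir="$1" rc=0 f
  for f in bin/spark-submit bin/spark-shell bin/spark-sql; do
    if [ ! -x "$dir/$f" ]; then
      echo "missing or not executable: $dir/$f" >&2
      rc=1
    fi
  done
  if [ ! -d "$dir/jars" ]; then
    echo "missing directory: $dir/jars" >&2
    rc=1
  fi
  return "$rc"
}
```

Usage: `check_spark_dist /home/zkpk/spark-2.1.1-bin-hadoop2.7 && echo "Spark layout OK"`. Missing paths are reported on standard error.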

4.2 Configure Hadoop environment variables

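4.2.1 To run Spark on YARN, configure the HADOOP_CONF_DIR, YARN_CONF_DIR and HDFS_CONF_DIR environment variables. (Spark itself requires at least one of HADOOP_CONF_DIR or YARN_CONF_DIR to point to the directory containing the Hadoop client configuration files.)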

4.2.1.1 Open `~/.bash_profile` for editing:

```shell
[zkpk@master ~]$ cd
[zkpk@master ~]$ gedit ~/.bash_profile
```

4.2.1.2 Add the following lines at the end of the file, then save and exit:

```shell
# SPARK ON YARN
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export HDFS_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
```

4.2.1.3 Reload the file so that the environment variables take effect:

```shell
[zkpk@master ~]$ source ~/.bash_profile
```
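To check that the variables were exported as intended, the sketch below (a hypothetical `check_conf_dirs` function) confirms that each one is set and points at a directory containing `core-site.xml`. Using `core-site.xml` as the marker file is an assumption; any file you know lives in `$HADOOP_HOME/etc/hadoop` would do.

```shell
# Hypothetical helper: verify that the Spark-on-YARN variables are exported
# and point at a Hadoop configuration directory (marker: core-site.xml).
check_conf_dirs() {
  local rc=0 var dir
  for var in HADOOP_CONF_DIR YARN_CONF_DIR HDFS_CONF_DIR; do
    # printenv prints the value of an exported variable (nothing if unset).
    dir=$(printenv "$var")
    if [ -z "$dir" ]; then
      echo "$var is not set" >&2
      rc=1
    elif [ ! -f "$dir/core-site.xml" ]; then
      echo "$var=$dir has no core-site.xml" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: after `source ~/.bash_profile`, run `check_conf_dirs && echo "conf dirs OK"`. If `HADOOP_HOME` was not yet set when the profile was sourced, the variables expand to `/etc/hadoop`, and this check reports the missing file.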

4.3 Verify the Spark installation

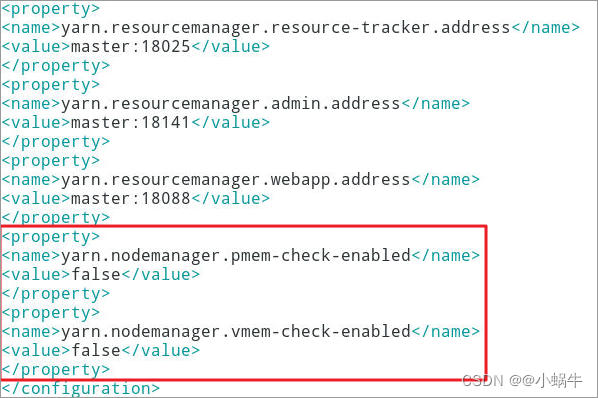

4.3.1 Modify `${HADOOP_HOME}/etc/hadoop/yarn-site.xml`

Note: this file must be modified in the same way on the master, slave01 and slave02 nodes.

4.3.2 Add the following two properties inside the `<configuration>` element:

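```shell
[zkpk@master ~]$ vim ~/hadoop-2.7.3/etc/hadoop/yarn-site.xml
```

```xml
<!-- Do not enforce physical memory limits on containers -->
<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>
<!-- Do not enforce virtual memory limits on containers -->
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>
```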
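Because the same edit is required on master, slave01 and slave02, it can help to confirm on each node that both properties were saved. The sketch below reads a property value from a Hadoop-style `*-site.xml` file with `awk`. The function name is hypothetical, and it assumes `<name>` appears before `<value>` inside each `<property>`, as in the snippet above.

```shell
# Hypothetical helper: print the <value> of the property called NAME in a
# Hadoop-style XML config file (prints nothing if the property is absent).
xml_prop_value() {
  local file="$1" name="$2"
  awk -v want="$name" '
    # Mark the current <property> block once its <name> matches exactly.
    index($0, "<name>" want "</name>") { hit = 1 }
    # Print the text between <value> and </value> (7 and 8 chars long).
    hit && match($0, /<value>[^<]*<\/value>/) {
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
    /<\/property>/ { hit = 0 }
  ' "$file"
}
```

Usage: on each node, `xml_prop_value ~/hadoop-2.7.3/etc/hadoop/yarn-site.xml yarn.nodemanager.vmem-check-enabled` should print `false`; repeat with `yarn.nodemanager.pmem-check-enabled`.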

4.4 Restart the Hadoop cluster (to apply the configuration)

```shell
[zkpk@master ~]$ stop-all.sh
[zkpk@master ~]$ start-all.sh
```
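After `start-all.sh`, the JDK's `jps` tool lists the Java daemons running on a node. The sketch below (hypothetical `missing_daemons` function) reads `jps` output on standard input and prints every expected daemon that is not running. The daemon lists in the usage note are assumptions for a typical Hadoop 2.7 layout with the NameNode and ResourceManager on master; adjust them to your cluster.

```shell
# Hypothetical helper: read `jps` output (lines like "12345 NameNode") on
# stdin and print each daemon name given as an argument that is not running.
missing_daemons() {
  local running d
  # Keep only the class-name column of the jps output.
  running=$(awk '{print $2}')
  for d in "$@"; do
    printf '%s\n' "$running" | grep -qxF "$d" || echo "$d"
  done
}
```

Usage: on master, `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`; on slave01 and slave02, `jps | missing_daemons DataNode NodeManager`. Empty output means every listed daemon is up.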

4.5 Enter the Spark installation directory

```shell
[zkpk@master ~]$ cd ~/spark-2.1.1-bin-hadoop2.7
```

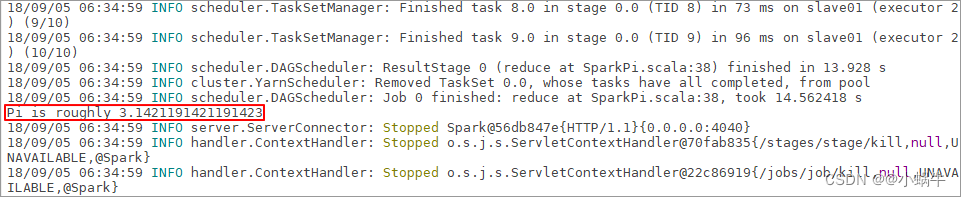

4.5.1 Run the following command (it is a single line):

```shell
[zkpk@master spark-2.1.1-bin-hadoop2.7]$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --num-executors 3 --driver-memory 1g --executor-memory 1g --executor-cores 1 examples/jars/spark-examples*.jar 10
```
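The `--num-executors` and `--executor-memory` flags determine how much memory YARN is asked to reserve. The sketch below estimates the container size for one executor. It assumes the Spark 2.x default executor memory overhead of max(384 MB, 10% of executor memory), and that the scheduler rounds each request up to a multiple of `yarn.scheduler.minimum-allocation-mb` (default 1024 MB), which is how the CapacityScheduler behaves. The function name is hypothetical.

```shell
# Hypothetical helper: estimate the YARN container size (MB) for one executor.
# Args: executor memory in MB, optional minimum allocation in MB (default 1024).
container_mb() {
  local exec_mb="$1" min_alloc_mb="${2:-1024}" overhead request
  # Assumed Spark 2.x default overhead: 10% of executor memory, at least 384 MB.
  overhead=$(( exec_mb / 10 ))
  if [ "$overhead" -lt 384 ]; then
    overhead=384
  fi
  request=$(( exec_mb + overhead ))
  # Round up to the next multiple of the scheduler's minimum allocation.
  echo $(( (request + min_alloc_mb - 1) / min_alloc_mb * min_alloc_mb ))
}
```

Usage: `container_mb 1024` prints `2048` (1024 + 384 = 1408, rounded up to 2048), so under these assumptions the three executors in the command above would reserve about 3 × 2048 = 6144 MB of NodeManager memory, not counting the ApplicationMaster container.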

4.5.2 When the command finishes, output like the following appears:

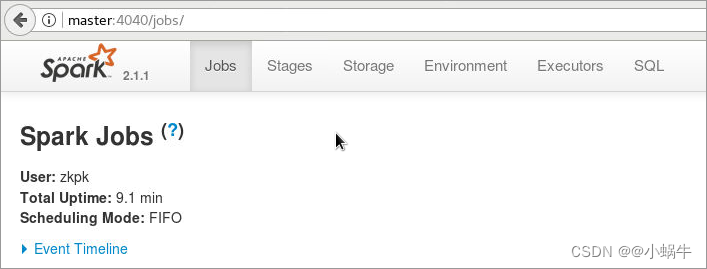
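The SparkPi example reports its result on a line of the form `Pi is roughly <value>`, where the value varies from run to run. In the default client deploy mode the driver runs locally, so this line appears in the console output. When the output is captured to a pipe or file instead of read off the screen, a small filter such as the hypothetical `extract_pi` below can pull the number out.

```shell
# Hypothetical helper: read spark-submit output on stdin and print the value
# from SparkPi's "Pi is roughly <value>" line (prints nothing if absent).
extract_pi() {
  sed -n 's/.*Pi is roughly \([0-9.]*\).*/\1/p'
}
```

Usage: append `2>&1 | extract_pi` to the command in 4.5.1 to print only the estimate.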

4.5.3 Web UI verification

4.5.3.1 Start the spark-shell interactive terminal with the following command:

```shell
[zkpk@master spark-2.1.1-bin-hadoop2.7]$ ./bin/spark-shell
```

4.5.3.2 While spark-shell is still running, open a browser and go to http://master:4040/ to view the application's web UI.

4.5.3.3 Exit the interactive terminal by pressing Ctrl+D, or by typing `:quit` at the `scala>` prompt:

```
scala> :quit
```

4.6 Install and deploy Spark SQL

4.6.1 Copy hdfs-site.xml from the Hadoop installation directory to Spark's conf directory

```shell
[zkpk@master spark-2.1.1-bin-hadoop2.7]$ cd
[zkpk@master ~]$ cd hadoop-2.7.3/etc/hadoop/
[zkpk@master hadoop]$ cp hdfs-site.xml /home/zkpk/spark-2.1.1-bin-hadoop2.7/conf
```

4.6.2 Copy hive-site.xml from the Hive installation's conf subdirectory to Spark's conf directory

```shell
[zkpk@master hadoop]$ cd
[zkpk@master ~]$ cd apache-hive-2.1.1-bin/conf/
[zkpk@master conf]$ cp hive-site.xml /home/zkpk/spark-2.1.1-bin-hadoop2.7/conf/
```

4.6.3 Modify hive-site.xml in Spark's conf directory

```shell
[zkpk@master conf]$ cd
[zkpk@master ~]$ cd spark-2.1.1-bin-hadoop2.7/conf/
[zkpk@master conf]$ vim hive-site.xml
```

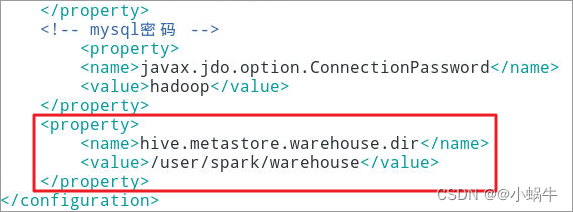

4.6.3.1 Add the following property:

```xml
<property>
  <name>hive.metastore.warehouse.dir</name>
  <value>/user/spark/warehouse</value>
</property>
```
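As an alternative to editing the file in vim, the property can be added from the command line. The sketch below (hypothetical `add_xml_prop` function) inserts a `<property>` block just before the closing `</configuration>` tag and skips the insert if a property with that name already exists. The copied hive-site.xml may already define `hive.metastore.warehouse.dir`; in that case, edit the existing `<value>` instead so the file does not end up with two entries.

```shell
# Hypothetical helper: add <property><name>NAME</name><value>VALUE</value>
# before </configuration> in a Hadoop/Hive-style XML file, unless NAME exists.
add_xml_prop() {
  local file="$1" name="$2" value="$3" tmp
  if grep -qF "<name>$name</name>" "$file"; then
    echo "$name already defined in $file; edit its value by hand" >&2
    return 0
  fi
  tmp=$(mktemp) || return 1
  # Print the new block once, right before the first </configuration> line.
  awk -v n="$name" -v v="$value" '
    /<\/configuration>/ && !done {
      print "  <property>"
      print "    <name>" n "</name>"
      print "    <value>" v "</value>"
      print "  </property>"
      done = 1
    }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Usage: `add_xml_prop ~/spark-2.1.1-bin-hadoop2.7/conf/hive-site.xml hive.metastore.warehouse.dir /user/spark/warehouse`.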

4.6.4 Copy the MySQL JDBC driver package to Spark's jars subdirectory

```shell
[zkpk@master conf]$ cd
[zkpk@master ~]$ cd apache-hive-2.1.1-bin/lib/
[zkpk@master lib]$ cp mysql-connector-java-5.1.28.jar /home/zkpk/spark-2.1.1-bin-hadoop2.7/jars/
```
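Before restarting the cluster, it may be worth confirming that the files copied in 4.6.1 to 4.6.4 are all in place. The sketch below uses a hypothetical function name and matches the driver jar with the pattern `mysql-connector-java-*.jar`, an assumption that covers the 5.1.28 jar used here.

```shell
# Hypothetical helper: check that the Spark SQL prerequisites were copied
# into the Spark installation directory given as the first argument.
check_spark_sql_files() {
  local spark="$1" rc=0 f
  for f in conf/hdfs-site.xml conf/hive-site.xml; do
    if [ ! -f "$spark/$f" ]; then
      echo "missing: $spark/$f" >&2
      rc=1
    fi
  done
  # The jar name depends on the MySQL connector version that was copied.
  if ! ls "$spark"/jars/mysql-connector-java-*.jar >/dev/null 2>&1; then
    echo "missing: $spark/jars/mysql-connector-java-*.jar" >&2
    rc=1
  fi
  return "$rc"
}
```

Usage: `check_spark_sql_files /home/zkpk/spark-2.1.1-bin-hadoop2.7 && echo "Spark SQL files OK"`.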

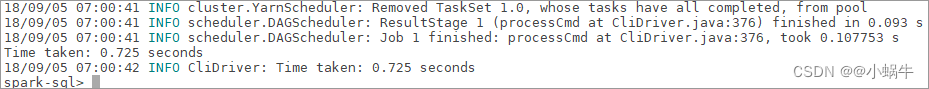

4.6.5 Restart the Hadoop cluster and verify spark-sql. Reaching the spark-sql client prompt, as shown in the figure, means Spark SQL is configured correctly.

```shell
[zkpk@master lib]$ cd
[zkpk@master ~]$ stop-all.sh
[zkpk@master ~]$ start-all.sh
[zkpk@master ~]$ cd ~/spark-2.1.1-bin-hadoop2.7
[zkpk@master spark-2.1.1-bin-hadoop2.7]$ ./bin/spark-sql --master yarn
```

4.6.6 Press Ctrl+D to exit spark-sql.

4.6.7 If the Hadoop cluster is no longer needed, shut it down:

```shell
[zkpk@master spark-2.1.1-bin-hadoop2.7]$ cd
[zkpk@master ~]$ stop-all.sh
```

边栏推荐

- 数据库高级学习笔记--SQL语句

- Database advanced learning notes -- SQL statement

- 解决安装Failed building wheel for pillow

- Solve the problem of installing failed building wheel for pilot

- 01 project demand analysis (ordering system)

- 学习问题1:127.0.0.1拒绝了我们的访问

- 误删Path变量解决

- 使用lambda在循环中传参时,参数总为同一个值

- L2-004 这是二叉搜索树吗? (25 分)

- double转int精度丢失问题

猜你喜欢

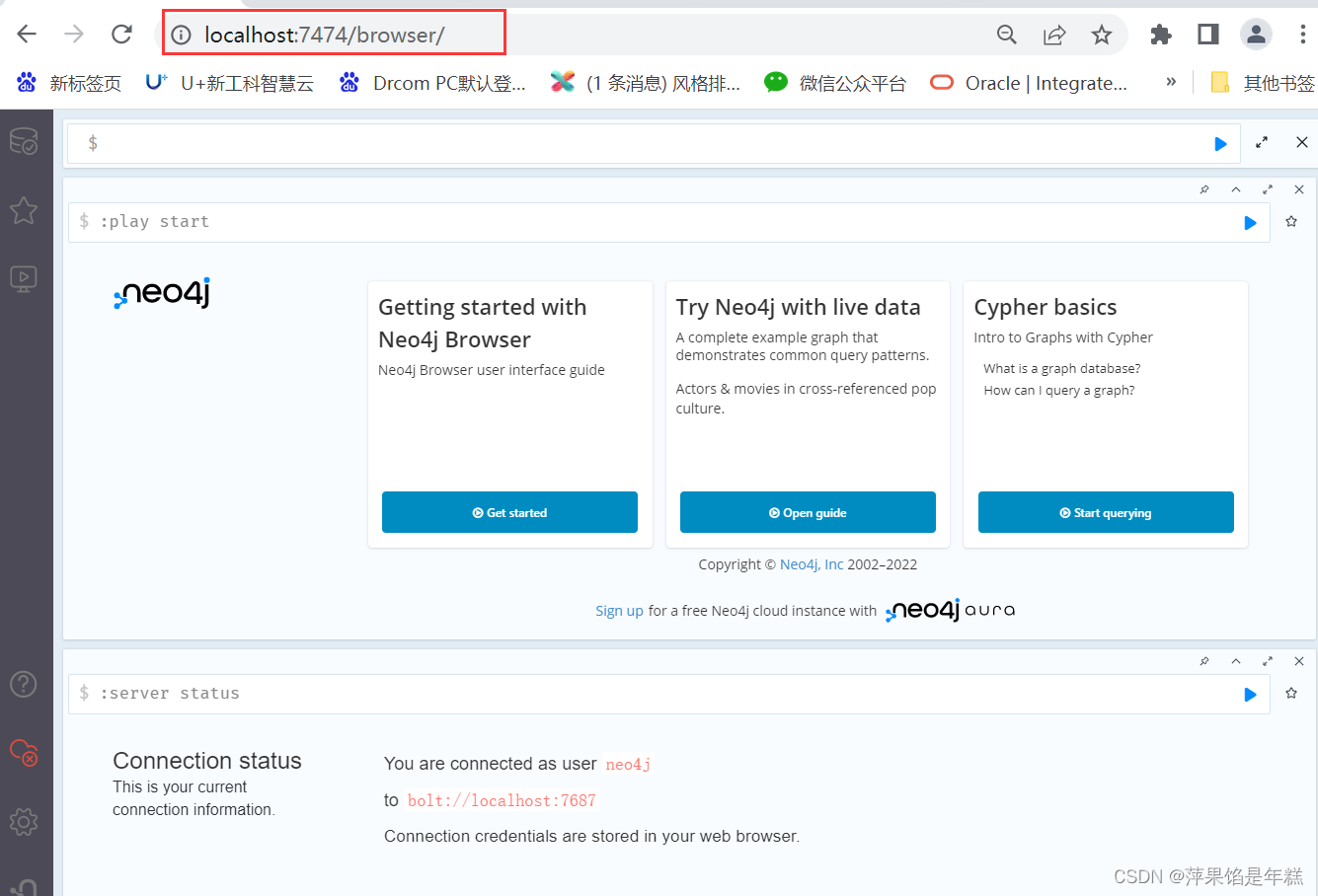

neo4j安装教程

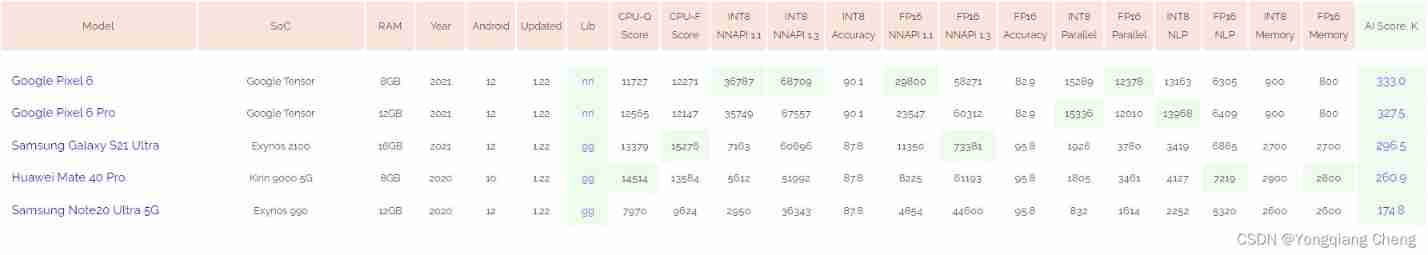

AI benchmark V5 ranking

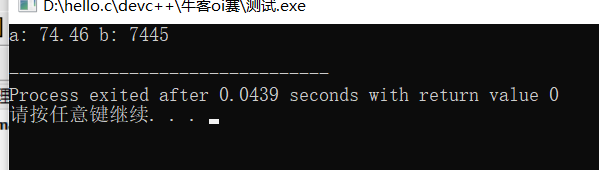

AcWing 242. A simple integer problem (tree array + difference)

一键提取pdf中的表格

Valentine's Day flirting with girls to force a small way, one can learn

double转int精度丢失问题

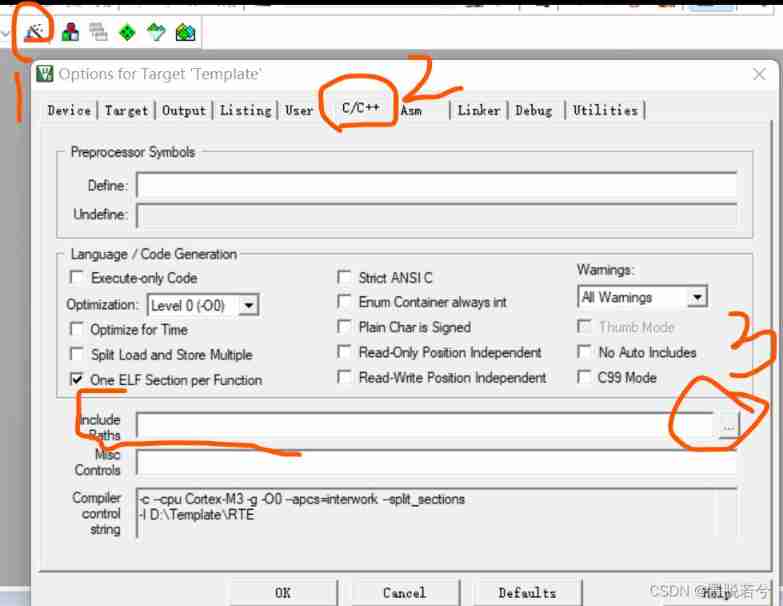

How to build a new project for keil5mdk (with super detailed drawings)

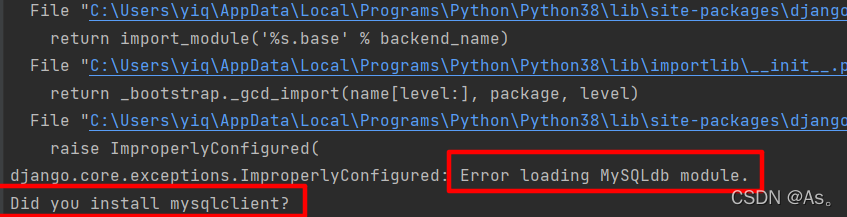

Django running error: error loading mysqldb module solution

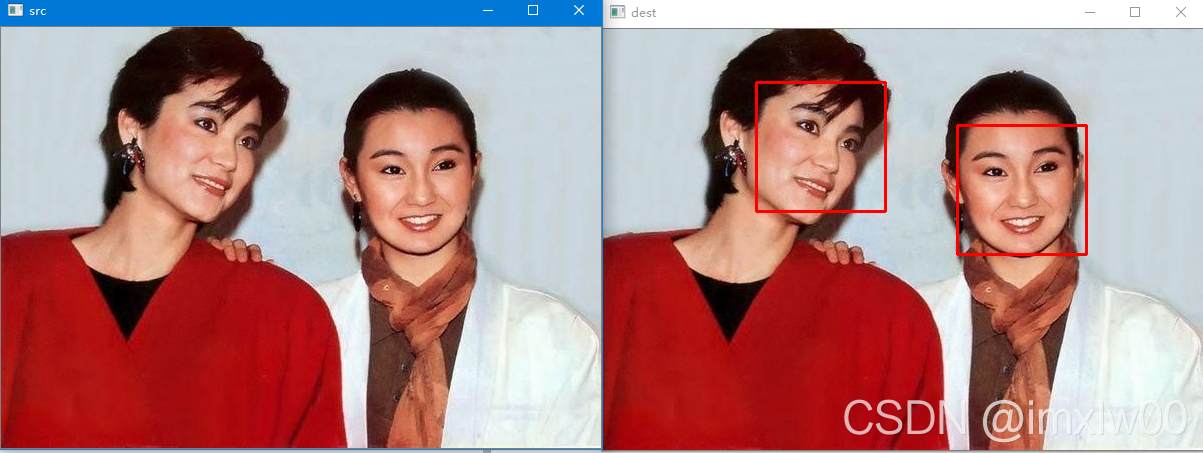

人脸识别 face_recognition

QT creator support platform

随机推荐

Basic use of redis

ES6 let 和 const 命令

Ansible practical series I_ introduction

Antlr4 uses keywords as identifiers

PyCharm中无法调用numpy,报错ModuleNotFoundError: No module named ‘numpy‘

【Flink】CDH/CDP Flink on Yarn 日志配置

Machine learning -- census data analysis

jS数组+数组方法重构

[Blue Bridge Cup 2017 preliminary] grid division

Double to int precision loss

Error reporting solution - io UnsupportedOperation: can‘t do nonzero end-relative seeks

AcWing 242. A simple integer problem (tree array + difference)

搞笑漫画:程序员的逻辑

L2-006 树的遍历 (25 分)

AcWing 1294. Cherry Blossom explanation

Learn winpwn (3) -- sEH from scratch

Learn winpwn (2) -- GS protection from scratch

MySQL与c语言连接(vs2019版)

Solution to the practice set of ladder race LV1 (all)

error C4996: ‘strcpy‘: This function or variable may be unsafe. Consider using strcpy_s instead