
[Text Generation] Paper collection recommendations: Stanford researchers introduce Time Control to make long text generation more fluent

2022-07-06 08:56:00 【Aminer academic search and scientific and technological informa】

In a recent study, a research group at Stanford University proposed Time Control (TC), a language model that plans implicitly through a latent stochastic process and generates text consistent with that latent plan, improving the quality of long text generation. First, some background on text generation:

Text generation is an important research area in natural language processing with broad application prospects. Systems such as Automated Insights, Narrative Science, and the "Xiaonan" and "Xiaoming" robots are already in use, both in China and abroad. These systems generate news, financial reports, and other explanatory text from structured data or natural-language text.
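To make the "latent stochastic process" behind Time Control more concrete: in the underlying paper (Wang et al., "Language Modeling via Stochastic Processes", ICLR 2022) that process is a Brownian bridge, so the latent plan is pinned to a start point and an end point and wanders randomly between them. The Python sketch below only samples such a bridge path over a fixed number of steps. The learned encoder that produces the endpoints and the decoder that generates text from each latent are not shown, and the function name `brownian_bridge_path` and its `sigma` parameter are illustrative assumptions, not the authors' code.

```python
import math
import random


def brownian_bridge_path(z_start, z_end, num_steps, sigma=1.0, seed=None):
    """Sample latents z_0..z_T of a discrete Brownian bridge pinned at both ends.

    z_start and z_end are equal-length lists of floats; num_steps is T.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    rng = random.Random(seed)
    path = [list(z_start)]
    for t in range(1, num_steps):
        prev = path[-1]
        remaining = num_steps - t + 1  # steps left from time t-1 to time T
        # Conditioned on z_{t-1} and z_T, the next point moves 1/remaining of the
        # way toward z_T, with variance sigma^2 * (remaining - 1) / remaining.
        std = sigma * math.sqrt((remaining - 1) / remaining)
        path.append([p + (e - p) / remaining + rng.gauss(0.0, std)
                     for p, e in zip(prev, z_end)])
    path.append(list(z_end))  # the bridge is pinned at z_T
    return path
```

With `sigma=0.0` the path reduces to straight-line interpolation between the endpoints; larger values let the plan drift more while still ending exactly at `z_end`.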

AMiner has prepared a 【Text Generation】 paper collection for you. Click the link to view it: https://www.aminer.cn/topic/61cc80ef42323c8f84232085?f=cs

Below are some high-quality papers selected from the collection:

1. SALSA-TEXT: self attentive latent space based adversarial text generation

“We propose a novel self-attention-based architecture to improve the performance of adversarial latent code schemes in text generation… In this paper, we take a step toward strengthening the architectures used in these settings, especially AAE and ARAE. We benchmark two latent code approaches based on adversarial settings (AAE and ARAE)… Experiments show that the proposed (self-)attention-based models outperform the state of the art in adversarial code-based text generation…”

PDF download link: https://www.aminer.cn/pub/5bdc31c217c44a1f58a0cc6c/?f=cs
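The core operation named in the SALSA-TEXT abstract is self-attention. As a rough illustration only (the paper places attention inside adversarially trained autoencoders such as AAE and ARAE, which is not modeled here), the sketch below implements plain scaled dot-product self-attention over a sequence of vectors in pure Python. It assumes the queries, keys and values are the input vectors themselves rather than learned projections.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections).

    x is a list of equal-length vectors (one per position); returns one vector per position.
    """
    d = len(x[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in x:
        # Similarity of this position's query to every key, scaled by 1/sqrt(d).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in x]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out
```

Each output row is a weighted average of all input rows, with weights given by a softmax over scaled dot products, so every position can draw on context from the whole sequence.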

2. Text Generation Based on Generative Adversarial Nets with Latent Variable

“In this paper, we propose a model that uses a generative adversarial network (GAN) to generate realistic text… In the proposed VGAN model, the generative model consists of a recurrent neural network and a VAE, and the discriminative model is a convolutional neural network. We train the model with policy gradient. We apply the proposed model to the text generation task and compare it with other recent neural-network-based models, such as the recurrent neural network language model and SeqGAN…”

PDF download link: https://www.aminer.cn/pub/5a73cbcc17c44a0b3035f5f0/?f=cs
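Sampled discrete tokens are not differentiable, so GAN-based text generators such as SeqGAN (and, per its abstract, VGAN) pass the discriminator's signal back to the generator with a policy gradient. The toy Python sketch below shows the REINFORCE update on a one-token "generator" parameterized by softmax logits. The `reward_fn` argument is an assumed stand-in for a discriminator score, and nothing about VGAN's RNN/VAE generator or CNN discriminator is modeled.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_train(reward_fn, vocab_size, steps=500, lr=0.5, seed=0):
    """Toy REINFORCE: a one-token policy learns to emit tokens that earn high reward.

    reward_fn(token) stands in for a discriminator score on a sampled token.
    Returns the final token probabilities.
    """
    rng = random.Random(seed)
    logits = [0.0] * vocab_size
    for _ in range(steps):
        probs = softmax(logits)
        token = rng.choices(range(vocab_size), weights=probs)[0]  # sample an action
        reward = reward_fn(token)
        # The gradient of log softmax(logits)[token] w.r.t. logits is onehot(token) - probs;
        # scaling it by the reward raises the log-probability of rewarded tokens.
        for i in range(vocab_size):
            grad = (1.0 if i == token else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return softmax(logits)
```

For example, `reinforce_train(lambda tok: 1.0 if tok == 2 else 0.0, vocab_size=3)` concentrates almost all probability on token 2. In a real SeqGAN-style setup the reward is computed on a full sampled sequence and a baseline is typically subtracted to reduce variance; both are omitted here for brevity.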

3. Content preserving text generation with attribute controls

“In this work, we address the problem of modifying the textual attributes of sentences. Given an input sentence and a set of attribute labels, we aim to generate sentences that are compatible with the conditioning information. To ensure that the model generates content-compatible sentences, we introduce a reconstruction loss that interpolates between auto-encoding and back-translation loss components…”

PDF download link: https://www.aminer.cn/pub/5c8fb7d04895d9cbc65d4525/?f=cs
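The abstract does not spell out how the two reconstruction terms are combined. One simple reading of "interpolates between" is a convex combination controlled by a weight `lam`, sketched below in Python. This is an assumption for illustration and the paper's exact formulation may differ; the per-token probabilities are hypothetical stand-ins for a decoder's outputs when reconstructing the input sentence.

```python
import math


def nll(token_probs):
    """Negative log-likelihood of a reconstructed sentence from per-token probabilities."""
    return -sum(math.log(p) for p in token_probs)


def interpolated_reconstruction_loss(ae_token_probs, bt_token_probs, lam):
    """Assumed convex combination of an auto-encoding and a back-translation loss.

    lam = 1.0 gives pure auto-encoding; lam = 0.0 gives pure back-translation.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    return lam * nll(ae_token_probs) + (1.0 - lam) * nll(bt_token_probs)
```

Auto-encoding alone tends to let a model copy its input, while back-translation forces it to reconstruct through an attribute-modified sentence; a weight between the two trades these off.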

4. Optimizing Referential Coherence in Text Generation

“This paper describes an implemented system that uses centering theory to plan coherent text and to select referring expressions. We argue that text and sentence planning should be driven in part by the goal of maintaining referential continuity, thereby facilitating pronoun resolution: obtaining a favorable ordering of clauses, and of arguments within clauses, may increase the opportunities for using unambiguous pronouns…”

PDF download link: https://www.aminer.cn/pub/53e9b0b2b7602d9703b1e972/?f=cs
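Centering theory rates how smoothly attention moves between adjacent utterances. The Python sketch below classifies transitions using the standard definitions: Cb (the backward-looking center) is the highest-ranked entity of the previous utterance's forward-looking centers Cf that is realized in the current utterance, and Cp is the current utterance's top-ranked entity. It is not the paper's planner. A generator could prefer orderings that yield CONTINUE over RETAIN over SMOOTH-SHIFT over ROUGH-SHIFT, but the paper's actual cost function is not reproduced, and utterances are assumed to be given as pre-ranked entity lists.

```python
def backward_center(prev_cf, cur_cf):
    """Cb(U_n): highest-ranked entity of Cf(U_{n-1}) that is also realized in U_n."""
    for entity in prev_cf:
        if entity in cur_cf:
            return entity
    return None


def centering_transitions(utterances):
    """Label each adjacent pair of utterances with a centering transition.

    Each utterance is its list of forward-looking centers Cf, ranked from most
    to least salient (for example subject before object).
    """
    labels = []
    prev_cb = None  # Cb of the previous utterance; undefined for the first one
    for prev_cf, cur_cf in zip(utterances, utterances[1:]):
        cb = backward_center(prev_cf, cur_cf)
        if cb is None:
            labels.append("NO-CB")  # no entity carried over between the utterances
            prev_cb = None
            continue
        cp = cur_cf[0]  # preferred center: top-ranked entity of the current Cf
        same_cb = prev_cb is None or cb == prev_cb
        if same_cb and cb == cp:
            labels.append("CONTINUE")
        elif same_cb:
            labels.append("RETAIN")
        elif cb == cp:
            labels.append("SMOOTH-SHIFT")
        else:
            labels.append("ROUGH-SHIFT")
        prev_cb = cb
    return labels
```

For instance, keeping "john" as the subject across several sentences yields CONTINUE transitions, the configuration in which a pronoun for "john" is least ambiguous.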

5. Text generation from keywords

“We describe a method for generating sentences from “keywords” or “headwords”. The method consists of two main parts: candidate-text construction and evaluation… The model considers not only n-gram information about words but also dependency information between words. In addition, it considers string information and morphological information…”

PDF download link: https://www.aminer.cn/pub/53e99adcb7602d9702360b5a/?f=cs
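The two-stage structure in the keyword paper's abstract (construct candidates, then evaluate them) can be illustrated using only its n-gram part. In the Python sketch below, candidates are simply all orderings of the keywords and each one is scored with a bigram language model. The bigram table passed in is a hypothetical toy, the `unseen` back-off probability is an arbitrary assumption, and the dependency, string and morphological features the paper also uses are omitted.

```python
import itertools
import math


def bigram_score(tokens, bigram_probs, unseen=1e-6):
    """Sum of log bigram probabilities, with sentence-boundary markers added."""
    padded = ["<s>"] + list(tokens) + ["</s>"]
    return sum(math.log(bigram_probs.get((a, b), unseen))
               for a, b in zip(padded, padded[1:]))


def best_ordering(keywords, bigram_probs):
    """Candidate construction: every keyword ordering. Evaluation: keep the best-scoring one."""
    candidates = itertools.permutations(keywords)
    return max(candidates, key=lambda c: bigram_score(c, bigram_probs))
```

For example, with a toy table containing only the bigrams of "<s> dogs chase cats </s>", `best_ordering(["cats", "chase", "dogs"], probs)` returns `("dogs", "chase", "cats")`, because every other ordering contains an unseen bigram. Enumerating all permutations grows factorially, so this brute-force construction is only practical for a handful of keywords.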

Click the link to visit the AMiner website and see more good papers: https://www.aminer.cn/?f=cs

边栏推荐

- CSP first week of question brushing

- LeetCode:26. Remove duplicates from an ordered array

- 超高效!Swagger-Yapi的秘密

- TP-LINK enterprise router PPTP configuration

- LeetCode:498. 对角线遍历

- ROS compilation calls the third-party dynamic library (xxx.so)

- 力扣每日一题(二)

- What is the role of automated testing frameworks? Shanghai professional third-party software testing company Amway

- TP-LINK 企业路由器 PPTP 配置

- How to effectively conduct automated testing?

猜你喜欢

Cesium draw points, lines, and faces

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

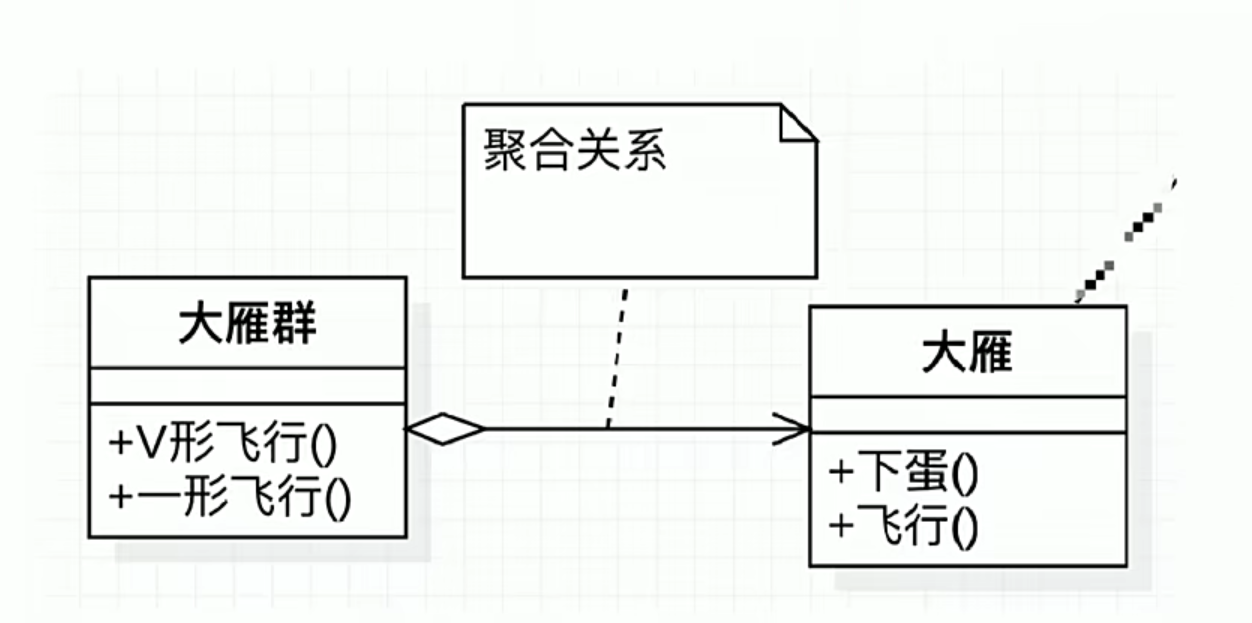

UML圖記憶技巧

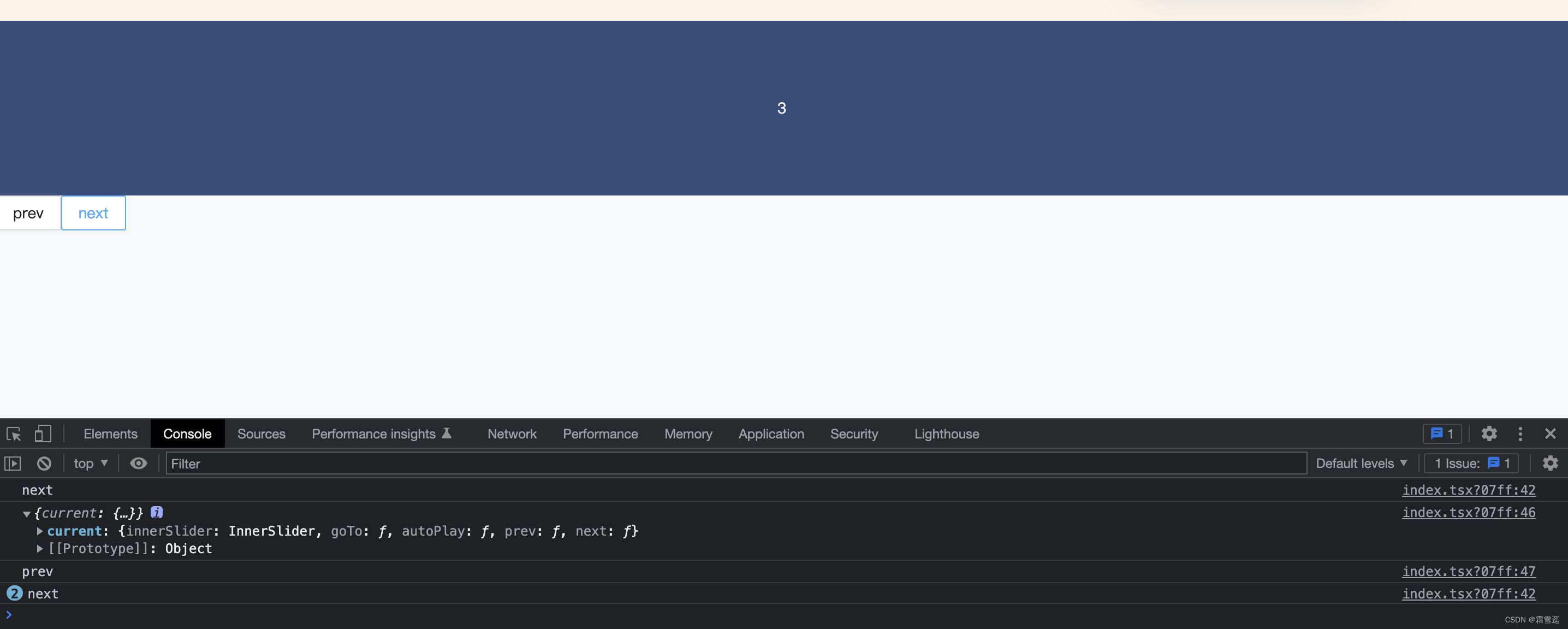

ant-design的走马灯(Carousel)组件在TS(typescript)环境中调用prev以及next方法

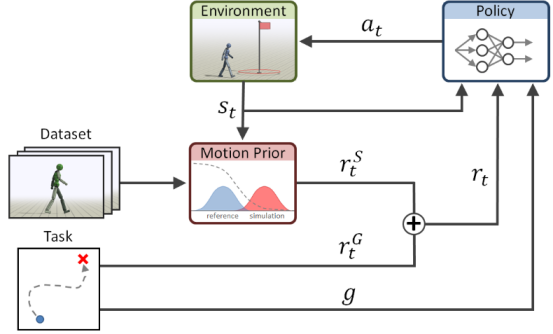

Current situation and trend of character animation

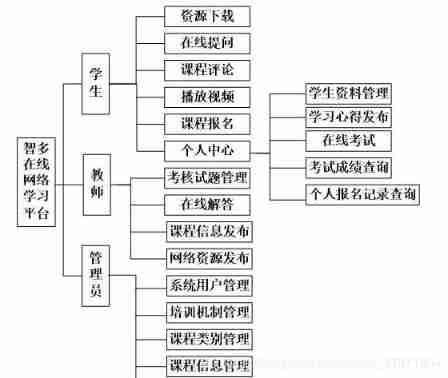

Computer graduation design PHP Zhiduo online learning platform

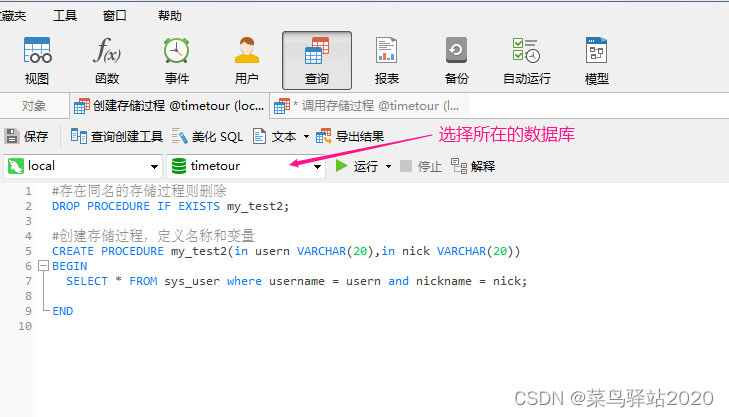

Navicat Premium 创建MySql 创建存储过程

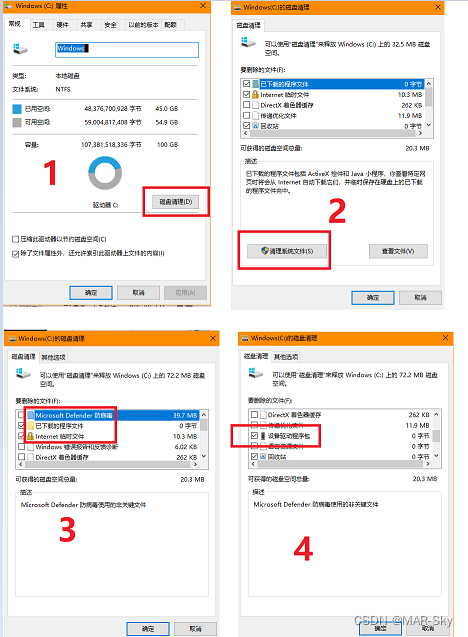

Computer cleaning, deleted system files

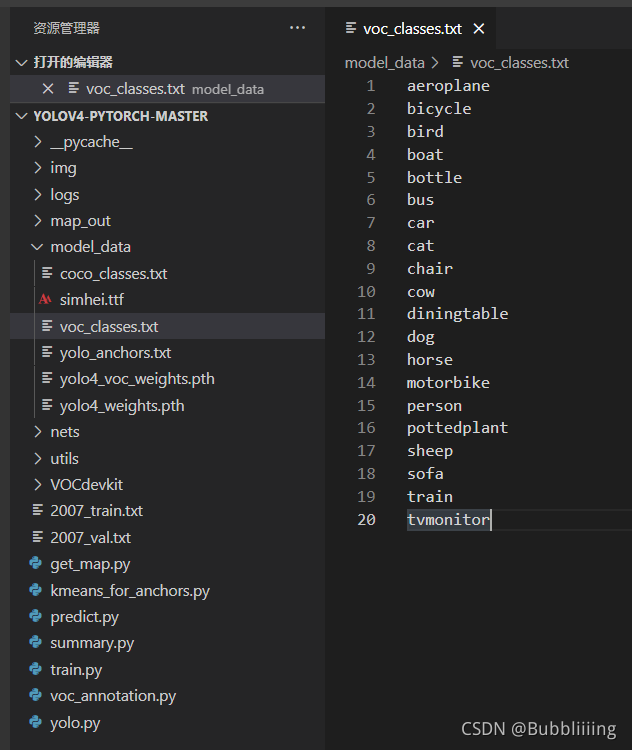

目标检测——Pytorch 利用mobilenet系列(v1,v2,v3)搭建yolov4目标检测平台

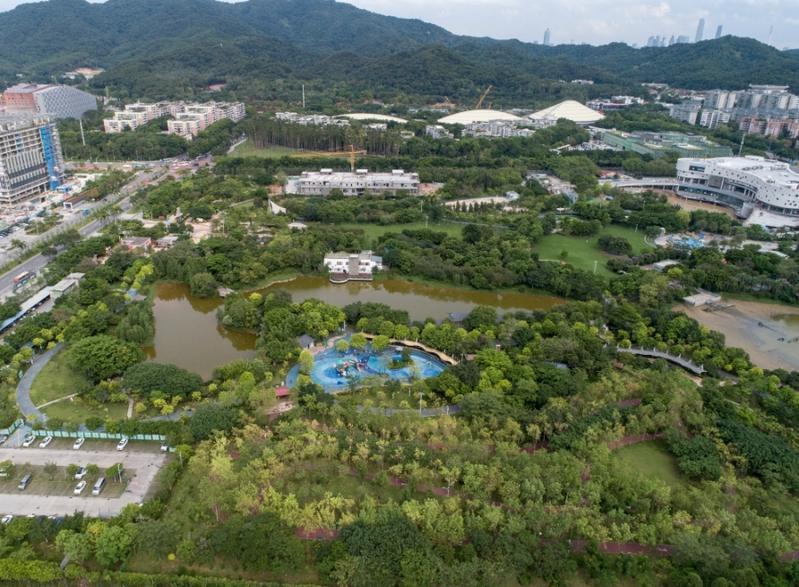

广州推进儿童友好城市建设,将探索学校周边200米设安全区域

随机推荐

poi追加写EXCEL文件

Light of domestic games destroyed by cracking

使用标签模板解决用户恶意输入的问题

Navicat premium create MySQL create stored procedure

After reading the programmer's story, I can't help covering my chest...

Indentation of tabs and spaces when writing programs for sublime text

有效提高软件产品质量,就找第三方软件测评机构

LeetCode:41. 缺失的第一个正数

POI add write excel file

Computer cleaning, deleted system files

LeetCode:剑指 Offer 03. 数组中重复的数字

LeetCode:836. 矩形重叠

Leetcode: Sword finger offer 48 The longest substring without repeated characters

LeetCode:剑指 Offer 42. 连续子数组的最大和

SAP ui5 date type sap ui. model. type. Analysis of the parsing format of date

LeetCode:劍指 Offer 42. 連續子數組的最大和

Super efficient! The secret of swagger Yapi

Leetcode: Jianzhi offer 04 Search in two-dimensional array

LeetCode:162. Looking for peak

广州推进儿童友好城市建设,将探索学校周边200米设安全区域