
Three Python tips for reading, creating and running multiple files

2020-11-06 01:28:00 【Artificial intelligence meets pioneer】

Author | Khuyen Tran   Compiled by | VK   Source | Towards Data Science

Motivation

When you put code into production, you will probably need to deal with how your code and data files are organized. Reading, creating, and running many files by hand is time-consuming. This article shows you how to automatically:

- Loop through the files in a directory

- Create nested directories if they don't exist

- Run a file with different inputs using a bash for loop

These techniques have saved me a lot of time on data science projects. I hope you'll find them useful, too!

Loop through the files in the directory

Suppose we want to read and process multiple data files laid out like this:

├── data
│   ├── data1.csv
│   ├── data2.csv
│   └── data3.csv
└── main.py

We can try to read one file at a time manually:

import pandas as pd

def process_data(df):
    # Placeholder for the real processing logic
    pass

# Read and process each file by hand
df = pd.read_csv('data/data1.csv')
process_data(df)

df2 = pd.read_csv('data/data2.csv')
process_data(df2)

df3 = pd.read_csv('data/data3.csv')
process_data(df3)

This works, but it does not scale once we have more than three files. If the only thing that changes in the script above is the file name, why not use a for loop to visit each file?

The following script lets us iterate over the files in a specified directory:

import os

import pandas as pd

def loop_directory(directory: str):
    '''Loop through the files in the directory'''
    for filename in os.listdir(directory):
        if filename.endswith(".csv"):
            # Build the full path from the directory and the file name
            file_directory = os.path.join(directory, filename)
            print(file_directory)
            pd.read_csv(file_directory)
        else:
            # Skip anything that is not a CSV file
            continue

if __name__ == '__main__':
    loop_directory('data/')

data/data3.csv

data/data2.csv

data/data1.csv

Here is an explanation of the script above:

- for filename in os.listdir(directory): loops through the entries in the given directory.

- if filename.endswith(".csv"): keeps only the files whose names end in ".csv".

- file_directory = os.path.join(directory, filename): joins the parent directory ('data') and the file name into a full path.

Now we can access all the files in the "data" directory!
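One detail worth noting: os.listdir returns names in arbitrary order, which is why the output above lists data3.csv first. When the order matters, something like the following sketch may help. It uses pathlib from the standard library; the helper name list_csv_files is made up for illustration, and it assumes the CSV files sit directly inside the directory (no recursion into subdirectories).

```python
from pathlib import Path


def list_csv_files(directory):
    """Return the paths of the .csv files directly inside `directory`, sorted by name.

    `list_csv_files` is a hypothetical helper, not part of the original script.
    """
    # Path.glob makes no ordering guarantee, so sort for reproducible runs
    return sorted(str(path) for path in Path(directory).glob("*.csv") if path.is_file())
```

Calling list_csv_files('data') returns a list of path strings that can be passed to pd.read_csv one by one.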

Create nested directories if they don't exist

Sometimes we want to create nested directories to organize code or models, which makes them easier to find later. For example, we can use "model1" to denote a specific kind of feature engineering.

With model1, we may want to train our data with different types of machine learning models ("model1/XGBoost").

For each machine learning model, we may even want to save several versions, because each version uses different parameters.

As a result, our model directory can look as complex as this:

model
├── model1
│   ├── NaiveBayes
│   └── XGBoost
│       ├── version_1
│       └── version_2
└── model2
    ├── NaiveBayes
    └── XGBoost
        ├── version_1
        └── version_2

Creating these nested directories by hand for every model we build can take a lot of time. Is there a way to automate the process? Yes: os.makedirs(datapath).

import os

def create_path_if_not_exists(datapath):
    '''Create the directory, including any missing parents, if it doesn't exist'''
    if not os.path.exists(datapath):
        os.makedirs(datapath)

if __name__ == '__main__':
    create_path_if_not_exists('model/model1/XGBoost/version_1')

Run the file above, and you should see the nested directory 'model/model1/XGBoost/version_1' created automatically!

Now you can save the model or data to the new directory:

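import os

import joblib

def create_path_if_not_exists(datapath):
    '''Create the directory if it doesn't exist'''
    if not os.path.exists(datapath):
        os.makedirs(datapath)

if __name__ == '__main__':
    # model = ...  (your trained model)

    # Create the directory
    model_path = 'model/model2/XGBoost/version_2'
    create_path_if_not_exists(model_path)

    # Save the model to a file inside that directory
    # ('model.pkl' is an assumed file name; joblib.dump needs a file path, not a directory)
    joblib.dump(model, os.path.join(model_path, 'model.pkl'))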
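The check-then-create pattern above can also be collapsed into a single call, because os.makedirs accepts an exist_ok flag. A minimal sketch, where the function name ensure_dir is hypothetical:

```python
import os


def ensure_dir(datapath):
    """Create `datapath` and any missing parent directories, then return it."""
    # exist_ok=True avoids FileExistsError when the directory is already there
    os.makedirs(datapath, exist_ok=True)
    return datapath
```

Calling ensure_dir('model/model1/XGBoost/version_1') twice in a row is safe; the second call simply does nothing.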

Bash for Loop: Run a file with different parameters

What if we want to run a file with different parameters? For example, we may want to use the same script to predict data with different models.

import os

import joblib

# df = ...

model_path = 'model/model1/XGBoost/version_1'

# 'model.pkl' is an assumed file name inside the version directory
model = joblib.load(os.path.join(model_path, 'model.pkl'))
model.predict(df)

If the script takes a long time to run and we have several models to run, waiting for one run to finish before starting the next is very time-consuming. Is there a way to tell the computer to run models 1, 2, 3, ..., 10 with a single command, and then go do something else?

Yes, with a bash for loop. First, we use sys.argv so that the script can read its command-line arguments. If you want to override a configuration file from the command line, you can also use a tool such as Hydra.

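import os
import sys

import joblib

# df = ...

# Read the model type and version from the command line
model_type = sys.argv[1]
model_version = sys.argv[2]

model_path = f'model/model1/{model_type}/version_{model_version}'
print('Loading model from', model_path, 'for training')

# 'model.pkl' is an assumed file name inside the version directory
model = joblib.load(os.path.join(model_path, 'model.pkl'))
model.predict(df)

$ python train.py XGBoost 1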

Loading model from model/model1/XGBoost/version_1 for training

Great! From the command line, we just told our script to use the XGBoost model, version 1, to predict the data. Now we can use a bash for loop to run through different versions of the model.

If you can write a for loop in Python, you can also write one in the terminal:

$ for version in 1 2 3 4
> do
> python train.py XGBoost $version
> done

Press Enter at the end of each line.

Output:

Loading model from model/model1/XGBoost/version_1 for training

Loading model from model/model1/XGBoost/version_2 for training

Loading model from model/model1/XGBoost/version_3 for training

Loading model from model/model1/XGBoost/version_4 for training
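For comparison, the same loop can be driven from Python, which may be handy where bash is not available. The sketch below assumes train.py takes the model type and the version as its two positional arguments, as in the script above; build_commands and run_all are hypothetical names.

```python
import subprocess
import sys


def build_commands(script, model_type, versions):
    """Build one argument list per version: [python, script, model_type, version]."""
    return [[sys.executable, script, model_type, str(version)] for version in versions]


def run_all(commands):
    """Run the commands one after another, stopping at the first failure."""
    for command in commands:
        # check=True raises CalledProcessError if a run exits with a non-zero status
        subprocess.run(command, check=True)
```

With these helpers, run_all(build_commands('train.py', 'XGBoost', [1, 2, 3, 4])) performs the same four runs as the bash loop, in order.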

Now you can run the script with different models and do other things in the meantime. How convenient!

Conclusion

Congratulations! You have just learned how to read and create multiple files automatically, and how to run a file with different parameters. The time you used to spend reading, writing, and running files by hand can now go to more important tasks.

If any part of the article is unclear, I have put concrete examples in this repository: https://github.com/khuyentran1401/Data-science/tree/master/python/python_tricks

Link to the original text: https://towardsdatascience.com/3-python-tricks-to-read-create-and-run-multiple-files-automatically-5221ebaad2ba

Welcome to join us AI Blog station : http://panchuang.net/

sklearn Machine learning Chinese official documents : http://sklearn123.com/

Welcome to pay attention to pan Chuang blog resource summary station : http://docs.panchuang.net/

版权声明

本文为[Artificial intelligence meets pioneer]所创,转载请带上原文链接,感谢

边栏推荐

- 6.3 handlerexceptionresolver exception handling (in-depth analysis of SSM and project practice)

- [JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor

- 采购供应商系统是什么?采购供应商管理平台解决方案

- Just now, I popularized two unique skills of login to Xuemei

- Using Es5 to realize the class of ES6

- 每个前端工程师都应该懂的前端性能优化总结:

- 中小微企业选择共享办公室怎么样?

- 一篇文章带你了解CSS 渐变知识

- 從小公司進入大廠,我都做對了哪些事?

- Our best practices for writing react components

猜你喜欢

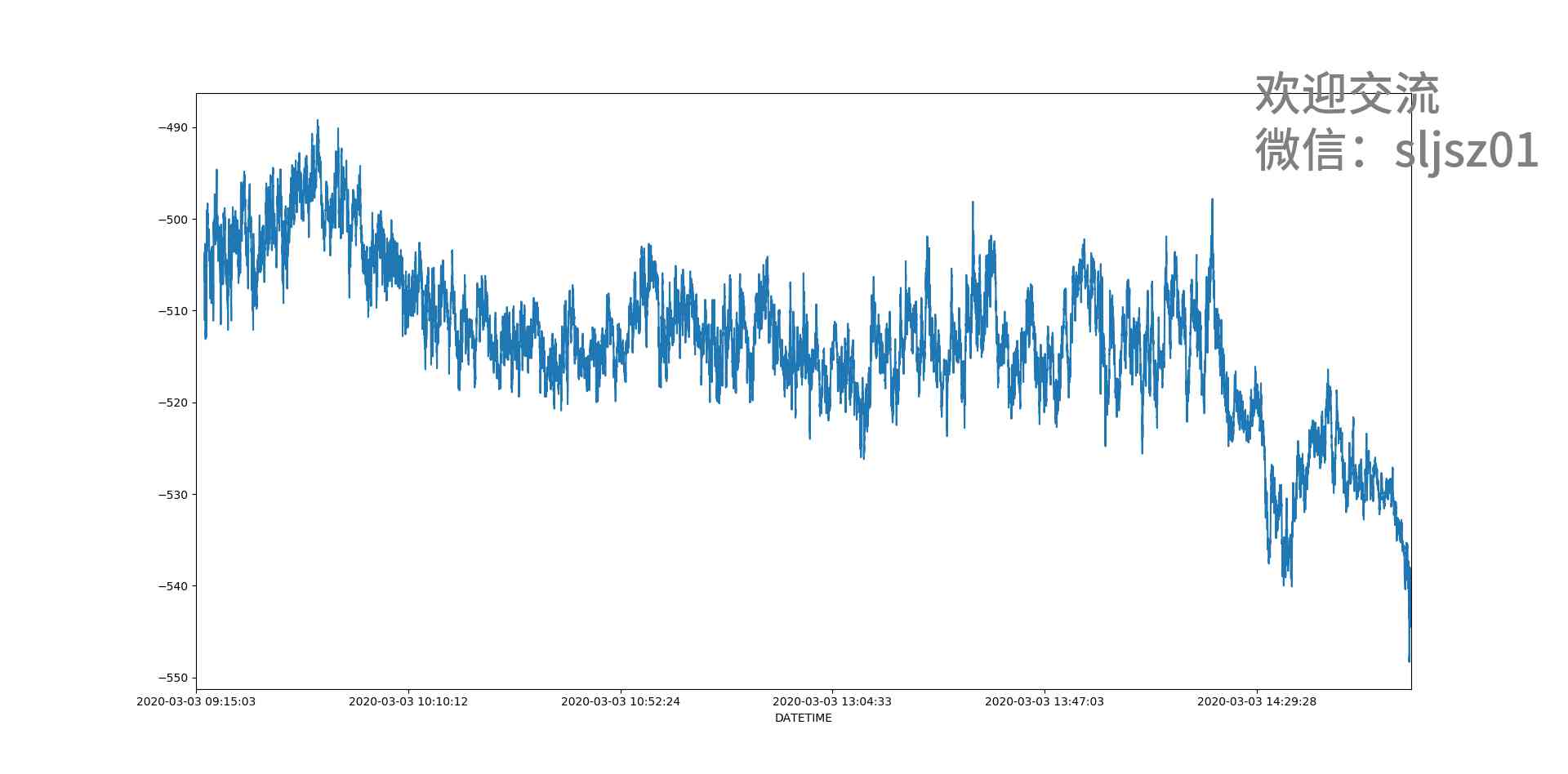

I'm afraid that the spread sequence calculation of arbitrage strategy is not as simple as you think

axios学习笔记(二):轻松弄懂XHR的使用及如何封装简易axios

前端基础牢记的一些操作-Github仓库管理

关于Kubernetes 与 OAM 构建统一、标准化的应用管理平台知识!(附网盘链接)

多机器人行情共享解决方案

Filecoin主网上线以来Filecoin矿机扇区密封到底是什么意思

What is the side effect free method? How to name it? - Mario

EOS创始人BM: UE,UBI,URI有什么区别?

使用 Iceberg on Kubernetes 打造新一代云原生数据湖

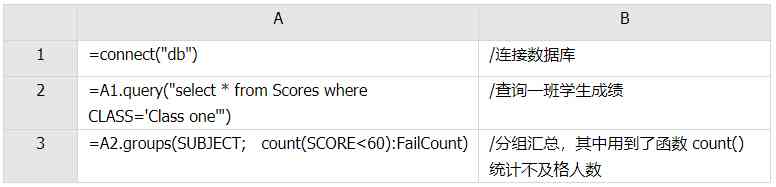

Examples of unconventional aggregation

随机推荐

Summary of common string algorithms

This article will introduce you to jest unit test

前端工程师需要懂的前端面试题(c s s方面)总结(二)

关于Kubernetes 与 OAM 构建统一、标准化的应用管理平台知识!(附网盘链接)

[JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor

至联云分享:IPFS/Filecoin值不值得投资?

钻石标准--Diamond Standard

Want to do read-write separation, give you some small experience

一篇文章带你了解CSS3图片边框

Do not understand UML class diagram? Take a look at this edition of rural love class diagram, a learn!

xmppmini 專案詳解:一步一步從原理跟我學實用 xmpp 技術開發 4.字串解碼祕笈與訊息包

5.5 controlleradvice notes - SSM in depth analysis and project practice

Arrangement of basic knowledge points

一篇文章带你了解CSS 渐变知识

Just now, I popularized two unique skills of login to Xuemei

Classical dynamic programming: complete knapsack problem

基於MVC的RESTFul風格API實戰

6.2 handleradapter adapter processor (in-depth analysis of SSM and project practice)

Python3 e-learning case 4: writing web proxy

Thoughts on interview of Ali CCO project team