
It is really necessary to build a distributed ID generation service

2020-11-06 01:31:00 【Yin Jihuan】

Contents

- Background

- Introduction to Leaf snowflake mode

- Introduction to Leaf segment mode

- Adapting Leaf to support RPC

Background

No bragging, no exaggeration: ID generation comes up in a lot of places in a project. For example, when a business operation needs to be idempotent and no existing business field can serve as a unique identifier, you have to generate one. I'm sure you're familiar with this scenario.

Most of the time, for convenience, people just write a simple ID generation utility class and use it directly. Teams that do a better job may package it as a separate JAR for other projects to depend on; those that don't will probably end up with N copies of the same code.

Building a standalone ID generation service is well worth it. Of course, we don't have to reinvent the wheel: there are ready-made open source options that can be used directly. If you have enough people and money is no object, building your own is fine too.

Today I'd like to introduce Leaf, Meituan's open source ID generation framework, and extend it a little by exposing and calling its service over RPC to improve the performance of fetching IDs.

Introduction to Leaf

Leaf originally grew out of the need to generate order IDs for various lines of business. In Meituan's early days, some businesses generated IDs directly with database auto-increment, some generated them through a Redis cache, and some simply used UUIDs. Each of these approaches has its own problems, so the team decided to implement a distributed ID generation service to meet these requirements.

For Leaf's design document, see: https://tech.meituan.com/2017/04/21/mt-leaf.html

Leaf currently serves many of Meituan-Dianping's internal business lines, including finance, catering, food delivery, hotel and travel, and Maoyan Movies. On a 4C8G VM, called through the company's RPC framework, load tests show close to 50,000 QPS with a TP999 of 1 ms.

snowflake mode

snowflake is Twitter's open source distributed ID generation algorithm, widely used wherever IDs need to be generated. Leaf supports generating IDs this way too.

The steps are as follows:

Set leaf.snowflake.enable=true in the configuration to turn on snowflake mode.

Set leaf.snowflake.zk.address and leaf.snowflake.port in the configuration to your own Zookeeper address and port.

You may be wondering why it depends on Zookeeper.

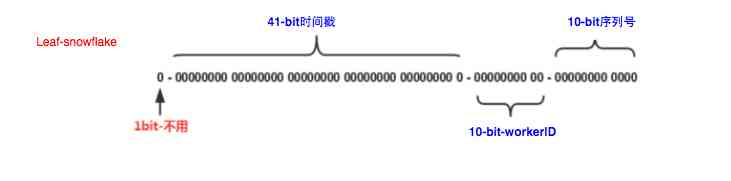

That's because a snowflake ID includes a 10-bit workerId, as the figure shows.
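To make the role of those 10 bits concrete, here is a minimal sketch of the classic snowflake layout: a 41-bit millisecond timestamp, the 10-bit workerId and a 12-bit per-millisecond sequence packed into one long. The class name `SnowflakeLayout` and the epoch value are assumptions made for this example; this is not Leaf's own code.

```java
/** Illustrative sketch of the classic snowflake bit layout; not Leaf's actual code. */
final class SnowflakeLayout {
    static final int WORKER_ID_BITS = 10;   // the 10-bit workerId discussed in the text
    static final int SEQUENCE_BITS = 12;    // counter within one millisecond
    static final long MAX_WORKER_ID = ~(-1L << WORKER_ID_BITS); // 1023
    static final long MAX_SEQUENCE = ~(-1L << SEQUENCE_BITS);   // 4095
    static final int WORKER_ID_SHIFT = SEQUENCE_BITS;
    static final int TIMESTAMP_SHIFT = SEQUENCE_BITS + WORKER_ID_BITS;
    // Hypothetical custom epoch (2020-01-01T00:00:00Z), chosen only for this example.
    static final long EPOCH_MILLIS = 1577836800000L;

    /** Packs timestamp, workerId and sequence into a single 64-bit ID. */
    static long compose(long timestampMillis, long workerId, long sequence) {
        if (workerId < 0 || workerId > MAX_WORKER_ID) {
            throw new IllegalArgumentException("workerId out of range: " + workerId);
        }
        if (sequence < 0 || sequence > MAX_SEQUENCE) {
            throw new IllegalArgumentException("sequence out of range: " + sequence);
        }
        return ((timestampMillis - EPOCH_MILLIS) << TIMESTAMP_SHIFT)
                | (workerId << WORKER_ID_SHIFT)
                | sequence;
    }

    static long timestampOf(long id) {
        return (id >> TIMESTAMP_SHIFT) + EPOCH_MILLIS;
    }

    static long workerIdOf(long id) {
        return (id >> WORKER_ID_SHIFT) & MAX_WORKER_ID;
    }

    static long sequenceOf(long id) {
        return id & MAX_SEQUENCE;
    }
}
```

Two nodes that generate an ID in the same millisecond with the same sequence differ only in the workerId bits, which is why every node needs its own workerId.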

Generally, if there are only a few service instances, setting the workerId manually is fine. Some frameworks use a convention-based approach instead, for example deriving the workerId from the IP address or from the last few digits of the hostname, configuring it manually on the machine, or passing it in when the program starts.
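As an illustration of the convention-based approach, the sketch below derives a workerId from the low 10 bits of an IPv4 address. The class `IpWorkerId` and the choice of bits are assumptions for this example, not something taken from Leaf or any particular framework.

```java
/** Hypothetical helper: derive a 10-bit workerId from an IPv4 address. */
final class IpWorkerId {
    static int fromIpv4(String ipv4) {
        String[] parts = ipv4.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + ipv4);
        }
        int third = parseOctet(parts[2]);
        int fourth = parseOctet(parts[3]);
        // Keep only the low 10 bits of the last two octets.
        return ((third << 8) | fourth) & 0x3FF;
    }

    private static int parseOctet(String text) {
        int value = Integer.parseInt(text);
        if (value < 0 || value > 255) {
            throw new IllegalArgumentException("octet out of range: " + text);
        }
        return value;
    }
}
```

The weakness of this kind of convention is that it can collide: with this sketch, 192.168.1.10 and 10.0.5.10 both map to 266, so it only works when the network layout rules such pairs out.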

To simplify workerId configuration, Leaf uses Zookeeper to generate it: it relies on Zookeeper's persistent sequential nodes to assign a workerId to each snowflake node automatically.
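When a sequential node is created, Zookeeper appends a 10-digit, zero-padded counter to the node name, and that counter can serve as the workerId. The sketch below shows only the parsing step; the node name format `ip:port-sequence` and the class `SequentialNodeWorkerId` are assumptions for illustration, and no Zookeeper client is involved.

```java
/** Hypothetical helper: read a workerId from a Zookeeper sequential node name. */
final class SequentialNodeWorkerId {
    static final int MAX_WORKER_ID = 1023; // largest value that fits in 10 bits

    static int parse(String nodeName) {
        int dash = nodeName.lastIndexOf('-');
        if (dash < 0 || dash == nodeName.length() - 1) {
            throw new IllegalArgumentException("no sequence suffix in: " + nodeName);
        }
        // Zookeeper's suffix is zero-padded, e.g. "0000000003".
        int sequence = Integer.parseInt(nodeName.substring(dash + 1));
        if (sequence < 0 || sequence > MAX_WORKER_ID) {
            throw new IllegalStateException("sequence does not fit in 10 bits: " + sequence);
        }
        return sequence;
    }
}
```

For example, `SequentialNodeWorkerId.parse("192.168.1.10:8080-0000000003")` yields 3. Because the node is persistent, a restarted instance can look up its existing node and keep the same workerId.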

If your company doesn't use Zookeeper and you don't want to deploy it just for Leaf, you can change this logic in the source code, for example by providing a service that generates sequential IDs to take Zookeeper's place.

segment mode

segment is Leaf's database-based ID generation scheme. If the volume is small, you can simply use MySQL's auto-increment ID to get increasing IDs.

Leaf is also based on MySQL, but it adds many optimizations. Here is a brief introduction to how segment mode works.

First, we need to add a table to the database to store ID-related information.

    CREATE TABLE `leaf_alloc` (
      `biz_tag` varchar(128) NOT NULL DEFAULT '',
      `max_id` bigint(20) NOT NULL DEFAULT '1',
      `step` int(11) NOT NULL,
      `description` varchar(256) DEFAULT NULL,
      `update_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
      PRIMARY KEY (`biz_tag`)
    ) ENGINE=InnoDB;

biz_tag distinguishes business types, such as orders and payments. If performance requirements later force you to scale out the database, you only need to shard databases and tables by biz_tag.

max_id is the maximum value of the ID segment currently allocated to the biz_tag.

step is the length of the segment allocated each time.

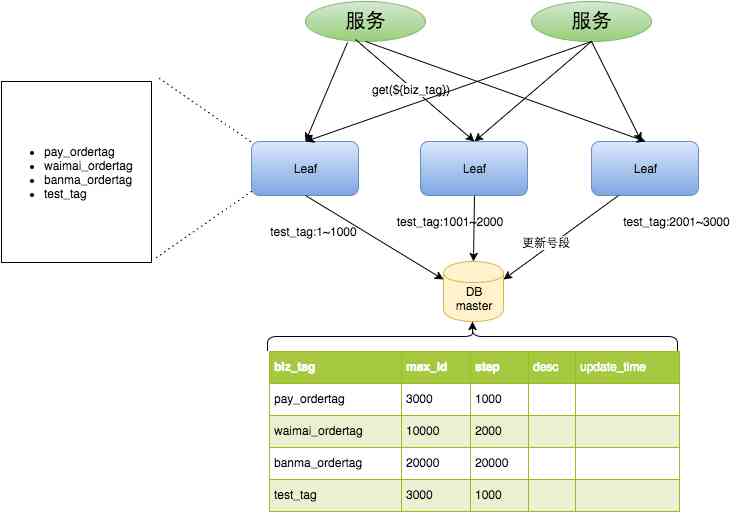

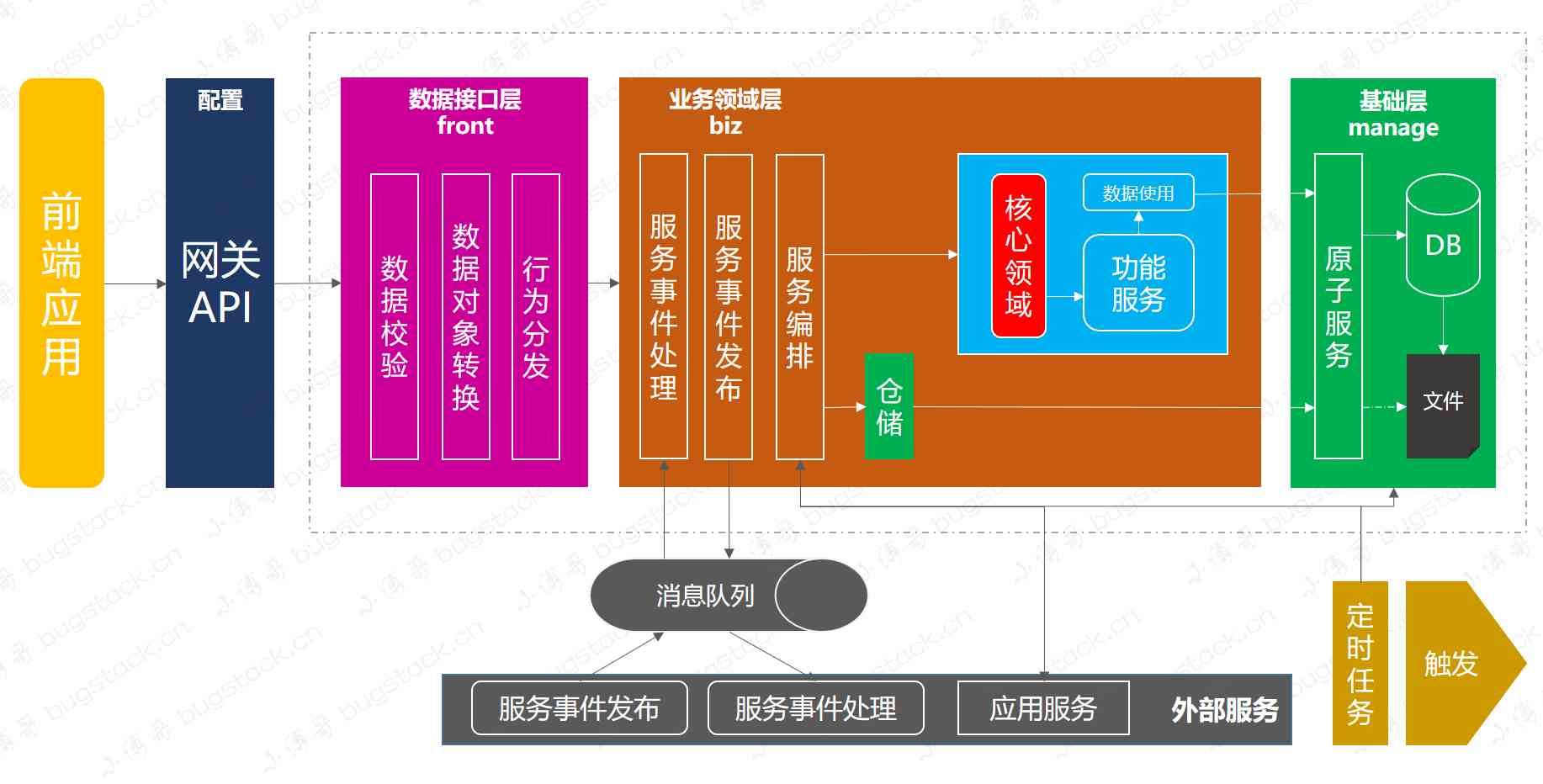

The figure shows the architecture of segment mode.

As the figure shows, when multiple services fetch IDs from Leaf, each passes in its own biz_tag. The biz_tags are isolated from one another and do not affect each other.

Suppose Leaf has three nodes. When the first request for test_tag reaches Leaf1, Leaf1's ID range is 1~1000.

When the second request for test_tag reaches Leaf2, Leaf2's ID range is 1001~2000.

When the third request for test_tag reaches Leaf3, Leaf3's ID range is 2001~3000.
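The three-node example can be reproduced with a small in-memory stand-in for the leaf_alloc table. This is only a sketch: the class `LeafAllocTable` and its methods are hypothetical, and the `allocate` method models what is assumed to be an atomic "add step to max_id, then read the row back" on the database side.

```java
import java.util.HashMap;
import java.util.Map;

/** In-memory stand-in for the leaf_alloc table; illustrative only. */
final class LeafAllocTable {
    // biz_tag -> {max_id, step}
    private final Map<String, long[]> rows = new HashMap<>();

    synchronized void insert(String bizTag, long maxId, long step) {
        rows.put(bizTag, new long[] {maxId, step});
    }

    /**
     * Claims the next segment for a biz_tag, as one atomic step.
     * Returns {firstId, lastId}, both inclusive.
     */
    synchronized long[] allocate(String bizTag) {
        long[] row = rows.get(bizTag);
        if (row == null) {
            throw new IllegalArgumentException("unknown biz_tag: " + bizTag);
        }
        row[0] += row[1]; // max_id = max_id + step
        return new long[] {row[0] - row[1], row[0] - 1};
    }
}
```

With a row inserted as `("test_tag", 1, 1000)`, three successive `allocate("test_tag")` calls return 1~1000, 1001~2000 and 2001~3000, regardless of which Leaf node makes each call.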

Once Leaf1 knows that its ID range for test_tag is 1~1000, subsequent requests for test_tag IDs are served by incrementing from 1 in memory, which is fast. There is no need to go to the database every time an ID is fetched.
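Handing out IDs from a range held in memory can be as simple as an atomic counter. The sketch below is a minimal illustration; the class `InMemorySegment` and the convention of returning -1 when the range is exhausted are assumptions, not Leaf's API.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical in-memory ID range, e.g. 1~1000 for one biz_tag. */
final class InMemorySegment {
    private final AtomicLong next;
    private final long lastId;

    InMemorySegment(long firstId, long lastId) {
        this.next = new AtomicLong(firstId);
        this.lastId = lastId;
    }

    /** Returns the next ID, or -1 once the range is used up. */
    long nextId() {
        long id = next.getAndIncrement();
        return id <= lastId ? id : -1L;
    }
}
```

Each call is a single atomic increment with no I/O, which is where the performance comes from; the database is only touched once per step IDs.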

Question 1

Someone might ask: if concurrency is high and the 1000-ID segment is used up, the node has to apply for the next range. Requests that arrive in the meantime will block their threads, because the new segment has not yet come back from the DB.

Don't worry. Leaf has optimized this case: it does not wait until the IDs are used up before applying again, but applies for the next range before the current one runs out. For very high concurrency, you can also simply increase step.
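The idea of applying early can be sketched with a standby range that is fetched once part of the current range has been consumed. Everything here is an assumption made for illustration: the class `PrefetchingIdSource`, the `prefetchRatio` parameter and the `allocator` callback are hypothetical, and the prefetch runs inline to keep the example deterministic, whereas a real service would do it on a background thread so callers never wait.

```java
import java.util.function.Supplier;

/** Illustrative sketch of "apply for the next range before this one runs out". */
final class PrefetchingIdSource {
    private final Supplier<long[]> allocator; // hypothetical: returns {firstId, lastId}, inclusive
    private final double prefetchRatio;       // hypothetical: used fraction that triggers a prefetch
    private long first;
    private long next;
    private long last;
    private long[] standby;                   // next range, already fetched, or null
    int allocations;                          // number of ranges requested so far

    PrefetchingIdSource(Supplier<long[]> allocator, double prefetchRatio) {
        this.allocator = allocator;
        this.prefetchRatio = prefetchRatio;
        use(fetch());
    }

    private long[] fetch() {
        allocations++;
        return allocator.get();
    }

    private void use(long[] range) {
        first = range[0];
        next = range[0];
        last = range[1];
    }

    synchronized long nextId() {
        if (next > last) {
            // Current range exhausted: switch to the standby range if there is one.
            use(standby != null ? standby : fetch());
            standby = null;
        }
        long id = next++;
        long used = next - first;
        long size = last - first + 1;
        if (standby == null && used >= size * prefetchRatio) {
            standby = fetch(); // inline here; a real service would load this asynchronously
        }
        return id;
    }
}
```

With ranges of 10 IDs and a ratio of 0.5, the second range is requested when ID 5 is handed out, so it is already waiting when ID 10 is used up.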

Question 2

Someone might also ask: what happens if a Leaf node goes down?

First, Leaf is deployed as a cluster and generally registers with a registry so that other services can discover it. If one node goes down, there are N others. The real question is whether fetching IDs is affected: will there be duplicate IDs?

The answer is no. Suppose Leaf1's range is 1~1000 and it goes down after handing out ID 100. After the service restarts, it applies for the next range and never uses 1~1000 again. The remaining IDs 101~1000 are simply skipped, so no duplicate IDs appear.

Adapting Leaf to support RPC

If you have a high call volume and want higher performance, you can extend Leaf yourself and expose it over an RPC protocol.

First, upgrade Leaf's Spring version to 5.1.8.RELEASE by modifying the parent pom.xml.

<spring.version>5.1.8.RELEASE</spring.version>

Then upgrade Spring Boot to 2.1.6.RELEASE by modifying leaf-server's pom.xml.

<spring-boot-dependencies.version>2.1.6.RELEASE</spring-boot-dependencies.version>

You also need to add the Nacos dependencies to leaf-server's pom, because kitty-cloud uses Nacos. Dubbo is needed as well, to expose the RPC service.

    <dependency>
        <groupId>com.cxytiandi</groupId>
        <artifactId>kitty-spring-cloud-starter-nacos</artifactId>
        <version>1.0-SNAPSHOT</version>
    </dependency>
    <dependency>
        <groupId>com.cxytiandi</groupId>
        <artifactId>kitty-spring-cloud-starter-dubbo</artifactId>
        <version>1.0-SNAPSHOT</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-core</artifactId>
    </dependency>

Create a bootstrap.properties file under resources and add the Nacos-related configuration.

    spring.application.name=LeafSnowflake
    dubbo.scan.base-packages=com.sankuai.inf.leaf.server.controller
    dubbo.protocol.name=dubbo
    dubbo.protocol.port=20086
    dubbo.registry.address=spring-cloud://localhost
    spring.cloud.nacos.discovery.server-addr=47.105.66.210:8848
    spring.cloud.nacos.config.server-addr=${spring.cloud.nacos.discovery.server-addr}

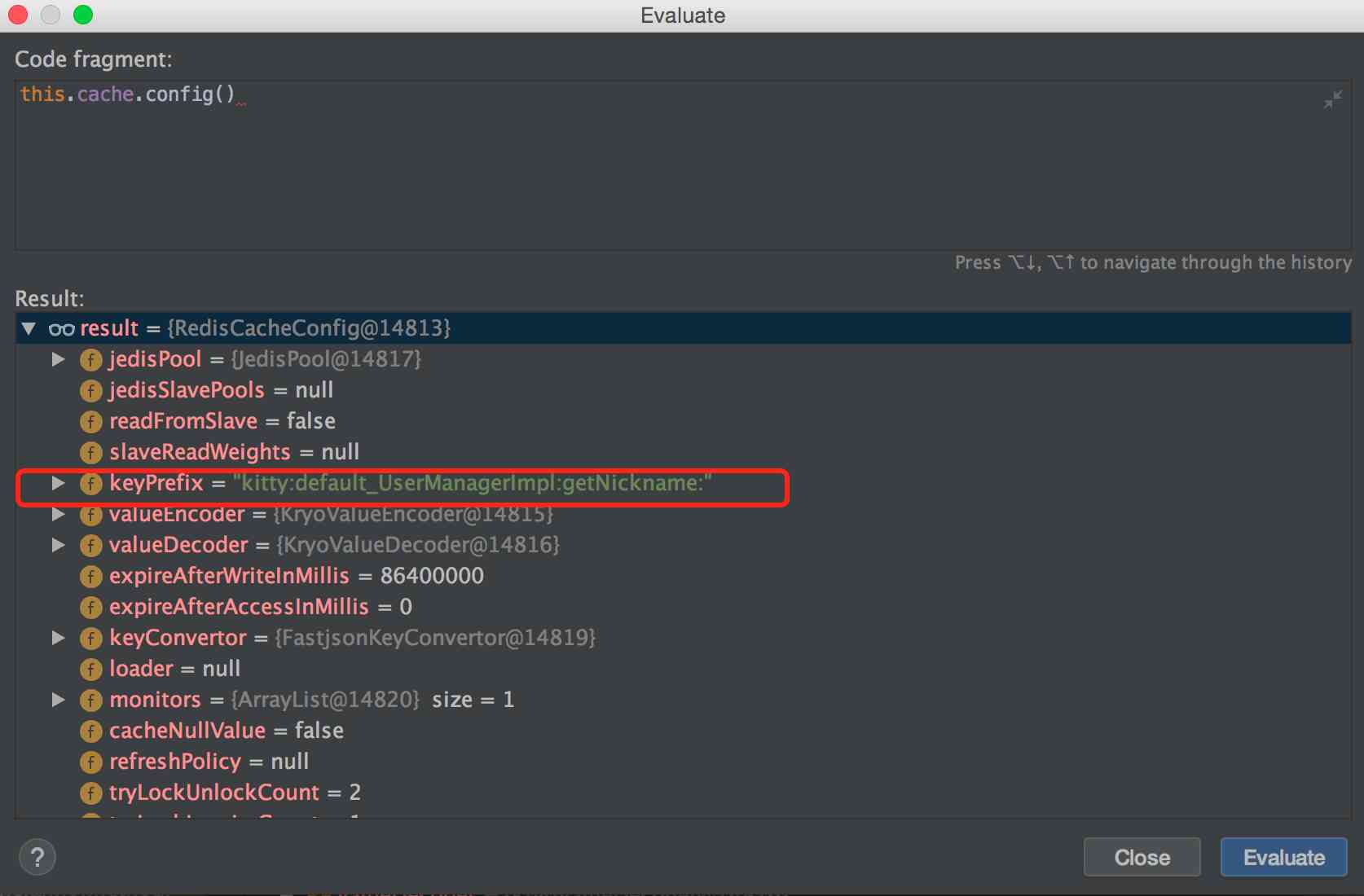

By default, Leaf exposes its REST service in LeafController. The requirement now is to expose an RPC service in addition to REST, so we extract two interfaces: one for Segment mode and one for Snowflake mode.

Segment mode client

    import org.springframework.cloud.openfeign.FeignClient;
    import org.springframework.web.bind.annotation.PathVariable;
    import org.springframework.web.bind.annotation.RequestMapping;

    /**
     * Distributed ID service client - Segment mode
     *
     * Author: Yin Jihuan
     * Personal WeChat: jihuan900
     * WeChat official account: Ape world
     * GitHub: https://github.com/yinjihuan
     * About the author: http://cxytiandi.com/about
     * Date: 2020-04-06 16:20
     */
    @FeignClient("${kitty.id.segment.name:LeafSegment}")
    public interface DistributedIdLeafSegmentRemoteService {

        // Returns the next ID for the given biz_tag key.
        @RequestMapping(value = "/api/segment/get/{key}")
        String getSegmentId(@PathVariable("key") String key);
    }

Snowflake mode client

    import org.springframework.cloud.openfeign.FeignClient;
    import org.springframework.web.bind.annotation.PathVariable;
    import org.springframework.web.bind.annotation.RequestMapping;

    /**
     * Distributed ID service client - Snowflake mode
     *
     * Author: Yin Jihuan
     * Personal WeChat: jihuan900
     * WeChat official account: Ape world
     * GitHub: https://github.com/yinjihuan
     * About the author: http://cxytiandi.com/about
     * Date: 2020-04-06 16:20
     */
    @FeignClient("${kitty.id.snowflake.name:LeafSnowflake}")
    public interface DistributedIdLeafSnowflakeRemoteService {

        // Returns a snowflake ID for the given key.
        @RequestMapping(value = "/api/snowflake/get/{key}")
        String getSnowflakeId(@PathVariable("key") String key);
    }

Callers can choose between RPC and HTTP. To use RPC, inject the client with @Reference; to use HTTP, inject it with @Autowired.

Finally, modify LeafController to expose both protocols at the same time.

    // @Service here is Dubbo's annotation (it carries version and group), not Spring's.
    @Service(version = "1.0.0", group = "default")
    @RestController
    public class LeafController implements DistributedIdLeafSnowflakeRemoteService, DistributedIdLeafSegmentRemoteService {

        private Logger logger = LoggerFactory.getLogger(LeafController.class);

        @Autowired
        private SegmentService segmentService;

        @Autowired
        private SnowflakeService snowflakeService;

        @Override
        public String getSegmentId(@PathVariable("key") String key) {
            return get(key, segmentService.getId(key));
        }

        @Override
        public String getSnowflakeId(@PathVariable("key") String key) {
            return get(key, snowflakeService.getId(key));
        }

        // Shared handling for both modes: validate the key, then unwrap the Result.
        private String get(String key, Result result) {
            if (key == null || key.isEmpty()) {
                throw new NoKeyException();
            }
            if (result.getStatus().equals(Status.EXCEPTION)) {
                throw new LeafServerException(result.toString());
            }
            return String.valueOf(result.getId());
        }
    }

Extended source for reference: https://github.com/yinjihuan/Leaf/tree/rpc_support

If you're interested, give it a star: https://github.com/yinjihuan/kitty

About the author: Yin Jihuan is a simple technology enthusiast, the author of 《Spring Cloud Microservices - Full stack technology and case analysis》 and 《Spring Cloud Microservices introduction, Actual combat and advanced》, and the founder of the official account Ape world. Personal WeChat: jihuan900. Feel free to get in touch.

I have compiled a complete set of learning materials. If you're interested, search for 「Ape world」 on WeChat and reply with the keyword 「Learning materials」 to get the material I've put together on Spring Cloud, Spring Cloud Alibaba, Sharding-JDBC database and table sharding, the XXL-JOB task scheduling framework, MongoDB, web crawlers and more.

Copyright notice

This article was written by [Yin Jihuan]. Please include a link to the original when reposting. Thank you.

边栏推荐

- This article will introduce you to jest unit test

- 百万年薪,国内工作6年的前辈想和你分享这四点

- Python Jieba segmentation (stuttering segmentation), extracting words, loading words, modifying word frequency, defining thesaurus

- Electron application uses electronic builder and electronic updater to realize automatic update

- 用一个例子理解JS函数的底层处理机制

- Classical dynamic programming: complete knapsack problem

- 合约交易系统开发|智能合约交易平台搭建

- 零基础打造一款属于自己的网页搜索引擎

- Mac installation hanlp, and win installation and use

- Individual annual work summary and 2019 work plan (Internet)

猜你喜欢

一篇文章带你了解CSS3圆角知识

Jetcache buried some of the operation, you can't accept it

Flink的DataSource三部曲之一:直接API

Character string and memory operation function in C language

华为云“四个可靠”的方法论

至联云分享:IPFS/Filecoin值不值得投资?

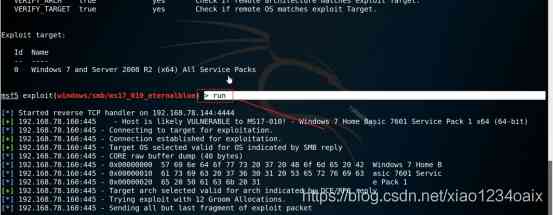

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

A brief history of neural networks

一篇文章带你了解HTML表格及其主要属性介绍

If PPT is drawn like this, can the defense of work report be passed?

随机推荐

Save the file directly to Google drive and download it back ten times faster

Arrangement of basic knowledge points

What to do if you are squeezed by old programmers? I don't want to quit

React design pattern: in depth understanding of react & Redux principle

前端基础牢记的一些操作-Github仓库管理

百万年薪,国内工作6年的前辈想和你分享这四点

IPFS/Filecoin合法性:保护个人隐私不被泄露

零基础打造一款属于自己的网页搜索引擎

How to become a data scientist? - kdnuggets

Analysis of react high order components

2019年的一个小目标,成为csdn的博客专家,纪念一下

What problems can clean architecture solve? - jbogard

一篇文章教会你使用Python网络爬虫下载酷狗音乐

在大规模 Kubernetes 集群上实现高 SLO 的方法

5.4 static resource mapping

6.1.1 handlermapping mapping processor (1) (in-depth analysis of SSM and project practice)

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

How to use parameters in ES6

The difference between gbdt and XGB, and the mathematical derivation of gradient descent method and Newton method

Word segmentation, naming subject recognition, part of speech and grammatical analysis in natural language processing