Accident Metric Statistics

2022-07-06 02:18:00 【小胖超凶哦!】

```
[… ~]# hive

Logging initialized using configuration in jar:file:/usr/local/soft/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties
hive> select sgfssj from dwd.base_acd_file order by sgfssj limit 10;
2006-01-22 09:30:00.0
2006-12-21 00:15:00.0
2006-12-21 13:50:00.0
2006-12-21 16:30:00.0
2006-12-21 18:02:00.0
2006-12-22 11:30:00.0
2006-12-22 13:30:00.0
2006-12-22 14:30:00.0
2006-12-22 17:30:00.0
2006-12-22 23:55:00.0
Time taken: 28.559 seconds, Fetched: 10 row(s)
```

```
hive> select sgfssj from dwd.base_acd_file order by sgfssj desc limit 10;
OK
2020-10-14 17:28:00.0
2020-10-14 11:16:00.0
2020-10-13 23:06:00.0
2020-10-13 19:25:00.0
2020-10-13 14:12:00.0
2020-10-13 12:10:00.0
2020-10-12 16:04:00.0
2020-10-12 11:50:00.0
2020-10-12 07:38:00.0
2020-10-12 07:30:00.0
Time taken: 21.673 seconds, Fetched: 10 row(s)
```

```
hive> select current_date;
OK
2022-07-05
Time taken: 0.459 seconds, Fetched: 1 row(s)
```

```
hive> select year(sgfssj),count(*) from dwd.base_acd_file group by year(sgfssj);
2006 43
2007 1082
2008 1070
2009 1377
2010 1579
2011 2604
2012 2117
2013 1802
2014 1936
2015 1991
2016 2094
2017 1933
2018 2373
2019 2617
2020 1930
Time taken: 22.075 seconds, Fetched: 15 row(s)
```
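
The earliest and latest `sgfssj` values returned earlier (2006-01-22 and 2020-10-14) mean the first and last years in this per-year count are only partly inside the data window, so their totals (43 and 1930) are not directly comparable with full years. A small Python sketch that counts how many calendar days of a year fall inside that window, using only those two observed dates (`covered_days` and `days_in_year` are illustrative names, not part of the original job):

```python
from datetime import date

def covered_days(year, first, last):
    """Number of days of `year` inside the inclusive range [first, last]."""
    start = max(date(year, 1, 1), first)
    end = min(date(year, 12, 31), last)
    return max((end - start).days + 1, 0)

def days_in_year(year):
    return (date(year, 12, 31) - date(year, 1, 1)).days + 1

# Boundary dates observed in the min/max queries of the transcript
first_seen = date(2006, 1, 22)
last_seen = date(2020, 10, 14)

for y in (2006, 2019, 2020):
    print(y, covered_days(y, first_seen, last_seen), "of", days_in_year(y))
# 2006 344 of 365
# 2019 365 of 365
# 2020 288 of 366
```

This only measures the window, not whether every day in it actually has records.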

```
hive> select substr(sgfssj,1,10)
> ,count(1)as dr_sgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10);
Time taken: 45.99 seconds, Fetched: 4966 row(s)
```
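
Hive's `substr(str, pos, len)` is 1-based. Applied to the timestamp's string form (for example `2006-01-22 09:30:00.0`), `substr(sgfssj,1,10)` yields the `yyyy-MM-dd` date used as the grouping key `tjrq`; the later queries take `substr(tjrq,1,4)` as the year and `substr(tjrq,6,5)` as `MM-dd`. Hive's built-in `to_date(sgfssj)` would also return the date part. A Python sketch of the 1-based slicing and the per-day `count(1)`, where `hive_substr` is a hypothetical helper that only handles positive positions and the timestamps are copied from the output above:

```python
from collections import Counter

def hive_substr(s, pos, length):
    """Hypothetical mirror of Hive substr(): 1-based start, fixed length (pos >= 1 only)."""
    return s[pos - 1:pos - 1 + length]

# Sample sgfssj values taken from the earlier output
sgfssj = [
    "2006-12-21 00:15:00.0",
    "2006-12-21 13:50:00.0",
    "2006-12-22 11:30:00.0",
]

# GROUP BY substr(sgfssj,1,10) with count(1) AS dr_sgs
dr_sgs = Counter(hive_substr(ts, 1, 10) for ts in sgfssj)
print(dr_sgs)

tjrq = "2006-12-21"
print(hive_substr(tjrq, 1, 4), hive_substr(tjrq, 6, 5))  # 2006 12-21
```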

```
hive> select t1.tjrq
> ,t1.dr_sgs
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1;
Time taken: 45.99 seconds, Fetched: 4966 row(s)
```

```
hive> select t1.tjrq
> ,t1.dr_sgs
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1;
Time taken: 73.903 seconds, Fetched: 4966 row(s)
```
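
In the two queries above, `jn_sgs` (presumably 今年事故数, "accidents this year") is `sum(...) over (partition by year)` with no `order by`, so every row carries the full-year total rather than a running year-to-date figure; putting `order by t1.tjrq` inside the `over (...)` would make it cumulative. `qntq_sgs` (presumably 去年同期, "same period last year") is `lag(dr_sgs,1,1)` partitioned by `MM-dd` and ordered by year. Because `t1` only contains days with at least one accident, `lag` returns the count from the most recent earlier year that has a row for that date, which is not necessarily the previous year, and it returns the default 1 when no earlier row exists. The Python sketch below reproduces both columns, plus the running-total alternative, on made-up daily counts; `window_columns` is an illustrative name:

```python
from collections import defaultdict

def window_columns(daily):
    """daily: {'yyyy-MM-dd': dr_sgs}. Returns (tjrq, dr_sgs, jn_sgs, qntq_sgs, ytd) rows."""
    # sum(dr_sgs) over (partition by year): no ORDER BY, so the full-year total
    year_total = defaultdict(int)
    for d, n in daily.items():
        year_total[d[:4]] += n

    # lag(dr_sgs,1,1) over (partition by MM-dd order by year): previous row, default 1
    qntq = {}
    last_seen = {}  # MM-dd -> dr_sgs of the latest earlier year seen so far
    for d in sorted(daily):  # 'yyyy-MM-dd' strings sort chronologically
        mmdd = d[5:10]
        qntq[d] = last_seen.get(mmdd, 1)
        last_seen[mmdd] = daily[d]

    # For comparison: sum(...) over (partition by year order by tjrq), a running total
    ytd, running, current_year = {}, 0, None
    for d in sorted(daily):
        if d[:4] != current_year:
            current_year, running = d[:4], 0
        running += daily[d]
        ytd[d] = running

    return [(d, daily[d], year_total[d[:4]], qntq[d], ytd[d]) for d in sorted(daily)]

# Hypothetical daily counts; note there is no 2019-03-01 row
daily = {"2017-03-01": 5, "2018-03-01": 7, "2018-03-02": 2, "2020-03-01": 4}
for row in window_columns(daily):
    print(row)
# ('2017-03-01', 5, 5, 1, 5)
# ('2018-03-01', 7, 9, 5, 7)
# ('2018-03-02', 2, 9, 1, 9)
# ('2020-03-01', 4, 4, 7, 4)
```

The last row gets `qntq_sgs = 7` from 2018 because there is no 2019-03-01 row. A strict previous-year comparison would instead need something like a join on the date shifted back one year (Hive and Spark both provide `add_months`), which the original query does not do.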

```
hive> exit;
[… ~]# cd /usr/local/soft/spark-2.4.5/
[… spark-2.4.5]# ls
bin examples LICENSE NOTICE README.md yarn
conf jars licenses python RELEASE
data kubernetes logs R sbin
[… spark-2.4.5]# spark-sql --conf spark.sql.shuffle.partitions=2
```

```
spark-sql> show databases;
22/07/05 11:11:24 INFO codegen.CodeGenerator: Code generated in 227.508135 ms
default
dwd
Time taken: 0.351 seconds, Fetched 2 row(s)
22/07/05 11:11:24 INFO thriftserver.SparkSQLCLIDriver: Time taken: 0.351 seconds, Fetched 2 row(s)
spark-sql> use dwd;
Time taken: 0.041 seconds
22/07/05 11:11:31 INFO thriftserver.SparkSQLCLIDriver: Time taken: 0.041 seconds
spark-sql> show tables;
22/07/05 11:11:41 INFO spark.ContextCleaner: Cleaned accumulator 2
22/07/05 11:11:41 INFO spark.ContextCleaner: Cleaned accumulator 0
22/07/05 11:11:41 INFO spark.ContextCleaner: Cleaned accumulator 1
22/07/05 11:11:41 INFO codegen.CodeGenerator: Code generated in 13.604985 ms
dwd base_acd_file false
dwd base_acd_filehuman false
dwd base_bd_drivinglicense false
dwd base_bd_vehicle false
dwd base_vio_force false
dwd base_vio_surveil false
dwd base_vio_violation false
Time taken: 0.119 seconds, Fetched 7 row(s)
22/07/05 11:11:41 INFO thriftserver.SparkSQLCLIDriver: Time taken: 0.119 seconds, Fetched 7 row(s)
```

```
spark-sql> select tt1.tjrq
> ,tt1.dr_sgs
> ,tt1.jn_sgs
> ,tt1.qntq_sgs
> ,NVL(round((abs(tt1.dr_sgs-tt1.qntq_sgs)/NVL(tt1.qntq_sgs,1))*100,2),0) as tb_sgs
> ,if(tt1.dr_sgs-tt1.qntq_sgs>0,"上升",'下降') as tb_sgs_bj
> from(
> select t1.tjrq
> ,t1.dr_sgs
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1
> )tt1;
Time taken: 0.596 seconds, Fetched 4966 row(s)
22/07/05 11:32:08 INFO thriftserver.SparkSQLCLIDriver: Time taken: 0.596 seconds, Fetched 4966 row(s)
```
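
In the query above, `tb_sgs` (presumably 同比, year-on-year change) is the absolute percentage change `|dr_sgs - qntq_sgs| / qntq_sgs * 100` rounded to two places, and `tb_sgs_bj` carries the direction: 上升 (rise) when the difference is positive, otherwise 下降 (decline). Two consequences of the SQL as written: a day with exactly the same count as its comparison day is labelled 下降, and because `lag(...,1,1)` already defaults to 1 and a daily `count(1)` is never 0, the inner `NVL(tt1.qntq_sgs,1)` never changes the divisor. A Python sketch of the calculation follows; `tb_flag_3way` and its 持平 ("flat") label are a hypothetical extension, not part of the original query. `Decimal` with `ROUND_HALF_UP` is used because Python's built-in `round()` rounds half to even, whereas `round()` in Hive and Spark rounds half up.

```python
from decimal import Decimal, ROUND_HALF_UP

def tb_pct(dr, qntq):
    """round(abs(dr - qntq) / qntq * 100, 2); qntq >= 1 here, so no NULL/zero guard."""
    pct = Decimal(abs(dr - qntq)) * 100 / Decimal(qntq)
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def tb_flag(dr, qntq):
    """Same rule as if(dr - qntq > 0, '上升', '下降'): ties count as 下降 (decline)."""
    return "上升" if dr - qntq > 0 else "下降"

def tb_flag_3way(dr, qntq):
    """Hypothetical variant that reports ties separately as 持平 (flat)."""
    if dr > qntq:
        return "上升"
    if dr < qntq:
        return "下降"
    return "持平"

for dr, qntq in [(7, 5), (4, 7), (5, 5)]:
    print(dr, qntq, tb_pct(dr, qntq), tb_flag(dr, qntq), tb_flag_3way(dr, qntq))
# 7 5 40.00 上升 上升
# 4 7 42.86 下降 下降
# 5 5 0.00 下降 持平
```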

```
spark-sql> select tt1.tjrq
> ,tt1.dr_sgs
> ,tt1.jn_sgs
> ,tt1.qntq_sgs
> ,NVL(round((abs(tt1.dr_sgs-tt1.qntq_sgs)/NVL(tt1.qntq_sgs,1))*100,2),0) as tb_sgs
> ,if(tt1.dr_sgs-tt1.qntq_sgs>0,"上升",'下降') as tb_sgs_bj
> from(
> select t1.tjrq
> ,t1.dr_sgs
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1
> )tt1
> order by tt1.tjrq;
Time taken: 5.46 seconds, Fetched 4966 row(s)
22/07/05 14:36:35 INFO thriftserver.SparkSQLCLIDriver: Time taken: 5.46 seconds, Fetched 4966 row(s)
```

```
spark-sql> select tt1.tjrq
> ,tt1.dr_sgs
> ,tt1.jn_sgs
> ,tt1.qntq_sgs
>
> ,NVL(round((abs(tt1.dr_sgs-tt1.qntq_sgs)/NVL(tt1.qntq_sgs,1))*100,2),0) as tb_sgs
> ,if(tt1.dr_sgs-tt1.qntq_sgs>0,"上升",'下降') as tb_sgs_bj
>
> ,NVL(round((abs(tt1.dr_swsgs-tt1.qntq_swsgs)/NVL(tt1.qntq_swsgs,1))*100,2),0) as tb_swsgs
> ,if(tt1.dr_swsgs-tt1.qntq_swsgs>0,"上升",'下降') as tb_swsgs_bj
> from(
> select t1.tjrq
> ,t1.dr_sgs
> ,t1.dr_swsgs
>
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
>
> ,sum(t1.dr_swsgs)over (partition by substr(t1.tjrq,1,4))as jn_swsgs
> ,lag(t1.dr_swsgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_swsgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> ,sum(if(swrs7>0,1,0))as dr_swsgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1
> )tt1
> order by tt1.tjrq;
Time taken: 5.372 seconds, Fetched 4966 row(s)
22/07/05 15:17:19 INFO thriftserver.SparkSQLCLIDriver: Time taken: 5.372 seconds, Fetched 4966 row(s)
```

```
spark-sql> select tt1.tjrq
> ,tt1.dr_sgs
> ,tt1.jn_sgs
> ,tt1.qntq_sgs
> ,tt1.dr_swsgs
> ,tt1.qntq_swsgs
>
> ,NVL(round((abs(tt1.dr_sgs-tt1.qntq_sgs)/NVL(tt1.qntq_sgs,1))*100,2),0) as tb_sgs
> ,if(tt1.dr_sgs-tt1.qntq_sgs>0,"上升",'下降') as tb_sgs_bj
>
> ,NVL(round((abs(tt1.dr_swsgs-tt1.qntq_swsgs)/NVL(tt1.qntq_swsgs,1))*100,2),0) as tb_swsgs
> ,if(tt1.dr_swsgs-tt1.qntq_swsgs>0,"上升",'下降') as tb_swsgs_bj
> from(
> select t1.tjrq
> ,t1.dr_sgs
> ,t1.dr_swsgs
>
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
>
> ,sum(t1.dr_swsgs)over (partition by substr(t1.tjrq,1,4))as jn_swsgs
> ,lag(t1.dr_swsgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_swsgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> ,sum(if(swrs7>0,1,0))as dr_swsgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1
> )tt1
> order by tt1.tjrq;
Time taken: 4.13 seconds, Fetched 4966 row(s)
22/07/05 15:20:00 INFO thriftserver.SparkSQLCLIDriver: Time taken: 4.13 seconds, Fetched 4966 row(s)
```

```
spark-sql> select tt1.tjrq
> ,tt1.dr_sgs
> ,tt1.jn_sgs
> ,tt1.qntq_sgs
> ,tt1.dr_swsgs
> ,tt1.jn_swsgs
> ,tt1.qntq_swsgs
>
> ,NVL(round((abs(tt1.dr_sgs-tt1.qntq_sgs)/NVL(tt1.qntq_sgs,1))*100,2),0) as tb_sgs
> ,if(tt1.dr_sgs-tt1.qntq_sgs>0,"上升",'下降') as tb_sgs_bj
>
> ,NVL(round((abs(tt1.dr_swsgs-tt1.qntq_swsgs)/NVL(tt1.qntq_swsgs,1))*100,2),0) as tb_swsgs
> ,if(tt1.dr_swsgs-tt1.qntq_swsgs>0,"上升",'下降') as tb_swsgs_bj
> from(
> select t1.tjrq
> ,t1.dr_sgs
> ,t1.dr_swsgs
>
> ,sum(t1.dr_sgs)over (partition by substr(t1.tjrq,1,4))as jn_sgs
> ,lag(t1.dr_sgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_sgs
>
> ,sum(t1.dr_swsgs)over (partition by substr(t1.tjrq,1,4))as jn_swsgs
> ,lag(t1.dr_swsgs,1,1)over (partition by substr(t1.tjrq,6,5)order by substr(t1.tjrq,1,4))as qntq_swsgs
> from(
> select substr(sgfssj,1,10)as tjrq
> ,count(1)as dr_sgs
> ,sum(if(swrs7>0,1,0))as dr_swsgs
> from dwd.base_acd_file
> group by substr(sgfssj,1,10)
> )t1
> )tt1
> order by tt1.tjrq;
Time taken: 5.653 seconds, Fetched 4966 row(s)
22/07/05 15:23:09 INFO thriftserver.SparkSQLCLIDriver: Time taken: 5.653 seconds, Fetched 4966 row(s)
```
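
The last three queries add fatal accidents alongside the total. `dr_swsgs` (presumably 当日死亡事故数, fatal accidents that day) is `sum(if(swrs7>0,1,0))`, where `swrs7` appears to hold a death count. Each accident contributes 1 when that count is positive, so the column counts accidents with at least one death, not deaths (`sum(swrs7)` would give those), and because `if()` with a NULL condition returns its false branch in both Hive and Spark, rows with a NULL `swrs7` add 0. Unlike a daily `count(1)`, `dr_swsgs` can be 0, so `qntq_swsgs` can be 0 as well; `NVL(tt1.qntq_swsgs,1)` does not replace a 0, the division then returns NULL, and the outer `NVL(...,0)` reports `tb_swsgs` as 0 even when fatal accidents rose (the flag still says 上升). A Python sketch of both points with hypothetical records; `tb_swsgs_like_sql` is an illustrative helper and leaves out the rounding step:

```python
from collections import Counter

# Hypothetical (sgfssj, swrs7) records; None stands for a NULL swrs7
records = [
    ("2019-05-01 08:10:00.0", 0),
    ("2019-05-01 12:40:00.0", 2),
    ("2019-05-01 19:05:00.0", None),
    ("2019-05-02 07:30:00.0", 1),
]

dr_sgs, dr_swsgs, deaths = Counter(), Counter(), Counter()
for ts, swrs7 in records:
    day = ts[:10]                  # substr(sgfssj,1,10)
    dr_sgs[day] += 1               # count(1)
    # sum(if(swrs7>0,1,0)): a NULL condition takes the false branch, i.e. 0
    dr_swsgs[day] += 1 if swrs7 is not None and swrs7 > 0 else 0
    deaths[day] += swrs7 or 0      # for contrast, sum(swrs7) would count deaths

def tb_swsgs_like_sql(dr, qntq):
    """Mimics NVL(abs(dr-qntq)/NVL(qntq,1)*100, 0) without rounding.
    Division by zero yields NULL in Hive and Spark 2.x, which NVL turns into 0."""
    if qntq == 0:
        return 0
    return abs(dr - qntq) * 100 / qntq

print(dict(dr_sgs), dict(dr_swsgs), dict(deaths))
print(tb_swsgs_like_sql(2, 0), tb_swsgs_like_sql(3, 2))  # 0 50.0
```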

- Paper notes: limit multi label learning galaxc (temporarily stored, not finished)

- Competition question 2022-6-26

- Concept of storage engine

- 【社区人物志】专访马龙伟:轮子不好用,那就自己造!

- How to generate rich text online

- Redis daemon cannot stop the solution

- Exness: Mercedes Benz's profits exceed expectations, and it is predicted that there will be a supply chain shortage in 2022

- Xshell 7 Student Edition

- 729. 我的日程安排表 I / 剑指 Offer II 106. 二分图

- Initial understanding of pointer variables

猜你喜欢

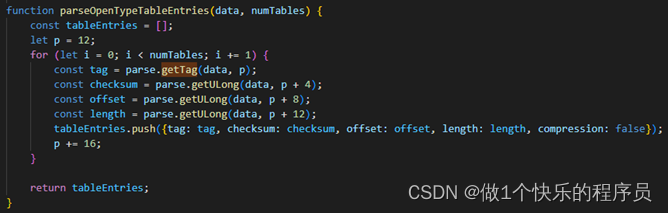

Extracting key information from TrueType font files

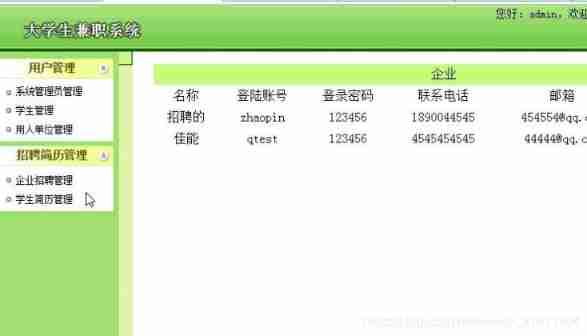

Computer graduation design PHP part-time recruitment management system for College Students

![Grabbing and sorting out external articles -- status bar [4]](/img/1e/2d44f36339ac796618cd571aca5556.png)

Grabbing and sorting out external articles -- status bar [4]

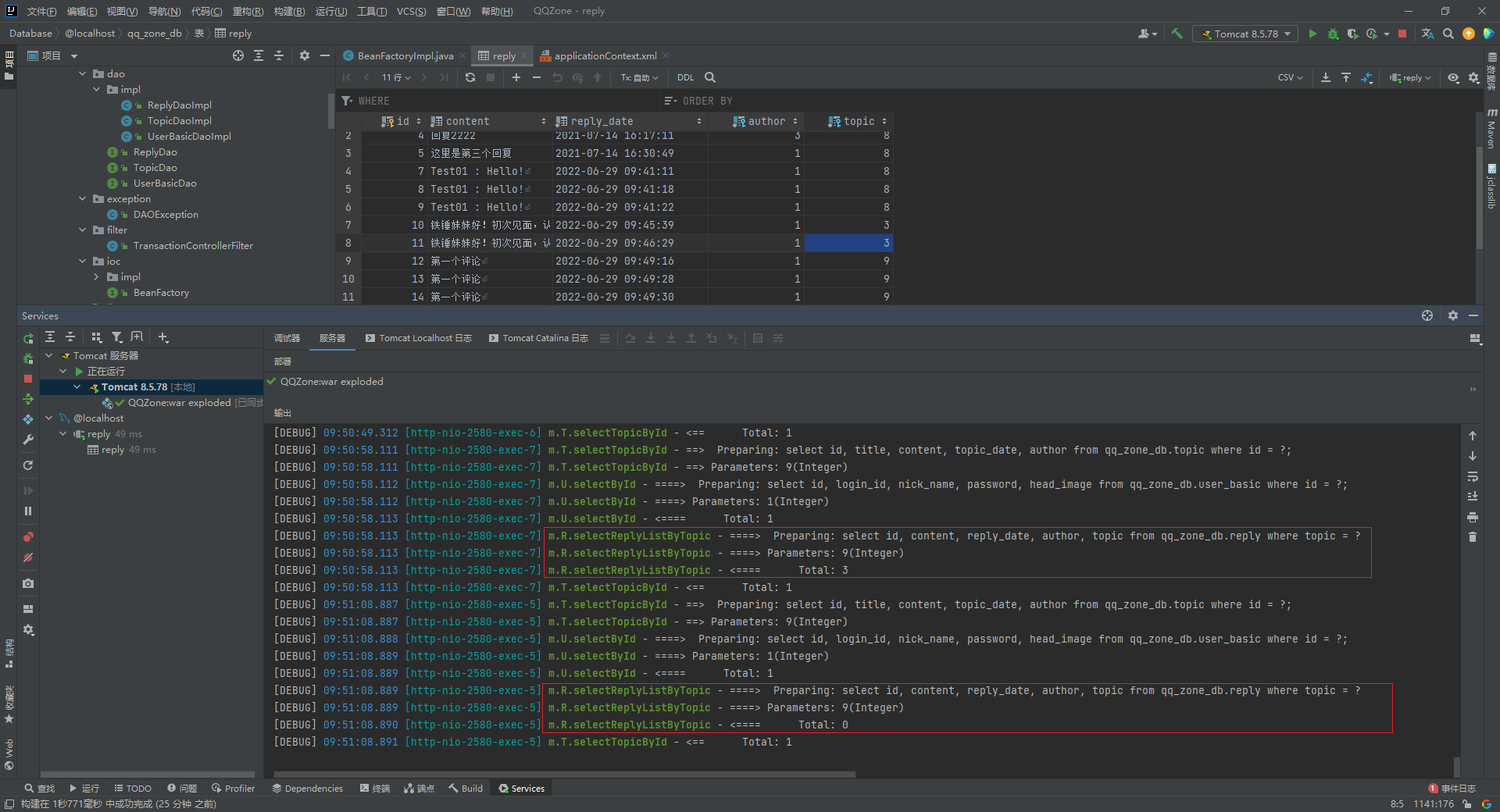

Executing two identical SQL statements in the same sqlsession will result in different total numbers

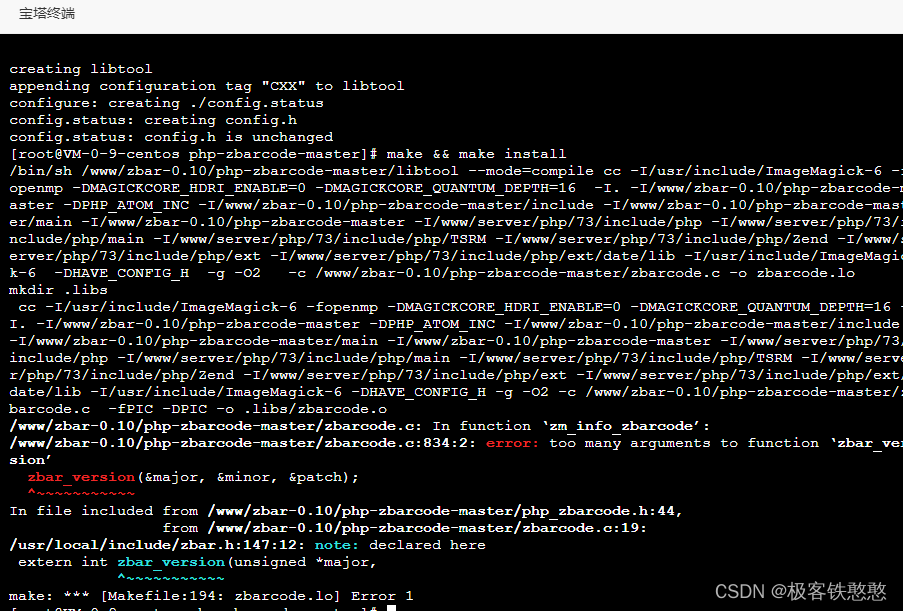

It's wrong to install PHP zbarcode extension. I don't know if any God can help me solve it. 7.3 for PHP environment

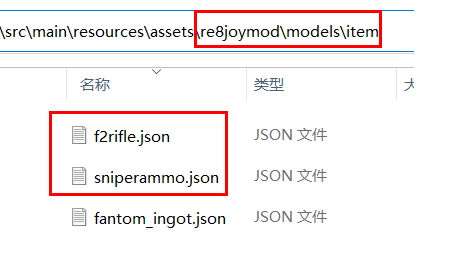

Minecraft 1.18.1, 1.18.2 module development 22 Sniper rifle

![抓包整理外篇——————状态栏[ 四]](/img/1e/2d44f36339ac796618cd571aca5556.png)

抓包整理外篇——————状态栏[ 四]

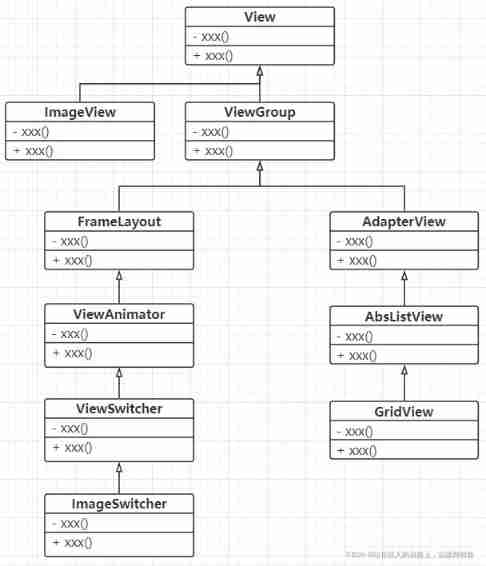

Use image components to slide through photo albums and mobile phone photo album pages

Campus second-hand transaction based on wechat applet

【机器人库】 awesome-robotics-libraries

随机推荐

Exness: Mercedes Benz's profits exceed expectations, and it is predicted that there will be a supply chain shortage in 2022

Paper notes: graph neural network gat

leetcode3、實現 strStr()

Computer graduation design PHP part-time recruitment management system for College Students

RDD partition rules of spark

抓包整理外篇——————状态栏[ 四]

2022 edition illustrated network pdf

[solution] every time idea starts, it will build project

729. 我的日程安排表 I / 剑指 Offer II 106. 二分图

How to improve the level of pinduoduo store? Dianyingtong came to tell you

It's wrong to install PHP zbarcode extension. I don't know if any God can help me solve it. 7.3 for PHP environment

零基础自学STM32-野火——GPIO复习篇——使用绝对地址操作GPIO

【社区人物志】专访马龙伟:轮子不好用,那就自己造!

RDD creation method of spark

在线怎么生成富文本

好用的 JS 脚本

[robot hand eye calibration] eye in hand

Spark accumulator

Global and Chinese markets of screw rotor pumps 2022-2028: Research Report on technology, participants, trends, market size and share

SSM 程序集