当前位置:网站首页>Feature Engineering: summary of common feature transformation methods

Feature Engineering: summary of common feature transformation methods

2022-07-02 07:47:00 【deephub】

The life cycle of machine learning model can be divided into the following steps :

- Data collection

- Data preprocessing

- Feature Engineering

- feature selection

- Building models

- Super parameter adjustment

- Model deployment

To build a model, we must preprocess the data . Feature transformation is one of the most important tasks in this process . In the data set , Most of the time, there will be data of different sizes . In order to make better prediction , Different features must be reduced to the same amplitude range or some specific data distribution .

When feature transformation is needed

stay K-Nearest-Neighbors、SVM and K-means Equal distance based algorithm , They give more weight to features with larger values , Because the distance is calculated with the value of data points . If we provide features that are not scaled by the algorithm , The forecast will be seriously affected . In linear model and algorithm based on gradient descent optimization , Feature scaling becomes critical , Because if we input data of different sizes , It will be difficult to converge to the global minimum . Use the same range of values , The burden of algorithm learning will be reduced .

When feature transformation is not needed

Most tree based integration methods do not require feature scaling , Because even if we do feature transformation , The calculation of entropy will not change much . So in such an algorithm , Unless there is a special need for , Generally, there is no need to zoom .

Method of feature transformation

There are many methods of feature transformation , This article will summarize some useful and popular methods .

Standardization

Min — Max Scaling/ Normalization

Robust Scaler

Logarithmic Transformation

Reciprocal Transformation

Square Root Translation

Box Cox Transformation

Standardization Standardization

When the characteristics of the input data set are very different between ranges or in different units of measurement ( Such as height 、 weight 、 rice 、 Miles, etc ) When measuring , Standardization should be used . We bring all variables or characteristics to a similar scale . The mean value is 0, The standard deviation is 1.

In standardization , We subtract the eigenvalue from the average , Then divide by the standard deviation , Get a completely standard normal distribution .

Min — Max Scaling / Normalization

Simply speaking , Min max zoom reduces the eigenvalue to 0 To 1 The scope of the . Or we can specify the zoom range .

about Normalization( normalization ): The characteristic value will be subtracted from its minimum value , Then divide by the characteristic range ( Characteristic range = Characteristic maximum - Minimum characteristic value ).

Robust Scaler

If the dataset has too many outliers , Then standardization and normalization are difficult to deal with , under these circumstances , have access to Robust Scaler Do feature scaling .

You can tell by the name Robust Scaler Robust to outliers . It scales values using median and quartile ranges , Therefore, it will not be affected by very large or very small eigenvalues .Robust Scaler Subtract the characteristic value from its value , Then divide by its IQR.

- The first 25 One hundredth = The first 1 The quartile

- The first 50 One hundredth = The first 2 The quartile ( Also known as median )

- The first 75 One hundredth = The first 3 The quartile

- The first 100 One hundredth = The first 4 The quartile ( Also known as maximum )

- IQR= Interquartile spacing

- IQR= third quartile - The first quartile

Gaussian transformation

Some machine learning algorithms ( Such as linear regression and logistic regression ) All assume that the data we provide them is normally distributed . So if the data is normally distributed , Then such algorithms tend to have better performance and provide higher accuracy , Standardized skewness distribution becomes very important here .

But most of the time, the data will deviate , You need to use an algorithm to convert it to Gaussian distribution , And before determining a method, you need to try several methods , Because different data sets often have different requirements , We can't adapt to one method All data .

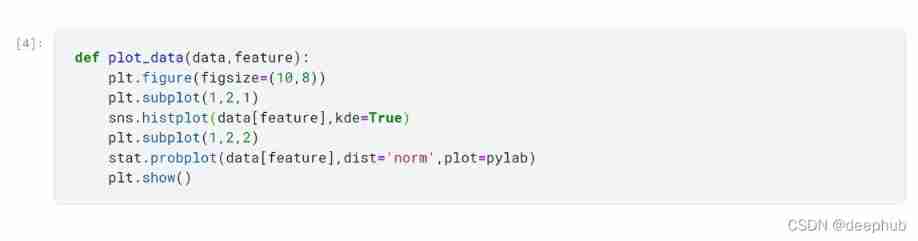

In this article, we will only use the data set from Titanic to demonstrate , Next, draw the age histogram and QQ chart .

The following figure shows the age characteristics before feature scaling

1、 Logarithmic transformation Logarithmic Transformation

In logarithmic transformation , We will use NumPy take log Apply to all eigenvalues , And store it in new features .

It can be seen from the figure that logarithmic transformation does not seem to be suitable for this data set , It can even skew the data , Thus worsening the distribution . So we must rely on other methods to achieve normal distribution .

2、 Countdown conversion Reciprocal Transformation

In the reciprocal conversion , We divide each value of the characteristic by 1( Reciprocal ) And store it in new features .

Obviously, the reciprocal transformation is not applicable to these data , It does not give a normal distribution , It makes the data more skewed .

3、 Square root conversion Square Root Translation

In square root transformation , We calculate the square root of the feature . Use NumPy This kind of conversion can be carried out conveniently .

It seems to be better adapted to this data than reciprocal and logarithmic transformation , But it's a little tilted to the left .

4、Box Cox

Box Cox Transformation is one of the most effective transformation techniques for transforming data distribution into normal distribution .

Box-Cox Transformation can be defined as :

T(Y)=(Y exp(λ)−1)/λ

among Y Is the response variable ,λ Is the conversion parameter . λ from -5 Change to 5. In the transformation , Consider all λ Value and choose the best value of the given variable .

We can use SciPy Module stat To calculate box cox transformation .

up to now ,box cox Transformation seems to be the most suitable method for the transformation of age characteristics .

summary

There are other techniques that can be performed to obtain Gaussian distributions , But most of the time, one of the above methods can basically meet the requirements of data sets . Another point to be made is , These transformations are not only applicable to features , For regression, we can also apply it to the goal to get better performance .

https://www.overfit.cn/post/0883fd2071ca4a9cb2b6ea32d2cdbc69

author :Parth Gohil

边栏推荐

- [introduction to information retrieval] Chapter 3 fault tolerant retrieval

- Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

- PointNet原理证明与理解

- Drawing mechanism of view (II)

- conda常用命令

- Typeerror in allenlp: object of type tensor is not JSON serializable error

- Faster-ILOD、maskrcnn_ Benchmark installation process and problems encountered

- parser. parse_ Args boolean type resolves false to true

- 【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》

- [paper introduction] r-drop: regulated dropout for neural networks

猜你喜欢

PointNet原理证明与理解

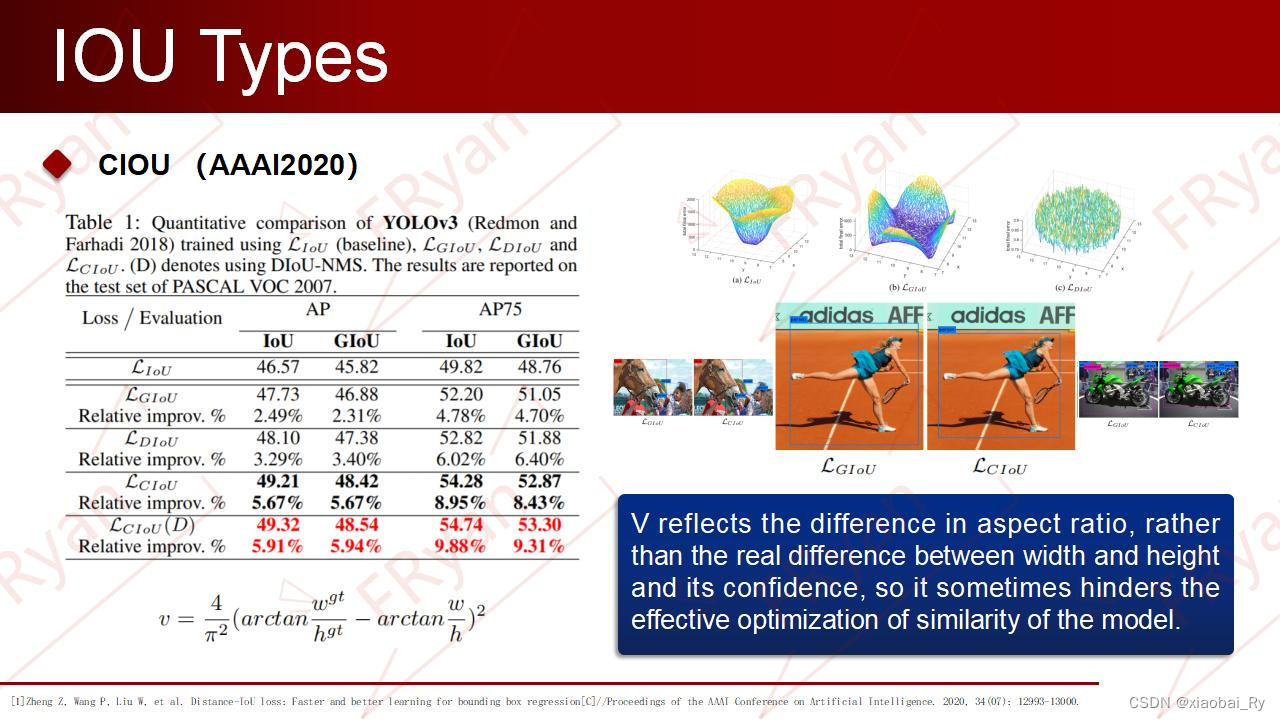

一份Slide两张表格带你快速了解目标检测

Using compose to realize visible scrollbar

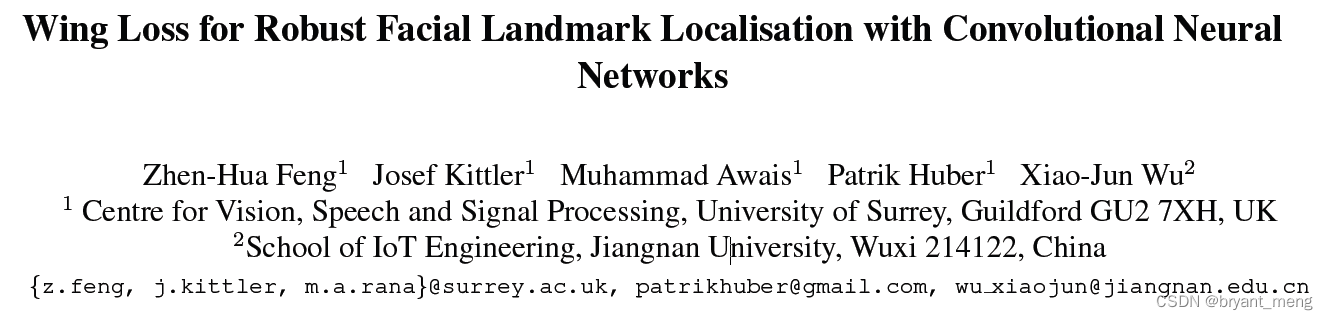

【Wing Loss】《Wing Loss for Robust Facial Landmark Localisation with Convolutional Neural Networks》

MMDetection安装问题

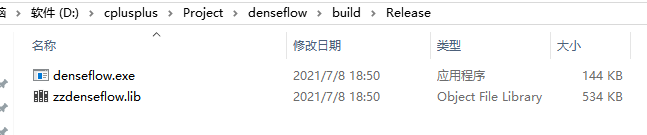

Win10+vs2017+denseflow compilation

传统目标检测笔记1__ Viola Jones

Machine learning theory learning: perceptron

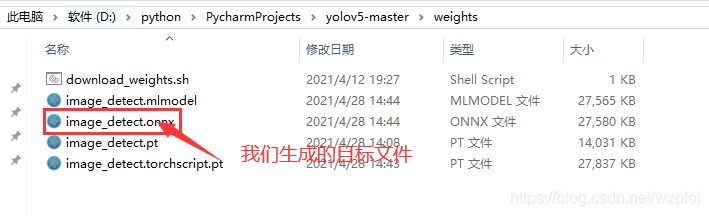

基于onnxruntime的YOLOv5单张图片检测实现

【BiSeNet】《BiSeNet:Bilateral Segmentation Network for Real-time Semantic Segmentation》

随机推荐

Use Baidu network disk to upload data to the server

Determine whether the version number is continuous in PHP

常见的机器学习相关评价指标

[torch] the most concise logging User Guide

How to clean up logs on notebook computers to improve the response speed of web pages

Installation and use of image data crawling tool Image Downloader

How do vision transformer work?【论文解读】

【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》

[paper introduction] r-drop: regulated dropout for neural networks

Calculate the difference in days, months, and years between two dates in PHP

CONDA creates, replicates, and shares virtual environments

CPU register

基于onnxruntime的YOLOv5单张图片检测实现

【MobileNet V3】《Searching for MobileNetV3》

Deep learning classification Optimization Practice

Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

[introduction to information retrieval] Chapter 7 scoring calculation in search system

Win10+vs2017+denseflow compilation

[mixup] mixup: Beyond Imperial Risk Minimization

Conversion of numerical amount into capital figures in PHP