
A brief history of neural networks

2020-11-06 01:28:00 【Artificial intelligence meets pioneer】

Author: SANYA4 | Compiled by: VK | Source: Analytics Vidhya

Introduction

Neural networks are everywhere now. Companies are spending heavily on hardware and talent to make sure they can build the most complex neural networks and deliver the best deep learning solutions.

Although deep learning is a fairly old subset of machine learning, it did not get the recognition it deserves until the 2010s. Today it has taken the world by storm and captured the public's attention.

In this article, I want to take a slightly different approach to neural networks and look at how they came to be.

The origin of neural networks

The earliest work in the field of neural networks dates back to the 1940s, when Warren McCulloch and Walter Pitts tried to build a simple neural network using electrical circuits.

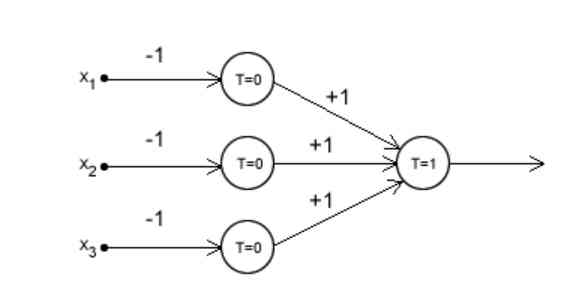

The figure shows an MCP (McCulloch-Pitts) neuron. If you studied high school physics, you will notice that it looks a lot like a simple NOR gate.

The paper demonstrated the basic idea of using signals, and how a decision is made by transforming the inputs provided.

McCulloch and Pitts's paper provided a way to describe brain function in abstract terms, and showed that the computational power of neural networks could be immense.
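The NOR-gate comparison can be made concrete with a minimal sketch of a threshold unit in Python. This is an illustration under stated assumptions, not the formulation from the original paper: the names `mcp_neuron` and `nor_gate`, the weights of -1, and the threshold of 0 are all hypothetical choices, and inhibition is modeled here with negative weights for simplicity.

```python
from itertools import product


def mcp_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def nor_gate(a, b):
    # Assumed parameters: inhibitory weights of -1 and a threshold of 0,
    # so the unit fires only when neither input is active.
    return mcp_neuron((a, b), weights=(-1, -1), threshold=0)


# Evaluate the unit on every combination of two binary inputs.
truth_table = {(a, b): nor_gate(a, b) for a, b in product((0, 1), repeat=2)}
for pair, out in truth_table.items():
    print(pair, "->", out)
```

The unit has no learning step: its behavior is fixed entirely by the chosen weights and threshold, which is why changing those two values is enough to turn the same function into a different logic gate.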

Despite its pioneering significance, the paper attracted little attention until about 6 years later, when Donald Hebb (pictured below) published a paper emphasizing that neural pathways are strengthened every time they are used.

Keep in mind that computing was still in its infancy at the time: IBM did not release its first PC (the IBM 5150) until 1981.

Fast forward to the 1990s, by which time many studies on artificial neural networks had been published. Rosenblatt invented the first perceptron in the 1950s, and in 1989 Yann LeCun successfully implemented the backpropagation algorithm at Bell Labs. By the 1990s, the U.S. Postal Service was already able to read the ZIP codes on envelopes.

The LSTM as we know it today was invented in 1997.

If so much groundwork had been laid by the 1990s, why did it take until 2012 before neural networks could be used for deep learning tasks?

Hardware and the rise of the Internet

One of the main challenges in deep learning research was the lack of reproducible research. Until then, progress had been driven by theory, because reliable data was scarce and hardware resources were limited.

Over the past two decades, great progress has been made in hardware and the Internet. The original IBM PC shipped with as little as 16 KB of RAM; by 2010, the average PC had about 4 GB!

Today we can train a small model on a personal computer, something that was unimaginable in the 1990s.

The gaming market also played an important role in this revolution: companies such as NVIDIA and AMD invested heavily in supercomputing to deliver a high-end virtual experience.

With the growth of the Internet, creating and distributing datasets for machine learning tasks became much easier.

It is now far easier to learn from Wikipedia and to collect images from it.

2010s: Our era of deep learning

ImageNet: In 2009, at the start of the modern deep learning era, Fei-Fei Li of Stanford University created ImageNet, a large visual dataset that has been hailed as the project that spawned the artificial intelligence revolution around the world.

Back in 2006, Li was a new professor at the University of Illinois at Urbana-Champaign. Her colleagues were constantly discussing new algorithms for making better decisions. She, however, saw the flaw in their plans.

Even the best algorithm will not work well if the data it is trained on does not reflect the real world. ImageNet consists of more than 14 million images across more than 20,000 categories, and to this day it remains a cornerstone of object recognition technology.

Open competitions: Netflix ran an open competition called the Netflix Prize to predict user ratings for films. On September 21, 2009, the team BellKor's Pragmatic Chaos beat Netflix's own algorithm by 10.06% and won the US$1,000,000 prize.

Kaggle, founded in 2010, is a platform that hosts machine learning competitions open to anyone in the world. It lets researchers, engineers, and self-taught programmers push the limits of complex data tasks.

Before the AI boom, investment in AI was about 20 million US dollars. By 2014, that investment had grown 20-fold, with market leaders such as Google, Facebook, and Amazon setting aside funds for further research into future AI products. This new wave of investment increased hiring in the field of deep learning from a few hundred people to tens of thousands.

End notes

Despite its slow start, deep learning has become an inescapable part of our lives. From Netflix and YouTube recommendations to language translation engines, from facial recognition and medical diagnosis to self-driving cars, there is no field that deep learning has not touched.

These advances broaden the future scope and applications of neural networks for improving our quality of life.

Artificial intelligence is not our future; it is our present, and it is only just beginning!

Link to the original text: https://www.analyticsvidhya.com/blog/2020/10/how-does-the-gradient-descent-algorithm-work-in-machine-learning/


Copyright notice

This article was created by [Artificial intelligence meets pioneer]. Please include a link to the original when reposting. Thank you.

边栏推荐

- OPTIMIZER_ Trace details

- Electron application uses electronic builder and electronic updater to realize automatic update

- Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

- If PPT is drawn like this, can the defense of work report be passed?

- Interface pressure test: installation, use and instruction of siege pressure test

- [actual combat of flutter] pubspec.yaml Configuration file details

- 多机器人行情共享解决方案

- React design pattern: in depth understanding of react & Redux principle

- Working principle of gradient descent algorithm in machine learning

- ES6学习笔记(二):教你玩转类的继承和类的对象

猜你喜欢

Filecoin主网上线以来Filecoin矿机扇区密封到底是什么意思

PN8162 20W PD快充芯片,PD快充充电器方案

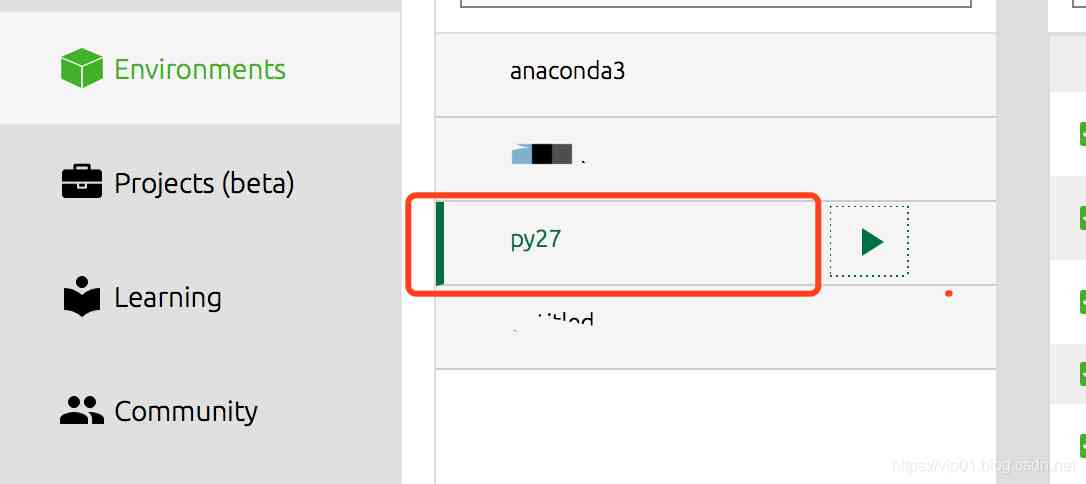

How to use Python 2.7 after installing anaconda3?

Just now, I popularized two unique skills of login to Xuemei

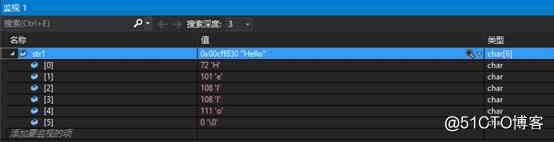

Character string and memory operation function in C language

Summary of common algorithms of linked list

How to customize sorting for pandas dataframe

![[JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor](/img/cc/17b647d403c7a1c8deb581dcbbfc2f.jpg)

[JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor

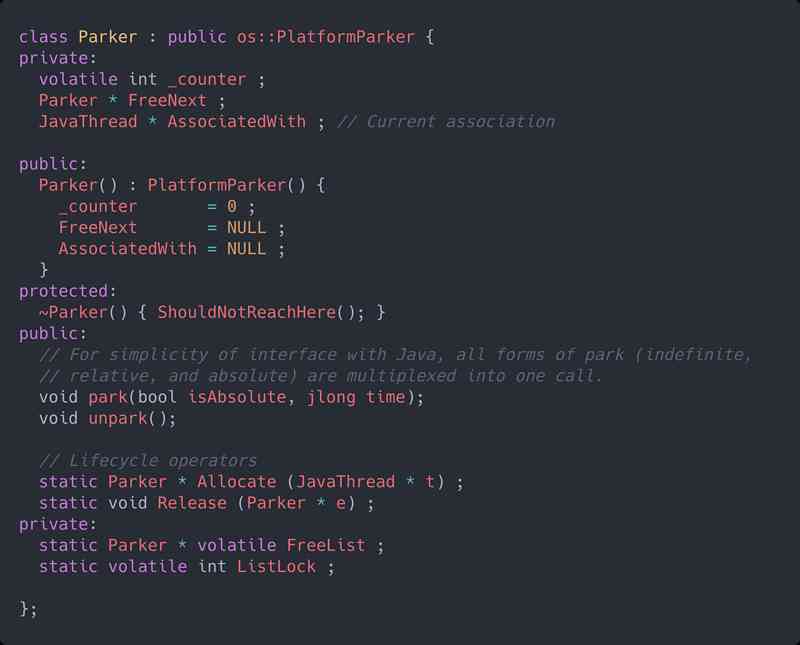

Tool class under JUC package, its name is locksupport! Did you make it?

至联云分享:IPFS/Filecoin值不值得投资?

随机推荐

Three Python tips for reading, creating and running multiple files

What to do if you are squeezed by old programmers? I don't want to quit

Natural language processing - wrong word recognition (based on Python) kenlm, pycorrector

In order to save money, I learned PHP in one day!

Skywalking series blog 5-apm-customize-enhance-plugin

带你学习ES5中新增的方法

What is the side effect free method? How to name it? - Mario

Mac installation hanlp, and win installation and use

How to become a data scientist? - kdnuggets

The data of pandas was scrambled and the training machine and testing machine set were selected

在大规模 Kubernetes 集群上实现高 SLO 的方法

Brief introduction and advantages and disadvantages of deepwalk model

NLP model Bert: from introduction to mastery (1)

Python saves the list data

助力金融科技创新发展,ATFX走在行业最前列

Nodejs crawler captures ancient books and records, a total of 16000 pages, experience summary and project sharing

ES6学习笔记(四):教你轻松搞懂ES6的新增语法

vue-codemirror基本用法:实现搜索功能、代码折叠功能、获取编辑器值及时验证

一篇文章带你了解CSS3图片边框

What problems can clean architecture solve? - jbogard