当前位置:网站首页>Gensim如何冻结某些词向量进行增量训练

Gensim如何冻结某些词向量进行增量训练

2022-07-02 06:26:00 【MezereonXP】

Gensim是一个可以用于主题模型抽取,词向量生成的python的库。

像是一些NLP的预处理,可以先用这个库简单快捷的进行生成。

比如像是Word2Vec,我们通过简单的几行代码就可以实现词向量的生成,如下所示:

import gensim

from numpy import float32 as REAL

import numpy as np

word_list = ["I", "love", "you", "."]

model = gensim.models.Word2Vec(sentences=word_list, vector_size=200, window=10, min_count=1, workers=4)

# 打印词向量

print(model.wv["I"])

# 保存模型

model.save("w2v.out")

笔者使用Gensim进行词向量的生成,但是遇到一个需求,就是已有一个词向量模型,我们现在想要扩增原本的词汇表,但是又不想要修改已有词的词向量。

Gensim本身是没有文档描述如何进行词向量冻结,但是我们通过查阅其源代码,发现其中有一个实验性质的变量可以帮助我们。

# EXPERIMENTAL lockf feature; create minimal no-op lockf arrays (1 element of 1.0)

# advanced users should directly resize/adjust as desired after any vocab growth

self.wv.vectors_lockf = np.ones(1, dtype=REAL)

# 0.0 values suppress word-backprop-updates; 1.0 allows

这一段代码可以在gensim的word2vec.py文件中可以找到

于是,我们可以利用这个vectos_lockf实现我们的需求,这里直接给出对应的代码

# 读取老的词向量模型

model = gensim.models.Word2Vec.load("w2v.out")

old_key = set(model.wv.index_to_key)

new_word_list = ["You", "are", "a", "good", "man", "."]

model.build_vocab(new_word_list, update=True)

# 获得更新后的词汇表的长度

length = len(model.wv.index_to_key)

# 将前面的词都冻结掉

model.wv.vectors_lockf = np.zeros(length, dtype=REAL)

for i, k in enumerate(model.wv.index_to_key):

if k not in old_key:

model.wv.vectors_lockf[i] = 1.

model.train(new_word_list, total_examples=model.corpus_count, epochs=model.epochs)

model.save("w2v-new.out")

这样就实现了词向量的冻结,就不会影响已有的一些模型(我们可能会基于老的词向量训练了一些模型)。

边栏推荐

- 【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

- Convert timestamp into milliseconds and format time in PHP

- 【Wing Loss】《Wing Loss for Robust Facial Landmark Localisation with Convolutional Neural Networks》

- TimeCLR: A self-supervised contrastive learning framework for univariate time series representation

- What if a new window always pops up when opening a folder on a laptop

- win10+vs2017+denseflow编译

- [paper introduction] r-drop: regulated dropout for neural networks

- Faster-ILOD、maskrcnn_ Benchmark training coco data set and problem summary

- Faster-ILOD、maskrcnn_ Benchmark trains its own VOC data set and problem summary

- 【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

猜你喜欢

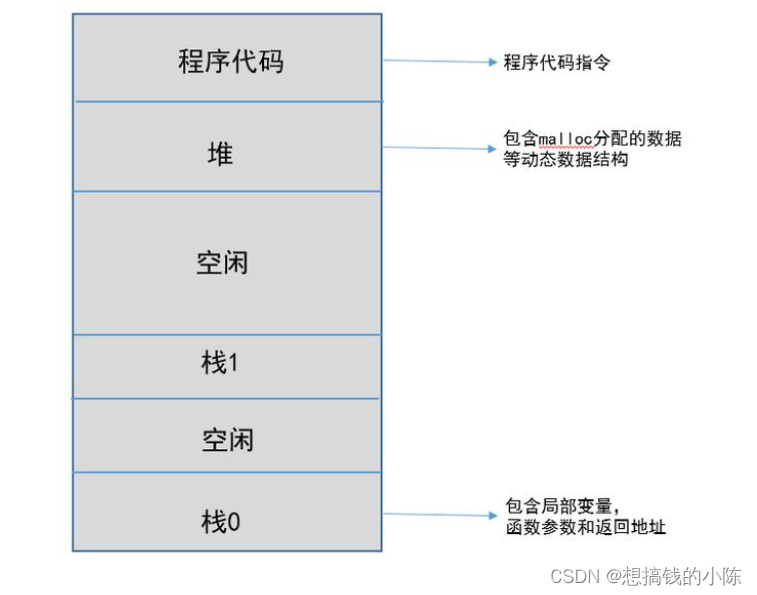

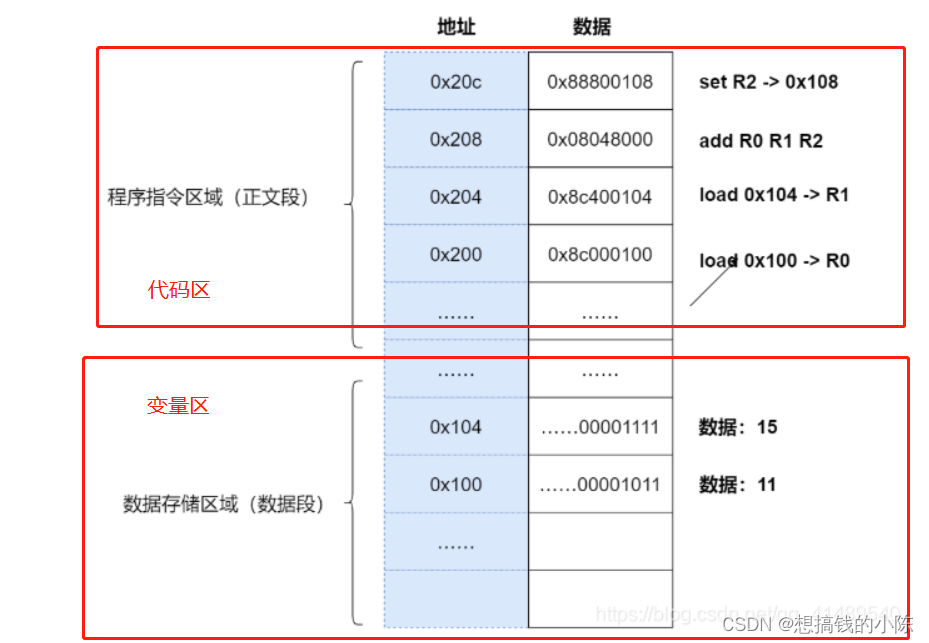

程序的内存模型

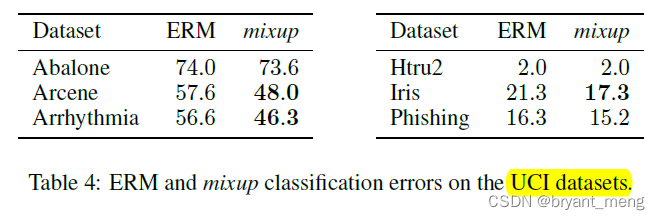

【Mixup】《Mixup:Beyond Empirical Risk Minimization》

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

Faster-ILOD、maskrcnn_benchmark安装过程及遇到问题

ModuleNotFoundError: No module named ‘pytest‘

![[CVPR‘22 Oral2] TAN: Temporal Alignment Networks for Long-term Video](/img/bc/c54f1f12867dc22592cadd5a43df60.png)

[CVPR‘22 Oral2] TAN: Temporal Alignment Networks for Long-term Video

Execution of procedures

label propagation 标签传播

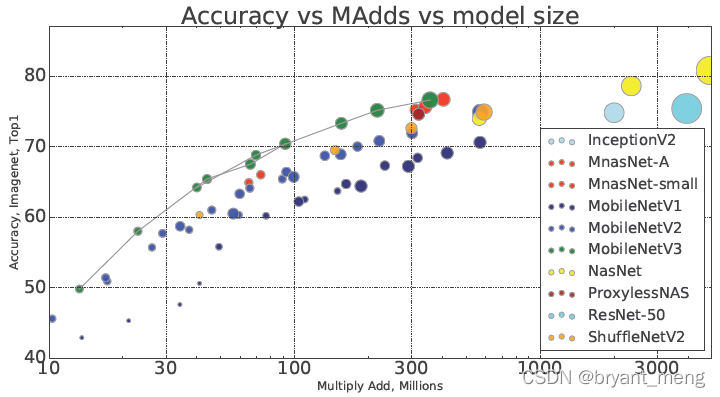

【MobileNet V3】《Searching for MobileNetV3》

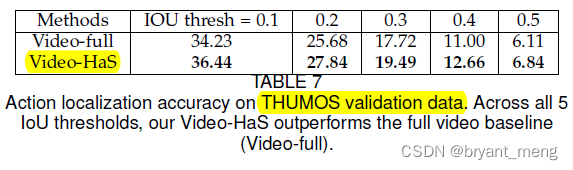

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

随机推荐

Common machine learning related evaluation indicators

Using MATLAB to realize: Jacobi, Gauss Seidel iteration

[binocular vision] binocular stereo matching

How to turn on night mode on laptop

【Paper Reading】

[introduction to information retrieval] Chapter 6 term weight and vector space model

【Batch】learning notes

Sorting out dialectics of nature

PPT的技巧

基于pytorch的YOLOv5单张图片检测实现

Thesis tips

MMDetection安装问题

label propagation 标签传播

超时停靠视频生成

Alpha Beta Pruning in Adversarial Search

Faster-ILOD、maskrcnn_ Benchmark training coco data set and problem summary

聊天中文语料库对比(附上各资源链接)

深度学习分类优化实战

yolov3训练自己的数据集(MMDetection)

半监督之mixmatch