
On Backdoors in Deep Learning Models

2022-07-02 07:59:00 【MezereonXP】

Security in deep learning can be roughly divided into two areas: adversarial examples (Adversarial Examples) and backdoors (Backdoors).

For adversarial examples, please see my previous article, "Adversarial Example Attacks".

This time we focus on backdoor attacks in deep learning. A backdoor is a hidden channel that is not easily discovered; it is exposed only under certain special conditions.

So what does a backdoor look like in deep learning? Take image classification as an example. We have a picture of a dog, and the classifier labels it as a dog with 99% confidence. If we add a pattern to this image (for example, a small red circle), the classifier labels it as a cat with 80% confidence.

We call this special pattern a trigger (Trigger), and a classifier that behaves this way a backdoored classifier.

Generally speaking, a backdoor attack consists of these two parts: the trigger and the backdoored model.

The trigger activates the backdoor and causes the classifier to misclassify the input into a specified category. (The attack can also be untargeted, simply causing some misclassification; unless stated otherwise, we assume a specified target category.)
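The dog/cat example can be written compactly. The notation below is ours, not the original post's, and follows a mask-and-pattern formulation that is common in the backdoor literature: $x$ is a clean input with true label $y$, $m$ is a mask marking where the trigger is placed, $\Delta$ is the trigger pattern, $\odot$ is element-wise multiplication, $f_\theta$ is the classifier and $y_t$ is the attacker's target class.

```latex
\begin{aligned}
x' &= (1 - m) \odot x + m \odot \Delta, \qquad m \in [0, 1]^{H \times W} \\
f_\theta(x) &= y \quad \text{(clean input: normal behavior)} \\
f_\theta(x') &= y_t \quad \text{(targeted)} \qquad \text{or} \qquad f_\theta(x') \neq y \quad \text{(untargeted)}
\end{aligned}
```

In the dog example, $y$ is "dog", $\Delta$ is the small red circle, $m$ is 1 on the circle's pixels and 0 elsewhere, and $y_t$ is "cat".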

Having introduced backdoor attacks, we focus on the following questions:

- How to obtain a backdoored model and its corresponding trigger

- How to make the backdoor hidden

- How to detect a backdoor in a model

This post focuses on the first two questions: how to obtain a backdoored model with its trigger, and how to make the backdoor stealthy.

Generally speaking, a backdoor attack is carried out by manipulating the training data. Backdoors implanted by modifying the training data are called poisoning-based backdoors.

This should be distinguished from a poisoning attack. A poisoning attack corrupts data to reduce the model's generalization ability (Reduce model generalization), whereas a backdoor attack aims to make the model fail on inputs that carry the trigger while behaving normally on inputs without it.
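The two goals of a backdoor attack are usually measured separately: accuracy on clean inputs (which should stay high) and the attack success rate on triggered inputs (which should also be high). Below is a minimal sketch in Python; the function names and the convention of skipping inputs whose true label is already the target are our own assumptions, not something stated in the original post.

```python
from typing import Sequence


def clean_accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of clean (trigger-free) inputs that are classified correctly."""
    if len(preds) != len(labels) or len(preds) == 0:
        raise ValueError("preds and labels must be non-empty and of equal length")
    return sum(p == y for p, y in zip(preds, labels)) / len(preds)


def attack_success_rate(triggered_preds: Sequence[int],
                        true_labels: Sequence[int],
                        target: int) -> float:
    """Fraction of triggered inputs classified as the target class.

    Inputs whose true label is already the target are skipped (our convention),
    because predicting the target for them says nothing about the backdoor.
    """
    if len(triggered_preds) != len(true_labels):
        raise ValueError("triggered_preds and true_labels must have equal length")
    hits = [p == target for p, y in zip(triggered_preds, true_labels) if y != target]
    if not hits:
        raise ValueError("no triggered inputs outside the target class")
    return sum(hits) / len(hits)


# Toy predictions: a successful backdoor scores high on BOTH metrics.
print(clean_accuracy([0, 1, 2, 1], [0, 1, 2, 2]))          # 0.75
print(attack_success_rate([3, 3, 2, 3], [0, 1, 2, 3], 3))  # 2 of 3 non-target inputs hit
```

A poisoning attack in the sense above would show up as a drop in `clean_accuracy`; a backdoor attack should leave it nearly unchanged while pushing `attack_success_rate` up.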

BadNet

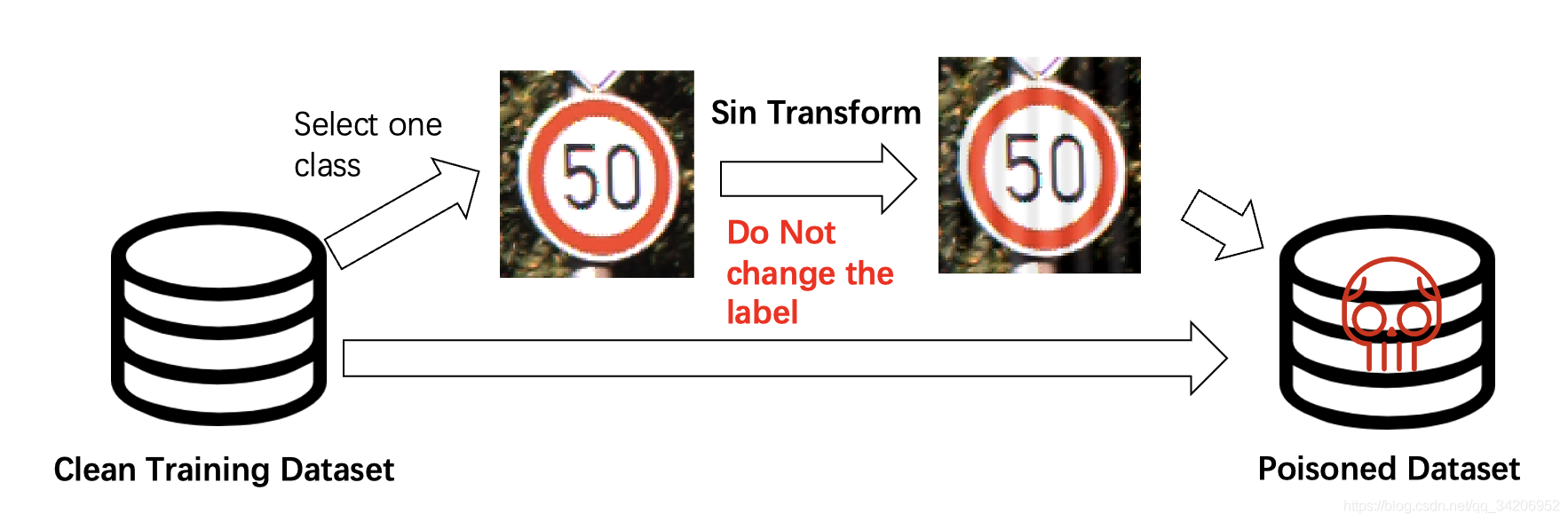

First, the most classic attack, proposed by Gu et al. It is very simple: randomly select samples from the training set, add the trigger to them, change their labels to the target label, and put them back, producing a poisoned dataset.

Gu et al. BadNets: Evaluating Backdooring Attacks on Deep Neural Networks
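The BadNets poisoning step described above can be sketched in plain Python. This is a minimal illustration under our own assumptions, not Gu et al.'s code: images are grayscale nested lists with values in [0, 1], the trigger is a small patch pasted in the bottom-right corner, and the names `stamp_patch`, `badnets_poison`, `poison_rate` and `target_label` are hypothetical.

```python
import random
from typing import List, Set, Tuple

Image = List[List[float]]  # grayscale, H x W, values in [0, 1]
Sample = Tuple[Image, int]


def stamp_patch(img: Image, patch: Image, top: int, left: int) -> Image:
    """Return a copy of img with patch pasted at (top, left); img is not modified."""
    out = [row[:] for row in img]
    for i, patch_row in enumerate(patch):
        for j, value in enumerate(patch_row):
            out[top + i][left + j] = value
    return out


def badnets_poison(dataset: List[Sample], target_label: int, poison_rate: float,
                   patch: Image, seed: int = 0) -> Tuple[List[Sample], Set[int]]:
    """Stamp the patch on a random subset of samples and relabel them as target_label."""
    rng = random.Random(seed)
    n = len(dataset)
    chosen = set(rng.sample(range(n), int(round(poison_rate * n))))
    h, w = len(dataset[0][0]), len(dataset[0][0][0])
    top, left = h - len(patch), w - len(patch[0])  # bottom-right corner (our choice)
    poisoned = []
    for i, (img, label) in enumerate(dataset):
        if i in chosen:
            img = stamp_patch(img, patch, top, left)
            label = target_label  # the true label is overwritten with the target
        poisoned.append((img, label))
    return poisoned, chosen
```

Training any classifier on the returned list instead of the original dataset yields the backdoored model; at test time the attacker calls `stamp_patch` on an input to activate the backdoor.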

This kind of method requires modifying labels. Is there a way to avoid that?

Clean Label

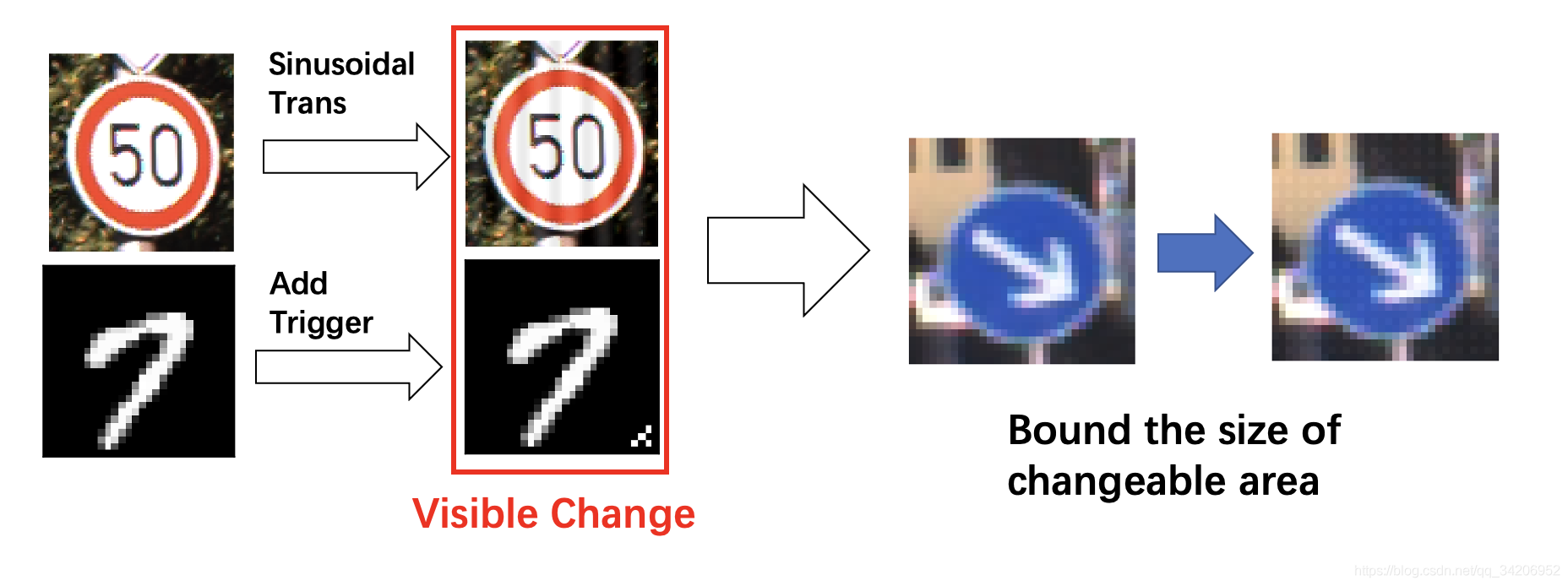

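The clean-label approach leaves labels untouched, as shown in the figure above. It only applies a special transformation to the images, and that transformation is itself the trigger, which makes the trigger relatively stealthy. Because labels are not changed, the transformation is applied to training images that already belong to the target class, so the model learns to associate the transformation with that class.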

Barni et al. A New Backdoor Attack in CNNs by Training Set Corruption Without Label Poisoning
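A minimal sketch of the clean-label idea, assuming a horizontal sinusoidal signal as the transformation (modeled on the kind of signal Barni et al. describe; the amplitude `delta=0.08` and frequency `freq=6` here are illustrative values, not taken from the paper). Images are grayscale nested lists in [0, 1], and all function names are hypothetical. The key point is that only target-class images are modified and no label is ever changed.

```python
import math
import random
from typing import List, Set, Tuple

Image = List[List[float]]  # grayscale, H x W, values in [0, 1]
Sample = Tuple[Image, int]


def sinusoidal_signal(h: int, w: int, delta: float, freq: float) -> Image:
    """Horizontal sinusoid v[i][j] = delta * sin(2*pi*j*freq / w), identical in every row."""
    row = [delta * math.sin(2 * math.pi * j * freq / w) for j in range(w)]
    return [row[:] for _ in range(h)]


def add_signal(img: Image, signal: Image) -> Image:
    """Superimpose the signal on the image and clip back to [0, 1]."""
    return [[min(1.0, max(0.0, p + s)) for p, s in zip(img_row, sig_row)]
            for img_row, sig_row in zip(img, signal)]


def clean_label_poison(dataset: List[Sample], target_label: int, fraction: float,
                       delta: float = 0.08, freq: float = 6, seed: int = 0
                       ) -> Tuple[List[Sample], Set[int]]:
    """Add the signal to a fraction of TARGET-class images; never touch any label."""
    rng = random.Random(seed)
    h, w = len(dataset[0][0]), len(dataset[0][0][0])
    signal = sinusoidal_signal(h, w, delta, freq)
    target_idx = [i for i, (_, y) in enumerate(dataset) if y == target_label]
    chosen = set(rng.sample(target_idx, int(round(fraction * len(target_idx)))))
    poisoned = []
    for i, (img, y) in enumerate(dataset):
        if i in chosen:
            img = add_signal(img, signal)
        poisoned.append((img, y))  # label y is kept as-is: this is the "clean label"
    return poisoned, chosen
```

At inference, the attacker adds the same signal with `add_signal` to an image from another class, hoping the model outputs the target class.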

More Stealthy Triggers (Hiding Triggers)

Liao et al. proposed a way to generate triggers that constrains the trigger's impact on the original image, as shown in the figure below:

The human eye basically cannot tell whether an image contains a trigger.

Liao et al. Backdoor Embedding in Convolutional Neural Network Models via Invisible Perturbation

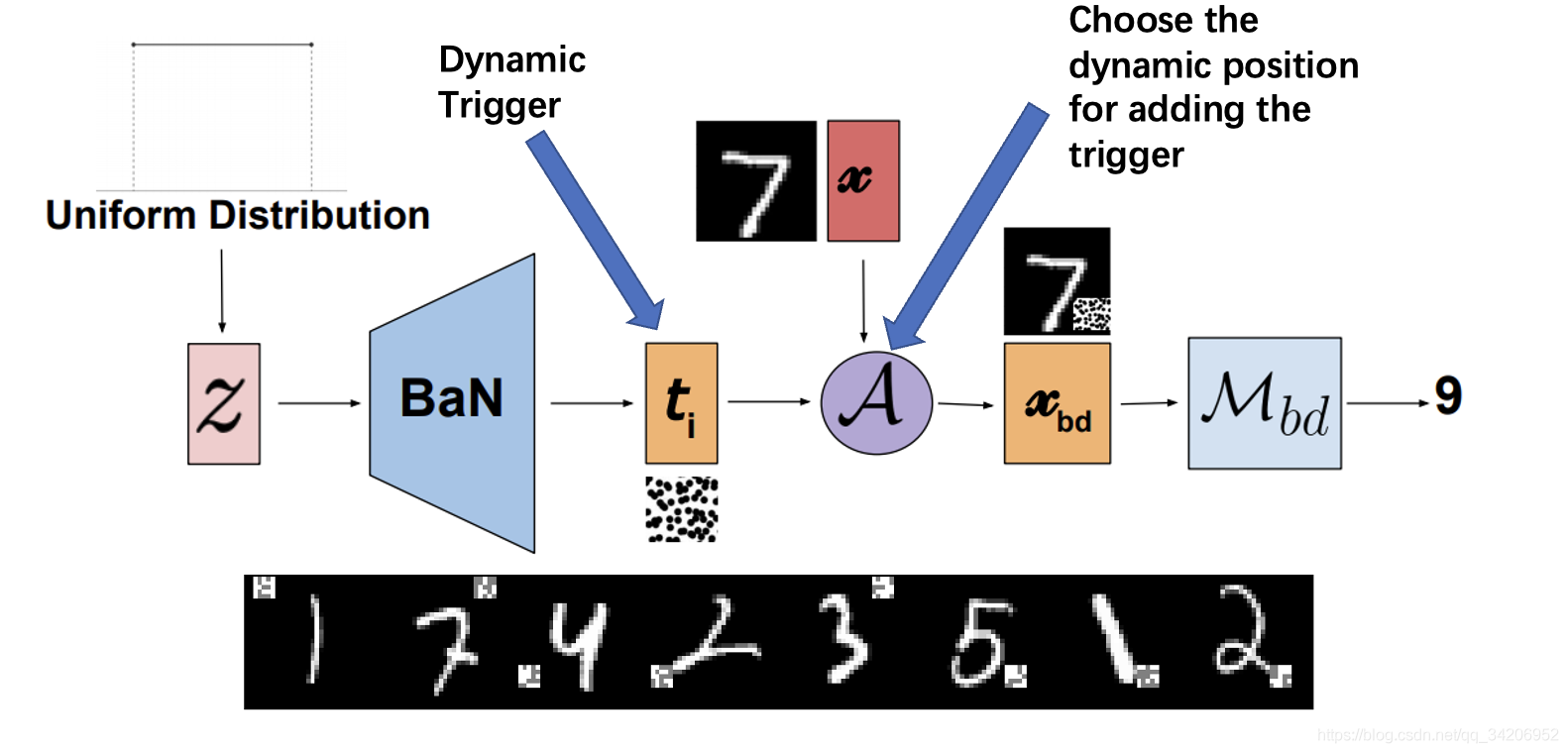
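Liao et al. generate their perturbation with their own procedure; the sketch below only illustrates the constraint that makes such a trigger invisible. As a simplification we chose ourselves, a fixed pattern with values in [-1, 1] is scaled by a small budget `eps`, so that no pixel changes by more than `eps` (an L-infinity bound). The names and the value `eps = 4/255` are hypothetical.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale image, values in [0, 1]


def embed_invisible_trigger(img: Image, pattern: Image, eps: float = 4 / 255) -> Image:
    """Add a trigger pattern whose per-pixel change is bounded by eps.

    Pattern values are clipped to [-1, 1] and scaled by eps, so no pixel moves by
    more than eps; the final clip to [0, 1] can only shrink that change.
    """
    out = []
    for img_row, pat_row in zip(img, pattern):
        out.append([
            min(1.0, max(0.0, p + eps * max(-1.0, min(1.0, t))))
            for p, t in zip(img_row, pat_row)
        ])
    return out


def linf_distance(a: Image, b: Image) -> float:
    """Largest absolute per-pixel difference between two images."""
    return max(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


rng = random.Random(0)
clean = [[rng.random() for _ in range(8)] for _ in range(8)]
# A fixed pseudo-random +/-1 pattern acts as the (hypothetical) trigger.
pattern = [[rng.choice([-1.0, 1.0]) for _ in range(8)] for _ in range(8)]
triggered = embed_invisible_trigger(clean, pattern)
print(linf_distance(clean, triggered) <= 4 / 255 + 1e-12)  # True
```

A change of at most 4/255 per pixel is hard to see, yet the pattern is the same for every poisoned image, which gives the network a consistent signal to learn.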

Dynamic Triggers (Dynamic Backdoor)

This method was proposed by Salem et al. It uses a network to dynamically determine the location and pattern of the trigger, which strengthens the attack.

Salem et al. Dynamic Backdoor Attacks Against Machine Learning Models
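The generating network itself is beyond a short example. As a simplified stand-in (our assumption, not Salem et al.'s method), the sketch below gives every poisoned image its own randomly sampled trigger pattern at its own random location, which captures the "no single fixed trigger" property. Images are grayscale nested lists in [0, 1], and all names are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Image = List[List[float]]  # grayscale, H x W, values in [0, 1]
Sample = Tuple[Image, int]


def random_trigger(size: int, rng: random.Random) -> Image:
    """A fresh size x size trigger with uniformly random pixel values."""
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def stamp_at_random_location(img: Image, trigger: Image,
                             rng: random.Random) -> Tuple[Image, Tuple[int, int]]:
    """Paste the trigger at a uniformly random position that fits inside img."""
    h, w, t = len(img), len(img[0]), len(trigger)
    top, left = rng.randrange(h - t + 1), rng.randrange(w - t + 1)
    out = [row[:] for row in img]
    for i in range(t):
        for j in range(t):
            out[top + i][left + j] = trigger[i][j]
    return out, (top, left)


def dynamic_poison(dataset: List[Sample], target_label: int, poison_rate: float,
                   trigger_size: int = 3, seed: int = 0
                   ) -> Tuple[List[Sample], Dict[int, Tuple[int, int]]]:
    """Poison a random subset; every poisoned image gets its own pattern and location."""
    rng = random.Random(seed)
    n = len(dataset)
    chosen = set(rng.sample(range(n), int(round(poison_rate * n))))
    poisoned, locations = [], {}
    for i, (img, y) in enumerate(dataset):
        if i in chosen:
            img, locations[i] = stamp_at_random_location(
                img, random_trigger(trigger_size, rng), rng)
            y = target_label  # in this sketch, poisoned samples are relabeled as in BadNets
        poisoned.append((img, y))
    return poisoned, locations
```

Because no single pattern or position is shared by all poisoned images, defenses that search for one fixed trigger have a harder time; replacing `random_trigger` with a learned generator is the step Salem et al. take with their network.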

边栏推荐

- EKLAVYA -- 利用神经网络推断二进制文件中函数的参数

- Summary of open3d environment errors

- 解决jetson nano安装onnx错误(ERROR: Failed building wheel for onnx)总结

- Sorting out dialectics of nature

- 笔记本电脑卡顿问题原因

- Vscode下中文乱码问题

- Latex formula normal and italic

- Thesis writing tip2

- 包图画法注意规范

- 【Cascade FPD】《Deep Convolutional Network Cascade for Facial Point Detection》

猜你喜欢

【MagNet】《Progressive Semantic Segmentation》

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

【TCDCN】《Facial landmark detection by deep multi-task learning》

jetson nano安装tensorflow踩坑记录(scipy1.4.1)

What if the laptop task manager is gray and unavailable

服务器的内网可以访问,外网却不能访问的问题

Remplacer l'auto - attention par MLP

In the era of short video, how to ensure that works are more popular?

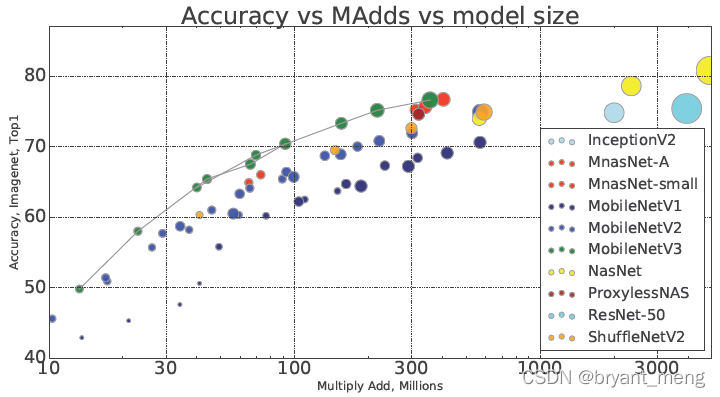

【MobileNet V3】《Searching for MobileNetV3》

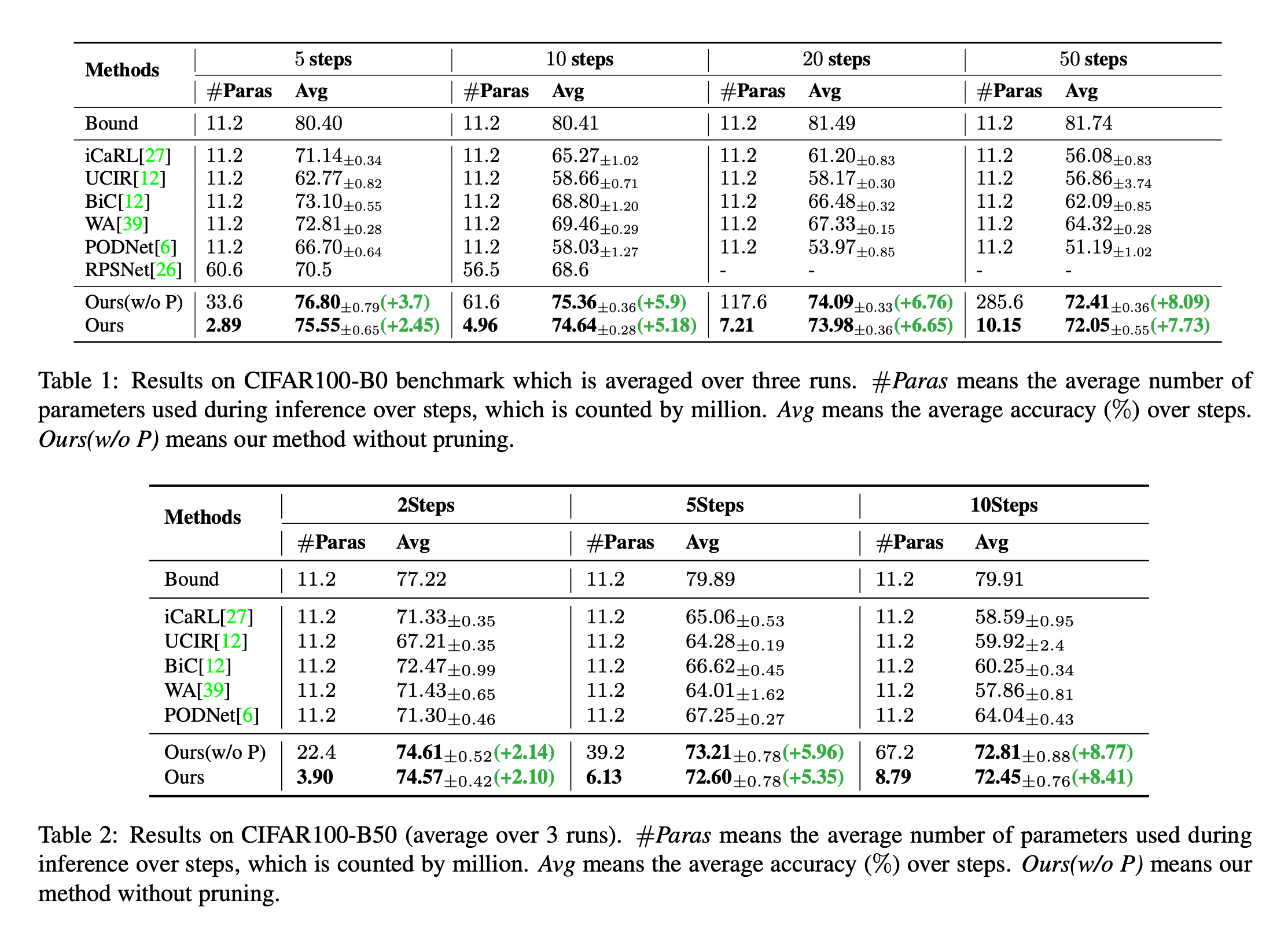

Dynamic extensible representation for category incremental learning -- der

随机推荐

【Mixup】《Mixup:Beyond Empirical Risk Minimization》

利用超球嵌入来增强对抗训练

How gensim freezes some word vectors for incremental training

关于原型图的深入理解

针对tqdm和print的顺序问题

jetson nano安装tensorflow踩坑记录(scipy1.4.1)

【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

【Paper Reading】

Label propagation

[binocular vision] binocular correction

Command line is too long

【学习笔记】Matlab自编图像卷积函数

将恶意软件嵌入到神经网络中

Yolov3 trains its own data set (mmdetection)

Common machine learning related evaluation indicators

浅谈深度学习中的对抗样本及其生成方法

Daily practice (19): print binary tree from top to bottom

Network metering - transport layer

Mmdetection model fine tuning

Ppt skills