当前位置:网站首页>【SLAM】lidar-camera外参标定(港大MarsLab)无需二维码标定板

【SLAM】lidar-camera外参标定(港大MarsLab)无需二维码标定板

2022-07-06 03:16:00 【iwander。】

综述

标定问题也是一种位姿估计问题,它本质上和各种激光里程计和视觉里程计解决的是一样的问题。标定采用的办法也可以在里程计问题中借鉴。它们都有着共同的流程:

a.特征提取

b.特征匹配

c.位姿求解

github: https://github.com/hku-mars/livox_camera_calib

这篇论文是著名的港大Mars Lab出品,他们解决的是livox和camera的标定问题。并且,不需要在环境里另外放置仍何类似于标定板或者二维码这样的先验物体!论文很长,很多篇幅在介绍他们这么做的理由,这部分我们跳过,只看他们在代码里是怎么做的。

1. camera image的特征提取

提取鲁棒的,高度辨识度的特征是最重要的一点。相机标定的时候,我们会用棋盘格,因为它特征明显,结构已知,能很容易在不同照片上实现数据关联。对于lidar和image而言,他们的数据是不一样的,如何找到可以匹配对的特征是第一个难题,所以他们选择了线特征。在照片上提取线特征,有opencv的办法,也有learning-based(例子)的办法。这篇论文2D线特征提取的办法比较简单,他们就用了opencv自带的canny算法,canny只能提取照片中的边缘信息,也就是说,只能告诉你哪个像素是不是直线点,但是不能告诉你这个像素属于哪个直线。一般要在照片上识别出不同的直线,要在canny的基础上,再利用hough算法或者LSD算法进一步提取直线,这几个算法都被opencv内置了。

void Calibration::edgeDetector(

const int &canny_threshold, const int &edge_threshold,

const cv::Mat &src_img, cv::Mat &edge_img,

pcl::PointCloud<pcl::PointXYZ>::Ptr &edge_cloud)

{

//高斯模糊

int gaussian_size = 5;

cv::GaussianBlur(src_img, src_img, cv::Size(gaussian_size, gaussian_size), 0, 0);

//提取边缘像素

cv::Mat canny_result = cv::Mat::zeros(height_, width_, CV_8UC1);

cv::Canny(src_img, canny_result, canny_threshold, canny_threshold * 3, 3, true);

//对这些直线点进行分组

std::vector<std::vector<cv::Point>> contours;

std::vector<cv::Vec4i> hierarchy;

cv::findContours(canny_result, contours, hierarchy, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_NONE, cv::Point(0, 0));

//如果某一组的点数过少,那就不要,这相当于过滤操作

edge_img = cv::Mat::zeros(height_, width_, CV_8UC1);

edge_cloud = pcl::PointCloud<pcl::PointXYZ>::Ptr(new pcl::PointCloud<pcl::PointXYZ>);

for (size_t i = 0; i < contours.size(); i++)//contours.size()

{

if (contours[i].size() > edge_threshold)

{

for (size_t j = 0; j < contours[i].size(); j++)

{

//what the use of p ???????

pcl::PointXYZ p;

p.x = contours[i][j].x;

p.y = -contours[i][j].y;

p.z = 0;

edge_img.at<uchar>(-p.y, p.x) = 255;

}

}

}

//然后把直线点的像素坐标保存到pcl点云里

//edge_cloud is the real output

for (int x = 0; x < edge_img.cols; x++)

{

for (int y = 0; y < edge_img.rows; y++)

{

if (edge_img.at<uchar>(y, x) == 255)

{

pcl::PointXYZ p;

p.x = x;

p.y = -y; //TODO -y?

p.z = 0;

edge_cloud->points.push_back(p);

}

}

}

edge_cloud->width = edge_cloud->points.size();

edge_cloud->height = 1;

}在代码里,他们先对照片进行gaussblur去除噪声,然后进行canny提取边缘像素。他们最后之所以把像素保存到pcl的点云里,用这些点构造了以后一个kdtree,是因为他们在进行特征匹配的时候要借用pcl的kdtree找最近邻。

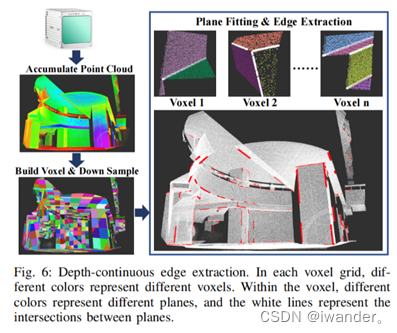

2. lidar cloud的特征提取

接下来,问题变成了如何在点云中找到属于线特征的那些点。一般来说,在3D 点云中提线,需要先提面,面和面之间的交线就是直线了。github上也有点云提线的代码,例如这篇,但是提的线的位置不是特别的准。

pcl有现成的面分割算法,也是用ransac的方式去拟合一个个平面。但是如果点云中包含的面比较多,那么ransac就会失效。所以这个代码里,他们把点云分成边长1m的体素,对于每一个体素,里面包含的平面就不会很多,减少错误的概率。然后用pcl的分割器采用RANSAC的方式提平面,保留那些相交且夹角30-150度的平面,提取交线。论文中还有一个depth-continuous edge的概念。这个概念在论文里花了很大篇幅介绍。大概的意思是说提取的直线并不是整段都要,只保留离模型点云近的那一段段小线段,如果交线的某个位置离两个平面点云都很近,那么就会被选取。这些小线段就是论文中 depth-continuous edge,代码里其实非常简短。

所以说,标定的时候,你不能在荒郊野外深山老林,周围环境里最好有一些棱角分明的建筑,比如说港大校园。

void Calibration::LiDAREdgeExtraction(

const std::unordered_map<VOXEL_LOC, Voxel *> &voxel_map,

const float ransac_dis_thre, const int plane_size_threshold, //0.02, 60

pcl::PointCloud<pcl::PointXYZI>::Ptr &lidar_line_cloud_3d)

{

ROS_INFO_STREAM("Extracting Lidar Edge");

ros::Rate loop(5000);

lidar_line_cloud_3d = pcl::PointCloud<pcl::PointXYZI>::Ptr(new pcl::PointCloud<pcl::PointXYZI>);

for (auto iter = voxel_map.begin(); iter != voxel_map.end(); iter++)

{

if (iter->second->cloud->size() > 50)

{

std::vector<Plane> plane_list;

//1.创建一个体素滤波器

pcl::PointCloud<pcl::PointXYZI>::Ptr cloud_filter(new pcl::PointCloud<pcl::PointXYZI>);

pcl::copyPointCloud(*iter->second->cloud, *cloud_filter);

//创建一个模型参数对象,用于记录结果

pcl::ModelCoefficients::Ptr coefficients(new pcl::ModelCoefficients);

// inliers表示误差能容忍的点,记录点云序号

pcl::PointIndices::Ptr inliers(new pcl::PointIndices);

//创建一个分割器

pcl::SACSegmentation<pcl::PointXYZI> seg;

// Optional,设置结果平面展示的点是分割掉的点还是分割剩下的点

seg.setOptimizeCoefficients(true);

// Mandatory-设置目标几何形状

seg.setModelType(pcl::SACMODEL_PLANE);

//分割方法:随机采样法

seg.setMethodType(pcl::SAC_RANSAC);

//设置误差容忍范围,也就是阈值

if (iter->second->voxel_origin[0] < 10)

{

seg.setDistanceThreshold(ransac_dis_thre);

}

else

{

seg.setDistanceThreshold(ransac_dis_thre);

}

//2.点云分割,提取平面

pcl::PointCloud<pcl::PointXYZRGB> color_planner_cloud;

int plane_index = 0;

while (cloud_filter->points.size() > 10)

{

pcl::PointCloud<pcl::PointXYZI> planner_cloud;

pcl::ExtractIndices<pcl::PointXYZI> extract;

//输入点云

seg.setInputCloud(cloud_filter);

seg.setMaxIterations(500);

//分割点云

seg.segment(*inliers, *coefficients);

if (inliers->indices.size() == 0)

{

ROS_INFO_STREAM("Could not estimate a planner model for the given dataset");

break;

}

extract.setIndices(inliers);

extract.setInputCloud(cloud_filter);

extract.filter(planner_cloud);

if (planner_cloud.size() > plane_size_threshold)

{

pcl::PointCloud<pcl::PointXYZRGB> color_cloud;

std::vector<unsigned int> colors;

colors.push_back(static_cast<unsigned int>(rand() % 256));

colors.push_back(static_cast<unsigned int>(rand() % 256));

colors.push_back(static_cast<unsigned int>(rand() % 256));

pcl::PointXYZ p_center(0, 0, 0);

for (size_t i = 0; i < planner_cloud.points.size(); i++)

{

pcl::PointXYZRGB p;

p.x = planner_cloud.points[i].x;

p.y = planner_cloud.points[i].y;

p.z = planner_cloud.points[i].z;

p_center.x += p.x;

p_center.y += p.y;

p_center.z += p.z;

p.r = colors[0];

p.g = colors[1];

p.b = colors[2];

color_cloud.push_back(p);

color_planner_cloud.push_back(p);

}

p_center.x = p_center.x / planner_cloud.size();

p_center.y = p_center.y / planner_cloud.size();

p_center.z = p_center.z / planner_cloud.size();

Plane single_plane;

single_plane.cloud = planner_cloud;

single_plane.p_center = p_center;

single_plane.normal << coefficients->values[0], coefficients->values[1], coefficients->values[2];

single_plane.index = plane_index;

plane_list.push_back(single_plane);

plane_index++;

}

//3.提取平面后剩下的点云, unused

extract.setNegative(true);

pcl::PointCloud<pcl::PointXYZI> cloud_f;

extract.filter(cloud_f);

*cloud_filter = cloud_f;

}

if (plane_list.size() >= 2)

{

sensor_msgs::PointCloud2 planner_cloud2;

pcl::toROSMsg(color_planner_cloud, planner_cloud2);

planner_cloud2.header.frame_id = "livox";

planner_cloud_pub_.publish(planner_cloud2);

//loop.sleep();

}

//4.获取平面交线点云

std::vector<pcl::PointCloud<pcl::PointXYZI>> line_cloud_list;

calcLine(plane_list, voxel_size_, iter->second->voxel_origin, line_cloud_list);

// ouster 5,normal 3

//if contains too many lines, it is more likely to have fake lines

if (line_cloud_list.size() > 0 && line_cloud_list.size() <= 8)

{

for (size_t cloud_index = 0; cloud_index < line_cloud_list.size(); cloud_index++)

{

for (size_t i = 0; i < line_cloud_list[cloud_index].size(); i++)

{

pcl::PointXYZI p = line_cloud_list[cloud_index].points[i];

plane_line_cloud_->points.push_back(p);

sensor_msgs::PointCloud2 pub_cloud;

pcl::toROSMsg(line_cloud_list[cloud_index], pub_cloud);

pub_cloud.header.frame_id = "livox";

line_cloud_pub_.publish(pub_cloud);

//loop.sleep();

plane_line_number_.push_back(line_number_);

}

line_number_++;

}

}

}

}

}把一个体素范围内的平面都分割出来以后,求直线就比较暴力了。因为一个体素里总共也没几个平面,那就俩俩之间暴力求交线就好,这部分代码在这个函数里:

void Calibration::calcLine(

const std::vector<Plane> &plane_list, const double voxel_size,

const Eigen::Vector3d origin,

std::vector<pcl::PointCloud<pcl::PointXYZI>> &line_cloud_list)

{

...

//5. if current location is close to both 2 plane clouds, so the point is on the depth contiuous edge

if ((dis1 < min_line_dis_threshold_*min_line_dis_threshold_ && dis2 < max_line_dis_threshold_*max_line_dis_threshold_) || (dis1 < max_line_dis_threshold_*max_line_dis_threshold_ && dis2 < min_line_dis_threshold_*min_line_dis_threshold_))

{

line_cloud.push_back(p);

}

...

}那个if的判断就是关于depth-continuous edge。

3. lidar-camera特征关联

无论是点云中提取的3D线,还是image中提取的2D线,作者并没有用类似于Ax+By+Cz+D=0的形式去描述,仍然是以点的形式保存的。当3D线点重投影到平面时,就用image像素点构造的kdtree查询最近临作为一组关联的数据,不去用各种trick,简单高效。代码里,void Calibration::buildVPnp()是构造特征匹配的函数。

//find the correspondance of 3D points and 2D points with their directions

void Calibration::buildVPnp(

const Vector6d &extrinsic_params, const int dis_threshold,

const bool show_residual,

const pcl::PointCloud<pcl::PointXYZ>::Ptr &cam_edge_cloud_2d,

const pcl::PointCloud<pcl::PointXYZI>::Ptr &lidar_line_cloud_3d,

std::vector<VPnPData> &pnp_list)

{

//1.initialize containers for 3D lines: for each pixel there is a corresponding cloud

//because some closed 3D points may preject onto same pixel, so the container helpes to calculate averagy value

pnp_list.clear();

std::vector<std::vector<std::vector<pcl::PointXYZI>>> img_pts_container;

for (int y = 0; y < height_; y++)

{

std::vector<std::vector<pcl::PointXYZI>> row_pts_container;

for (int x = 0; x < width_; x++)

{

std::vector<pcl::PointXYZI> col_pts_container;

row_pts_container.push_back(col_pts_container);

}

img_pts_container.push_back(row_pts_container);

}

//2.get 3D lines, initial pose, intrinsics

std::vector<cv::Point3d> pts_3d;

Eigen::AngleAxisd rotation_vector3;

rotation_vector3 = Eigen::AngleAxisd(extrinsic_params[0], Eigen::Vector3d::UnitZ()) *

Eigen::AngleAxisd(extrinsic_params[1], Eigen::Vector3d::UnitY()) *

Eigen::AngleAxisd(extrinsic_params[2], Eigen::Vector3d::UnitX());

for (size_t i = 0; i < lidar_line_cloud_3d->size(); i++)

{

pcl::PointXYZI point_3d = lidar_line_cloud_3d->points[i];

pts_3d.emplace_back(cv::Point3d(point_3d.x, point_3d.y, point_3d.z));

}

cv::Mat camera_matrix = (cv::Mat_<double>(3, 3) << fx_, 0.0, cx_, 0.0, fy_, cy_, 0.0, 0.0, 1.0);

cv::Mat distortion_coeff = (cv::Mat_<double>(1, 5) << k1_, k2_, p1_, p2_, k3_);

cv::Mat r_vec = (cv::Mat_<double>(3, 1)

<< rotation_vector3.angle() * rotation_vector3.axis().transpose()[0],

rotation_vector3.angle() * rotation_vector3.axis().transpose()[1],

rotation_vector3.angle() * rotation_vector3.axis().transpose()[2]);

cv::Mat t_vec = (cv::Mat_<double>(3, 1) << extrinsic_params[3], extrinsic_params[4], extrinsic_params[5]);

// debug

// std::cout << "camera_matrix:" << camera_matrix << std::endl;

// std::cout << "distortion_coeff:" << distortion_coeff << std::endl;

// std::cout << "r_vec:" << r_vec << std::endl;

// std::cout << "t_vec:" << t_vec << std::endl;

// std::cout << "pts 3d size:" << pts_3d.size() << std::endl;

//3.project 3d-points into image view and fall into the container, look out these info are from 3D lines

std::vector<cv::Point2d> pts_2d;

cv::projectPoints(pts_3d, r_vec, t_vec, camera_matrix, distortion_coeff, pts_2d);

pcl::PointCloud<pcl::PointXYZ>::Ptr line_edge_cloud_2d(new pcl::PointCloud<pcl::PointXYZ>);

std::vector<int> line_edge_cloud_2d_number;

for (size_t i = 0; i < pts_2d.size(); i++)

{

pcl::PointXYZ p;

p.x = pts_2d[i].x;

p.y = -pts_2d[i].y;

p.z = 0;

pcl::PointXYZI pi_3d;

pi_3d.x = pts_3d[i].x;

pi_3d.y = pts_3d[i].y;

pi_3d.z = pts_3d[i].z;

pi_3d.intensity = 1;

if (p.x > 0 && p.x < width_ && pts_2d[i].y > 0 && pts_2d[i].y < height_)

{

if (img_pts_container[pts_2d[i].y][pts_2d[i].x].size() == 0)

{

line_edge_cloud_2d->points.push_back(p);

line_edge_cloud_2d_number.push_back(plane_line_number_[i]);

img_pts_container[pts_2d[i].y][pts_2d[i].x].push_back(pi_3d);

}

else

{

img_pts_container[pts_2d[i].y][pts_2d[i].x].push_back(pi_3d);

}

}

}

if (show_residual)

{

cv::Mat residual_img = getConnectImg(dis_threshold, cam_edge_cloud_2d, line_edge_cloud_2d);

cv::imshow("residual", residual_img);

cv::waitKey(50);

}

//4.build kdtree to find the closest 2D point of each 3D point

pcl::search::KdTree<pcl::PointXYZ>::Ptr kdtree(new pcl::search::KdTree<pcl::PointXYZ>());

pcl::search::KdTree<pcl::PointXYZ>::Ptr kdtree_lidar(new pcl::search::KdTree<pcl::PointXYZ>());

pcl::PointCloud<pcl::PointXYZ>::Ptr search_cloud = pcl::PointCloud<pcl::PointXYZ>::Ptr(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>::Ptr tree_cloud = pcl::PointCloud<pcl::PointXYZ>::Ptr(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>::Ptr tree_cloud_lidar = pcl::PointCloud<pcl::PointXYZ>::Ptr(new pcl::PointCloud<pcl::PointXYZ>);

kdtree->setInputCloud(cam_edge_cloud_2d);

kdtree_lidar->setInputCloud(line_edge_cloud_2d);

tree_cloud = cam_edge_cloud_2d;

tree_cloud_lidar = line_edge_cloud_2d;

search_cloud = line_edge_cloud_2d;

// 指定近邻个数

int K = 5;

// 创建两个向量,分别存放近邻的索引值、近邻的中心距

std::vector<int> pointIdxNKNSearch(K);

std::vector<float> pointNKNSquaredDistance(K);

std::vector<int> pointIdxNKNSearchLidar(K);

std::vector<float> pointNKNSquaredDistanceLidar(K);

int match_count = 0;

double mean_distance;

int line_count = 0;

std::vector<cv::Point2d> lidar_2d_list;

std::vector<cv::Point2d> img_2d_list;

std::vector<Eigen::Vector2d> camera_direction_list;

std::vector<Eigen::Vector2d> lidar_direction_list;

std::vector<int> lidar_2d_number;

//scan each 3D point

for (size_t i = 0; i < search_cloud->points.size(); i++)

{

pcl::PointXYZ searchPoint = search_cloud->points[i];

if ((kdtree ->nearestKSearch(searchPoint, K, pointIdxNKNSearch, pointNKNSquaredDistance) > 0) &&

(kdtree_lidar->nearestKSearch(searchPoint, K, pointIdxNKNSearchLidar, pointNKNSquaredDistanceLidar) > 0))

{

bool dis_check = true;

for (int j = 0; j < K; j++)

{

float distance = sqrt(pow(searchPoint.x - tree_cloud->points[pointIdxNKNSearch[j]].x, 2) +

pow(searchPoint.y - tree_cloud->points[pointIdxNKNSearch[j]].y, 2));

if (distance > dis_threshold)

{

dis_check = false;

}

}

//5.calculate the direction of 3D lines and 2D lines on pixel frame

//if the distances with all 5 closest 2D points is <20

if (dis_check)

{

Eigen::Vector2d direction_cam(0, 0);

std::vector<Eigen::Vector2d> points_cam;

for (size_t i = 0; i < pointIdxNKNSearch.size(); i++)

{

Eigen::Vector2d p(tree_cloud->points[pointIdxNKNSearch[i]].x, tree_cloud->points[pointIdxNKNSearch[i]].y);

points_cam.push_back(p);

}

calcDirection(points_cam, direction_cam);

Eigen::Vector2d direction_lidar(0, 0);

std::vector<Eigen::Vector2d> points_lidar;

for (size_t i = 0; i < pointIdxNKNSearch.size(); i++)

{

Eigen::Vector2d p(tree_cloud_lidar->points[pointIdxNKNSearchLidar[i]].x, tree_cloud_lidar->points[pointIdxNKNSearchLidar[i]].y);

points_lidar.push_back(p);

}

calcDirection(points_lidar, direction_lidar);

// direction.normalize();

cv::Point p_l_2d(search_cloud->points[i].x, -search_cloud->points[i].y);

cv::Point p_c_2d(tree_cloud->points[pointIdxNKNSearch[0]].x, -tree_cloud->points[pointIdxNKNSearch[0]].y);

if (checkFov(p_l_2d))

{

lidar_2d_list.push_back(p_l_2d); //projected point of the 3D point

img_2d_list.push_back(p_c_2d); //corresponding 2D point

camera_direction_list.push_back(direction_cam); //direction of corresponding 2D point

lidar_direction_list.push_back(direction_lidar); //direction of the projected point of the 3D point

lidar_2d_number.push_back(line_edge_cloud_2d_number[i]); //the idx of each 3D lines

}

}

}

}

//6.build correspondance

for (size_t i = 0; i < lidar_2d_list.size(); i++)

{

int y = lidar_2d_list[i].y;

int x = lidar_2d_list[i].x;

int pixel_points_size = img_pts_container[y][x].size();

if (pixel_points_size > 0)

{

VPnPData pnp;

pnp.x = 0;

pnp.y = 0;

pnp.z = 0;

//corresponding 2D point

pnp.u = img_2d_list[i].x;

pnp.v = img_2d_list[i].y;

//3D point (averaged), TODO what if they are far to each other?

for (size_t j = 0; j < pixel_points_size; j++)

{

pnp.x += img_pts_container[y][x][j].x;

pnp.y += img_pts_container[y][x][j].y;

pnp.z += img_pts_container[y][x][j].z;

}

pnp.x = pnp.x / pixel_points_size;

pnp.y = pnp.y / pixel_points_size;

pnp.z = pnp.z / pixel_points_size;

//direction of corresponding 2D point

pnp.direction = camera_direction_list[i];

//direction of the projected point of the 3D point

pnp.direction_lidar = lidar_direction_list[i];

//the idx of the 3D line which the 3D point belongs to

pnp.number = lidar_2d_number[i];

float theta = pnp.direction.dot(pnp.direction_lidar);

if (theta > direction_theta_min_ || theta < direction_theta_max_) //-30-+30, 150-270 deg.

{

pnp_list.push_back(pnp);

}

}

}

}对于3D 点云的每一个线,把它的点都根据当前外参Tcl的初值,内参K投影到image上,获得一个像素坐标,然后查询kdree找到最近的几个刚才通过canny算法提取的2D像素,用这几个像素可以计算出一个均值,直线向量,就可以获得了一组特征匹配了:

3D点+2D点/2D点向量。

归根到底,获得的还是(lidar)点和(image)点之间的匹配关系,当然了也可以认为是(lidar)点和(image)线的匹配关系,因为后者保存了直线向量。也有别的方案保存的3D线和2D线都是以两个端点描述的,是真正意义上的(lidar)线和(image)线之间的匹配。

4. 优化模型

损失函数就是常见的点-线距离或者点-点距离了。对于3D点,根据待优化Tcl转到cam系,转成像素坐标,去畸变,在和匹配的2D点求出像素距离作为residual。

vpnp_calib(VPnPData p) {pd = p;}

template <typename T>

bool operator()(const T *_q, const T *_t, T *residuals) const

{

//camera param

Eigen::Matrix<T, 3, 3> innerT = inner.cast<T>();

Eigen::Matrix<T, 4, 1> distorT = distor.cast<T>();

const T &fx = innerT.coeffRef(0, 0);

const T &cx = innerT.coeffRef(0, 2);

const T &fy = innerT.coeffRef(1, 1);

const T &cy = innerT.coeffRef(1, 2);

//initial value of Tcl

Eigen::Quaternion<T> q_incre{_q[3], _q[0], _q[1], _q[2]};

Eigen::Matrix<T, 3, 1> t_incre{_t[0], _t[1], _t[2]};

//project 3D point onto pixel frame

Eigen::Matrix<T, 3, 1> p_l(T(pd.x), T(pd.y), T(pd.z));

Eigen::Matrix<T, 3, 1> p_c = q_incre.toRotationMatrix() * p_l + t_incre;

Eigen::Matrix<T, 3, 1> p_2 = innerT * p_c;

T uo = p_2[0] / p_2[2];

T vo = p_2[1] / p_2[2];

//undistort

T xo = (uo - cx) / fx;

T yo = (vo - cy) / fy;

T r2 = xo * xo + yo * yo;

T r4 = r2 * r2;

T distortion = 1.0 + distorT[0] * r2 + distorT[1] * r4;

T xd = xo * distortion + (distorT[2] * xo * yo + distorT[2] * xo * yo) + distorT[3] * (r2 + xo * xo + xo * xo);

T yd = yo * distortion + distorT[3] * xo * yo + distorT[3] * xo * yo + distorT[2] * (r2 + yo * yo + yo * yo);

T ud = fx * xd + cx;

T vd = fy * yd + cy;

if (T(pd.direction(0)) == T(0.0) && T(pd.direction(1)) == T(0.0))

{

residuals[0] = ud - T(pd.u);

residuals[1] = vd - T(pd.v);

}

else

{

residuals[0] = ud - T(pd.u);

residuals[1] = vd - T(pd.v);

Eigen::Matrix<T, 2, 2> I = Eigen::Matrix<float, 2, 2>::Identity().cast<T>();

Eigen::Matrix<T, 2, 1> n = pd.direction.cast<T>();

Eigen::Matrix<T, 1, 2> nt = pd.direction.transpose().cast<T>();

Eigen::Matrix<T, 2, 2> V = n * nt;

V = I - V;

Eigen::Matrix<T, 2, 2> R = Eigen::Matrix<float, 2, 2>::Zero().cast<T>();

R.coeffRef(0, 0) = residuals[0];

R.coeffRef(1, 1) = residuals[1];

R = V * R * V.transpose();

residuals[0] = R.coeffRef(0, 0);

residuals[1] = R.coeffRef(1, 1);

}

return true;

}

边栏推荐

- Is there a completely independent localization database technology

- Inherit day01

- My C language learning records (blue bridge) -- files and file input and output

- Shell 传递参数

- JS regular filtering and adding image prefixes in rich text

- 【paddle】加载模型权重后预测报错AttributeError: ‘Model‘ object has no attribute ‘_place‘

- What are the principles of software design (OCP)

- Single instance mode of encapsulating PDO with PHP in spare time

- An article about liquid template engine

- SD card reports an error "error -110 whilst initializing SD card

猜你喜欢

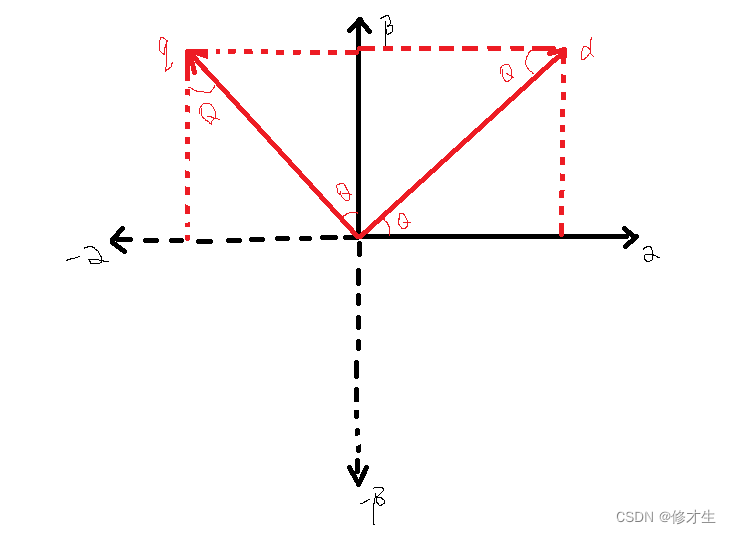

电机控制反Park变换和反Clarke变换公式推导

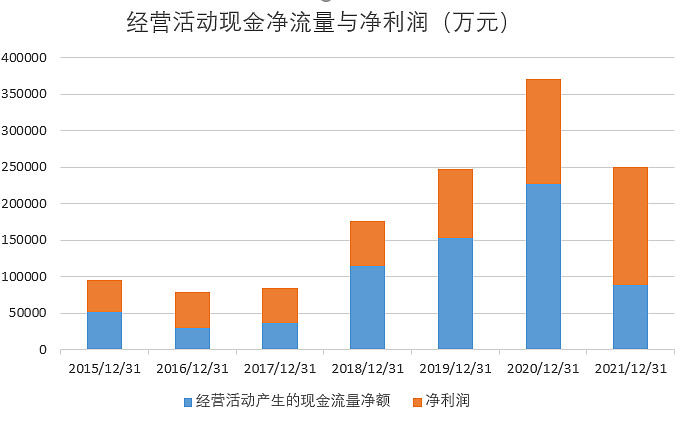

What is the investment value of iFLYTEK, which does not make money?

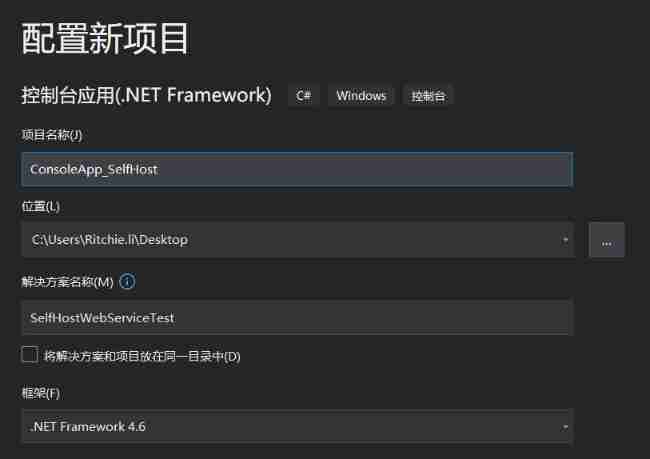

C # create self host webservice

蓝色样式商城网站页脚代码

![[ruoyi] enable Mini navigation bar](/img/28/a8b38aecd90c8ddc98333f0e2d3eab.png)

[ruoyi] enable Mini navigation bar

银行核心业务系统性能测试方法

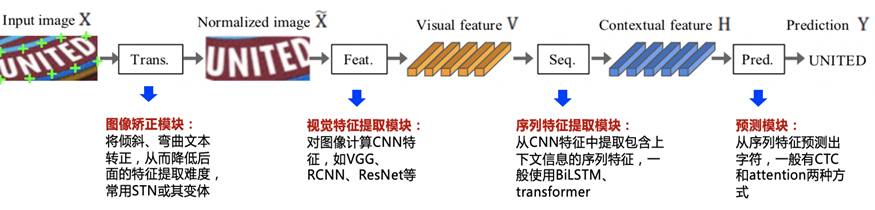

OCR文字識別方法綜述

Codeworks 5 questions per day (1700 average) - day 6

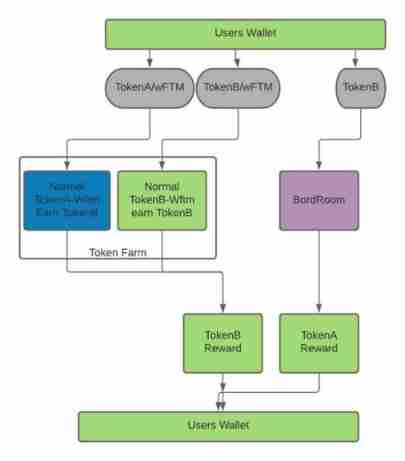

Tomb. Weekly update of Finance (February 7 - February 13)

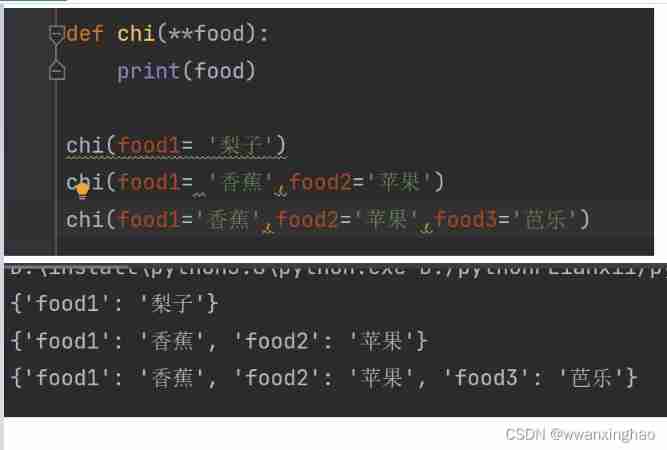

1. Dynamic parameters of function: *args, **kwargs

随机推荐

Leetcode problem solving -- 98 Validate binary search tree

银行核心业务系统性能测试方法

Sign SSL certificate as Ca

华为、H3C、思科命令对比,思维导图形式从基础、交换、路由三大方向介绍【转自微信公众号网络技术联盟站】

resulttype和resultmap的区别和应用场景

Self made CA certificate and SSL certificate using OpenSSL

Item 10: Prefer scoped enums to unscoped enums.

How to write compile scripts compatible with arm and x86 (Makefile, cmakelists.txt, shell script)

js 正则过滤和增加富文本中图片前缀

Who is the winner of PTA

What are the principles of software design (OCP)

Daily question brushing plan-2-13 fingertip life

Erreur de la carte SD "erreur - 110 whilst initialisation de la carte SD

three.js网页背景动画液态js特效

EDCircles: A real-time circle detector with a false detection control 翻译

Polymorphic day02

Computer graduation project asp Net fitness management system VS development SQLSERVER database web structure c programming computer web page source code project

[ruoyi] set theme style

Leetcode problem solving -- 108 Convert an ordered array into a binary search tree

[pointer training - eight questions]