当前位置:网站首页>Alibaba cloud side: underlying details in concurrent scenarios - pseudo sharing

Alibaba cloud side: underlying details in concurrent scenarios - pseudo sharing

2022-07-06 13:03:00 【Java misty rain】

Three level cache architecture

as everyone knows , To ease memory and CPU The contradiction of speed mismatch between , Cache is introduced , It's a lot smaller than memory , But switching is much faster than memory .

We have drawn such a hierarchical storage architecture before :

https://gitee.com/veal98/images/raw/master/img/20210415105520.png

in fact , The cache is still subdivided , Also known as Three level cache structure : Class A (L1) cache 、 second level (L2) cache 、 Level three (L3) cache

The closer we get CPU The cache of , The faster the speed. , The smaller the capacity . therefore L1 The smallest cache size but the fastest ;L3 The cache capacity is the largest and the speed is the slowest

When CPU When performing an operation , It will go first L1 Cache the data needed to find 、 If you don't find it, go again L2 cache 、 And then there was L3 cache , If the last three levels of cache are not hit , that CPU It's time to access memory .

obviously ,CPU The farther you go , The longer it takes . So try to make sure that the data exists L1 Cache can improve the running speed in case of large amount of computation .

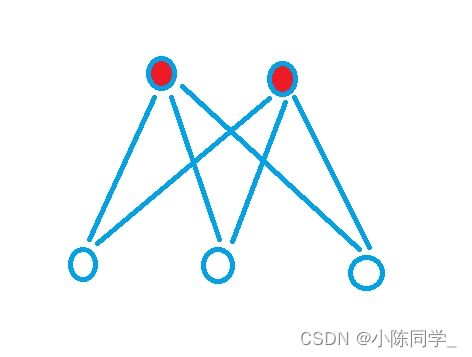

It should be noted that ,CPU Corresponding usage relationship with L3 cache and memory :

L1 and L2 Can only be a single CPU Core use

L3 Can be used by all... On a single slot CPU Core sharing

The memory consists of all the... On all the slots CPU Core sharing

As shown in the figure below :

in addition , How is the data in the three-level cache space organized ? let me put it another way , What is the storage form of data in the three-level cache ?

Cache Line( Cache line )!

The basic storage unit in the cache is Cache Line.

Every Cache Line Usually 64 byte , in other words , One Java Of long The type variable is 8 byte , One Cache Line You can save 8 individual long Variable of type .

So do you guys see ~ The data in the cache is not stored and organized as a single variable , It's multiple variables on one line .

Pseudo sharing problem False Sharing

Having said so much, it seems that the problem of pseudo sharing has not been touched yet , Don't worry. , We are very close to the truth ~

In the process of running the program , Because the basic unit of cache Cache Line yes 64 byte , So every time the cache is updated, it will load continuous... From memory 64 Bytes .

If you are visiting a long Type array , When a value in an array, such as v1 When loaded into the cache , Next address adjacent 7 Elements will also be loaded into the cache .( This also explains why our arrays are always so fast , A discrete data structure like a linked list , You can't enjoy this bonus ).

But, This wave of dividends is likely to lead to disaster .

for instance , If we define two long Variable of type a and b, Their addresses in memory are next to each other , What will happen ?

If we want to visit a Words , that b It will also be stored in the cache .

Confused, confused , Is there any problem with this , There seems to be nothing wrong with it , How nice The characteristics of

Come on, come on , Let's go straight to the previous example

Think back to the above CPU Corresponding usage relationship with L3 cache and memory , Imagine this , If one CPU The core thread T1 In the face of a Make changes , the other one CPU The core thread T2 But I'm right b To read .

When T1 modify a When , In addition to a Load into Cache Line, And enjoy a wave of dividends , hold b It is also loaded into T1 Where CPU The core Cache Line In the to , Right .

according to MESI Cache consistency protocol , After revising a The latter one Cache Line The state of is M(Modify, The modified ), And everything else includes a Of Cache Line Medium a It is not the latest value , So it will become I state (Invalid, Invalid state )

such , When T2 To read b when , EH , Find out where he is CPU The core corresponds to this Cache Line It's no longer working ,mmp, It takes a long time to reload from memory .

The problem is obvious ,b and a It doesn't matter , Every time because a The update of caused him to re read from memory , Slow down . This is pseudo sharing

On the surface, a and b They are all operated by independent threads , And there's no relationship between the two operations . It's just that they share a cache line , But all the competitive conflicts come from sharing .

Define pseudo sharing with a more written explanation : When multiple threads modify independent variables , If these variables share the same cache row , Will inadvertently affect each other's performance , As a result, the cache row feature cannot be fully utilized , This is pseudo sharing .

Pseudo sharing solution

Let's take an example to see how long a piece of pseudo shared code takes , As shown below , Let's define a Test class , It contains two long The variable of , Use two threads to auto increment these two variables 1 100 million times , This code takes time 5625ms

For pseudo sharing , There are generally two ways , In fact, all thoughts are the same :

1)padding: Is to increase the spacing between array elements , Make the elements accessed by different threads on different cache lines , Space for time

As mentioned above , One 64 Bytes of Cache Line You can save 8 individual long Variable of type , We are a and b these two items. long Add between variables of type 7 individual long type , bring a and b In a different Cache Line above :

class Pointer {

volatile long a;

long v1, v2, v3, v4, v5, v6, v7;

volatile long b;

}

Run the program again , You will find that the output time is magically shortened to 955ms

2)JDK1.8 Provides @Contended annotation : Is to put us manually padding The operations of are encapsulated in this annotation , This annotation can be placed on a class or a field , There is no more explanation here

class Test {

@Contended

volatile long a; // fill a

volatile long b;

}

It should be noted that , This annotation is invalid by default , Need to be in JVM Start parameters plus XX:-RestrictContended Will take effect

Finally, put the recitation version of this problem :

🥸 interviewer : Let's talk about pseudo sharing

veal : The pseudo sharing problem is actually caused by the characteristics of cache , The data in the L3 cache is not stored separately by one variable , Its basic storage unit is Cache Line, Generally one Cache Line Its size is 64 byte , in other words , One Cache Line You can save 8 individual 8 Bytes of long Type variable

That's because the basic unit of cache is 64 Bytes of Cache Line, So , Cache the data read from memory at one time 64 byte . let me put it another way , If cpu To read a long An array of types , Reading one of these elements will also load the next seven elements of other adjacent addresses into Cache Line In the to .

This leads to a problem , for instance , We defined two

longVariable of type a and b, They have nothing to do with , But their addresses in memory are next to each other , If one CPU The core thread T1 In the face of a Make changes , the other one CPU The core thread T2 But I'm right b To read .So when T1 modify a When , In addition to a Load into Cache Line, Will the b It is also loaded into T1 Where CPU The core Cache Line In the to , Right .

according to MESI Cache consistency protocol , After revising a The latter one Cache Line The state of is M(Modify, The modified ), And everything else includes a Of Cache Line Medium a It is not the latest value , So it will become I state (Invalid, Invalid state )

such , When T2 To read b when , He will find out , Where he is CPU The core corresponds to this Cache Line It's no longer working , In this way, it will take a long time to reload from memory .

in other words ,b and a It doesn't matter , Every time because a The update of caused him to re read from memory , Slow down . This is pseudo sharing

For pseudo sharing , There are generally two ways , In fact, all thoughts are the same :

1)padding: Is to increase the spacing between array elements , Make the elements accessed by different threads on different cache lines , Space for time . For example a and b And then define 7 individual long Variable of type , bring a and b Not in one Cache Line On , In this way, when modifying a When b Situated Cache Line It won't be affected

2)JDK 1.8 Provides

@Contendedannotation , Is to put us manually padding The operations of are encapsulated in this annotation , Add this annotation to the field a perhaps b The top of the is OK

If this article helps you , Don't forget to give me a 3 even , give the thumbs-up , forward , Comment on ,

I'll see you next time ! How to get answers : Liked Commented Closed ~

Learn more knowledge and skills , Follow up with private bloggers (03)

边栏推荐

- Role movement in the first person perspective

- What are the functions and features of helm or terrain

- Shortest Hamilton path (pressure DP)

- Experience summary of autumn recruitment of state-owned enterprises

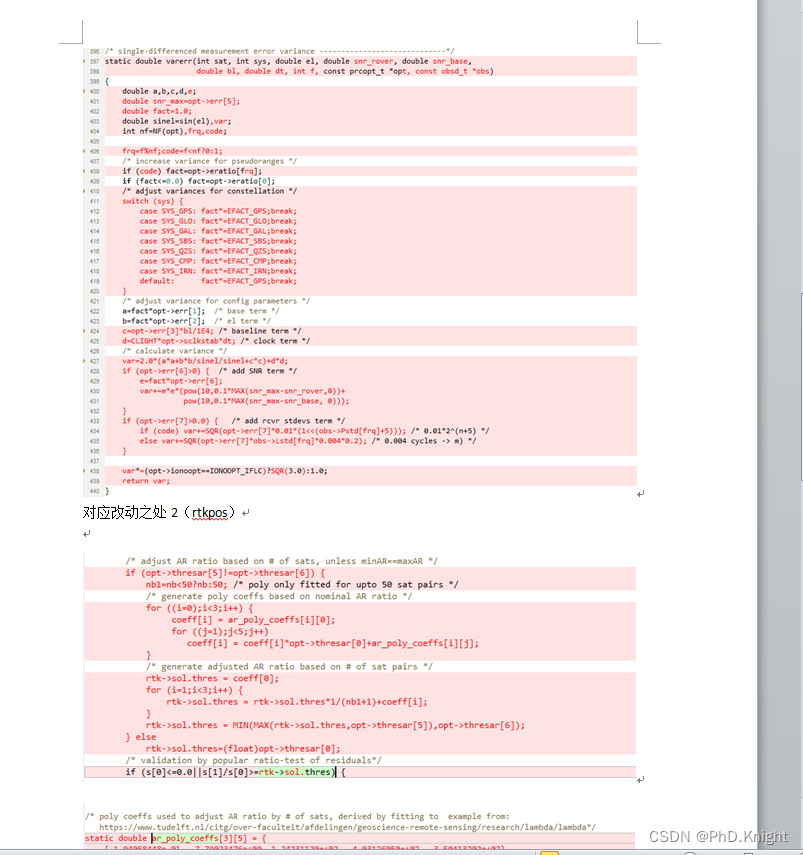

- 【rtklib】在rtk下使用抗差自适应卡尔曼滤波初步实践

- Pride-pppar source code analysis

- WSL common commands

- MySQL backup -- common errors in xtrabackup backup

- 编辑距离(多源BFS)

- Compile GDAL source code with nmake (win10, vs2022)

猜你喜欢

基本Dos命令

2年经验总结,告诉你如何做好项目管理

RTKLIB: demo5 b34f.1 vs b33

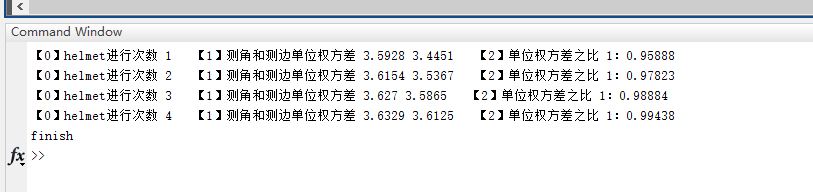

【GNSS数据处理】赫尔默特(helmert)方差分量估计解析及代码实现

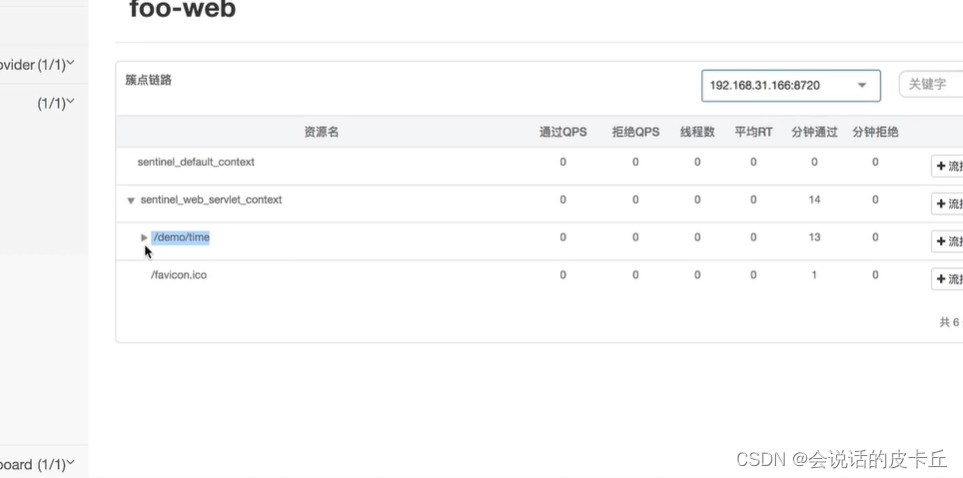

阿里云微服务(三)Sentinel开源流控熔断降级组件

染色法判定二分图

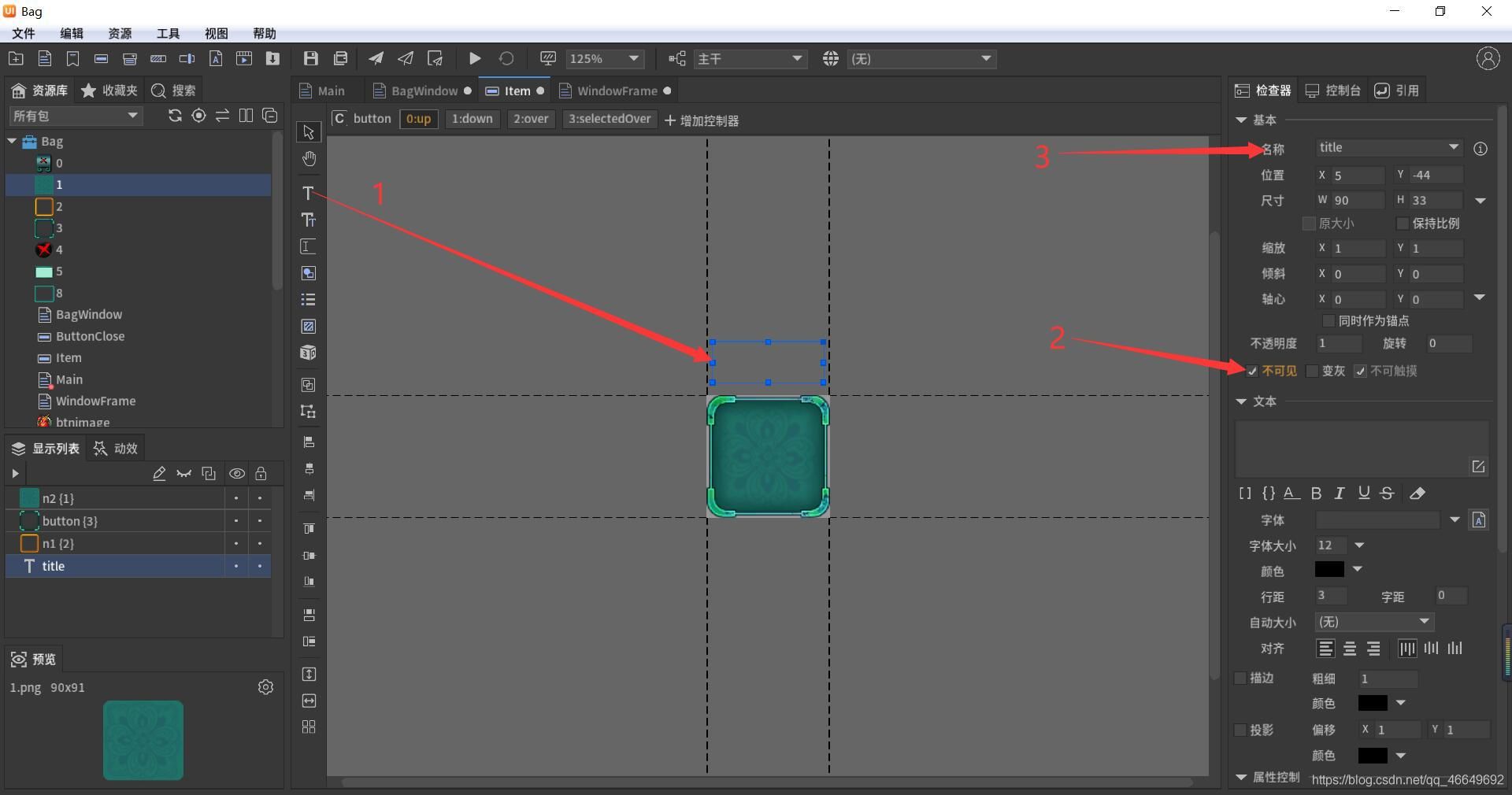

Fabrication of fairygui simple Backpack

The service robots that have been hyped by capital and the Winter Olympics are not just a flash in the pan

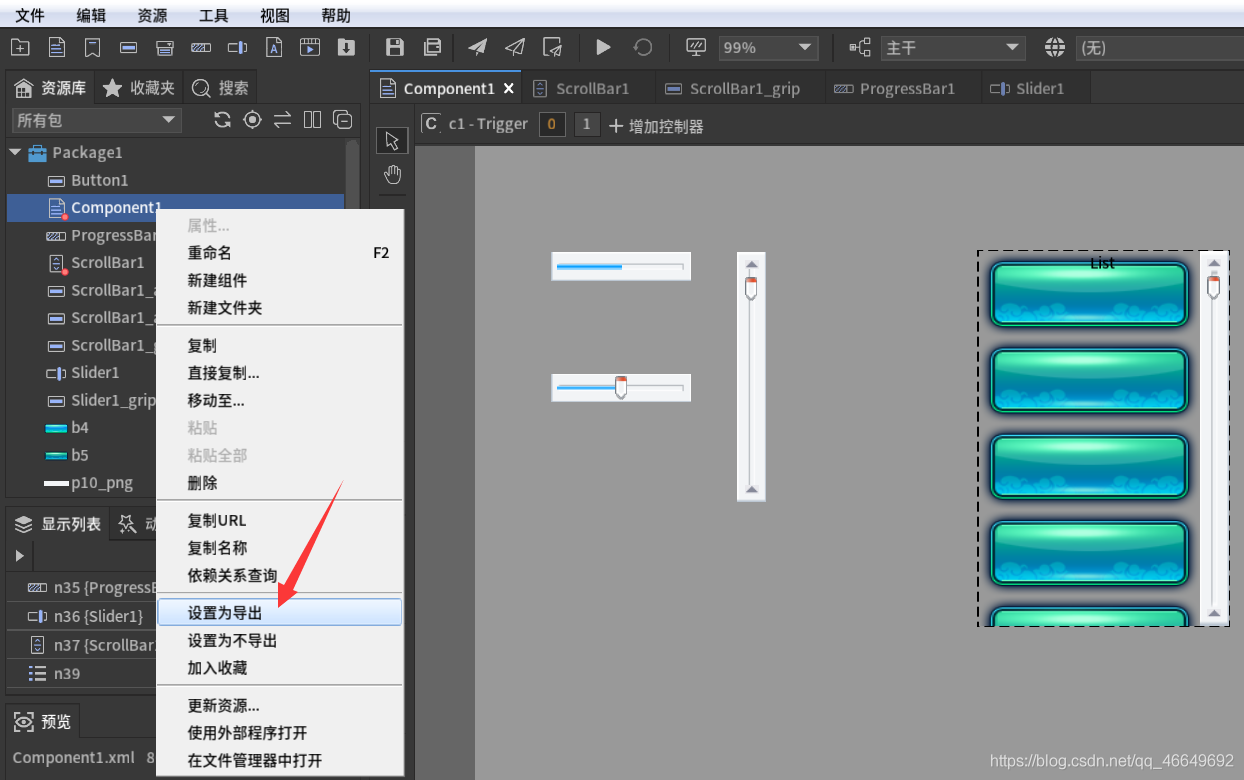

Fgui project packaging and Publishing & importing unity & the way to display the UI

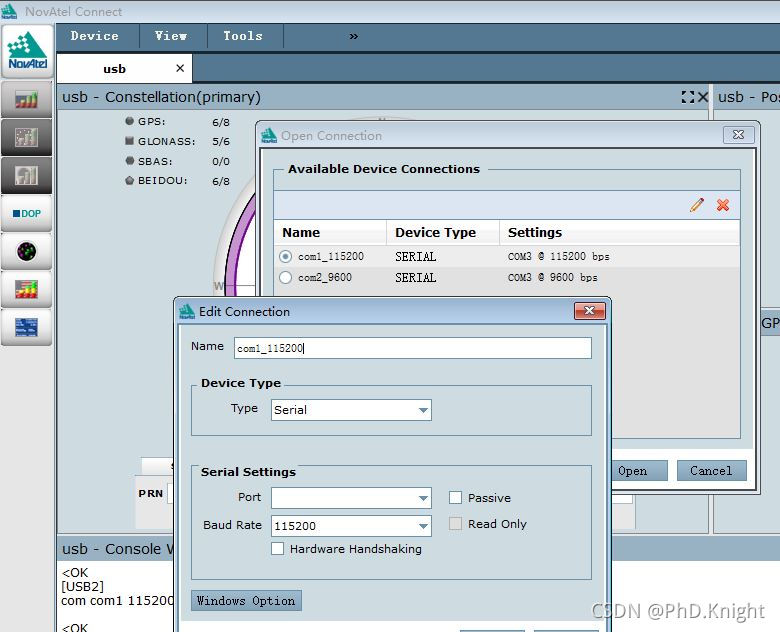

Novatel board oem617d configuration step record

随机推荐

[algorithm] sword finger offer2 golang interview question 9: subarray with product less than k

Edit distance (multi-source BFS)

Rt-ppp test using rtknavi

What are the advantages of using SQL in Excel VBA

341. Flatten nested list iterator

Mixed use of fairygui button dynamics

使用rtknavi进行RT-PPP测试

Agile development helps me

Ten minutes to thoroughly master cache breakdown, cache penetration, cache avalanche

Mysql database reports an error: row size too large (> 8126) Changing some columns to TEXT or BLOB or using ROW_ FORMAT=DY

平衡二叉树详解 通俗易懂

阿里云一面:并发场景下的底层细节 - 伪共享问题

[GNSS] robust estimation (robust estimation) principle and program implementation

The port is occupied because the service is not shut down normally

121道分布式面试题和答案

Matlab读取GNSS 观测值o文件代码示例

[算法] 剑指offer2 golang 面试题5:单词长度的最大乘积

Chromatic judgement bipartite graph

Liste des boucles de l'interface graphique de défaillance

Wechat applet development experience