Problem solving: RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB

2022-07-08 02:20:00 【Programming newbird】

Three fixes commonly suggested online

Method 1:

Reduce the batch size

Open the cfg file, search for batch or batchsize, and lower the value (for example to batchsize=1), then run the training again.
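The edit described above can also be scripted. The following is only a sketch: it assumes the cfg file uses plain `key=value` lines, and the helper name `set_batch_size` and the sample cfg text are made up for illustration. Many projects pass the batch size as a command-line argument instead, in which case no file edit is needed.

```python
import re

def set_batch_size(cfg_text: str, new_value: int) -> str:
    """Return cfg_text with every `batch=` / `batchsize=` / `batch_size=` line set to new_value."""
    # Match the key at the start of a line and capture everything up to the number.
    pattern = re.compile(
        r"^([ \t]*batch(?:[_-]?size)?[ \t]*=[ \t]*)\d+",
        re.IGNORECASE | re.MULTILINE,
    )
    # A function replacement avoids ambiguity between the group reference and the new digits.
    return pattern.sub(lambda m: f"{m.group(1)}{new_value}", cfg_text)

# Hypothetical cfg contents, for illustration only.
example_cfg = "[net]\nbatch=64\nsubdivisions=16\nwidth=640\n"
print(set_batch_size(example_cfg, 1))
```

Only lines whose key starts with `batch` are touched, so a setting such as `subdivisions=16` is left unchanged.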

Method 2:

If lowering the batch size does not solve the problem, or you do not want to change it, disable gradient computation instead.

PS: find the line of code where the error is reported and wrap it in the following context manager, so that no gradients are tracked for it. This only suits code that does not need a backward pass, such as validation or inference.

with torch.no_grad():
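As a minimal sketch of how this looks in practice, the snippet below runs a forward pass inside `torch.no_grad()`. The tiny `nn.Linear` model and the random batch are placeholders invented for the example, not the code from the original error; in a real script the block would wrap the validation or inference step.

```python
import torch
from torch import nn

# Hypothetical tiny model and batch, used only to illustrate the pattern.
model = nn.Linear(4, 2)
batch = torch.randn(8, 4)

model.eval()  # put layers such as dropout and batch norm into inference mode
with torch.no_grad():  # note the trailing colon; the wrapped code must be indented
    preds = model(batch)

# No autograd graph was recorded, so the output does not require gradients.
print(preds.requires_grad)  # False
```

Because no graph is stored, the intermediate activations that a backward pass would need are not kept, which is where the memory saving comes from. The training step itself cannot be wrapped this way, since `loss.backward()` needs those gradients.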

Method 3 :

Free cached memory

PS: directly above the line of code that reports the error, add the following two lines to release cached GPU memory that is no longer in use:

if hasattr(torch.cuda, 'empty_cache'):

    torch.cuda.empty_cache()

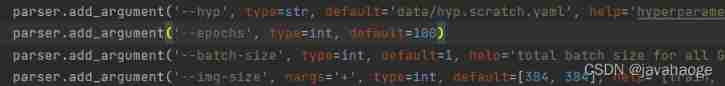

Method 4: My solution

I did not use any of the methods above, mainly because I am a beginner and did not know where to make those changes. After reading many solutions online, I tried the following one; training then ran and the GPU out-of-memory error was gone.

Solution: reduce img-size

I reduced img-size from the original [640,640], as shown in the figure above, and the problem was solved.
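A rough way to see why this helps: for a convolutional network, activation memory grows approximately in proportion to the number of input pixels, so shrinking a square input reduces it quadratically. The sketch below only does that arithmetic; the function name `pixel_ratio` and the target sizes 320 and 416 are illustrative assumptions, not the values used in this post.

```python
def pixel_ratio(old_size: int, new_size: int) -> float:
    """Return how many times fewer pixels a square image has after resizing."""
    return (old_size * old_size) / (new_size * new_size)

# Halving the side length of a 640x640 input quarters the pixel count.
print(pixel_ratio(640, 320))  # 4.0

# A milder reduction still helps noticeably.
print(round(pixel_ratio(640, 416), 2))
```

This is only an estimate of the activation part of the footprint; model weights and optimizer state do not shrink with the image size.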

边栏推荐

- Applet running under the framework of fluent 3.0

- Yolov5 Lite: ncnn+int8 deployment and quantification, raspberry pie can also be real-time

- 魚和蝦走的路

- Height of life

- Deep understanding of softmax

- Thread deadlock -- conditions for deadlock generation

- 关于TXE和TC标志位的小知识

- 实现前缀树

- Common disk formats and the differences between them

- leetcode 866. Prime Palindrome | 866. prime palindromes

猜你喜欢

leetcode 869. Reordered Power of 2 | 869. Reorder to a power of 2 (state compression)

Ml self realization / logistic regression / binary classification

Yolov5 Lite: yolov5, which is lighter, faster and easy to deploy

力争做到国内赛事应办尽办,国家体育总局明确安全有序恢复线下体育赛事

JVM memory and garbage collection-3-runtime data area / method area

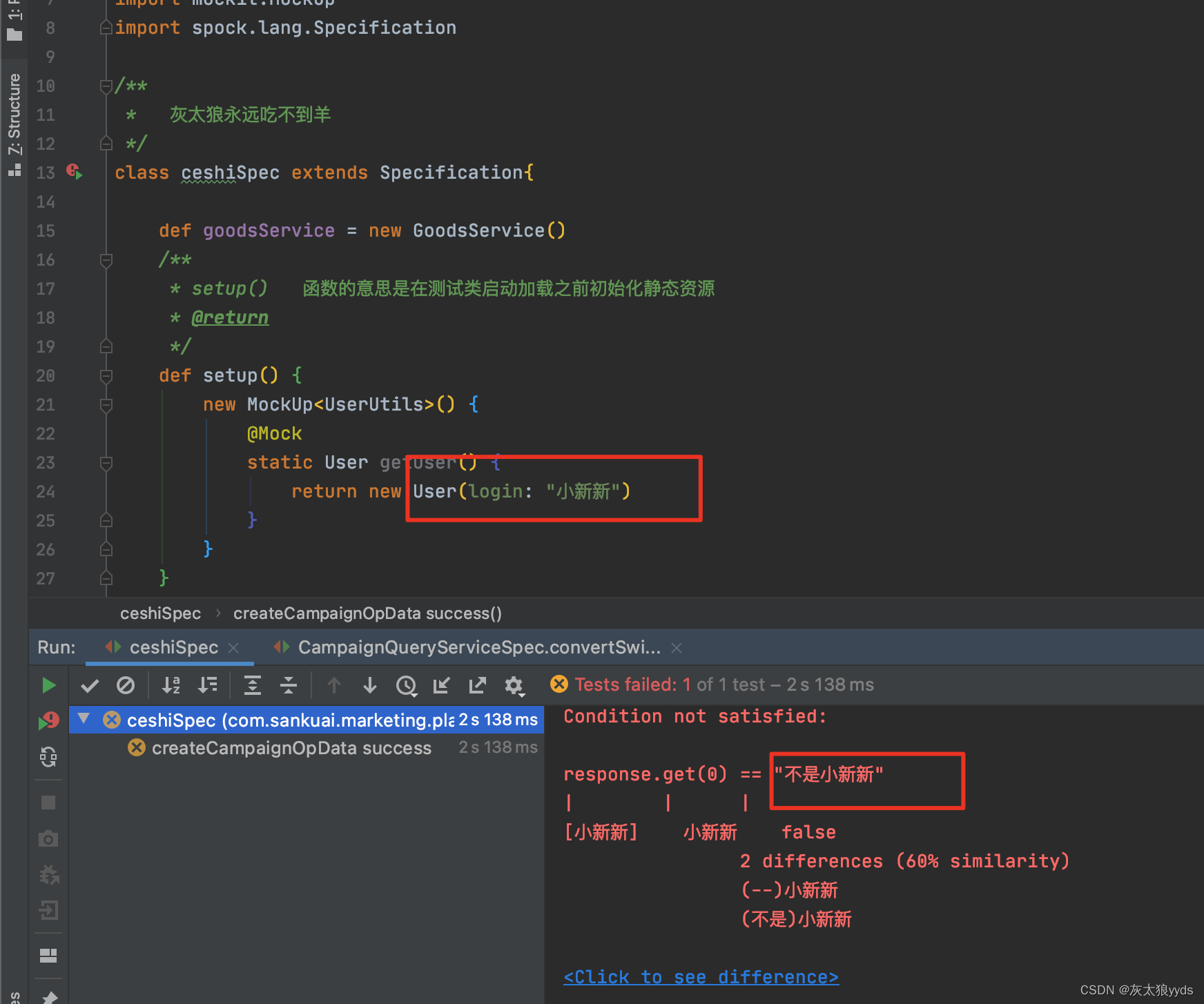

Spock单元测试框架介绍及在美团优选的实践_第二章(static静态方法mock方式)

Gaussian filtering and bilateral filtering principle, matlab implementation and result comparison

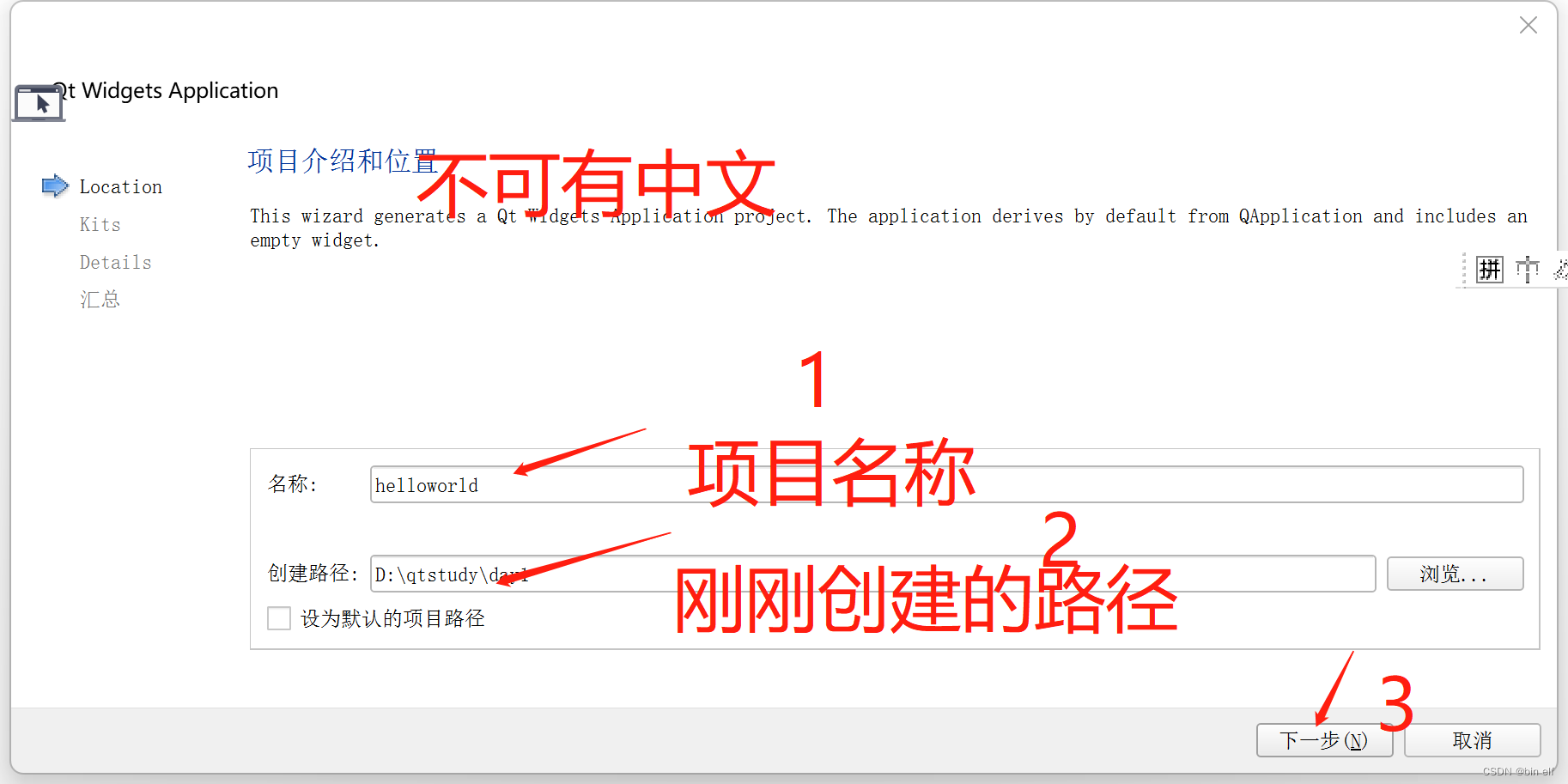

QT -- create QT program

From starfish OS' continued deflationary consumption of SFO, the value of SFO in the long run

数据链路层及网络层协议要点

随机推荐

Unity 射线与碰撞范围检测【踩坑记录】

image enhancement

Leetcode question brushing record | 27_ Removing Elements

Beaucoup d'enfants ne savent pas grand - chose sur le principe sous - jacent du cadre orm, non, ice River vous emmène 10 minutes à la main "un cadre orm minimaliste" (collectionnez - le maintenant)

See how names are added to namespace STD from cmath file

谈谈 SAP 系统的权限管控和事务记录功能的实现

1331:【例1-2】后缀表达式的值

WPF custom realistic wind radar chart control

Analysis ideas after discovering that the on duty equipment is attacked

Deeppath: a reinforcement learning method of knowledge graph reasoning

很多小伙伴不太了解ORM框架的底层原理,这不,冰河带你10分钟手撸一个极简版ORM框架(赶快收藏吧)

th:include的使用

Literature reading and writing

Force buckle 4_ 412. Fizz Buzz

Random walk reasoning and learning in large-scale knowledge base

Learn CV two loss function from scratch (2)

PHP calculates personal income tax

Force buckle 5_ 876. Intermediate node of linked list

internship:完成新功能增设接口

《通信软件开发与应用》课程结业报告