
[Flink] CDH/CDP Flink on YARN log configuration

2022-07-06 11:31:00 【kiraraLou】

Preface

Because Flink applications are mostly long-running jobs, the jobmanager.log and taskmanager.log files can easily grow to several GB, which can cause problems when you view their contents in the Flink Dashboard. This article describes how to enable rolling logging for Flink's jobmanager.log and taskmanager.log.

The configuration in this article was done in a CDH/CDP environment, and it applies to both Flink clusters and Flink on YARN.

Configuring log4j

By default, Flink logs through Log4j. The relevant configuration files are:

- log4j-cli.properties: used by the Flink command-line client (for example, flink run)
- log4j-yarn-session.properties: used by the Flink command-line client when starting a YARN session (yarn-session.sh)
- log4j.properties: used for JobManager/TaskManager logs (both standalone and YARN)
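To see which of these files a JobManager or TaskManager JVM actually picked up, it can help to print the logging-related system properties from inside that JVM. The sketch below is a minimal example that assumes Flink's launch scripts pass -Dlog.file (the value read by ${sys:log.file} in log4j.properties) and -Dlog4j.configurationFile to the process; the class name LogSettingsReport is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: reports the logging-related system properties of the current JVM.
// Assumption: Flink's launch scripts set -Dlog.file=... and -Dlog4j.configurationFile=...
public class LogSettingsReport {

    // System properties assumed to be passed to JobManager/TaskManager JVMs.
    static final String[] KEYS = {"log.file", "log4j.configurationFile"};

    // Returns each key with its value, or "<not set>" if the property is absent.
    static Map<String, String> collect() {
        Map<String, String> result = new LinkedHashMap<>();
        for (String key : KEYS) {
            result.put(key, System.getProperty(key, "<not set>"));
        }
        return result;
    }

    public static void main(String[] args) {
        // Prints one "key = value" line per property, in KEYS order.
        collect().forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

Calling collect() from code that runs on the cluster (for example, a rich function's open method) and logging the result shows the values as seen by the JobManager or TaskManager rather than by the client.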

Cause

By default, the log4j.properties shipped with CSA (Cloudera Streaming Analytics) Flink does not configure a rolling file appender.

How to configure

1. Modify the flink-conf/log4j.properties parameters

Go to Cloudera Manager -> Flink -> Configuration -> Flink Client Advanced Configuration Snippet (Safety Valve) for flink-conf/log4j.properties.

2. Insert the following:

```properties
monitorInterval=30

# This affects logging for both user code and Flink
rootLogger.level = INFO
rootLogger.appenderRef.file.ref = MainAppender

# Uncomment this if you want to _only_ change Flink's logging
#logger.flink.name = org.apache.flink
#logger.flink.level = INFO

# The following lines keep the log level of common libraries/connectors on
# log level INFO. The root logger does not override this. You have to manually
# change the log levels here.
logger.akka.name = akka
logger.akka.level = INFO
logger.kafka.name = org.apache.kafka
logger.kafka.level = INFO
logger.hadoop.name = org.apache.hadoop
logger.hadoop.level = INFO
logger.zookeeper.name = org.apache.zookeeper
logger.zookeeper.level = INFO
logger.shaded_zookeeper.name = org.apache.flink.shaded.zookeeper3
logger.shaded_zookeeper.level = INFO

# Log all infos in the given file
appender.main.name = MainAppender
appender.main.type = RollingFile
appender.main.append = true
appender.main.fileName = ${sys:log.file}
appender.main.filePattern = ${sys:log.file}.%i
appender.main.layout.type = PatternLayout
appender.main.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n
appender.main.policies.type = Policies
appender.main.policies.size.type = SizeBasedTriggeringPolicy
appender.main.policies.size.size = 100MB
appender.main.policies.startup.type = OnStartupTriggeringPolicy
appender.main.strategy.type = DefaultRolloverStrategy
appender.main.strategy.max = ${env:MAX_LOG_FILE_NUMBER:-10}

# Suppress the irrelevant (wrong) warnings from the Netty channel handler
logger.netty.name = org.jboss.netty.channel.DefaultChannelPipeline
logger.netty.level = OFF
```
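To get a feel for how much disk the appender above can use, the following Java sketch models a size-triggered rolling file with a bounded number of archives, mirroring size = 100MB and strategy.max = ${env:MAX_LOG_FILE_NUMBER:-10}. It is a simplified model, not Log4j code: it rolls just before a write would push the active file past the threshold and it ignores OnStartupTriggeringPolicy. The class name RolloverModel is hypothetical, and since Log4j's exact size check may let a file exceed the limit by about one event, treat the bound as approximate.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Simplified model (not Log4j code) of SizeBasedTriggeringPolicy + DefaultRolloverStrategy
// with a %i file pattern: roll when the active file would exceed maxFileBytes, keep at most
// maxArchives rolled files, and delete the oldest one beyond that.
public class RolloverModel {

    static final long MB = 1024L * 1024L;

    private final long maxFileBytes;                         // rollover threshold (100MB above)
    private final int maxArchives;                           // strategy.max (10 by default above)
    private final Deque<Long> archives = new ArrayDeque<>(); // sizes of rolled files, newest first
    private long activeBytes = 0;                            // size of the live log file

    RolloverModel(long maxFileBytes, int maxArchives) {
        this.maxFileBytes = maxFileBytes;
        this.maxArchives = maxArchives;
    }

    // Mirrors ${env:MAX_LOG_FILE_NUMBER:-10}: use the variable if set and non-empty, else 10.
    static int maxArchivesFromEnv() {
        String value = System.getenv("MAX_LOG_FILE_NUMBER");
        return (value == null || value.isEmpty()) ? 10 : Integer.parseInt(value.trim());
    }

    // Appends one log event, rolling first if the event would not fit in the active file.
    void write(long eventBytes) {
        if (activeBytes > 0 && activeBytes + eventBytes > maxFileBytes) {
            archives.addFirst(activeBytes);
            if (archives.size() > maxArchives) {
                archives.removeLast(); // the oldest rolled file is deleted
            }
            activeBytes = 0;
        }
        activeBytes += eventBytes;
    }

    int archiveCount() {
        return archives.size();
    }

    long totalBytesOnDisk() {
        long total = activeBytes;
        for (long size : archives) {
            total += size;
        }
        return total;
    }

    // Upper bound in this model: the live file plus maxArchives full archives.
    static long upperBoundBytes(long maxFileBytes, int maxArchives) {
        return (maxArchives + 1L) * maxFileBytes;
    }

    public static void main(String[] args) {
        int max = maxArchivesFromEnv();
        long bound = upperBoundBytes(100 * MB, max);
        System.out.println("max archives = " + max + ", bound per log file = " + bound / MB + " MB");
    }
}
```

With the defaults, each of jobmanager.log and taskmanager.log is bounded in this model at 11 times 100 MB, roughly 1.1 GB, regardless of how long the job runs.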

3. Deploy the client configuration

Save the configuration, then redeploy the Flink client configuration via CM -> Flink -> Actions -> Deploy client.

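Notes

Note 1: With the settings above, jobmanager.log and taskmanager.log are rolled whenever they exceed 100 MB, and also at process startup if the existing file is not empty (OnStartupTriggeringPolicy). At most 10 rolled files are kept per log (or the value of the MAX_LOG_FILE_NUMBER environment variable, if set); once that limit is reached, the oldest file is deleted. Retention by age or by total size (for example, keeping old files for 7 days or capping them at 5000 MB in total) is not configured here; that would require adding a Delete action to the rollover strategy.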

Note 2: The log4j.properties above does not control jobmanager.err/out and taskmanager.err/out. If your application explicitly prints results to stdout/stderr, these files can fill up the file system after the job has been running for a long time. We recommend recording any messages or results through the log4j logging framework instead of printing them.

Note 3: Although this article makes the change through Cloudera Manager in a CDP environment, I ran into a bug: the CDP environment already ships a default configuration for this file, so the safety-valve settings conflict with it even after the change. In the end, I edited the /etc/flink/conf/log4j.properties file directly.
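After editing /etc/flink/conf/log4j.properties by hand, one quick way to confirm that the rolling appender is actually defined is to load the file with java.util.Properties and check the relevant keys. This is a minimal sketch; the class RollingConfigCheck and the exact keys it checks are assumptions based on the configuration in this article, not a Flink or Cloudera tool.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

// Hypothetical sanity check (not part of Flink or CDP): does a log4j.properties file
// route the root logger to a RollingFile appender defined under appender.main?
public class RollingConfigCheck {

    // Loads a properties file; IOException is wrapped so callers need no throws clause.
    static Properties load(Path file) {
        try (Reader in = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            Properties props = new Properties();
            props.load(in);
            return props;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Parses properties text directly, e.g. for testing without a file.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Returns the trimmed value of a key, or "" if absent (Properties keeps trailing spaces).
    private static String value(Properties props, String key) {
        String v = props.getProperty(key);
        return v == null ? "" : v.trim();
    }

    // True if the root logger's "file" appenderRef names appender.main and that appender rolls.
    static boolean hasRollingAppender(Properties props) {
        String ref = value(props, "rootLogger.appenderRef.file.ref");
        return !ref.isEmpty()
                && ref.equals(value(props, "appender.main.name"))
                && "RollingFile".equals(value(props, "appender.main.type"));
    }

    public static void main(String[] args) {
        // Default path taken from this article; pass another path as the first argument.
        Path path = Paths.get(args.length > 0 ? args[0] : "/etc/flink/conf/log4j.properties");
        System.out.println(path + " rolling appender configured: " + hasRollingAppender(load(path)));
    }
}
```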

IDEA

As a bonus, here is the log configuration for running Flink locally in IntelliJ IDEA.

pom.xml

```xml
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-api</artifactId>
    <version>1.7.25</version>
</dependency>
<!-- Log4j 2.9.1 is affected by Log4Shell (CVE-2021-44228); use a patched 2.17.x release instead. -->
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-slf4j-impl</artifactId>
    <version>2.17.1</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-api</artifactId>
    <version>2.17.1</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-core</artifactId>
    <version>2.17.1</version>
</dependency>
```
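If no log output appears when running in IDEA, one common cause is that one of these jars is missing from the run classpath. The reflection-based sketch below checks for one representative class from each dependency without needing them at compile time. The checked class names come from the public contents of those artifacts (org.slf4j.impl.StaticLoggerBinder is the SLF4J 1.7 binding shipped in log4j-slf4j-impl), while LoggingClasspathCheck itself is a hypothetical helper.

```java
// Hypothetical helper: reports whether the logging jars from the pom above are on the
// runtime classpath, using reflection so it compiles without those dependencies.
public class LoggingClasspathCheck {

    // Returns true if the class can be found; it is loaded but not initialized.
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, LoggingClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // {artifact, representative class shipped in that artifact}
        String[][] checks = {
            {"slf4j-api", "org.slf4j.LoggerFactory"},
            {"log4j-slf4j-impl", "org.slf4j.impl.StaticLoggerBinder"}, // SLF4J 1.7.x binding
            {"log4j-api", "org.apache.logging.log4j.LogManager"},
            {"log4j-core", "org.apache.logging.log4j.core.LoggerContext"},
        };
        for (String[] check : checks) {
            System.out.println(check[0] + ": " + (isPresent(check[1]) ? "found" : "MISSING"));
        }
    }
}
```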

resources

Create a log4j2.xml file under the resources directory (src/main/resources in a standard Maven project):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration monitorInterval="5">
    <Properties>
        <property name="LOG_PATTERN" value="%date{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n" />
        <property name="LOG_LEVEL" value="INFO" />
    </Properties>
    <appenders>
        <console name="Console" target="SYSTEM_OUT">
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <ThresholdFilter level="${LOG_LEVEL}" onMatch="ACCEPT" onMismatch="DENY"/>
        </console>
    </appenders>
    <loggers>
        <root level="${LOG_LEVEL}">
            <appender-ref ref="Console"/>
        </root>
    </loggers>
</configuration>
```
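Log4j 2 only uses this file if it finds it first: it checks the log4j.configurationFile system property, then a fixed list of classpath resource names in which log4j2-test.* files take precedence over log4j2.*. The sketch below reproduces that lookup in simplified form (it ignores custom ConfigurationFactory plugins and context-specific file names), which can help when a stray log4j2-test.xml from another module shadows this one. Log4j2ConfigLocator is a hypothetical name.

```java
import java.net.URL;

// Hypothetical helper: a simplified version of Log4j 2's documented configuration lookup.
public class Log4j2ConfigLocator {

    // Classpath resources in Log4j 2's documented order: test configs first, then regular
    // ones, each tried as properties, YAML, JSON, then XML.
    static final String[] CANDIDATES = {
        "log4j2-test.properties", "log4j2-test.yaml", "log4j2-test.yml",
        "log4j2-test.json", "log4j2-test.jsn", "log4j2-test.xml",
        "log4j2.properties", "log4j2.yaml", "log4j2.yml",
        "log4j2.json", "log4j2.jsn", "log4j2.xml",
    };

    // Returns the explicitly configured location, else the first matching resource, else null.
    static String locate(ClassLoader loader) {
        String explicit = System.getProperty("log4j.configurationFile");
        if (explicit != null) {
            return explicit;
        }
        for (String name : CANDIDATES) {
            URL url = loader.getResource(name);
            if (url != null) {
                return url.toString();
            }
        }
        return null; // Log4j 2 would fall back to its default configuration (ERROR to console)
    }

    public static void main(String[] args) {
        String found = locate(Thread.currentThread().getContextClassLoader());
        System.out.println(found == null ? "no Log4j 2 configuration found" : "using " + found);
    }
}
```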

Demo

```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class Main {

    // Create the Logger object
    private static final Logger log = LoggerFactory.getLogger(Main.class);

    public static void main(String[] args) throws Exception {
        // Print a log message
        log.info("-----------------> start");
    }
}
```


边栏推荐

- [Blue Bridge Cup 2017 preliminary] grid division

- Classes in C #

- About string immutability

- double转int精度丢失问题

- L2-007 家庭房产 (25 分)

- L2-006 树的遍历 (25 分)

- C语言读取BMP文件

- ImportError: libmysqlclient. so. 20: Cannot open shared object file: no such file or directory solution

- Reading BMP file with C language

- vs2019 使用向导生成一个MFC应用程序

猜你喜欢

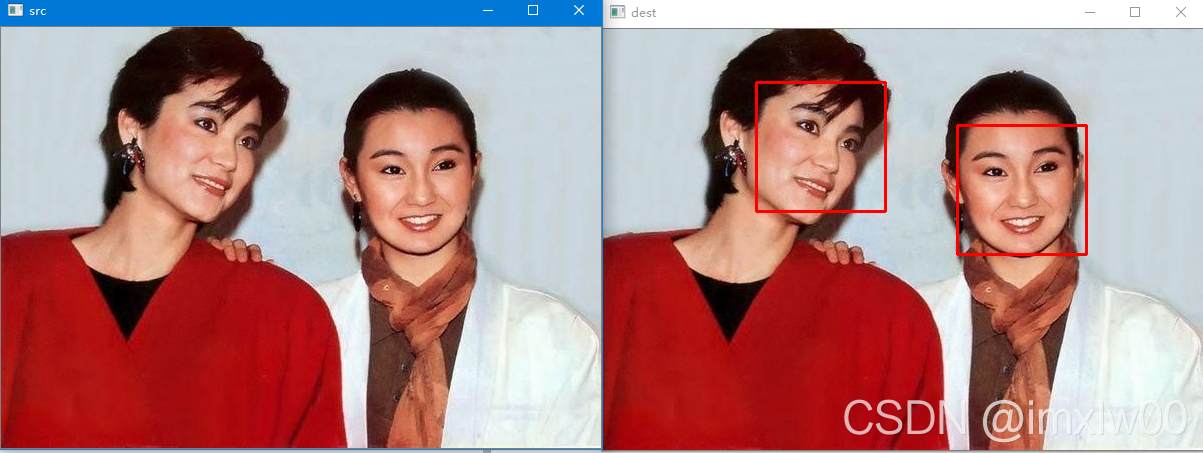

人脸识别 face_recognition

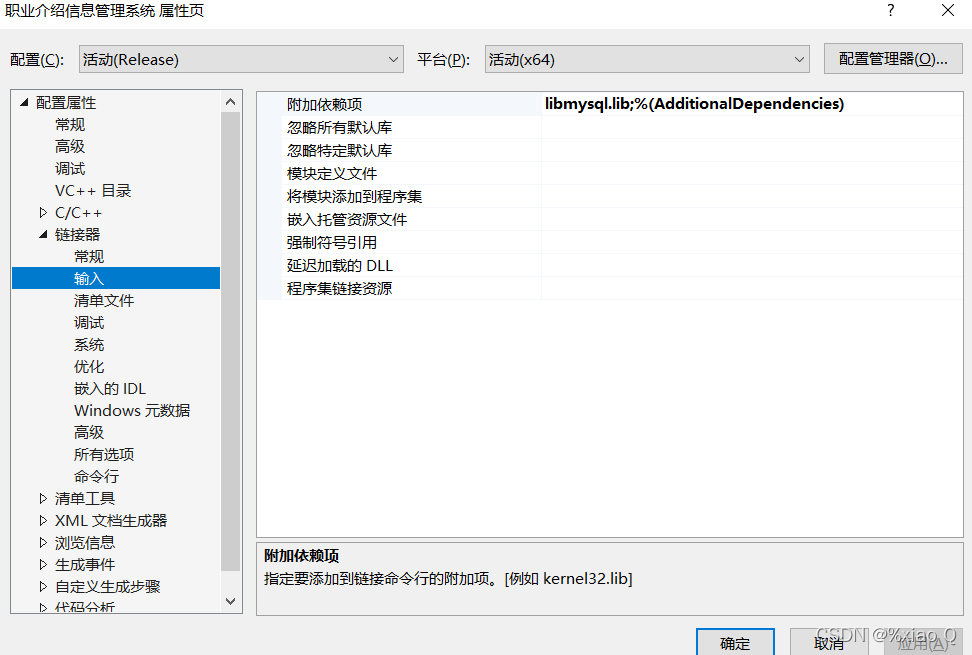

MySQL与c语言连接(vs2019版)

Software I2C based on Hal Library

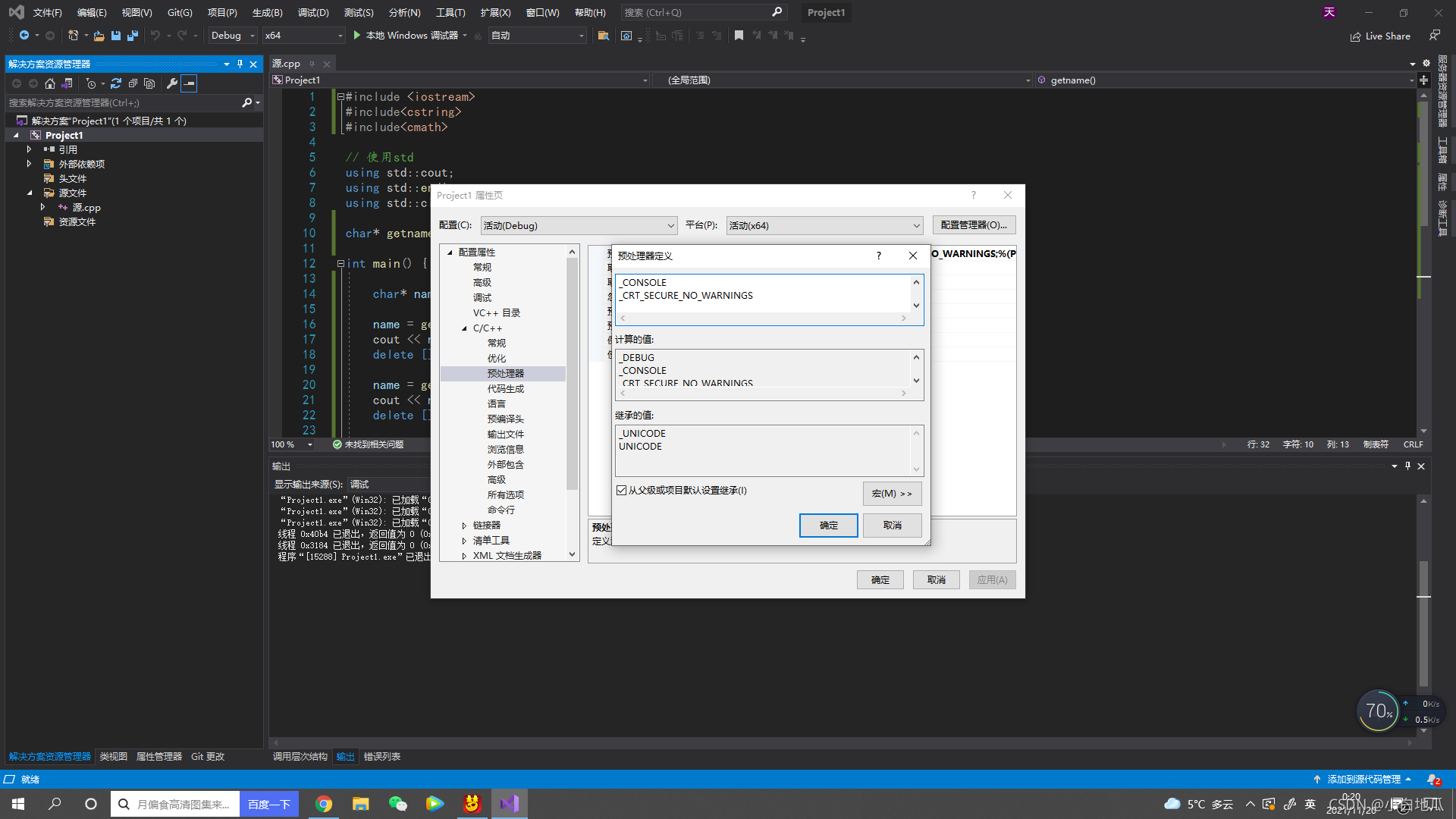

error C4996: ‘strcpy‘: This function or variable may be unsafe. Consider using strcpy_ s instead

02 staff information management after the actual project

Dotnet replaces asp Net core's underlying communication is the IPC Library of named pipes

QT creator create button

学习问题1:127.0.0.1拒绝了我们的访问

double转int精度丢失问题

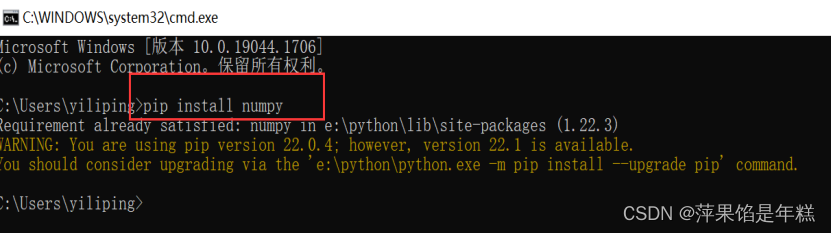

安装numpy问题总结

随机推荐

Database advanced learning notes -- SQL statement

QT creator create button

Codeforces Round #753 (Div. 3)

AcWing 179. Factorial decomposition problem solution

Use dapr to shorten software development cycle and improve production efficiency

yarn安装与使用

AcWing 1294.樱花 题解

wangeditor富文本引用、表格使用问题

【yarn】Yarn container 日志清理

天梯赛练习集题解LV1(all)

ES6 Promise 对象

wangeditor富文本组件-复制可用

C语言读取BMP文件

L2-007 家庭房产 (25 分)

What does usart1 mean

Django running error: error loading mysqldb module solution

MTCNN人脸检测

Install mongdb tutorial and redis tutorial under Windows

AI benchmark V5 ranking

QT creator uses Valgrind code analysis tool