当前位置:网站首页>Financial data acquisition (III) when a crawler encounters a web page that needs to scroll with the mouse wheel to refresh the data (nanny level tutorial)

Financial data acquisition (III) when a crawler encounters a web page that needs to scroll with the mouse wheel to refresh the data (nanny level tutorial)

2022-07-07 12:28:00 【Simon Cao】

Catalog

1. Who would give me the whole job like this

2. Selenium Simulate web browser crawling

2.1 Installation and preparation

4: Complete code , Result display

1. Who would give me the whole job like this

what , Sina's stock historical data has not been directly provided !

I need to find some data about the Australian market a few days ago , How API Didn't come to Australia to take root , Helpless, I had to place my hope on reptiles . When I click the relevant data on Sina Finance and Economics , I was surprised to find that it was already empty . I want to see others A Share data , The familiar trading data has long disappeared , Instead, a thing called data center , There is no data I want .

Data that used to be easy to find are now human beings, things and non things , I can't refer to the previous code , It's sad. . I can't help sighing that it's no wonder there are fewer and fewer crawling data , After all, API Who would do such a thankless job with such a thing .

The author immediately changed Yahoo Finance, Sure enough, I found the data I wanted . So the author happily wrote a little reptile .

import requests

import pandas as pd

headers = {"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.53 Safari/537.36 Edg/103.0.1264.37"}

url = "https://finance.yahoo.com/quote/%5EDJI/history?period1=1601510400&period2=1656460800&interval=1d&filter=history&frequency=1d&includeAdjustedClose=true"

re = requests.get(url, headers = headers)

print(pd.read_html(re.text)[0])However, it doesn't wait for the author to be happy , Immediately found that the data crawled was only a short 100 That's ok ???

The author is puzzled ,re What is clearly requested is three-year data URL, Why is there only 100 All right .

For a long time , Finally, I found that it was because Yahoo data had to be swiped down with the mouse wheel , Only on the page of the original request 100 Row data . Now check the problem , So what's the solution ?

2. Selenium Simulate web browser crawling

Selenium It provides us with a good solution , Our traditional requests The module request can only request the content returned by the fixed web page , But the data that needs to be clicked or scrolled with the mouse wheel when the author encounters it seems pale .

I only used it when I first learned reptiles selenium, After all, I don't often encounter such a difficult web page . Therefore, the author will start from 0 Start a nanny level tutorial for this web crawler .

2.1 Installation and preparation

Please install it first , The import module

pip install selenium

from selenium import webdriver

from selenium.webdriver.common.by import By

import timeNext is the beginning , after C Station search , This kind of webpage should be used first selenium The simulated browser opens :

url = "https://finance.yahoo.com/quote/%5EDJI/history?period1=1601510400&period2=1656460800&interval=1d&filter=history&frequency=1d&includeAdjustedClose=true"

driver = webdriver.Chrome() # Start the simulation browser

driver.get(url) # Open the web address to crawl

Running here, the author stepped on the first thunder , Report errors WebDriverException: Message: 'chromedriver' executable needs to be in PATH:

It's another toss. The author finds a solution : First, download a startup file that simulates the browser , Address :ChromeDriver - WebDriver for Chrome - Downloads (chromium.org) https://chromedriver.chromium.org/downloads

https://chromedriver.chromium.org/downloads

It should be noted that , According to your Chrome Browser version download :

Decompression can be , But the installation position must be python.exe Under the same folder of that file :

Then add chromedriver.exe File address , The author puts it directly on D pan :

After confirming in turn, do not restart the computer , Direct opening CMD start-up chromedriver.exe. If as shown in the figure below successfuly It means success , The previous code can run successfully

After confirming in turn, do not restart the computer , Direct opening CMD start-up chromedriver.exe. If as shown in the figure below successfuly It means success , The previous code can run successfully

After running the code, you will directly open the web page , The prompt is under the control of automatic software :

2.2 Slide the web page with the mouse

Of course, it's not true to slide the web page with the mouse , But through selenium Achieve control , Yes to( Row to ) and by( How much to row ) There are two ways to paddle , Input xy Parameters can realize control :

driver.execute_script('window.scrollBy(x, y)') # Slide sideways x, Longitudinal sliding y

driver.execute_script('window.scrollTo(x,y)') # Slide to x, y Location Through the write cycle, you can control it to move down to the bottom to obtain all the data , Below, the author provides two kinds of paddle strategies , One is written by others , One is written by the author :

2.2.1 Height judgment

The idea is to get the height of the page ——To Rowing —— Get the page height again —— Compare the two heights , If == Proof slipped to the bottom , End of cycle .

while True:

h_before = driver.execute_script('return document.body.scrollHeight;')

time.sleep(2)

driver.execute_script(f'window.scrollTo(0,{h_before})')

time.sleep(2)

h_after = driver.execute_script('return document.body.scrollHeight;')

if h_before == h_after:

breakhowever ! Using this strategy, the author found that Yahoo It's useless , No matter how Yahoo moves , Page height is always a fixed value .

For the purpose of reference, the author put it up , Look at the following comments , Maybe other web pages can be used .

2.2.2 Top distance judgment

The author wrote another , Use the distance to the top to determine whether it is in the end :

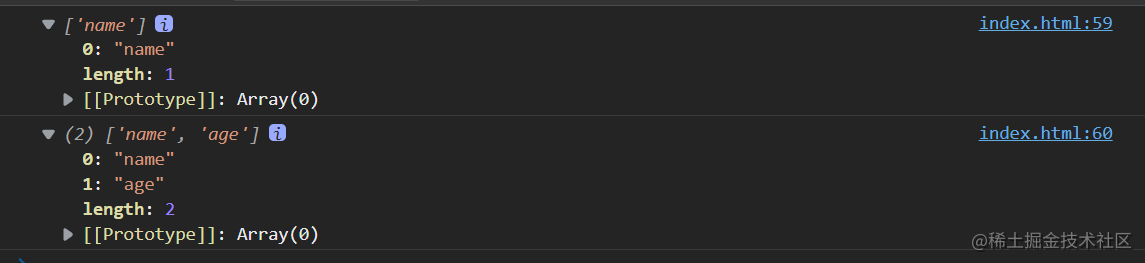

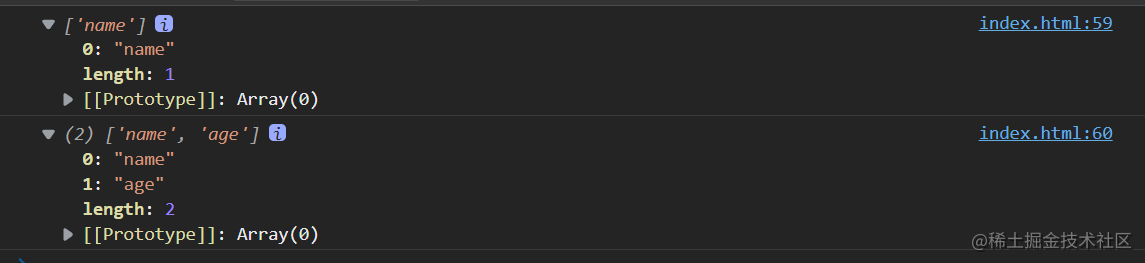

driver.execute_script('return document.documentElement.scrollTop')Actually, it's similar to just now , It's also a cycle : Get the distance from the current position to the top of the page ——To Rowing —— Get the distance to the top again —— Compare the distance twice —— Fixed units increased , If == Proved it , End of cycle .

roll = 500

while True:

h_before = driver.execute_script('return document.documentElement.scrollTop')

time.sleep(1)

driver.execute_script(f'window.scrollTo(0,{roll})')

time.sleep(1)

h_after = driver.execute_script('return document.documentElement.scrollTop')

roll += 500

print(h_after, h_before)

if h_before == h_after:

breakOne slide 500 Pixels may be a little slow , You can change the parameters of each stroke by yourself .

This solution is useful for Yahoo , You can see that it is indeed sliding down , You can also see it on the simulation browser . thus , All problems of not displaying data are solved .

3: Crawling content

adopt page_source You can export all the data drawn , The data returned is str A bunch of web page tags .

driver.page_sourceThe most difficult ridge to slide in front has passed , The rest is all about basic crawler operations , Because my goal this time is tabular data , direct pandas read. First, store the data you slide to in variables , then pandas Just analyze , If you are crawling text data, you need to use BeautifulSoup Or canonical further parsing :

content = driver.page_source

data = pd.read_html(content)

table = pd.DataFrame(data[0])4: Complete code , Result display

url = # The website you need to crawl

driver = webdriver.Chrome()

driver.get(url)

roll = 1000

while True:

h_before = driver.execute_script('return document.documentElement.scrollTop')

time.sleep(1)

driver.execute_script(f'window.scrollTo(0,{roll})')

time.sleep(1)

h_after = driver.execute_script('return document.documentElement.scrollTop')

roll += 1000

print(h_after, h_before)

if h_before == h_after:

break

content = driver.page_source

data = pd.read_html(content)

table = pd.DataFrame(data[0])

print(table)

table.to_csv("market_data.csv")You can see , The author has got all the data of Dow Jones industrial average in recent years :

Such a simple page stroke , you , Is learning useless ?

Like comments + Pay attention to the third company , If you don't abandon , We stand together through the storm .

边栏推荐

- Introduction to three methods of anti red domain name generation

- TypeScript 接口继承

- EPP+DIS学习之路(2)——Blink!闪烁!

- RHSA first day operation

- 2022 8th "certification Cup" China University risk management and control ability challenge

- SQL lab 11~20 summary (subsequent continuous update) contains the solution that Firefox can't catch local packages after 18 levels

- 免备案服务器会影响网站排名和权重吗?

- [play RT thread] RT thread Studio - key control motor forward and reverse rotation, buzzer

- The hoisting of the upper cylinder of the steel containment of the world's first reactor "linglong-1" reactor building was successful

- SQL Lab (36~40) includes stack injection, MySQL_ real_ escape_ The difference between string and addslashes (continuous update after)

猜你喜欢

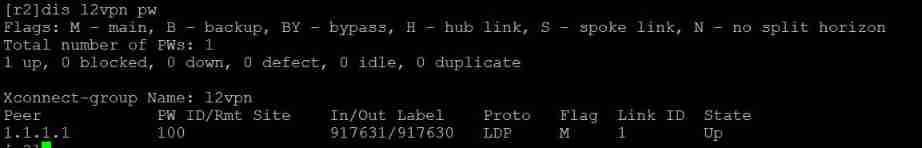

H3C HCl MPLS layer 2 dedicated line experiment

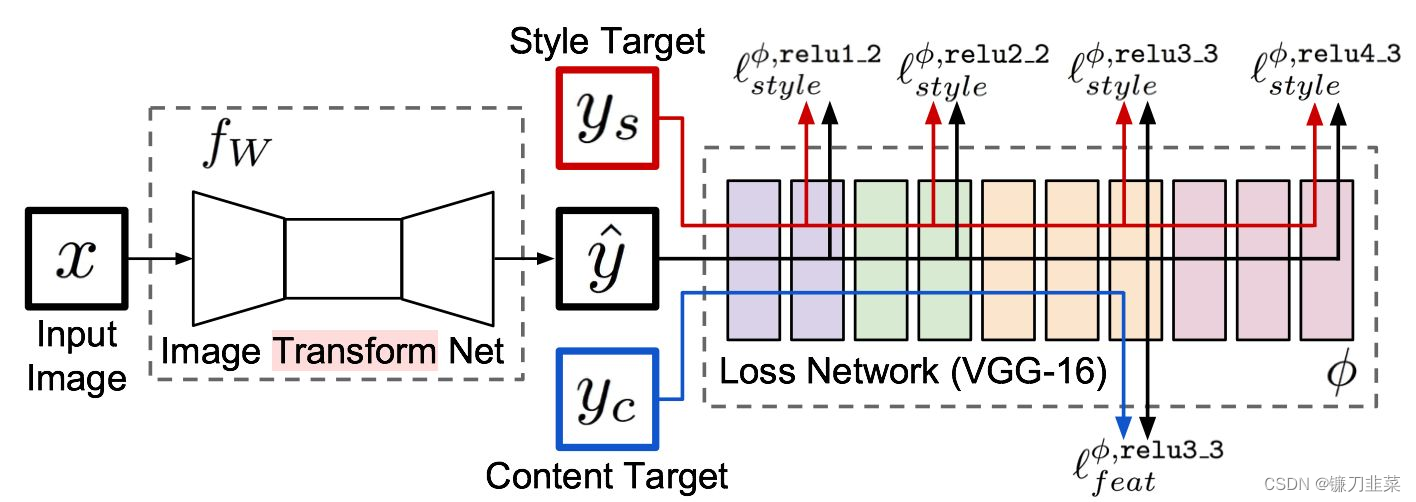

【PyTorch实战】用PyTorch实现基于神经网络的图像风格迁移

浅谈估值模型 (二): PE指标II——PE Band

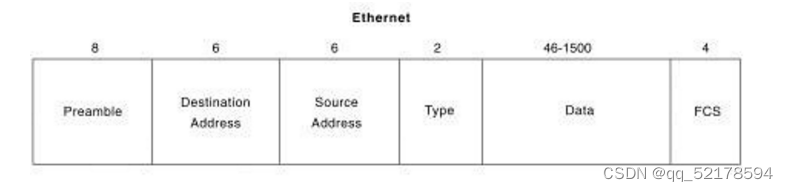

Review and arrangement of HCIA

![[pytorch practice] image description -- let neural network read pictures and tell stories](/img/39/b2c61ae0668507f50426b01f2deee4.png)

[pytorch practice] image description -- let neural network read pictures and tell stories

SQL Lab (32~35) contains the principle understanding and precautions of wide byte injection (continuously updated later)

Several methods of checking JS to judge empty objects

盘点JS判断空对象的几大方法

SQL Lab (46~53) (continuous update later) order by injection

【统计学习方法】学习笔记——逻辑斯谛回归和最大熵模型

随机推荐

关于 Web Content-Security-Policy Directive 通过 meta 元素指定的一些测试用例

Flet tutorial 17 basic introduction to card components (tutorial includes source code)

浅谈估值模型 (二): PE指标II——PE Band

Fleet tutorial 15 introduction to GridView Basics (tutorial includes source code)

Epp+dis learning road (2) -- blink! twinkle!

即刻报名|飞桨黑客马拉松第三期盛夏登场,等你挑战

Experiment with a web server that configures its own content

Apache installation problem: configure: error: APR not found Please read the documentation

免备案服务器会影响网站排名和权重吗?

Epp+dis learning path (1) -- Hello world!

【PyTorch实战】用RNN写诗

[statistical learning methods] learning notes - improvement methods

Several methods of checking JS to judge empty objects

Is it safe to open Huatai's account in kainiu in 2022?

解密GD32 MCU产品家族,开发板该怎么选?

Upgrade from a tool to a solution, and the new site with praise points to new value

(待会删)yyds,付费搞来的学术资源,请低调使用!

【深度学习】图像多标签分类任务,百度PaddleClas

【统计学习方法】学习笔记——逻辑斯谛回归和最大熵模型

小红书微服务框架及治理等云原生业务架构演进案例