
30. Few-shot Named Entity Recognition with Self-describing Networks: Reading Notes

2022-07-07 12:07:00 【Smoked Luoting purple Pavilion】

Author Information:

Institutions Information:

1. Chinese Information Processing Laboratory

2. State Key Laboratory of Computer Science, Institute of Software, Chinese Academy of Sciences, Beijing, China

3. University of Chinese Academy of Sciences, Beijing, China

4. Beijing Academy of Artificial Intelligence, Beijing, China

ACL 2022

Model abbreviation: SDNet

Catalog

3. Self-describing Networks for FS-NER

3.2 Entity Recognition via Entity Generation

3.3 Type Description Construction via Mention Describing

Pre-training via Mention Describing and Entity Generation

4.2 Entity Recognition Fine-tuning

Abstract

Few-shot named entity recognition (few-shot NER) needs to effectively capture information from limited instances and to transfer useful knowledge from external resources. In this paper, we propose a self-describing mechanism for few-shot NER, which can effectively leverage illustrative instances and precisely transfer knowledge from external resources by describing both entity types and mentions with a universal concept set. Specifically, we design Self-describing Networks (SDNet), a Seq2Seq generation model that can universally describe mentions using concepts, automatically map novel entity types to concepts, and adaptively recognize entities on demand.

We pre-train SDNet on a large-scale corpus and conduct experiments on 8 benchmark datasets from different domains. Experimental results show that SDNet achieves competitive performance on all 8 benchmarks and state-of-the-art performance on 6 of them, which demonstrates the effectiveness and robustness of the method.

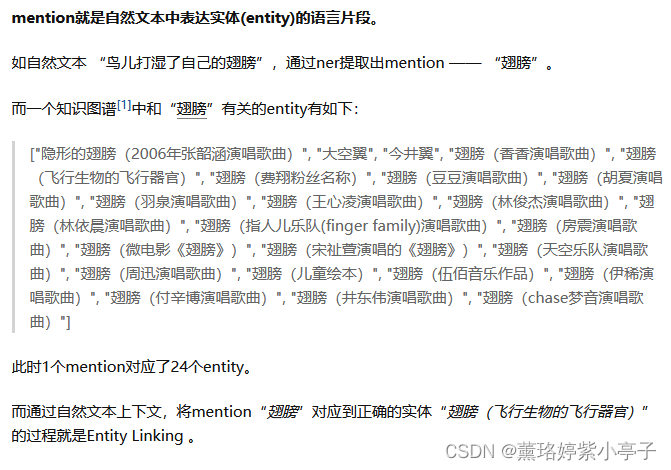

A mention is usually defined as a reference to an entity in natural language text; the referenced entity can be a named, nominal, or pronominal entity.

1. Introduction

FS-NER (few-shot NER) aims to identify the entity mentions of novel entity types given only a few illustrative examples. FS-NER is a promising technique for open-domain NER, which involves various unforeseen types with very limited examples, and it has therefore attracted extensive attention in recent years.

The main challenge of FS-NER is how to accurately model the semantics of unseen entity types from only a few examples. To achieve this, FS-NER must effectively capture information from the few samples while also exploiting and transferring useful knowledge from external resources.

Challenge one: the limited information challenge

The illustrative examples contain very limited information.

Challenge two: the knowledge mismatch challenge

External knowledge usually does not directly match the new task, because it may contain irrelevant, heterogeneous, or even conflicting knowledge.

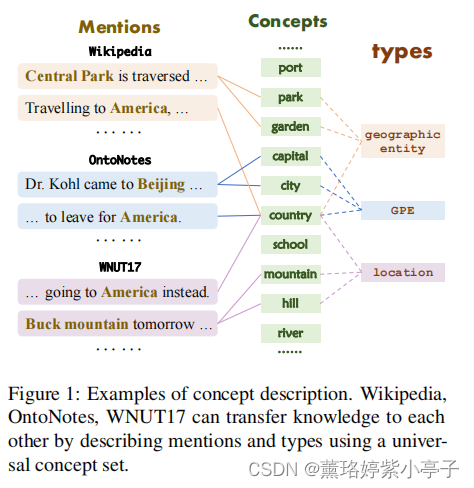

To address these challenges, this paper proposes a self-describing mechanism for FS-NER. The main idea behind the mechanism is that all entity types can be described with the same set of concepts, and the mapping between types and concepts can be universally modeled and learned. In this way, the knowledge mismatch problem can be solved by uniformly describing different entity types with the same concept set.

For example:

In Figure 1, different entity types are mapped to the same set of concepts (e.g., park, garden, country, ...), so knowledge from different sources can be universally described and transferred. Moreover, because the concept mapping is universal, the few examples are only needed to construct the mapping between a novel type and the concepts, which effectively addresses the limited information problem.

Based on this idea, we propose the self-describing network SDNet, a Seq2Seq generation network that can universally describe mentions using concepts, automatically map novel entity types to concepts, and adaptively recognize entities on demand. Specifically: 1) to capture the semantics of a mention, SDNet generates a set of universal concepts as its description; 2) to map entity types to concepts, SDNet generates and aggregates the concept descriptions of mentions with the same entity type; 3) to recognize entities, SDNet directly generates all entities in a sentence via a concept-enriched prefix prompt, which contains the target entity types and their concept descriptions.

Because the concept set is universal, we pre-train SDNet on large-scale, easily accessible web resources. Specifically, we collected a pre-training dataset containing 56M sentences and more than 31K concepts.

By projecting mentions and entity types into a universal concept space, SDNet can effectively enrich entity types to address the limited information problem, universally represent different schemas to address the knowledge mismatch problem, and be pre-trained effectively in a unified way. In addition, SDNet models all of these tasks with a single generation model and uses a prefix prompt mechanism to distinguish between them, which makes the model controllable, universal, and continuously trainable.

We conduct experiments on 8 FS-NER benchmarks from different domains. Experiments show that SDNet achieves very strong performance and obtains state-of-the-art results on 6 of the datasets.

The main contributions of this paper are:

- We propose a self-describing mechanism for FS-NER, which effectively addresses the limited information and knowledge mismatch challenges by describing both entity types and mentions with a universal concept set.

- We propose the self-describing network SDNet, a Seq2Seq generation network that can universally describe mentions using concepts, automatically map novel entity types to concepts, and adaptively recognize entities on demand.

- We pre-train SDNet on a large-scale open dataset, which provides universal knowledge for FS-NER and can benefit many future NER studies.

2. Related Work

3. Self-describing Networks for FS-NER

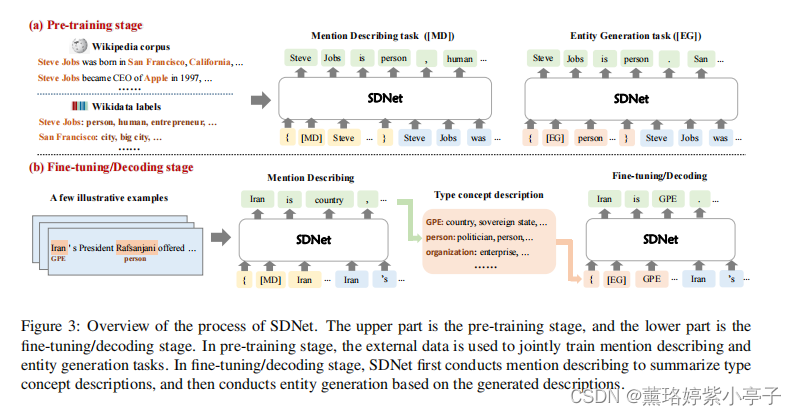

In this section, we describe how to use the self-describing network to build a few-shot entity recognizer and to recognize entities. Figure 3(b) shows the whole process, which consists of two parts:

1) Mention describing

Generates the concept description of a mention.

2) Entity generation

Adaptively generates the entity mentions corresponding to the desired novel types.

With SDNet, NER can be performed directly by feeding type descriptions into entity generation. Given a novel type, its type description is constructed by describing the mentions in its illustrative examples. Below, we first introduce SDNet, and then describe how to construct type descriptions and build a few-shot entity recognizer.

3.1 Self-describing Networks

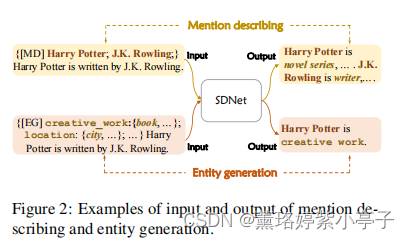

SDNet performs two main generation tasks: mention describing and entity generation. Mention describing generates the concept descriptions of mentions, and entity generation adaptively generates entity mentions. To guide these two processes, SDNet uses different prompts P to generate different outputs Y, as shown in Figure 2:

For mention describing, the prompt contains the task descriptor [MD] and the target entity mentions. For entity generation, the prompt contains the task descriptor [EG] and a list of novel entity types together with their descriptions.

Input: a prompt P and a sentence S, concatenated into a single input sequence $P \oplus S$.

Output: SDNet generates a sequence Y, which contains the mention describing or the entity generation results, depending on the prompt.
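To make the two prompt formats concrete, here is a minimal Python sketch that renders them as plain strings and concatenates a prompt with a sentence. The helper names (`md_prompt`, `eg_prompt`, `build_input`) and the exact separators and spacing are hypothetical, inferred from the examples in these notes rather than taken from the authors' code.

```python
# Hypothetical helpers rendering SDNet-style prompts as plain strings.
# The serialization (braces, "; " and ", " separators, single space before
# the sentence) is an assumption based on the examples in these notes.

def md_prompt(mentions: list[str]) -> str:
    """Mention describing prompt: {[MD] e1; e2; ...}."""
    return "{[MD] " + "; ".join(mentions) + "}"


def eg_prompt(type_descs: dict[str, list[str]]) -> str:
    """Entity generation prompt: {[EG] t1: {l1, ...}; t2: {...}; ...}."""
    parts = [f"{t}: {{{', '.join(concepts)}}}" for t, concepts in type_descs.items()]
    return "{[EG] " + "; ".join(parts) + "}"


def build_input(prompt: str, sentence: str) -> str:
    """Concatenate prompt P and sentence S into one encoder input (P ⊕ S)."""
    return f"{prompt} {sentence}"
```

For instance, `build_input(eg_prompt({"person": ["actor", "writer"]}), "Harry Potter is written by J.K. Rowling.")` yields `{[EG] person: {actor, writer}} Harry Potter is written by J.K. Rowling.`, which is the PERSON example from Section 3.2 with the sentence appended.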

With these two generation processes, few-shot entity recognition can be performed effectively. To recognize entities, we put the descriptions of the target entity types into the prompt and then generate the entities adaptively via entity generation. To build an entity recognizer for a novel type, we only need its type description, which can be constructed effectively by summarizing the concept descriptions of its illustrative examples.

3.2 Entity Recognition via Entity Generation

In SDNet, entity recognition is performed through entity generation, given an entity generation prompt $P_{EG}$ and a sentence $X$. The prompt has the form:

$$P_{EG} = \{[EG]\ t_1: \{l_1^1, \ldots, l_1^{m_1}\};\ t_2: \{l_2^1, \ldots, l_2^{m_2}\};\ \ldots\}$$

where $t_i$ is the i-th target entity type and $l_i^1, \ldots, l_i^{m_i}$ are the concepts describing it.

For example:

Sentence: "Harry Potter is written by J.K. Rowling."

1) Identify entities of type PERSON

Prompt: {[EG] person: {actor, writer}}

SDNet output: "J.K. Rowling is person"

2) Identify entities of type CREATIVE_WORK

Prompt: {[EG] creative_work: {book, music}}

SDNet output: "Harry Potter is creative_work"
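Because SDNet's outputs are plain text of the form "mention is type", they have to be parsed back into (mention, type) pairs before evaluation. The sketch below is one way to do that; the `;` separator between multiple generated entities and the function name `parse_eg_output` are assumptions, not details stated in these notes.

```python
def parse_eg_output(output: str, known_types: set[str]) -> list[tuple[str, str]]:
    """Parse 'mention is type; mention is type' into (mention, type) pairs.

    Assumption: multiple entities are separated by ';'. Segments whose type
    is not one of the prompted types are discarded.
    """
    pairs = []
    for segment in output.split(";"):
        segment = segment.strip()
        if " is " not in segment:
            continue  # skip empty or malformed segments
        # Split on the LAST " is " so mentions containing "is" stay intact.
        mention, etype = segment.rsplit(" is ", 1)
        etype = etype.strip()
        if etype in known_types:
            pairs.append((mention.strip(), etype))
    return pairs
```

Applied to the PERSON example, `parse_eg_output("J.K. Rowling is person", {"person"})` gives `[("J.K. Rowling", "person")]`.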

3.3 Type Description Construction via Mention Describing

To construct the type description of a novel type from a few illustrative examples, SDNet first obtains the concept description of each mention in the illustrative examples via mention describing. It then builds the description of each type by summarizing all of these concept descriptions.

Mention Describing

Input: the mention describing prompt $P_{MD} = \{[MD]\ e_1; e_2; \ldots\}$, where $e_i$ is the i-th entity mention; $P_{MD}$ and the sentence $X$ are concatenated as the input.

Output: "$e_1$ is $l_1^1, \ldots, l_1^{n_1}$; $e_2$ is $l_2^1, \ldots, l_2^{n_2}$; ..."

where $l_i^j$ denotes the j-th concept of the i-th entity mention.
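The mention describing output can be turned back into a mapping from each mention $e_i$ to its concepts $l_i^1, \ldots$ with a small parser. This is a sketch under the assumption that mentions are separated by `;` and concepts by `,`, as in the output template above; `parse_md_output` and the example concepts are hypothetical.

```python
def parse_md_output(output: str) -> dict[str, list[str]]:
    """Parse 'e1 is c1, c2; e2 is c3; ...' into {mention: [concepts]}."""
    descriptions: dict[str, list[str]] = {}
    for segment in output.split(";"):
        segment = segment.strip()
        if " is " not in segment:
            continue  # skip empty or malformed segments (e.g. a trailing '...')
        mention, concepts = segment.rsplit(" is ", 1)
        descriptions[mention.strip()] = [c.strip() for c in concepts.split(",") if c.strip()]
    return descriptions
```

For a hypothetical output `"J.K. Rowling is writer, person; Harry Potter is book, film"`, this returns `{"J.K. Rowling": ["writer", "person"], "Harry Potter": ["book", "film"]}`.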

Type Description Construction

SDNet then summarizes the generated concepts to describe the precise semantics of each novel type. Specifically, the concept descriptions of all mentions of the same type t are merged into a concept set C, which serves as the description of type t, yielding the type descriptions M = {(t, C)}. The constructed type descriptions are then incorporated into $P_{EG}$ to guide entity generation.
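The merging step can be sketched as follows. How SDNet actually "integrates" the concepts is not specified in these notes; this sketch assumes a plain de-duplicated union in first-seen order, and `build_type_descriptions` is a hypothetical name.

```python
from collections import defaultdict


def build_type_descriptions(examples, mention_concepts):
    """Merge mention-level concept descriptions into type descriptions M = {(t, C)}.

    examples: list of (mention, type) pairs from the illustrative instances.
    mention_concepts: mention -> list of concepts produced by mention describing.
    Returns a dict type -> C, where C is the de-duplicated union of concepts
    in first-seen order (the union is an assumption of this sketch).
    """
    merged: dict[str, list[str]] = defaultdict(list)
    for mention, etype in examples:
        bucket = merged[etype]  # creates an empty C for types with no concepts
        for concept in mention_concepts.get(mention, []):
            if concept not in bucket:
                bucket.append(concept)
    return dict(merged)
```

A union keeps every concept the model produced; a frequency-weighted selection would be an equally plausible design if descriptions grow too long for the prompt.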

Filtering Strategy

Because downstream novel types are diverse, SDNet may not have enough knowledge to describe some of them, and forcing it to describe them may produce inaccurate descriptions. To address this, we propose a filtering strategy that allows SDNet to refuse to generate unreliable descriptions.

Specifically, SDNet generates "other" for uncertain instances. Given a novel type and its illustrative examples, we count how often "other" appears in the concept descriptions of these examples. If the frequency of "other" over the illustrative examples is greater than 0.5, we discard the type description and directly use the type name in $P_{EG}$. Section 4.1 describes how SDNet learns the filtering strategy.
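A minimal sketch of the filtering rule, assuming "frequency of other" means the fraction of illustrative instances whose generated description contains the concept `other`, and that the 0.5 threshold is a strict "greater than". The function `filter_type_description` is hypothetical.

```python
def filter_type_description(type_name, instance_concepts, threshold=0.5):
    """Decide whether a novel type keeps its generated description.

    instance_concepts: one concept list per illustrative instance, as produced
    by mention describing. Returns (type_name, concepts); an empty concept list
    means "fall back to the bare type name in the entity generation prompt".
    """
    if not instance_concepts:
        return type_name, []
    # Fraction of illustrative instances that SDNet described as "other".
    other_count = sum(1 for concepts in instance_concepts if "other" in concepts)
    if other_count / len(instance_concepts) > threshold:
        return type_name, []
    # Otherwise merge the remaining concepts, dropping "other" itself.
    merged = []
    for concepts in instance_concepts:
        for concept in concepts:
            if concept != "other" and concept not in merged:
                merged.append(concept)
    return type_name, merged
```

With two of three instances described as `other`, the description is dropped; with one of three, the remaining concepts are kept.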

4. Learning

4.1 SDNet Pre-training

This work uses Wikipedia and Wikidata as external knowledge sources (the 20210401 version of Wikipedia).

Entity Mention Collection

Pre-training SDNet requires collecting <e, T, X> triples, where e is an entity mention, T is its set of entity types, and X is the sentence containing it.

e.g., <J.K. Rowling; person, writer, ...; J.K. Rowling writes ...>

In total, 31K types were obtained.

Type Description Building

To train SDNet, we need concept descriptions $\{(t_i, \{l_i^1, l_i^2, \ldots\})\}$, where $l_i^j$ is a concept related to type $t_i$. We use the entity types collected above as concepts and build the type descriptions as follows. Given an entity type, we collect all of its co-occurring entity types as its describing concepts, so that every entity type has a set of concepts describing it. Because some entity types have very large sets of describing concepts, for efficient pre-training we randomly sample at most N of them (N = 10 in this paper).
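The co-occurrence construction can be sketched as below. The notes do not define "co-occurring" precisely; this sketch assumes two types co-occur when they label the same mention (i.e., both appear in one triple's T). The function name and the fixed random seed are illustrative choices, not part of the paper.

```python
import random
from collections import defaultdict


def build_concept_descriptions(triples, n_max=10, seed=0):
    """Describe each entity type by the types that co-occur with it.

    triples: iterable of <e, T, X> tuples (mention, list of types, sentence).
    Assumption: types co-occur when they label the same mention.
    At most n_max concepts are randomly sampled per type (N = 10 in the paper).
    """
    cooccur: dict[str, set[str]] = defaultdict(set)
    for _mention, types, _sentence in triples:
        for t in types:
            cooccur[t].update(other for other in types if other != t)
    rng = random.Random(seed)  # fixed seed so sampling is reproducible
    descriptions = {}
    for t, concepts in cooccur.items():
        pool = sorted(concepts)  # deterministic order before sampling
        if len(pool) > n_max:
            pool = rng.sample(pool, n_max)
        descriptions[t] = pool
    return descriptions
```

For example, from `("J.K. Rowling", ["person", "writer"], ...)` and `("Tom Hanks", ["person", "actor"], ...)`, the type `person` is described by `["actor", "writer"]`.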

Pre-training via Mention Describing and Entity Generation

Given a sentence X and its mention-type tuples $\{(e_i, T_i)\}$, where $e_i$ is an entity mention in X, $T_i$ is the set of types of the i-th entity mention, and $t_i^j$ is the j-th type in $T_i$, we construct type descriptions and convert these triples into pre-training examples.
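One simplified way to turn such tuples into examples for both pre-training tasks is sketched below. It assumes the mention describing target uses each mention's types as its concepts (since types serve as concepts) and that the entity generation prompt lists every type in the sentence; the paper's handling of negative types and of "other" for filtering is not covered in these notes and is omitted. `make_pretraining_examples` is hypothetical.

```python
def make_pretraining_examples(sentence, mention_types, type_descs):
    """Build (input, target) pairs for both pre-training tasks.

    sentence: the sentence X.
    mention_types: list of (e_i, T_i) tuples, T_i being the types of mention e_i.
    type_descs: type -> list of describing concepts (e.g. co-occurring types).
    """
    # Mention describing: the types of each mention serve as its concepts.
    md_input = "{[MD] " + "; ".join(e for e, _ in mention_types) + "} " + sentence
    md_target = "; ".join(f"{e} is {', '.join(types)}" for e, types in mention_types)

    # Entity generation: every type in the sentence, with its description.
    types_in_order = []
    for _, types in mention_types:
        for t in types:
            if t not in types_in_order:
                types_in_order.append(t)
    type_slots = "; ".join(
        f"{t}: {{{', '.join(type_descs.get(t, []))}}}" for t in types_in_order
    )
    eg_input = "{[EG] " + type_slots + "} " + sentence
    eg_target = "; ".join(f"{e} is {t}" for e, types in mention_types for t in types)
    return [(md_input, md_target), (eg_input, eg_target)]
```

For the sentence "J.K. Rowling writes books." with `[("J.K. Rowling", ["person", "writer"])]`, the mention describing target is `"J.K. Rowling is person, writer"`.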

4.2 Entity Recognition Fine-tuning

As mentioned above, SDNet can directly recognize entities using manually designed type descriptions. However, SDNet can also automatically build type descriptions from illustrative examples and be further improved by fine-tuning. Specifically, given <e, T, X> tuples, we first construct the type descriptions, then build an entity generation prompt, generate the target sequence, and fine-tune SDNet by optimizing Formula (2).
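Formula (2) itself is not reproduced in these notes. Assuming it is the standard Seq2Seq negative log-likelihood used to train generation models such as T5 (an assumption, not confirmed here), the fine-tuning objective over target sequences $Y = (y_1, \ldots, y_{|Y|})$ would read:

$$\mathcal{L}(\theta) = -\sum_{(P_{EG},\, X,\, Y)} \sum_{k=1}^{|Y|} \log p_\theta\left(y_k \mid y_{<k},\ P_{EG} \oplus X\right)$$

Here $\theta$ denotes the model parameters and $y_{<k}$ the gold prefix of the target sequence (teacher forcing during training).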

5. Experiments

5.1 Settings

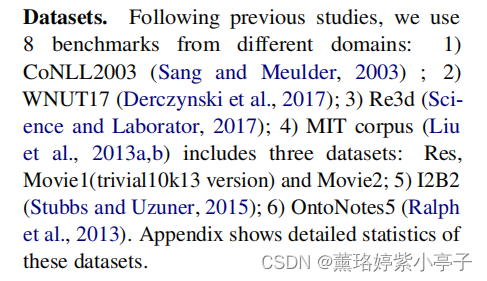

Datasets

Baselines

1) BERT-base

2) T5-base

3) T5-base-prompt: the prompt-based version of T5-base, which uses the entity types as prompts

4) T5-base-DS

5) RoBERTa-based

6) Prototypical network based RoBERTa model Proto and its distantly supervised pre-training version Proto-DS

7) MRC model SpanNER which needs to design the description for each label and its distantly supervised pre-training version SpanNER-DS

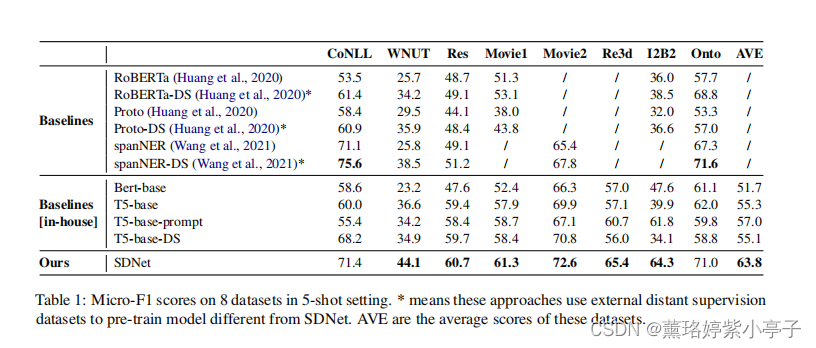

5.2 Main Results

Conclusions:

1) By universally modeling and pre-training NER knowledge, the self-describing network can effectively handle few-shot NER.

2) Because of the limited information, transferring external knowledge to FS-NER models is crucial.

3) Because of the knowledge mismatch, effectively transferring external knowledge to novel downstream types is challenging.

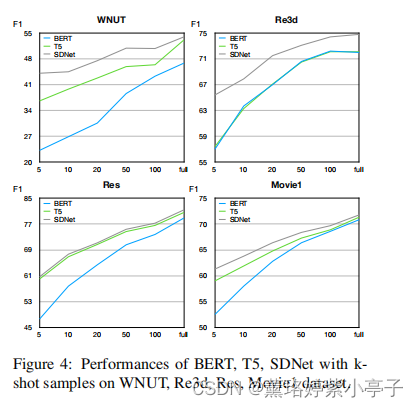

5.3 Effects of Shot Size

Conclusions:

1) SDNet achieves better performance under all shot settings. Moreover, the improvements are more significant in low-shot settings, which supports the intuition behind SDNet.

2) Generation-based models usually perform better than the classifier-based BERT model. We believe this is because generation models can capture type semantics more effectively by using label utterances, and therefore achieve better performance, especially in low-shot settings.

3) Except on Res, SDNet significantly outperforms T5 on almost all datasets, which shows the effectiveness of the proposed self-describing mechanism.

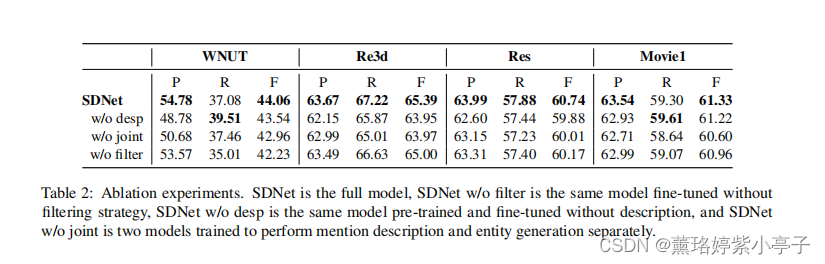

5.4 Ablation Study

Conclusions:

1) Type descriptions are essential for SDNet to transfer knowledge and capture type semantics.

2) Jointly learning mention describing and entity generation in a single network is an effective way to capture type semantics.

3) The filtering strategy effectively alleviates the transfer of mismatched knowledge.

边栏推荐

- Superscalar processor design yaoyongbin Chapter 10 instruction submission excerpt

- Swiftui swift internal skill: five skills of using opaque type in swift

- 2022年在启牛开华泰的账户安全吗?

- powershell cs-UTF-16LE编码上线

- 问题:先后键入字符串和字符,结果发生冲突

- 《看完就懂系列》天哪!搞懂节流与防抖竟简单如斯~

- 小红书微服务框架及治理等云原生业务架构演进案例

- [filter tracking] strapdown inertial navigation simulation based on MATLAB [including Matlab source code 1935]

- [shortest circuit] acwing 1127 Sweet butter (heap optimized dijsktra or SPFA)

- Complete collection of common error handling in MySQL installation

猜你喜欢

PowerShell cs-utf-16le code goes online

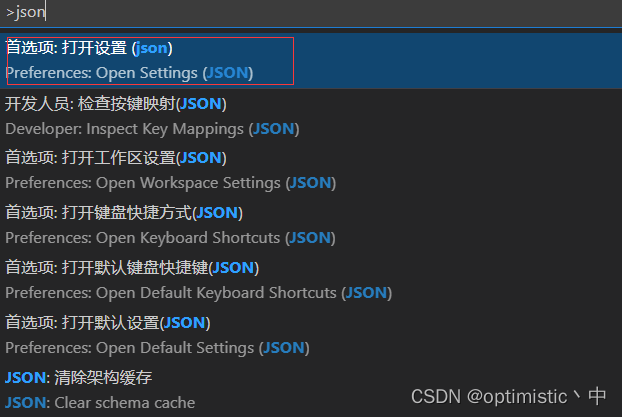

Solve the problem that vscode can only open two tabs

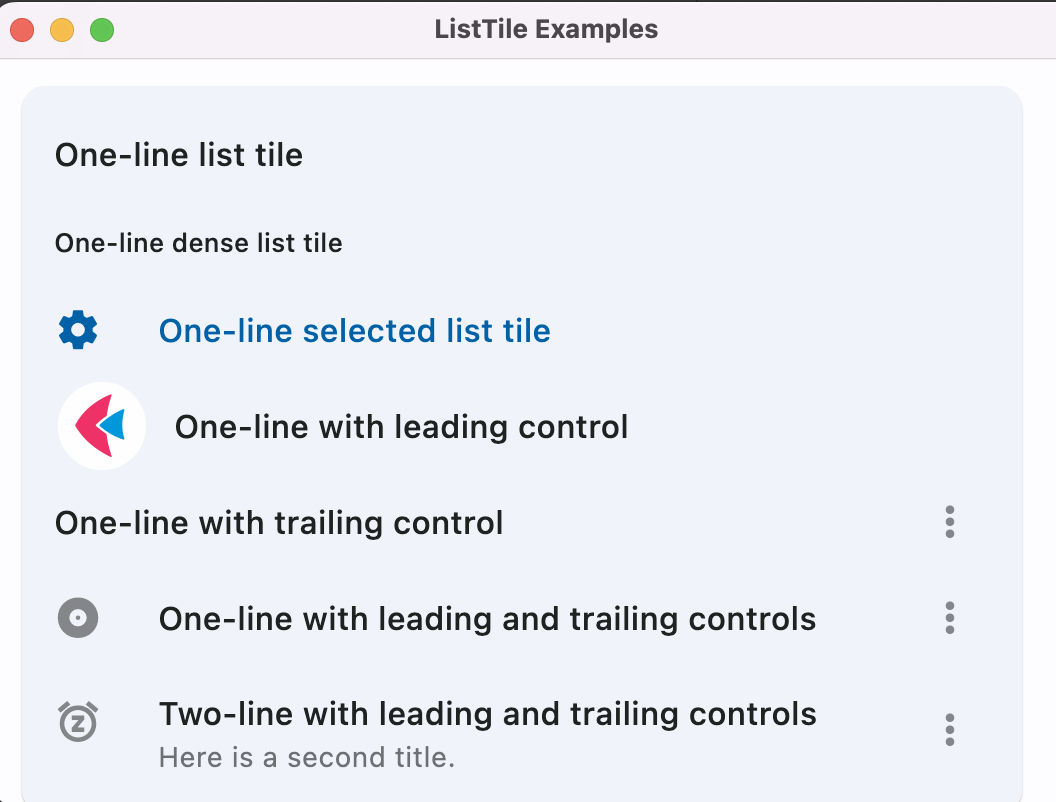

Fleet tutorial 19 introduction to verticaldivider separator component Foundation (tutorial includes source code)

![111. Network security penetration test - [privilege escalation 9] - [windows 2008 R2 kernel overflow privilege escalation]](/img/2e/da45198bb6fb73749809ba0c4c1fc5.png)

111. Network security penetration test - [privilege escalation 9] - [windows 2008 R2 kernel overflow privilege escalation]

Rationaldmis2022 array workpiece measurement

Flet教程之 14 ListTile 基础入门(教程含源码)

Problem: the string and characters are typed successively, and the results conflict

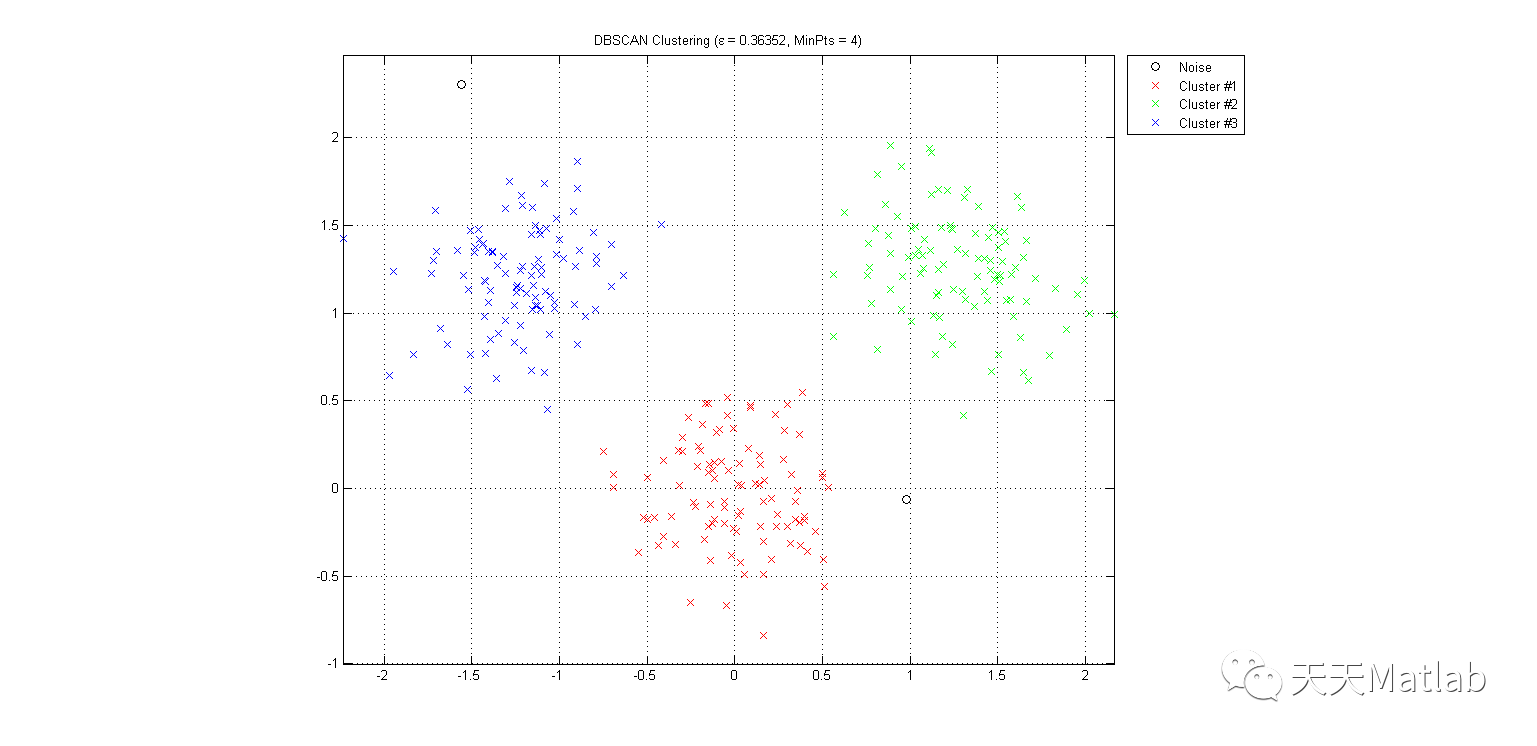

【数据聚类】基于多元宇宙优化DBSCAN实现数据聚类分析附matlab代码

La voie du succès de la R & D des entreprises Internet à l’échelle des milliers de personnes

30. Few-shot Named Entity Recognition with Self-describing Networks 阅读笔记

随机推荐

TypeScript 接口继承

人大金仓受邀参加《航天七〇六“我与航天电脑有约”全国合作伙伴大会》

Summed up 200 Classic machine learning interview questions (with reference answers)

平安证券手机行开户安全吗?

Introduction and application of smoothstep in unity: optimization of dissolution effect

Let digital manage inventory

108. Network security penetration test - [privilege escalation 6] - [windows kernel overflow privilege escalation]

顶级域名有哪些?是如何分类的?

idea 2021中文乱码

@What happens if bean and @component are used on the same class?

Problem: the string and characters are typed successively, and the results conflict

《通信软件开发与应用》课程结业报告

Programming examples of stm32f1 and stm32subeide -315m super regenerative wireless remote control module drive

Rationaldmis2022 advanced programming macro program

[neural network] convolutional neural network CNN [including Matlab source code 1932]

2022年在启牛开华泰的账户安全吗?

SwiftUI 4 新功能之掌握 WeatherKit 和 Swift Charts

Explore cloud database of cloud services together

从工具升级为解决方案,有赞的新站位指向新价值

【数据聚类】基于多元宇宙优化DBSCAN实现数据聚类分析附matlab代码