
Hudi data management and storage overview

2022-07-03 09:25:00 [Did Xiao Hu get stronger today]


Data management

**How does Hudi manage data?**

Hudi organizes data as tables, and the data in each table resembles a Hive partitioned table: records are placed in different directories according to the partition fields. Each record has a primary key (PrimaryKey) that identifies it uniquely.
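A minimal sketch of that partition layout, assuming a hypothetical `region` partition field, a `uuid` primary key, and a table root of `/tmp/hudi_trips`. It only models how partition values map to directories; it does not call any Hudi API.

```python
from collections import defaultdict
from typing import Dict, List


def group_by_partition(records: List[dict], partition_field: str,
                       table_root: str) -> Dict[str, List[dict]]:
    """Group records into one directory per partition value under table_root."""
    layout: Dict[str, List[dict]] = defaultdict(list)
    for rec in records:
        # The partition field's value becomes a sub-directory of the table root,
        # e.g. <table_root>/americas, like the americas/asia folders in this post.
        layout[f"{table_root}/{rec[partition_field]}"].append(rec)
    return dict(layout)


if __name__ == "__main__":
    rows = [
        {"uuid": "a1", "region": "americas", "fare": 10.5},
        {"uuid": "b2", "region": "asia", "fare": 7.0},
        {"uuid": "c3", "region": "americas", "fare": 3.2},
    ]
    for path, recs in group_by_partition(rows, "region", "/tmp/hudi_trips").items():
        print(path, [r["uuid"] for r in recs])
    # /tmp/hudi_trips/americas ['a1', 'c3']
    # /tmp/hudi_trips/asia ['b2']
```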

Hudi Data management

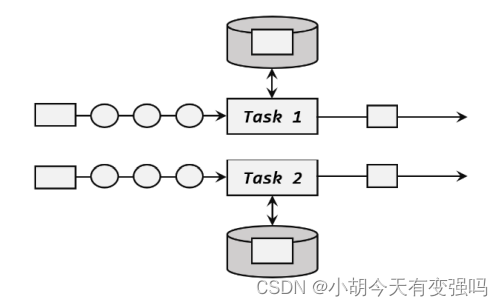

The data files of a Hudi table can be stored on the operating system's local file system or on a distributed file system such as HDFS. For the sake of analytical performance and data reliability, HDFS is generally used. With HDFS storage, the files of a Hudi table fall into two categories.

![image](/img/f7/64c17ef5e0e77fbced43acdf29932a.png)

.hoodie

(1) .hoodie: because CRUD operations are fragmented, each operation generates a file. As these small files pile up they can seriously degrade HDFS performance, so Hudi designed a file-merging mechanism. The .hoodie folder stores the metadata (log) files for these operations, including the file-merge operations.

In Hudi, the series of CRUD operations performed over time is called the Timeline, and a single operation on the Timeline is called an Instant. An Instant has three parts:

- Instant Action: whether the operation is a data commit (COMMITS), a file merge (COMPACTION), or a file cleanup (CLEANS);

- Instant Time: the time of the operation;

- State: the state of the operation, which is requested (REQUESTED), in progress (INFLIGHT), or completed (COMPLETED).
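The Timeline and its Instants can be modeled as a small state machine. This is a toy sketch: the class names, the 14-digit timestamp format, and the single-step transition rule are illustrative assumptions, not Hudi's own classes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Action(Enum):
    COMMIT = "commit"
    COMPACTION = "compaction"
    CLEAN = "clean"


class State(Enum):
    REQUESTED = "requested"
    INFLIGHT = "inflight"
    COMPLETED = "completed"


@dataclass
class Instant:
    time: str        # fixed-width timestamp, so string order equals time order
    action: Action
    state: State = State.REQUESTED


# Legal state changes: REQUESTED -> INFLIGHT -> COMPLETED.
_NEXT_STATE = {State.REQUESTED: State.INFLIGHT, State.INFLIGHT: State.COMPLETED}


class Timeline:
    """Toy timeline: an ordered list of instants on one table."""

    def __init__(self) -> None:
        self.instants: List[Instant] = []

    def request(self, time: str, action: Action) -> Instant:
        # Instant times must increase monotonically along the timeline.
        if self.instants and time <= self.instants[-1].time:
            raise ValueError(f"instant time {time} is not after {self.instants[-1].time}")
        instant = Instant(time, action)
        self.instants.append(instant)
        return instant

    @staticmethod
    def advance(instant: Instant) -> None:
        # Move an instant to its next state; a COMPLETED instant cannot advance.
        if instant.state not in _NEXT_STATE:
            raise ValueError(f"{instant.time} is already {instant.state.name}")
        instant.state = _NEXT_STATE[instant.state]

    def completed(self, action: Optional[Action] = None) -> List[Instant]:
        # Readers only see instants that reached COMPLETED.
        return [i for i in self.instants
                if i.state is State.COMPLETED and (action is None or i.action is action)]


if __name__ == "__main__":
    tl = Timeline()
    c1 = tl.request("20220609205143", Action.COMMIT)
    Timeline.advance(c1)   # REQUESTED -> INFLIGHT
    Timeline.advance(c1)   # INFLIGHT -> COMPLETED
    tl.request("20220609205512", Action.CLEAN)  # still REQUESTED
    print([(i.time, i.action.name) for i in tl.completed()])
    # [('20220609205143', 'COMMIT')]
```

Filtering on COMPLETED is the point of the design: an operation that is still REQUESTED or INFLIGHT is invisible to readers.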

![image](/img/d3/73c20c5a366fc53aa17b42e9f80a94.png)

americas and asia

(2) The americas and asia paths hold the actual data files, stored by partition. The partition path key can be specified.

- Hudi stores the actual data files in the Parquet file format;

- Each partition contains a metadata file alongside the data files, which use Parquet columnar storage;

- To support CRUD on data, Hudi must be able to identify each record uniquely. It combines the record's unique field in the dataset (record key) with the partition the record belongs to (partitionPath) to form the record's unique key.
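To see why the record key alone is not enough, here is a hypothetical upsert over an in-memory dict keyed by (record key, partition path). `UniqueKey`, `upsert`, and the field names are made up for illustration; real Hudi upserts go through its write client and index.

```python
from typing import Dict, List, NamedTuple, Tuple


class UniqueKey(NamedTuple):
    """Hypothetical stand-in for a record's unique key."""
    record_key: str
    partition_path: str


def upsert(table: Dict[UniqueKey, dict], batch: List[dict],
           key_field: str, partition_field: str) -> Tuple[int, int]:
    """Apply a batch of writes; return (number of inserts, number of updates)."""
    inserts = updates = 0
    for rec in batch:
        key = UniqueKey(rec[key_field], rec[partition_field])
        if key in table:
            updates += 1   # same record key in the same partition: update
        else:
            inserts += 1   # unseen (record key, partition) pair: insert
        table[key] = rec   # the latest write for a key wins
    return inserts, updates


if __name__ == "__main__":
    table: Dict[UniqueKey, dict] = {}
    print(upsert(table, [{"uuid": "a1", "region": "asia", "fare": 1.0}], "uuid", "region"))
    # (1, 0)
    print(upsert(table, [{"uuid": "a1", "region": "asia", "fare": 2.0},
                         {"uuid": "a1", "region": "americas", "fare": 5.0}], "uuid", "region"))
    # (1, 1): a1/asia is updated, a1/americas is a different record
    print(len(table))
    # 2
```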

![image](/img/18/286d66b6538b04e1e54e2aefc6ca16.png)

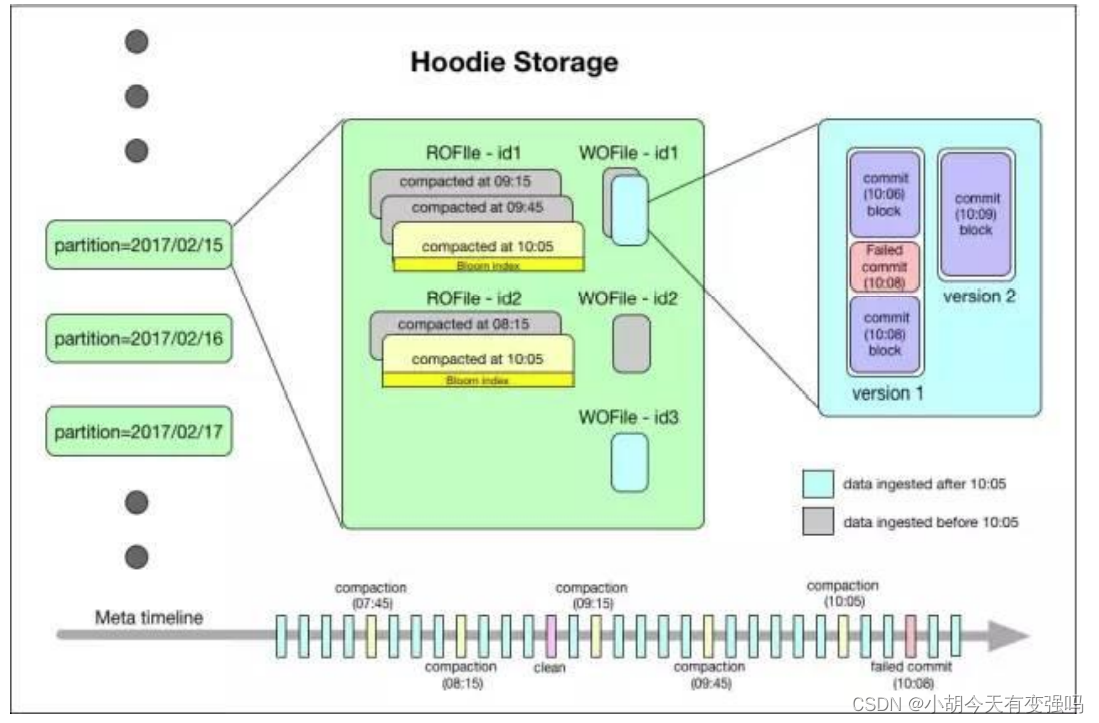

Hudi Storage overview

The directory structure of a Hudi dataset is very similar to Hive's: one dataset corresponds to one root directory. The dataset is split into multiple partitions, each partition field value exists as a folder, and that folder contains all the files of the partition.

Under the root directory, each partition has a unique partition path, and each partition's data is stored in multiple files.

Each file is identified by a unique fileId and the commit that generated it. When an update occurs, multiple files share the same fileId but carry different commits.
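A toy model of fileId-based versioning, assuming a simplified file name of `<fileId>_<commitTime>.parquet` (real Hudi file names carry more fields). Every update adds a new version under the same fileId; queries read the newest version, and the older ones are the kind of files a clean later removes.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class FileVersion:
    file_id: str      # shared by every version of the same file
    commit_time: str  # commit that produced this version
    path: str


class PartitionFiles:
    """Toy model of one partition: fileId -> versions, one per commit."""

    def __init__(self) -> None:
        self.versions: Dict[str, List[FileVersion]] = defaultdict(list)

    def write(self, file_id: str, commit_time: str) -> FileVersion:
        # Hypothetical naming: <fileId>_<commitTime>.parquet
        version = FileVersion(file_id, commit_time, f"{file_id}_{commit_time}.parquet")
        self.versions[file_id].append(version)
        return version

    def latest(self) -> List[FileVersion]:
        # A query reads only the newest version of every fileId.
        return [max(vs, key=lambda v: v.commit_time) for vs in self.versions.values()]

    def stale(self) -> List[FileVersion]:
        # Versions that are no longer the newest are candidates for cleaning.
        newest = set(self.latest())
        return [v for vs in self.versions.values() for v in vs if v not in newest]


if __name__ == "__main__":
    p = PartitionFiles()
    p.write("f1", "20220609205143")
    p.write("f2", "20220609205143")
    p.write("f1", "20220609205512")   # an update rewrites f1 under a new commit
    print(sorted(v.path for v in p.latest()))
    # ['f1_20220609205512.parquet', 'f2_20220609205143.parquet']
    print([v.path for v in p.stale()])
    # ['f1_20220609205143.parquet']
```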

Metadata

- Metadata about the operations performed on the dataset is maintained as a timeline, which supports instantaneous views of the dataset. This metadata is stored in the metadata directory under the root directory. There are three types of metadata:

- Commits: a single commit contains information about one atomic write of a batch of records to the dataset. Commits are identified by monotonically increasing timestamps, which mark the start of a write operation.

- Cleans: background activity that removes older file versions in the dataset that are no longer used by queries.

- Compactions: background activity that reconciles differing data structures within Hudi, for example, moving updates from row-based log files into columnar data files.
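Compaction, as described above, can be sketched as replaying row-based log records over a columnar base file. Assumptions: plain Python dicts and lists stand in for Avro log blocks and Parquet columns, and a newer record replaces the old one entirely (latest write wins). `compact` is a hypothetical helper, not a Hudi function.

```python
from typing import Dict, List


def compact(base: Dict[str, list], log_rows: List[dict], key: str) -> Dict[str, list]:
    """Merge row-based log records into a column-based base file.

    base:     {column: [values]}, every list the same length (columnar layout).
    log_rows: row-based updates/inserts in commit order.
    Assumes latest-write-wins: a newer row replaces the old row entirely.
    Returns a new columnar dict; neither input is modified.
    """
    columns = list(base)
    # Pivot the columnar base into rows keyed by record key (dicts keep order).
    rows = {base[key][i]: {c: base[c][i] for c in columns}
            for i in range(len(base[key]))}
    for row in log_rows:
        # Replay the log in order; columns missing from a row become None,
        # and columns not in the base schema are ignored.
        rows[row[key]] = {c: row.get(c) for c in columns}
    # Pivot back to the columnar layout.
    return {c: [r[c] for r in rows.values()] for c in columns}


if __name__ == "__main__":
    base = {"uuid": ["a1", "b2"], "fare": [10.0, 7.0]}
    log = [{"uuid": "b2", "fare": 8.5}, {"uuid": "c3", "fare": 3.0}]
    print(compact(base, log, "uuid"))
    # {'uuid': ['a1', 'b2', 'c3'], 'fare': [10.0, 8.5, 3.0]}
```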

Index

- Hudi maintains an index so that, when a record key already exists, an incoming record's key can be mapped quickly to the corresponding fileId.

- Bloom filter: stored in the footer of each data file. This is the default option and does not depend on any external system; the data and the index are always consistent.

- Apache HBase: efficiently looks up small batches of keys. This option may shave a few seconds off index tagging.
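The Bloom filter option can be illustrated with a minimal filter per data file plus a lookup that first prunes files with the filters and then confirms the key. In Hudi the filter is stored in the data file footer; here a dict keyed by fileId stands in for that, and the hash scheme (salted SHA-256) is an arbitrary choice for the sketch.

```python
import hashlib
from typing import Dict, List, Optional


class BloomFilter:
    """Minimal Bloom filter: never a false negative, sometimes a false positive."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as the bit array

    def _positions(self, key: str) -> List[int]:
        # Derive num_hashes bit positions by salting SHA-256 with the hash index.
        return [int.from_bytes(hashlib.sha256(f"{i}:{key}".encode()).digest()[:8], "big")
                % self.num_bits for i in range(self.num_hashes)]

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits |= 1 << pos

    def might_contain(self, key: str) -> bool:
        return all((self.bits >> pos) & 1 for pos in self._positions(key))


def locate(key: str, file_keys: Dict[str, List[str]],
           blooms: Dict[str, BloomFilter]) -> Optional[str]:
    """Return the fileId that already holds `key`, or None for a new record.

    Bloom filters prune the candidate files cheaply; each remaining candidate
    is then checked against its actual keys to rule out false positives.
    """
    for file_id, bloom in blooms.items():
        if bloom.might_contain(key) and key in file_keys[file_id]:
            return file_id
    return None


if __name__ == "__main__":
    file_keys = {"f1": ["a1", "b2"], "f2": ["c3"]}
    blooms = {}
    for fid, keys in file_keys.items():
        blooms[fid] = BloomFilter()
        for k in keys:
            blooms[fid].add(k)
    print(locate("c3", file_keys, blooms))  # f2
    print(locate("zz", file_keys, blooms))  # None
```

The second check matters because a Bloom filter can say "maybe" for a key it never saw; it can never say "no" for a key it did see, which is why pruning with it is safe.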

Data

Hudi stores all ingested data in two different storage formats. Users can plug in any data formats that meet the following conditions:

- Read-optimized columnar storage format (ROFormat): the default is Apache Parquet;

- Write-optimized row-based storage format (WOFormat): the default is Apache Avro.
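The trade-off between the two formats is about layout, which a tiny conversion makes visible. This sketch uses plain lists and dicts as stand-ins and a hypothetical helper `rows_to_columns`; it does not encode actual Avro or Parquet.

```python
from typing import Dict, List


def rows_to_columns(rows: List[dict]) -> Dict[str, list]:
    """Convert a row layout (one dict per record) into a column layout."""
    # Collect column names in first-seen order.
    names = list(dict.fromkeys(name for row in rows for name in row))
    return {name: [row.get(name) for row in rows] for name in names}


if __name__ == "__main__":
    rows = [{"uuid": "a1", "fare": 10.0}, {"uuid": "b2", "fare": 7.0}]
    cols = rows_to_columns(rows)
    print(cols)               # {'uuid': ['a1', 'b2'], 'fare': [10.0, 7.0]}
    # Write-optimized (row) layout: a new record is a single append.
    rows.append({"uuid": "c3", "fare": 3.0})
    # Read-optimized (column) layout: an aggregate scans just one column.
    print(sum(cols["fare"]))  # 17.0
```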

![image](/img/8a/3d47d4d4b72136410d510d32c88758.png)


边栏推荐

- LeetCode每日一题(2305. Fair Distribution of Cookies)

- Spark 集群安装与部署

- STM32F103 can learning record

- Data mining 2021-4-27 class notes

- Spark 概述

- State compression DP acwing 291 Mondrian's dream

- Derivation of Fourier transform

- We have a common name, XX Gong

- Excel is not as good as jnpf form for 3 minutes in an hour. Leaders must praise it when making reports like this!

- Numerical analysis notes (I): equation root

猜你喜欢

CSDN markdown editor help document

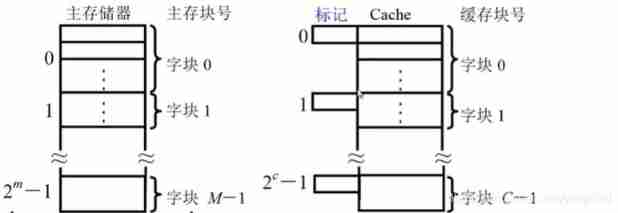

Principles of computer composition - cache, connection mapping, learning experience

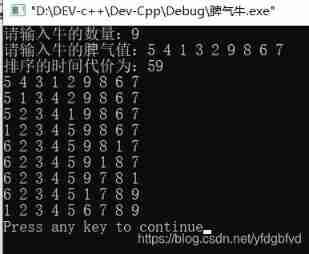

Temper cattle ranking problem

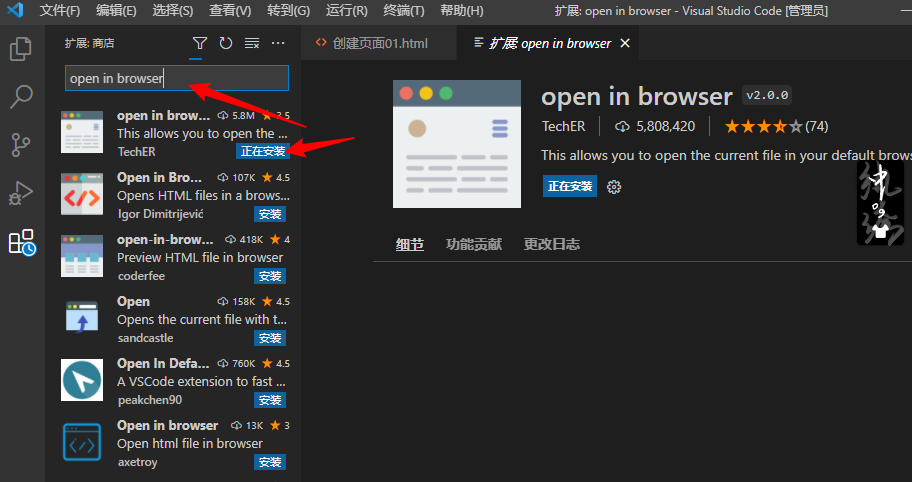

There is no open in default browser option in the right click of the vscade editor

Build a solo blog from scratch

Solve POM in idea Comment top line problem in XML file

LeetCode每日一题(2090. K Radius Subarray Averages)

Alibaba cloud notes for the first time

Vs2019 configuration opencv3 detailed graphic tutorial and implementation of test code

Flink学习笔记(九)状态编程

随机推荐

What are the stages of traditional enterprise digital transformation?

We have a common name, XX Gong

Crawler career from scratch (II): crawl the photos of my little sister ② (the website has been disabled)

Notes on numerical analysis (II): numerical solution of linear equations

[solution to the new version of Flink without bat startup file]

Detailed steps of windows installation redis

Hudi integrated spark data analysis example (including code flow and test results)

Using Hudi in idea

Spark structured stream writing Hudi practice

LeetCode 871. Minimum refueling times

Navicat, MySQL export Er graph, er graph

Win10 quick screenshot

LeetCode每日一题(1024. Video Stitching)

[point cloud processing paper crazy reading frontier edition 13] - gapnet: graph attention based point neural network for exploring local feature

2022-2-13 learn the imitation Niuke project - Project debugging skills

Internet Protocol learning record

Banner - Summary of closed group meeting

Spark 概述

Overview of image restoration methods -- paper notes

Go language - JSON processing