Problems and precautions when using Data Pump (expdp, impdp) to export and import very large tables during an Oracle migration

2022-07-07 06:33:00 【Major_ ZYH】

Overview

I recently carried out an Oracle data migration (on Linux). Because the source database holds a large amount of data, we settled on the following plan:

1. On the source database, export the whole schema (user) with the Data Pump tool expdp.

2. On the target database, import with impdp.

3. Use the Kettle tool to synchronize all tables whose data has changed.

4. Agree on a cutover time, stop the services' connections to the source database, run a final data synchronization, and switch the services' data source to the target database. Finally, start the services to complete the migration.

The expdp export used a parallelism of 10 and was split into two parts. A few tables in the source database hold hundreds of millions to over a billion rows, and the image LOB data exceeds 2 TB, so those tables were exported separately by table name:

Export 1

expdp username/password directory=expdp_dir dumpfile=2022xxxx_username_%U.dmp logfile=2022xxxx_username.log exclude=TABLE:\"IN \(\'TB_XX01\',\'TB_XX02\',\'TB_XX03\',\'TB_XX04\'\)\" parallel=10

Export 2

expdp username/password directory=expdp_dir dumpfile=TP2022xxxx_username_%U.dmp logfile=2022xxxx_username.log TABLES='TB_XX01','TB_XX02','TB_XX03','TB_XX04' parallel=10

What happened

While importing the large image tables and the text tables with billions of rows, we found that usage of the temporary tablespace TEMP and the undo tablespace UNDOTBS1 kept growing, and a single table had still not finished importing after several days. We stopped the import once we noticed the problem, but the space already occupied in the undo tablespace was not released. A query showed a large number of unfinished transactions in undo, all of them related to maintaining the primary key that existed on the table while the rows were being loaded.

In fact, before the import we had already dropped or disabled every index, constraint, and trigger on the tables except the primary key. We did not expect that maintaining the primary key alone would generate so much undo data.
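The kind of check described above can be sketched with a few dictionary queries. This is only a minimal sketch, not necessarily the queries used during the migration. It assumes a user with access to the V$ and DBA_ views, and the tablespace name UNDOTBS1 from this post.

```sql
-- Active transactions and the amount of undo each one holds
-- (used_ublk = undo blocks, used_urec = undo records).
SELECT s.sid, s.username, s.program,
       t.start_time, t.used_ublk, t.used_urec
FROM   v$transaction t
JOIN   v$session s ON s.taddr = t.addr
ORDER  BY t.used_ublk DESC;

-- Space in the undo tablespace by extent status
-- (ACTIVE, UNEXPIRED, EXPIRED).
SELECT status, ROUND(SUM(bytes) / 1024 / 1024) AS size_mb
FROM   dba_undo_extents
WHERE  tablespace_name = 'UNDOTBS1'
GROUP  BY status;

-- Temporary segment usage per temporary tablespace, in blocks.
SELECT tablespace, segtype, SUM(blocks) AS blocks_used
FROM   v$tempseg_usage
GROUP  BY tablespace, segtype;
```

Running these every few minutes during an import shows whether undo and temp usage are still climbing or have levelled off.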

Solution

After finding the cause, we also dropped all primary keys on the tables and ran the import with the following parameters (if the undo tablespace is not released for a long time, see the appendix at the end of this post):

impdp username/password directory=expdp_dir dumpfile=ZP2022xxxx_username_%U.dmp logfile=impdp_username_2022xxxx.log TABLES='TB_XX01','TB_XX02','TB_XX03','TB_XX04' parallel=10 access_method=direct_path

This time the import completed quickly and did not generate a large amount of undo data.

Summary

1. When exporting very large tables with Data Pump, check the number of CPUs on the server and set the degree of parallelism accordingly to speed up the export. The dump file name must also contain %U so that the parallel workers can write to multiple dump files concurrently.

2. For imports, drop every index and constraint on a large table that is not strictly needed, including the primary key. Otherwise the import generates a large amount of undo data that fills the undo tablespace, transactions pile up, and loading the table data becomes extremely slow.

3. The impdp parameter access_method=direct_path skips some database metadata checks. It speeds up the import, but it also carries some risk of errors, so use it with caution.
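Point 2 can be sketched as follows: drop the primary key before the import and rebuild it afterwards. The table name TB_XX01 comes from this post. The constraint name PK_TB_XX01, the key column ID, and the parallel degree of 10 are hypothetical placeholders to be replaced with the real definitions.

```sql
-- Before the import: save the primary key definition, then drop it.
SELECT DBMS_METADATA.GET_DDL('CONSTRAINT', constraint_name) AS pk_ddl
FROM   user_constraints
WHERE  table_name = 'TB_XX01'
AND    constraint_type = 'P';

ALTER TABLE TB_XX01 DROP PRIMARY KEY DROP INDEX;

-- After the import: build the unique index in parallel without
-- redo logging, then attach the constraint to it.
-- PK_TB_XX01 and ID are assumed names, not from the original post.
CREATE UNIQUE INDEX PK_TB_XX01 ON TB_XX01 (ID) PARALLEL 10 NOLOGGING;

ALTER TABLE TB_XX01
  ADD CONSTRAINT PK_TB_XX01 PRIMARY KEY (ID) USING INDEX PK_TB_XX01;

-- Reset the index attributes so later queries do not run in parallel.
ALTER INDEX PK_TB_XX01 NOPARALLEL;
ALTER INDEX PK_TB_XX01 LOGGING;
```

Building the index once after the load avoids maintaining it row by row during the import, which is where the undo in this case came from.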

Appendix

When the import was stopped, the undo tablespace already held a large amount of undo data. Because so much data was being imported, archive logging had not been enabled, so the redo covering that undo data was overwritten once the redo logs cycled, and the undo data could not be released for a long time. Even after creating a new default undo tablespace, UNDOTBS2, the old UNDOTBS1 tablespace still could not be dropped, and the database became very sluggish because it was still processing transaction data in the old undo tablespace. UNDOTBS1 also still contained rollback segments in ONLINE status, and the tablespace can be dropped only after all of its rollback segments are OFFLINE. Because archive logging was off, some of the rollback segments could no longer go offline automatically. Check the status of the rollback segments as follows:

select * from dba_rollback_segs where tablespace_name='UNDOTBS1';
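A per-status count gives a quicker overview than listing every segment. The second query below is an assumption about how to watch the rollback progress. V$FAST_START_TRANSACTIONS only reports transactions that the instance is recovering in the background, so it may return no rows in other situations.

```sql
-- How many rollback segments of the old undo tablespace are in each state.
SELECT status, COUNT(*) AS segments
FROM   dba_rollback_segs
WHERE  tablespace_name = 'UNDOTBS1'
GROUP  BY status;

-- Progress of transactions being rolled back by background recovery:
-- undo blocks already processed versus the total to process.
SELECT usn, state, undoblocksdone, undoblockstotal
FROM   v$fast_start_transactions;
```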

To avoid delaying the migration, we handled this in an unconventional way:

1. Create a new undo tablespace, UNDOTBS2.

2. Set the database's default undo tablespace to UNDOTBS2.

3. Force the database down with shutdown abort. This was acceptable because the database was a migration target that was not yet in service. Do not do this on a production database: the forced stop damages the data files of UNDOTBS1.

4. Open the database. The startup itself is normal, but the alter database open phase takes longer than usual. The alert log shows the database scanning the current redo and performing a media recovery.

5. After the database is open, log in as the sys user and check:

select * from dba_rollback_segs where tablespace_name='UNDOTBS1';

You will find that many rollback segments in the old undo tablespace UNDOTBS1 now have the status "PARTLY AVAILABLE", which here indicates that the data file is damaged.

6. Record the names (SEGMENT_NAME) of all rollback segments whose status is "PARTLY AVAILABLE".

7. Create a pfile: create pfile='/home/oracle/initORCL.ora' from spfile;

8. Shut the database down, again with a forced shutdown abort. Edit the pfile and set this parameter:

*._corrupted_rollback_segments=(_SYSSMU1$,_SYSSMU2$,_SYSSMU3$,_SYSSMU4$,_SYSSMU5$,_SYSSMU6$,_SYSSMU7$,_SYSSMU8$,_SYSSMU9$,_SYSSMU10$)

(Note: this allows the database to start when rollback segments are corrupted.)

The parameter value is the list of names of the rollback segments in "PARTLY AVAILABLE" status.

Add the parameter to the pfile.

9. Start the database from the pfile: startup pfile='/home/oracle/initORCL.ora';

10. After startup there is still a period of media recovery. Once the database is fully open, the UNDOTBS1 undo tablespace can be dropped.

11. After dropping the old tablespace, shut the database down. This time it closes normally with shutdown immediate. The database can then be opened normally with startup, and the undo tablespace works as expected from this point on.
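The SQL behind steps 1, 2, 6, and 10 can be sketched as below. The data file path, size, and autoextend setting are assumptions for illustration, not the values used in this migration. The _corrupted_rollback_segments setting from step 8 is deliberately left out, since it belongs in the pfile and is suitable only for a non-production database, as noted above.

```sql
-- Step 1: create the new undo tablespace.
-- The file path and size are assumed; adjust them to your storage layout.
CREATE UNDO TABLESPACE UNDOTBS2
  DATAFILE '/u01/app/oracle/oradata/ORCL/undotbs02.dbf'
  SIZE 10G AUTOEXTEND ON;

-- Step 2: make it the instance's undo tablespace
-- (SCOPE = BOTH requires that the instance was started from an spfile).
ALTER SYSTEM SET undo_tablespace = UNDOTBS2 SCOPE = BOTH;

-- Step 6: list the rollback segments to record for the pfile parameter.
SELECT segment_name, status
FROM   dba_rollback_segs
WHERE  tablespace_name = 'UNDOTBS1'
AND    status = 'PARTLY AVAILABLE';

-- Step 10: once the database is fully open, drop the old undo tablespace.
DROP TABLESPACE UNDOTBS1 INCLUDING CONTENTS AND DATAFILES;
```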

边栏推荐

- 谷歌 Chrome 浏览器发布 103.0.5060.114 补丁修复 0-day 漏洞

- [SOC FPGA] custom IP PWM breathing lamp

- C语言面试 写一个函数查找两个字符串中的第一个公共字符串

- [opencv] morphological filtering (2): open operation, morphological gradient, top hat, black hat

- Wechat applet hides the progress bar component of the video tag

- 基于FPGA的VGA协议实现

- Implementation of VGA protocol based on FPGA

- Ha Qu projection dark horse posture, only half a year to break through the 1000 yuan projector market!

- 2022 Android interview essential knowledge points, a comprehensive summary

- Pinduoduo lost the lawsuit: "bargain for free" infringed the right to know but did not constitute fraud, and was sentenced to pay 400 yuan

猜你喜欢

安装VMmare时候提示hyper-v / device defender 侧通道安全性

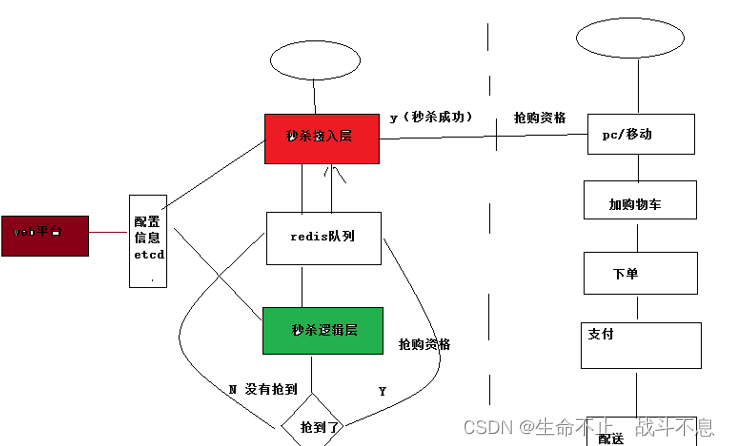

Ideas of high concurrency and high traffic seckill scheme

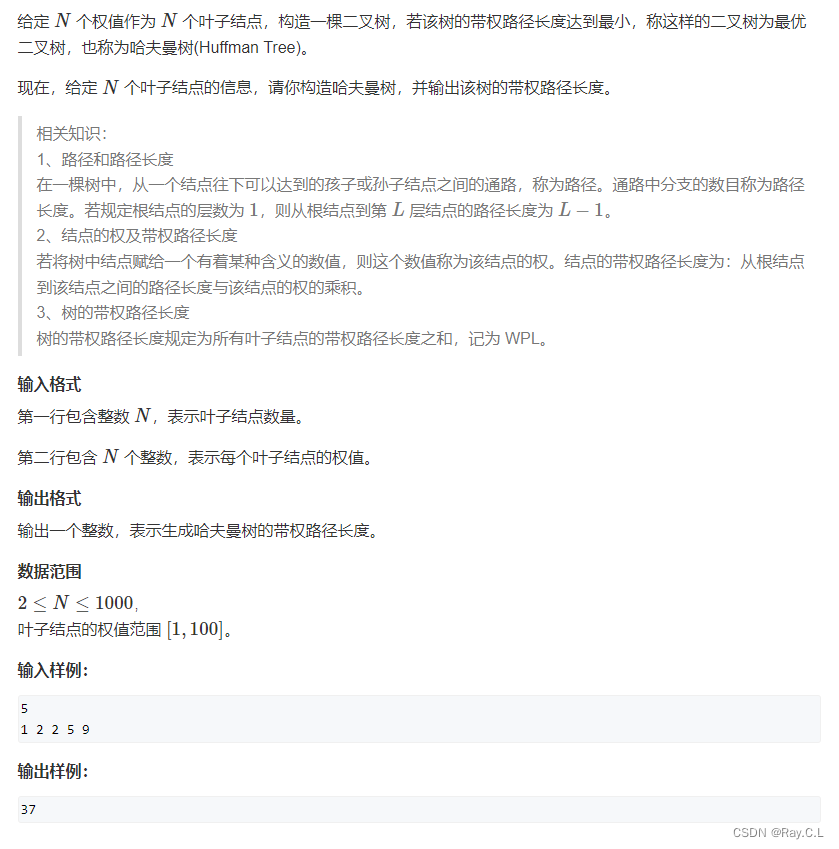

3531. Huffman tree

面试中有哪些经典的数据库问题?

POI导出Excel:设置字体、颜色、行高自适应、列宽自适应、锁住单元格、合并单元格...

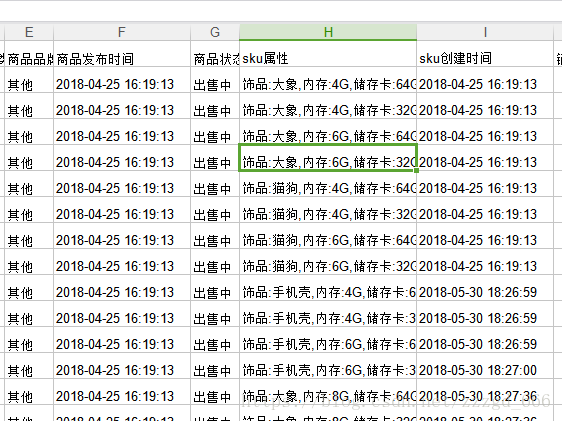

当前发布的SKU(销售规格)信息中包含疑似与宝贝无关的字

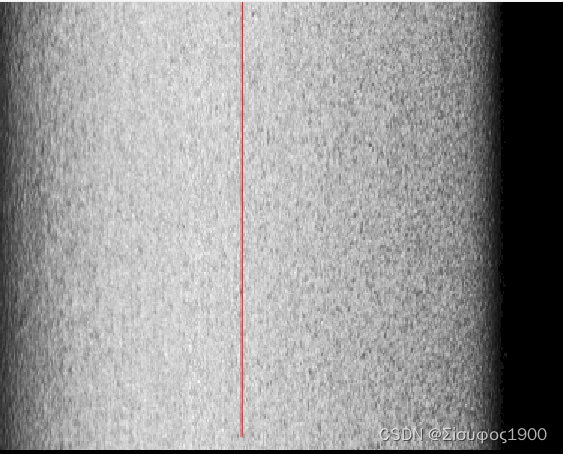

项目实战 五 拟合直线 获得中线

2022 Android interview essential knowledge points, a comprehensive summary

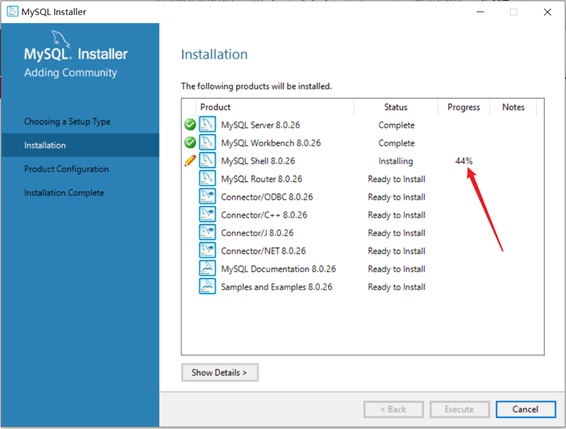

MySQL的安装

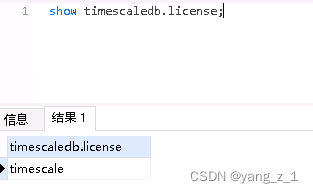

PostgreSQL database timescaledb function time_ bucket_ Gapfill() error resolution and license replacement

随机推荐

Leite smart home longhaiqi: from professional dimming to full house intelligence, 20 years of focus on professional achievements

c面试 加密程序:由键盘输入明文,通过加密程序转换成密文并输出到屏幕上。

项目实战 五 拟合直线 获得中线

Abnova 体外转录 mRNA工作流程和加帽方法介绍

牛客小白月赛52 E.分组求对数和(二分&容斥)

Ha Qu projection dark horse posture, only half a year to break through the 1000 yuan projector market!

C语言整理(待更新)

MySQL的安装

谷歌 Chrome 浏览器发布 103.0.5060.114 补丁修复 0-day 漏洞

POI导出Excel:设置字体、颜色、行高自适应、列宽自适应、锁住单元格、合并单元格...

[shell] summary of common shell commands and test judgment statements

How to solve sqlstate[hy000]: General error: 1364 field 'xxxxx' doesn't have a default value error

HKUST & MsrA new research: on image to image conversion, fine tuning is all you need

[start from scratch] detailed process of deploying yolov5 in win10 system (CPU, no GPU)

安装VMmare时候提示hyper-v / device defender 侧通道安全性

二十岁的我4面拿到字节跳动offer,至今不敢相信

string(讲解)

Crudini 配置文件编辑工具

C语言面试 写一个函数查找两个字符串中的第一个公共字符串

Postgresql源码(60)事务系统总结