"Dive into Deep Learning", Chapter 2 (Preliminaries), 2.3 Linear Algebra: study notes and exercise answers

2022-07-06 02:31:00 【coder_ sure】

2.3 Linear Algebra

Author's GitHub: github link

Exercises

1. Prove that the transpose of the transpose of a matrix $\mathbf{A}$ is $\mathbf{A}$ itself, i.e. $(\mathbf{A}^\top)^\top = \mathbf{A}$.

2. Given two matrices $\mathbf{A}$ and $\mathbf{B}$, show that the sum of their transposes equals the transpose of their sum, i.e. $\mathbf{A}^\top + \mathbf{B}^\top = (\mathbf{A} + \mathbf{B})^\top$.

3. Given an arbitrary square matrix $\mathbf{A}$, is $\mathbf{A} + \mathbf{A}^\top$ always symmetric? Why?

4. We defined a tensor X of shape $(2,3,4)$ in this section. What is the output of len(X)?

5. For a tensor X of arbitrary shape, does len(X) always correspond to the length of a particular axis of X? Which axis is it?

6. Run A/A.sum(axis=1) and see what happens. Can you analyze the reason?

7. Consider a tensor of shape $(2,3,4)$. What are the shapes of the outputs when summing along axes 0, 1 and 2?

8. Feed a tensor with 3 or more axes to the linalg.norm function and observe its output. What does this function compute for tensors of arbitrary shape?

Reference answers to the exercises (if you spot a mistake, corrections are welcome)

1. Prove that the transpose of the transpose of a matrix $\mathbf{A}$ is $\mathbf{A}$ itself, i.e. $(\mathbf{A}^\top)^\top = \mathbf{A}$.

import torch

A = torch.arange(12).reshape(3, 4)

A, (A.T).T

output:

(tensor([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]]), tensor([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]]))
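
The code above only confirms the identity for one example. A short entrywise argument covers the general case. The notation is an assumption that does not appear in the original post: $\mathbf{A}$ is taken to be an $m \times n$ matrix with entries $a_{ij}$, and $[\mathbf{M}]_{ij}$ denotes the entry of $\mathbf{M}$ in row $i$ and column $j$. By definition of the transpose, $[\mathbf{A}^\top]_{ij} = a_{ji}$, so applying the definition twice gives:

```latex
\[
\left[(\mathbf{A}^\top)^\top\right]_{ij}
  = \left[\mathbf{A}^\top\right]_{ji}
  = a_{ij}
  = \left[\mathbf{A}\right]_{ij},
\qquad 1 \le i \le m,\quad 1 \le j \le n .
\]
```

Both sides are $m \times n$ matrices with identical entries, hence $(\mathbf{A}^\top)^\top = \mathbf{A}$.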

2. Given two matrices $\mathbf{A}$ and $\mathbf{B}$, show that the sum of their transposes equals the transpose of their sum, i.e. $\mathbf{A}^\top + \mathbf{B}^\top = (\mathbf{A} + \mathbf{B})^\top$.

B = torch.tensor([[9, 8, 7,6], [5, 4, 3,2], [3, 4, 5,1]])

A.T+B.T ,(A+B).T

output:

(tensor([[ 9, 9, 11],

[ 9, 9, 13],

[ 9, 9, 15],

[ 9, 9, 12]]), tensor([[ 9, 9, 11],

[ 9, 9, 13],

[ 9, 9, 15],

[ 9, 9, 12]]))
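
As with exercise 1, the numerical check can be backed by an entrywise argument. The notation is assumed here rather than taken from the original post: $\mathbf{A}$ and $\mathbf{B}$ are both $m \times n$ matrices with entries $a_{ij}$ and $b_{ij}$, and $[\mathbf{M}]_{ij}$ is the entry of $\mathbf{M}$ in row $i$ and column $j$.

```latex
\[
\left[(\mathbf{A} + \mathbf{B})^\top\right]_{ij}
  = \left[\mathbf{A} + \mathbf{B}\right]_{ji}
  = a_{ji} + b_{ji}
  = \left[\mathbf{A}^\top\right]_{ij} + \left[\mathbf{B}^\top\right]_{ij}
  = \left[\mathbf{A}^\top + \mathbf{B}^\top\right]_{ij} .
\]
```

Both sides are $n \times m$ matrices and agree in every entry, so $\mathbf{A}^\top + \mathbf{B}^\top = (\mathbf{A} + \mathbf{B})^\top$.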

3. Given an arbitrary square matrix $\mathbf{A}$, is $\mathbf{A} + \mathbf{A}^\top$ always symmetric? Why?

Answer: Yes. Using the results of exercises 1 and 2, $(\mathbf{A} + \mathbf{A}^\top)^\top = \mathbf{A}^\top + (\mathbf{A}^\top)^\top = \mathbf{A}^\top + \mathbf{A} = \mathbf{A} + \mathbf{A}^\top$. The matrix equals its own transpose, which is the definition of a symmetric matrix.

A = torch.arange(9).reshape(3,3)

A

output:

tensor([[0, 1, 2],

[3, 4, 5],

[6, 7, 8]])

A+A.T

output:

tensor([[ 0, 4, 8],

[ 4, 8, 12],

[ 8, 12, 16]])
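
The same property can be spot-checked on a random square matrix. The sketch below uses plain Python lists instead of PyTorch so that it runs without extra dependencies; the helper names `transpose`, `add` and `is_symmetric` are hypothetical and not part of the original post.

```python
import random


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def add(a, b):
    """Elementwise sum of two matrices with the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def is_symmetric(m):
    """A matrix is symmetric when it equals its own transpose."""
    return m == transpose(m)


random.seed(0)
n = 4
# Random integer entries, so the comparison is exact (no rounding error).
A = [[random.randint(-9, 9) for _ in range(n)] for _ in range(n)]
S = add(A, transpose(A))
print(is_symmetric(S))
```

A random check like this is only a sanity test; the algebraic argument in the answer is what proves the statement for every square matrix.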

4. We defined a tensor X of shape $(2,3,4)$ in this section. What is the output of len(X)?

X = torch.arange(24).reshape(2, 3, 4)

len(X)

output:

2

5. For a tensor X of arbitrary shape, does len(X) always correspond to the length of a particular axis of X? Which axis is it?

Answer: Yes. For any tensor with at least one axis, len(X) always returns the length of axis 0 (the first axis).
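
A plain-Python analogy may help here: for a nested list, `len` also counts only the outermost level. The sketch below is not PyTorch code, and `nested_shape` is a hypothetical helper introduced only for illustration.

```python
def nested_shape(x):
    """Return the shape of a regularly nested list as a tuple."""
    shape = []
    while isinstance(x, list):
        shape.append(len(x))
        if not x:
            break
        x = x[0]
    return tuple(shape)


# A nested list laid out like a tensor of shape (2, 3, 4).
X = [[[0] * 4 for _ in range(3)] for _ in range(2)]
print(nested_shape(X))  # (2, 3, 4)
print(len(X))           # 2, the size of the outermost axis
```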

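6. Run A/A.sum(axis=1) and see what happens. Can you analyze the reason?

Answer: It fails with a RuntimeError. A has shape (5, 4), while A.sum(axis=1) collapses axis 1 and returns a 1-D tensor of shape (5,). Broadcasting aligns the two shapes starting from the last axis, so the 4 in (5, 4) is compared with the 5 in (5,); the sizes differ and neither is 1, so the division cannot be broadcast. Passing keepdims=True keeps the summed axis with size 1, giving shape (5, 1), which broadcasts against (5, 4) and divides each row by its own sum. (Note: the two tensors do not need the same number of axes to broadcast; a tensor of shape (4,) would broadcast with A. What matters is that the sizes are compatible axis by axis, counted from the right.)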

A = torch.arange(20, dtype=torch.float32).reshape(5, 4)

A/A.sum(axis=1,keepdims=True)

output:

tensor([[0.0000, 0.1667, 0.3333, 0.5000],

[0.1818, 0.2273, 0.2727, 0.3182],

[0.2105, 0.2368, 0.2632, 0.2895],

[0.2222, 0.2407, 0.2593, 0.2778],

[0.2286, 0.2429, 0.2571, 0.2714]])

A = torch.arange(20, dtype=torch.float32).reshape(5, 4)

A/A.sum(axis=1)

output:

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-59-86c8759e15d3> in <module>()

1 A = torch.arange(20, dtype=torch.float32).reshape(5, 4)

----> 2 A/A.sum(axis=1)

RuntimeError: The size of tensor a (4) must match the size of tensor b (5) at non-singleton dimension 1
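
The error message follows from the broadcasting rule: shapes are aligned from the trailing axis, and each pair of sizes must be equal or contain a 1. The function below is a minimal plain-Python sketch of that rule, not PyTorch's implementation; the name `broadcast_shape` is hypothetical.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the broadcast result shape of shapes a and b, or raise ValueError."""
    result = []
    # Walk both shapes from the last axis; a missing leading axis counts as size 1.
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x == y or x == 1 or y == 1:
            result.append(y if x == 1 else x)
        else:
            raise ValueError(f"cannot broadcast {a} with {b}")
    return tuple(reversed(result))


print(broadcast_shape((5, 4), (5, 1)))  # (5, 4): the keepdims=True case
print(broadcast_shape((5, 4), (4,)))    # (5, 4): different number of axes is fine
try:
    broadcast_shape((5, 4), (5,))       # the failing case from this exercise
except ValueError as err:
    print(err)
```

The last call compares 4 with 5 on the trailing axis, which is the same mismatch that the RuntimeError above reports.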

7. Consider a tensor of shape $(2,3,4)$. What are the shapes of the outputs when summing along axes 0, 1 and 2?

H=torch.arange(24).reshape(2,3,4)

H

output:

tensor([[[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]],

[[12, 13, 14, 15],

[16, 17, 18, 19],

[20, 21, 22, 23]]])

H0 = H.sum(axis=0)

H1 = H.sum(axis=1)

H2 = H.sum(axis=2)

H0, H1, H2

output:

(tensor([[12, 14, 16, 18],

[20, 22, 24, 26],

[28, 30, 32, 34]]), tensor([[12, 15, 18, 21],

[48, 51, 54, 57]]), tensor([[ 6, 22, 38],

[54, 70, 86]]))
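
Reading the shapes off the output above: summing along axis 0 gives shape (3, 4), along axis 1 gives (2, 4), and along axis 2 gives (2, 3). In other words, the summed axis is removed from the shape. The following plain-Python sketch states that rule; `shape_after_sum` is a hypothetical helper, and its `keepdims` parameter only mimics the flag of the same name used in exercise 6.

```python
def shape_after_sum(shape, axis, keepdims=False):
    """Shape of the result when a tensor of the given shape is summed along one axis."""
    if keepdims:
        # The reduced axis is kept with size 1.
        return tuple(1 if i == axis else n for i, n in enumerate(shape))
    # The reduced axis disappears from the shape.
    return tuple(n for i, n in enumerate(shape) if i != axis)


for axis in range(3):
    print(axis, shape_after_sum((2, 3, 4), axis))
# 0 (3, 4)
# 1 (2, 4)
# 2 (2, 3)
```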

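8. Feed a tensor with 3 or more axes to the linalg.norm function and observe its output. What does this function compute for tensors of arbitrary shape?

Answer: With default arguments, the function treats the tensor as one long vector, whatever its shape, and computes its $L_2$ norm, i.e. the square root of the sum of the squares of all elements, $\sqrt{\sum_i x_i^2}$. The code below uses torch.norm and squares the result, so a tensor of ones returns its number of elements (24 and 48).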

Z=torch.ones(2,3,4)

W=torch.ones(2,2,3,4)

torch.norm(Z)*torch.norm(Z),torch.norm(W)*torch.norm(W)

output:

(tensor(24.0000), tensor(48.))
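
To make the "flatten, then take the $L_2$ norm" reading concrete, here is a minimal sketch in plain Python rather than PyTorch. The helpers `flatten` and `l2_norm` are hypothetical names, and the nested list Z only imitates a tensor of ones with shape (2, 3, 4).

```python
import math


def flatten(x):
    """Yield every scalar in an arbitrarily nested list."""
    if isinstance(x, list):
        for item in x:
            yield from flatten(item)
    else:
        yield x


def l2_norm(x):
    """Square root of the sum of squares of all elements, ignoring the nesting."""
    return math.sqrt(sum(v * v for v in flatten(x)))


# Nested list of ones laid out like a tensor of shape (2, 3, 4).
Z = [[[1.0] * 4 for _ in range(3)] for _ in range(2)]
# Squaring the norm recovers the element count, up to floating-point rounding.
print(l2_norm(Z) ** 2)
```

The squared result is approximately 24, matching the first value in the output above.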

边栏推荐

- Black high-end responsive website dream weaving template (adaptive mobile terminal)

- 0211 embedded C language learning

- 更改对象属性的方法

- 事故指标统计

- Advanced technology management - what is the physical, mental and mental strength of managers

- ReferenceError: primordials is not defined错误解决

- [Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 16

- SQL statement

- 更换gcc版本后,编译出现make[1]: cc: Command not found

- Audio and video engineer YUV and RGB detailed explanation

猜你喜欢

MySQL winter vacation self-study 2022 11 (9)

![[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 15](/img/72/0fe9cb032339d5f1ccf6f6c24edc57.jpg)

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 15

PAT甲级 1033 To Fill or Not to Fill

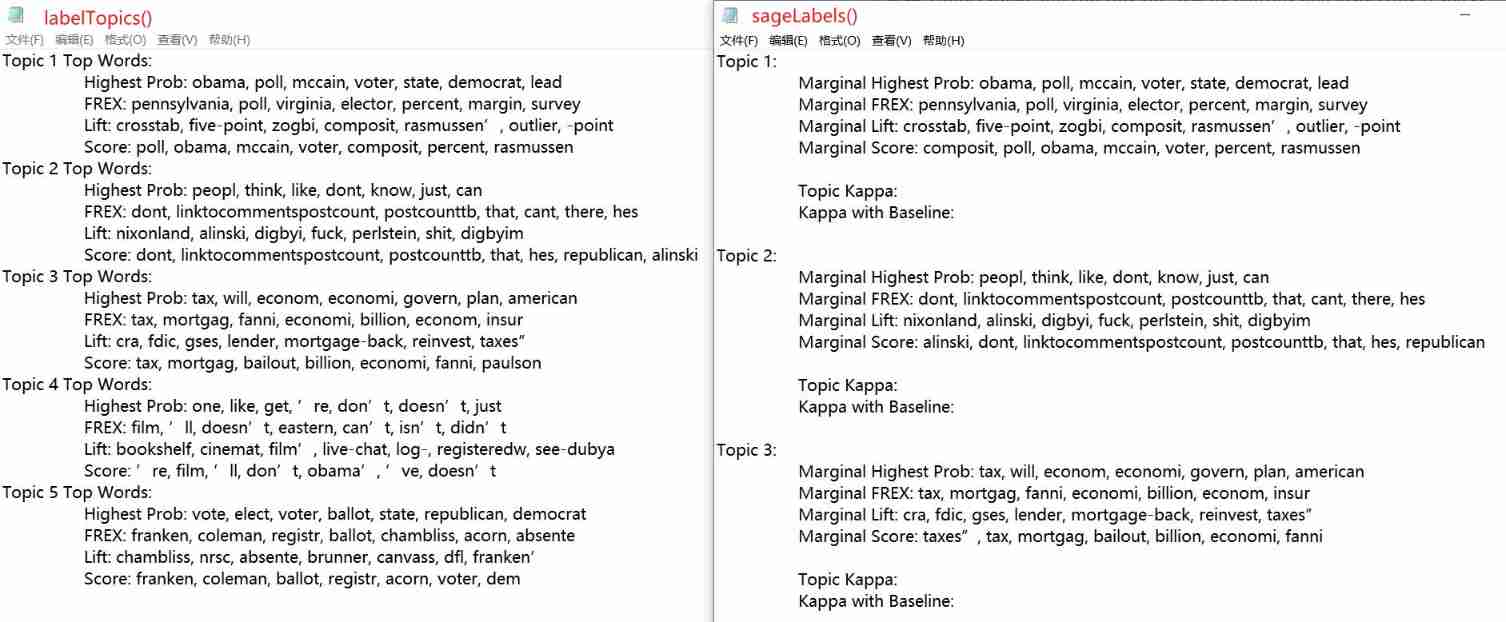

Structural theme model (I) STM package workflow

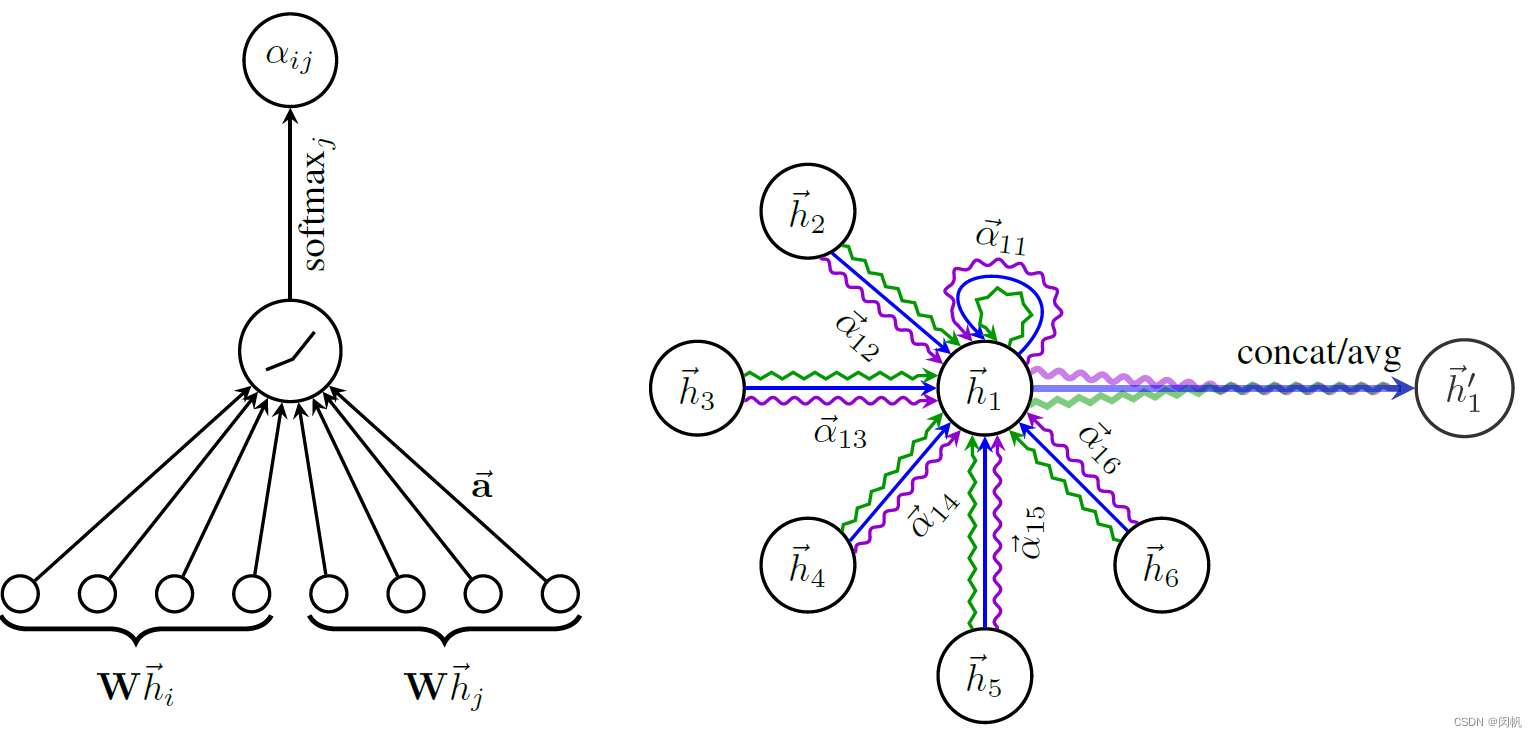

论文笔记: 图神经网络 GAT

![[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 16](/img/c3/f3746b161012acc3751b2bd0b8f663.jpg)

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 16

Keyword static

Formatting occurs twice when vs code is saved

一位博士在华为的22年

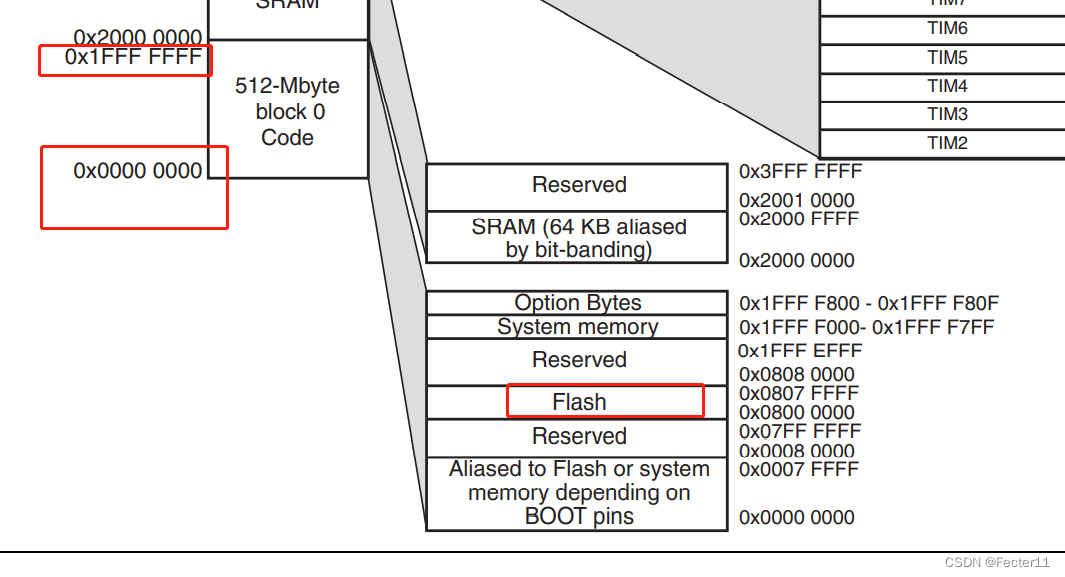

Zero basic self-study STM32 wildfire review of GPIO use absolute address to operate GPIO

随机推荐

有沒有sqlcdc監控多張錶 再關聯後 sink到另外一張錶的案例啊?全部在 mysql中操作

零基础自学STM32-野火——GPIO复习篇——使用绝对地址操作GPIO

Black high-end responsive website dream weaving template (adaptive mobile terminal)

RDD conversion operator of spark

[community personas] exclusive interview with Ma Longwei: the wheel is not easy to use, so make it yourself!

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 13

大厂镜像库

爬虫(9) - Scrapy框架(1) | Scrapy 异步网络爬虫框架

Minecraft 1.18.1, 1.18.2 module development 22 Sniper rifle

【机器人库】 awesome-robotics-libraries

Looking at the trend of sequence modeling of recommended systems in 2022 from the top paper

Exness: Mercedes Benz's profits exceed expectations, and it is predicted that there will be a supply chain shortage in 2022

更换gcc版本后,编译出现make[1]: cc: Command not found

Crawler (9) - scrape framework (1) | scrape asynchronous web crawler framework

[Digital IC manual tearing code] Verilog asynchronous reset synchronous release | topic | principle | design | simulation

一题多解,ASP.NET Core应用启动初始化的N种方案[上篇]

高数_向量代数_单位向量_向量与坐标轴的夹角

Li Kou today's question -729 My schedule I

Sword finger offer 30 Stack containing min function

Use Scrollview and tabhost to realize vertical scrollbars and tabs