Bayes' law

2022-07-07 08:09:00 【Steven Devin】

1. Probability theory

First, a quick review of some probability theory.

Joint probability: the probability that events A and B occur together. Its factorization is also called the product rule.

$$P(A,B) = P(A \cap B) = P(A \mid B)P(B) = P(B \mid A)P(A)$$

Sum rule: the probability that at least one of the events A and B occurs.

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$

If A and B are mutually exclusive:

$$P(A \cup B) = P(A) + P(B)$$

Total probability: if event A can arise through any of several possible events $B_1, \dots, B_n$ that are mutually exclusive and together cover all cases, then:

$$P(A) = \sum_{i=1}^{n} P(A \mid B_i)P(B_i)$$

Conditional probability: the probability that event A occurs given that event B has occurred (for $P(B) > 0$).

$$P(A \mid B) = \frac{P(A,B)}{P(B)}$$
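The four rules above can be checked numerically on a small example. The sketch below assumes a made-up joint distribution over two binary events (the table values and the helper `prob` are illustrative, not from the original article) and uses exact fractions so the identities hold without rounding error.

```python
from fractions import Fraction as F

# Hypothetical joint distribution P(A, B) over two binary events.
# Keys are (A occurs, B occurs); the values are illustrative only.
joint = {
    (True, True): F(1, 10),
    (True, False): F(2, 10),
    (False, True): F(3, 10),
    (False, False): F(4, 10),
}

def prob(pred):
    """Sum the joint probability over outcomes (a, b) where pred holds."""
    return sum(p for (a, b), p in joint.items() if pred(a, b))

p_a = prob(lambda a, b: a)
p_b = prob(lambda a, b: b)
p_a_and_b = prob(lambda a, b: a and b)
p_a_or_b = prob(lambda a, b: a or b)

# Conditional probability: P(A|B) = P(A,B) / P(B)
p_a_given_b = p_a_and_b / p_b
p_b_given_a = p_a_and_b / p_a
p_a_given_not_b = prob(lambda a, b: a and not b) / (1 - p_b)

# Product rule: P(A,B) = P(A|B)P(B) = P(B|A)P(A)
assert p_a_and_b == p_a_given_b * p_b == p_b_given_a * p_a

# Sum rule: P(A or B) = P(A) + P(B) - P(A and B)
assert p_a_or_b == p_a + p_b - p_a_and_b

# Total probability, using the partition {B, not B}
assert p_a == p_a_given_b * p_b + p_a_given_not_b * (1 - p_b)
```

Here the partition in the total probability check is simply B and its complement; with more than two cases the same sum runs over every $B_i$.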

2. Bayes' law

In machine learning, given observed training data B, we are often interested in finding the best hypothesis A.

The best hypothesis is the most probable one. One way to obtain the prior probability of A is to take every possible data set $B_i$, weight the probability of A given $B_i$ by the probability of $B_i$, and add the results up.

By this definition, the probability of hypothesis A is:

$$P(A) = \sum_{i=1}^{n} P(A \mid B_i)P(B_i)$$

Does that look familiar?

This is just the law of total probability: event A can be brought about by any of the data $B_1, B_2, \dots, B_n$.

For given training data B, Bayes' theorem provides a more direct way to find the probability of hypothesis A.

Bayes' law uses:

- the prior probability $P(A)$ of hypothesis A

- the prior probability $P(B)$ of the observed data B

- the probability $P(B \mid A)$ of observing data B given hypothesis A

From these it computes $P(A \mid B)$, the probability of hypothesis A given the observed data B. This is also known as the posterior probability, because it reflects how the data B influences the probability of hypothesis A.

The prior probability $P(A)$, by contrast, does not depend on B.

Bayes' formula:

$$P(A \mid B) = \frac{P(B \mid A)P(A)}{P(B)}$$

The derivation of Bayes' formula is simple: combine the conditional probability and joint probability from the first part.

Conditional probability:

$$P(A \mid B) = \frac{P(A,B)}{P(B)}$$

Joint probability:

$$P(A,B) = P(B \mid A)P(A)$$

Substituting the second expression into the numerator of the first gives Bayes' formula.
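As a small worked example of the formula, the sketch below computes a posterior from a prior and two likelihoods. The function name `bayes_posterior` and all the numbers are hypothetical; $P(B)$ is obtained from the law of total probability over A and its complement.

```python
from fractions import Fraction

def bayes_posterior(prior_a, lik_b_given_a, lik_b_given_not_a):
    """Return P(A|B) = P(B|A)P(A) / P(B).

    P(B) is expanded with the law of total probability:
    P(B) = P(B|A)P(A) + P(B|not A)P(not A).
    """
    p_b = lik_b_given_a * prior_a + lik_b_given_not_a * (1 - prior_a)
    return lik_b_given_a * prior_a / p_b

# Illustrative numbers only: a rare hypothesis (prior 1%) whose data B is
# observed 90% of the time when A is true and 5% of the time when it is not.
posterior = bayes_posterior(
    prior_a=Fraction(1, 100),
    lik_b_given_a=Fraction(9, 10),
    lik_b_given_not_a=Fraction(1, 20),
)
print(posterior)         # 2/13
print(float(posterior))  # roughly 0.154
```

Even with a high likelihood $P(B \mid A)$, the posterior stays modest here because the prior $P(A)$ is small.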

3. Maximum a posteriori (MAP)

Sometimes, given data B, we want the most probable hypothesis A in the hypothesis space. This is called the maximum a posteriori (MAP) hypothesis.

$$A_{MAP} = \arg\max_{A} P(A \mid B)$$

That is:

$$= \arg\max_{A} \frac{P(B \mid A)P(A)}{P(B)}$$

We can drop $P(B)$ because it does not depend on the hypothesis A:

$$= \arg\max_{A} P(B \mid A)P(A)$$
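The MAP rule can be illustrated with a minimal sketch over a finite hypothesis space. Everything below is assumed for illustration: the hypotheses are three candidate coin biases, the priors are made up, and the data B is 8 heads in 10 tosses with a binomial likelihood.

```python
from math import comb

# Hypothetical data B: 8 heads in 10 tosses.
heads, tosses = 8, 10

# Hypothetical hypothesis space: candidate head probabilities with priors P(A).
priors = {0.3: 0.25, 0.5: 0.50, 0.8: 0.25}

def likelihood(theta):
    """Binomial likelihood P(B|A) of the observed tosses under bias theta."""
    return comb(tosses, heads) * theta**heads * (1 - theta) ** (tosses - heads)

# Unnormalized posterior P(B|A)P(A) for each hypothesis.
unnormalized = {theta: likelihood(theta) * p for theta, p in priors.items()}

# Normalizing by P(B) gives the true posterior P(A|B)...
evidence = sum(unnormalized.values())
posterior = {theta: u / evidence for theta, u in unnormalized.items()}

# ...but the argmax is the same with or without the division by P(B).
a_map = max(unnormalized, key=unnormalized.get)
assert a_map == max(posterior, key=posterior.get)
print(a_map)  # 0.8
```

Because $P(B)$ is the same positive constant for every hypothesis, dividing by it rescales all scores equally and leaves the maximizer unchanged, which is why the last step of the derivation can drop it.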

边栏推荐

- Linux server development, detailed explanation of redis related commands and their principles

- ZCMU--1396: 队列问题(2)

- Fast parsing intranet penetration escorts the document encryption industry

- Network learning (III) -- highly concurrent socket programming (epoll)

- JS cross browser parsing XML application

- uniapp 移动端强制更新功能

- Open source ecosystem | create a vibrant open source community and jointly build a new open source ecosystem!

- 船载雷达天线滑环的使用

- JSON data flattening pd json_ normalize

- 让Livelink初始Pose与动捕演员一致

猜你喜欢

Hisense TV starts the developer mode

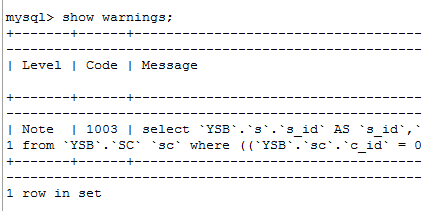

The charm of SQL optimization! From 30248s to 0.001s

船载雷达天线滑环的使用

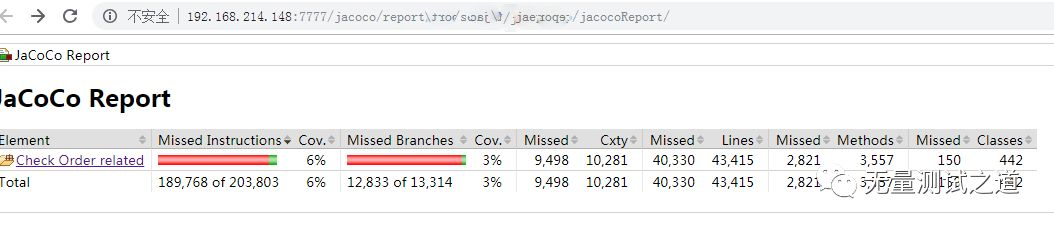

快速使用 Jacoco 代码覆盖率统计

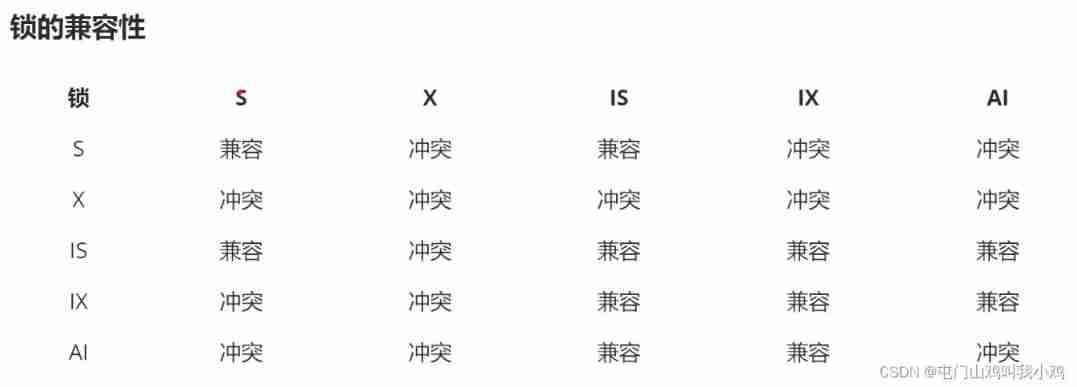

Linux server development, MySQL transaction principle analysis

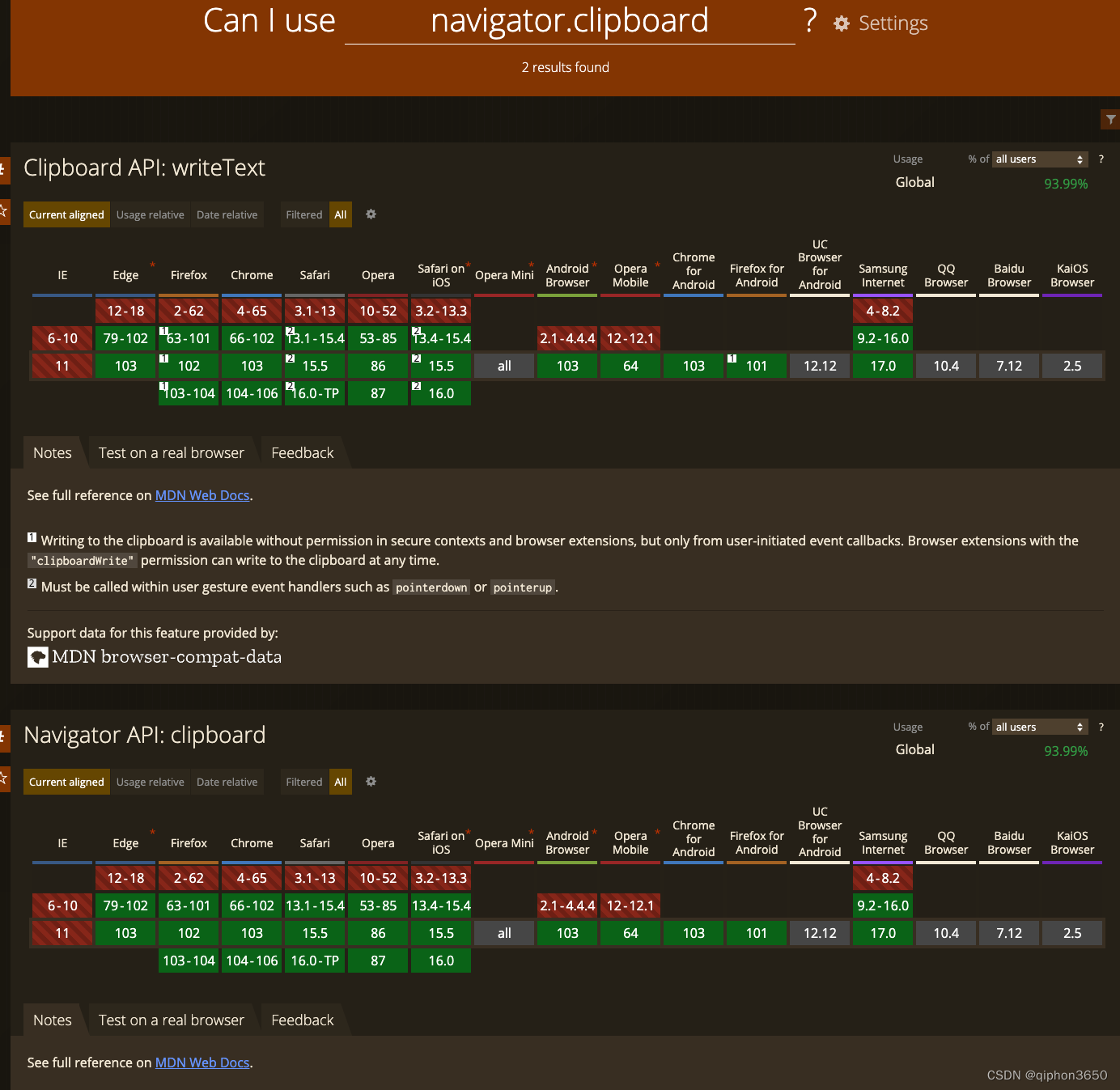

JS复制图片到剪切板 读取剪切板

Es FAQ summary

Few shot Learning & meta learning: small sample learning principle and Siamese network structure (I)

![[quick start of Digital IC Verification] 15. Basic syntax of SystemVerilog learning 2 (operators, type conversion, loops, task/function... Including practical exercises)](/img/e1/9a047ef13299b94b5314ee6865ba26.png)

[quick start of Digital IC Verification] 15. Basic syntax of SystemVerilog learning 2 (operators, type conversion, loops, task/function... Including practical exercises)

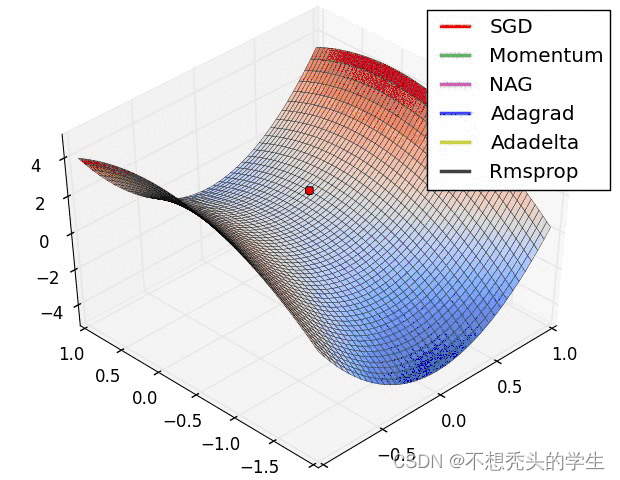

调用 pytorch API完成线性回归

随机推荐

Es FAQ summary

这5个摸鱼神器太火了!程序员:知道了快删!

面试题(CAS)

The element with setfieldsvalue set is obtained as undefined with GetFieldValue

Relevant data of current limiting

Find the mode in the binary search tree (use medium order traversal as an ordered array)

Paddlepaddle 29 dynamically modify the network structure without model definition code (relu changes to prelu, conv2d changes to conv3d, 2D semantic segmentation model changes to 3D semantic segmentat

QT learning 28 toolbar in the main window

CDC (change data capture technology), a powerful tool for real-time database synchronization

Network learning (II) -- Introduction to socket

C language flight booking system

Minimum absolute difference of binary search tree (use medium order traversal as an ordered array)

LeetCode简单题之找到一个数字的 K 美丽值

Roulette chart 2 - writing of roulette chart code

Example of file segmentation

Linux server development, MySQL transaction principle analysis

Leetcode 40: combined sum II

【数字IC验证快速入门】14、SystemVerilog学习之基本语法1(数组、队列、结构体、枚举、字符串...内含实践练习)

复杂网络建模(一)

Uniapp mobile terminal forced update function