
Deep learning - LSTM Basics

2022-07-05 03:44:00 【Guan Longxin】

1. RNN

An RNN tries to remember all past information.

(1) Definition and characteristics

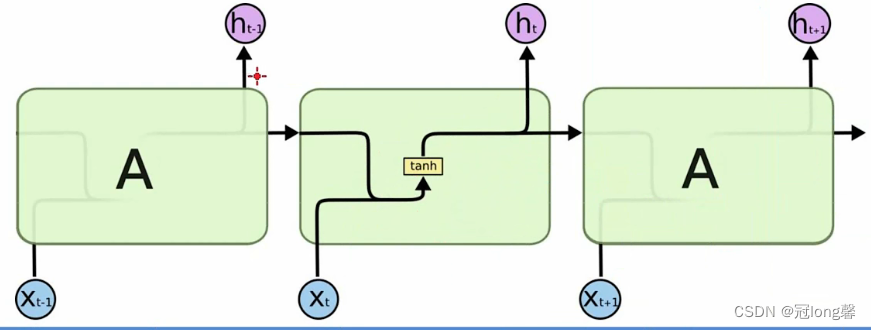

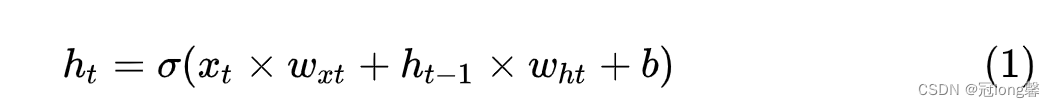

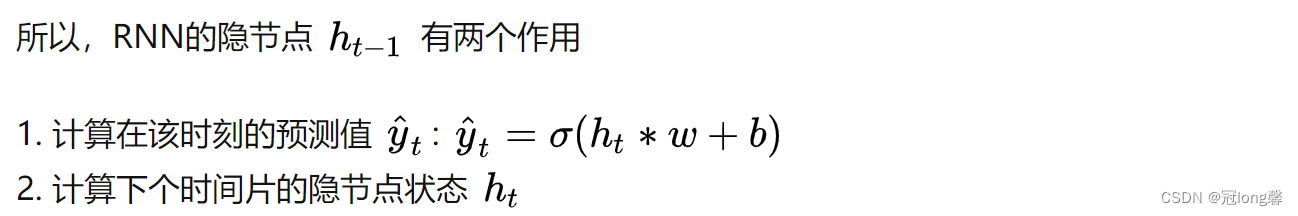

RNNs perform well on time-series data because, at time step $t$, the RNN takes the hidden state from time step $t-1$ as an additional input to the current step.
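A minimal sketch of that recurrence, assuming a scalar hidden state (hidden size 1) so the arithmetic stays readable. The function `rnn_forward` and the weight names `w_xh`, `w_hh`, `b_h` are hypothetical; a real RNN uses weight matrices instead of scalars.

```python
import math


def rnn_forward(xs, w_xh, w_hh, b_h, h0=0.0):
    """Run a scalar (hidden size 1) vanilla RNN over a sequence.

    At each step the previous hidden state h_{t-1} is fed back in
    together with the current input x_t:
        h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b_h)
    """
    h = h0
    hs = []
    for x in xs:
        h = math.tanh(w_xh * x + w_hh * h + b_h)  # h_{t-1} is an input to step t
        hs.append(h)
    return hs


hs = rnn_forward([1.0, 0.0, 0.0], w_xh=1.0, w_hh=0.5, b_h=0.0)
print(hs)
```

Only the first input is nonzero, yet $h_2$ and $h_3$ are still nonzero: the information reaches later steps only through $h_{t-1}$, and it fades a little at every step.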

(2) Problems

- Long-term dependencies: as the number of time steps grows, an RNN loses the ability to connect information across that distance.

- Vanishing gradients: vanishing and exploding gradients are caused by the repeated multiplication by the recurrent weight matrix across time steps.
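To see why repeated multiplication matters, here is a deliberately simplified illustration, assuming a scalar linear recurrence $h_t = w h_{t-1}$ (no activation), for which $\partial h_T / \partial h_0 = w^T$. In a real RNN each factor is the Jacobian of one step, which involves the recurrent weight matrix and the tanh derivative; `repeated_factor` is a hypothetical helper.

```python
def repeated_factor(w, steps):
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # one chain-rule factor per time step
    return grad


for w in (0.5, 1.0, 1.5):
    print(f"w = {w}: gradient factor after 50 steps = {repeated_factor(w, 50):.3e}")
```

With $|w| < 1$ the factor shrinks toward zero (vanishing); with $|w| > 1$ it blows up (exploding); only $|w| = 1$ keeps its magnitude stable.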

LSTM can handle the long-term dependencies that RNNs struggle with because it introduces gate mechanisms that control which features flow on and which are discarded.
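A rough way to see the effect of the gates, under a simplifying assumption: since $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, the direct term of $\partial c_t / \partial c_{t-1}$ is just $f_t$ (this ignores the indirect paths through $h_{t-1}$, which also feed the gates). Along the cell-state path the gradient factor is therefore a product of forget-gate values, which the network can learn to keep close to 1. The helper names and the pre-activation values below are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cell_path_gradient(forget_preacts):
    """Product of forget-gate values along the direct cell-state path.

    Since c_t = f_t * c_{t-1} + i_t * g_t, the direct term of
    d c_t / d c_{t-1} is f_t (indirect paths through h_{t-1} are ignored).
    """
    grad = 1.0
    for z in forget_preacts:
        grad *= sigmoid(z)  # f_t = sigmoid(z_t), always in (0, 1)
    return grad


# Forget gate nearly open (f_t ~ 0.99) vs. half closed (f_t = 0.5), 50 steps each.
keep = cell_path_gradient([4.6] * 50)
drop = cell_path_gradient([0.0] * 50)
print(f"f ~ 0.99: {keep:.3f}   f = 0.5: {drop:.3e}")
```

With $f_t \approx 0.99$ the factor after 50 steps is still about 0.6, while $f_t = 0.5$ drives it to roughly $10^{-15}$, the same collapse as in the RNN case.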

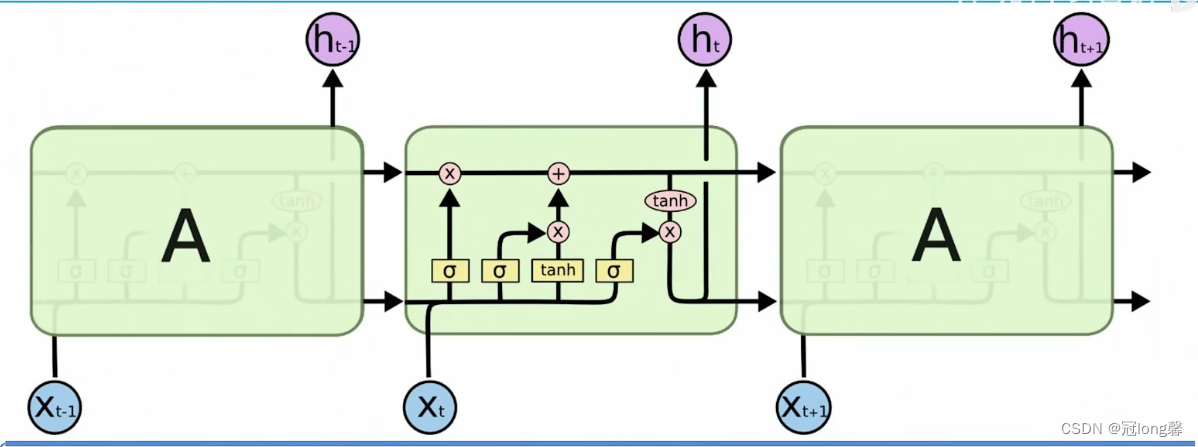

2. LSTM

(1) Definition and characteristics

LSTM sets up a memory cell and remembers selectively.

- Three gates: forget gate, input gate, output gate

- Two states: the cell state $c_t$ (long-term memory) and the hidden state $h_t$ (short-term memory)

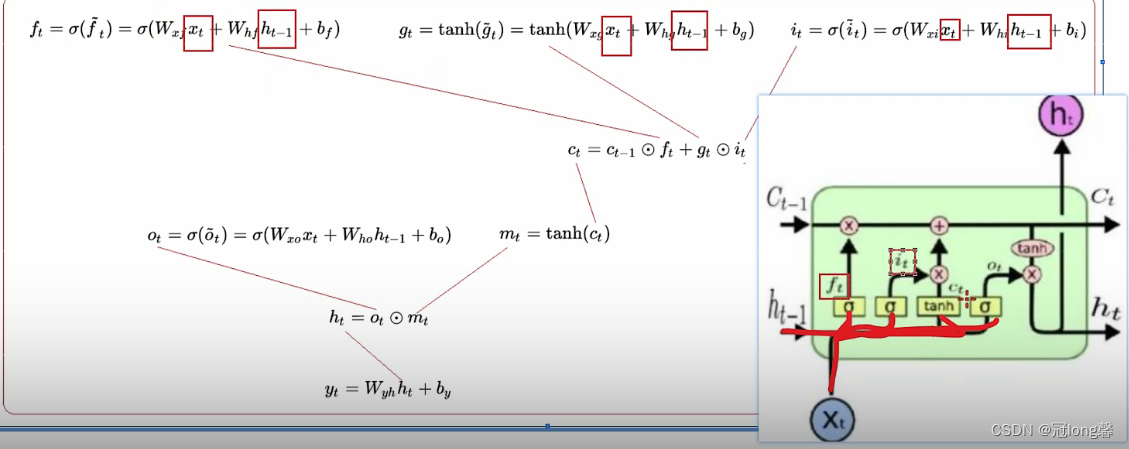

(2) Forward propagation

Selectively retain past memory and absorb new knowledge.

- Forget gate $f_t$:

① $f_t=\sigma(W_{xf}x_t+W_{hf}h_{t-1}+b_f)$

② Interpretation: $f_t$ uses the sigmoid function to choose which parts of the previous cell state $c_{t-1}$ to remember and which to forget.

Intuitively, the brain's capacity is limited: when new information arrives, we have to selectively forget some weak old memories.

- Input gate $i_t$:

① $i_t=\sigma(W_{xi}x_t+W_{hi}h_{t-1}+b_i)$

Interpretation: $i_t$ uses the sigmoid function to select which parts of the new candidate information $g_t$ to learn.

② $g_t=\tanh(W_{xg}x_t+W_{hg}h_{t-1}+b_g)$

Not all new input is useful; we only need to remember the relevant parts.

- Cell state (long-term memory) $c_t$:

① $c_t=f_t \odot c_{t-1}+i_t \odot g_t$

Interpretation: the new memory combines the previous memory with newly learned information, where $f_t$ filters the old memory and $i_t$ filters the new information ($\odot$ denotes element-wise multiplication).

Selectively combining the old memory with the new information forms the new memory.

- Output gate $o_t$:

① $o_t=\sigma(W_{xo}x_t+W_{ho}h_{t-1}+b_o)$

Interpretation: $o_t$ uses the sigmoid function to select which parts of the squashed memory $\tanh(c_t)$ to output.

② $m_t=\tanh(c_t)$

Interpretation: $c_t$ is passed through tanh, which scales the memory into $(-1, 1)$ before it is used.

③ $h_t=o_t \odot m_t$. The resulting $h_t$ is output and also fed into the next time step $t+1$.

- Output $y_t$:

① $y_t=W_{yh}h_t+b_y$
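The equations above can be collected into one forward step. This is a minimal sketch in plain Python (lists instead of NumPy arrays, to stay dependency-free); the parameter dictionary layout, the helpers `matvec`, `add`, `init_params`, and the random initialization are hypothetical choices for illustration, not the author's implementation.

```python
import math
import random


def matvec(W, v):
    """Matrix (list of rows) times vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def add(*vs):
    """Element-wise sum of equal-length vectors."""
    return [sum(xs) for xs in zip(*vs)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM forward step; p maps names like 'W_xf', 'W_hf', 'b_f' to weights."""
    def gate(name, act):
        pre = add(matvec(p["W_x" + name], x_t), matvec(p["W_h" + name], h_prev), p["b_" + name])
        return [act(z) for z in pre]

    f = gate("f", sigmoid)    # forget gate f_t
    i = gate("i", sigmoid)    # input gate i_t
    g = gate("g", math.tanh)  # candidate information g_t
    o = gate("o", sigmoid)    # output gate o_t
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]  # c_t = f*c_{t-1} + i*g
    m = [math.tanh(ct) for ct in c]                                      # m_t = tanh(c_t)
    h = [ot * mt for ot, mt in zip(o, m)]                                # h_t = o*m
    y = add(matvec(p["W_yh"], h), p["b_y"])                              # y_t = W_yh h_t + b_y
    return h, c, y


def init_params(n_x, n_h, n_y, seed=0):
    """Small random weights and zero biases (illustration only)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for name in "figo":
        p["W_x" + name] = mat(n_h, n_x)
        p["W_h" + name] = mat(n_h, n_h)
        p["b_" + name] = [0.0] * n_h
    p["W_yh"] = mat(n_y, n_h)
    p["b_y"] = [0.0] * n_y
    return p


params = init_params(n_x=3, n_h=4, n_y=2)
h, c, y = lstm_step([1.0, -0.5, 0.2], [0.0] * 4, [0.0] * 4, params)
print(len(h), len(c), len(y))  # prints: 4 4 2
```

Each of the four gate computations ($f_t$, $i_t$, $g_t$, $o_t$) has its own $(W_x, W_h, b)$ triple, and the output layer adds $(W_{yh}, b_y)$ on top.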

(3) Interpretation

① The sigmoid gates $f_t$ and $i_t$ selectively keep the old memory $c_{t-1}$ and learn the new information $g_t$:

$c_t=f_t \odot c_{t-1}+i_t \odot g_t$

② The sigmoid gate $o_t$ filters the memory $c_t$ into the short-term memory $h_t$:

$h_t=o_t \odot m_t$

The forward pass, in summary:

LSTM realizes long- and short-term memory through three gates and two states. First, the forget gate $f_t$ chooses which parts of the old memory $c_{t-1}$ to keep; then the input gate $i_t$ selectively learns the new candidate information $g_t$. Adding the filtered old and new memories gives the new long-term memory $c_t$. Finally, the output gate $o_t$ selectively reads from the memory $\tanh(c_t)$ to produce the short-term memory $h_t$, and passing $h_t$ through the output layer gives the output value $y_t$.
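The summary above can be traced step by step on a toy sequence. This sketch assumes input, hidden and output sizes of 1 and hand-picked (not trained) weights in a hypothetical `(w_x, w_h, b)` format: the forget gate is biased open, and the input gate opens mainly when $x_t = 1$. The function `lstm_sequence` is also hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_sequence(xs, w, h0=0.0, c0=0.0):
    """Unroll a scalar LSTM (input, hidden and output size 1) over a sequence.

    w maps each gate name to a (w_x, w_h, b) triple and "y" to (w_yh, b_y).
    Returns the outputs y_t and the final (h, c) state.
    """
    h, c = h0, c0
    ys = []
    for x in xs:
        pre = {k: w[k][0] * x + w[k][1] * h + w[k][2] for k in "figo"}
        f, i, o = sigmoid(pre["f"]), sigmoid(pre["i"]), sigmoid(pre["o"])
        g = math.tanh(pre["g"])
        c = f * c + i * g                      # 1) keep filtered old memory, 2) add filtered new info
        h = o * math.tanh(c)                   # 3) output gate reads the memory -> short-term h_t
        ys.append(w["y"][0] * h + w["y"][1])   # 4) output layer gives y_t
    return ys, (h, c)


weights = {"f": (0.0, 0.0, 4.0),   # forget gate biased open (f ~ 0.98)
           "i": (5.0, 0.0, -2.5),  # input gate opens mainly when x = 1
           "g": (2.0, 0.0, 0.0),
           "o": (0.0, 0.0, 2.0),
           "y": (1.0, 0.0)}
ys, (h, c) = lstm_sequence([1.0, 0.0, 0.0, 0.0, 0.0], weights)
print([round(v, 3) for v in ys], round(c, 3))
```

Only the first input is 1, yet after four more steps the cell state is still above 0.8: the forget gate (about 0.98) carries the stored value forward almost unchanged, and with $x_t = 0$ the candidate $g_t$ adds nothing new.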

边栏推荐

- [learning notes] month end operation -gr/ir reorganization

- English essential vocabulary 3400

- [wp][入门]刷弱类型题目

- MySQL winter vacation self-study 2022 11 (9)

- ABP vNext microservice architecture detailed tutorial - distributed permission framework (Part 1)

- ActiveReportsJS 3.1 VS ActiveReportsJS 3.0

- [安洵杯 2019]不是文件上传

- [software reverse - basic knowledge] analysis method, assembly instruction architecture

- Difference between MotionEvent. getRawX and MotionEvent. getX

- Google Chrome CSS will not update unless the cache is cleared - Google Chrome CSS doesn't update unless clear cache

猜你喜欢

v-if VS v-show 2.0

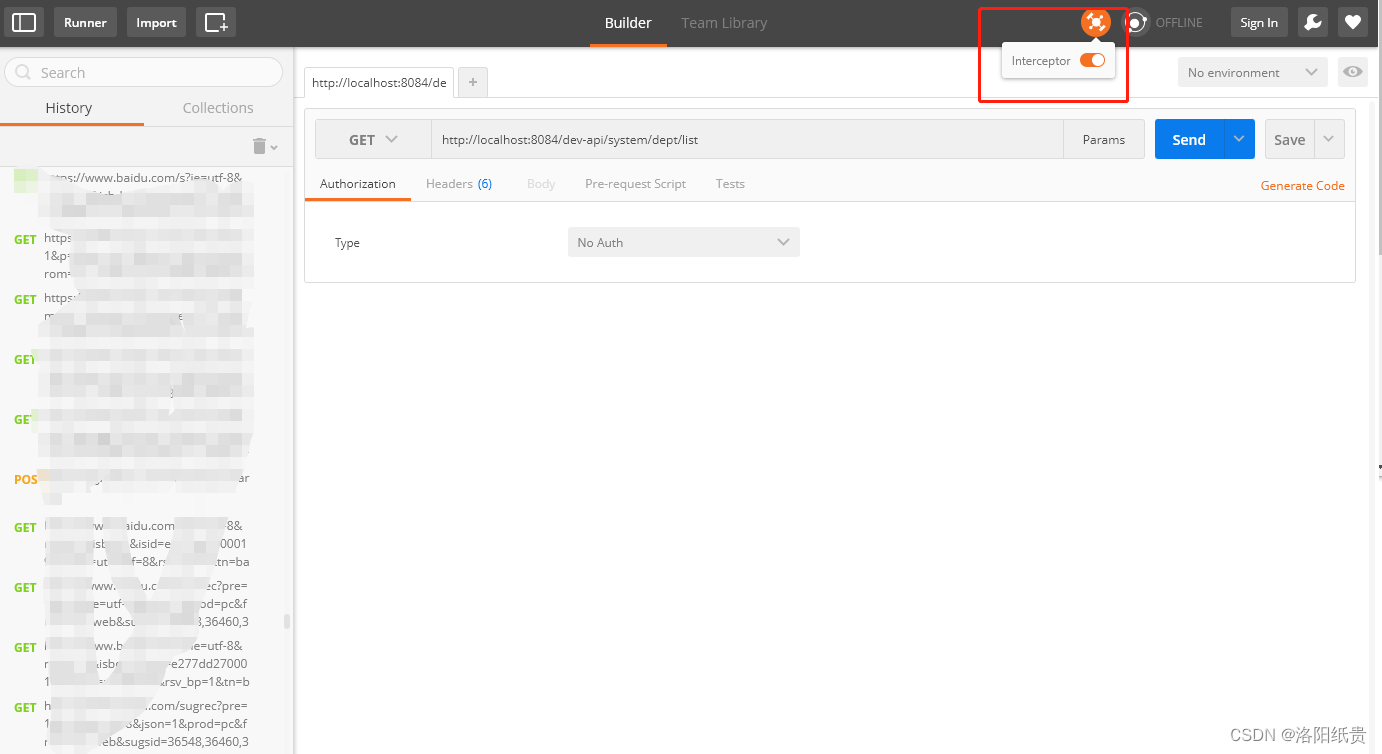

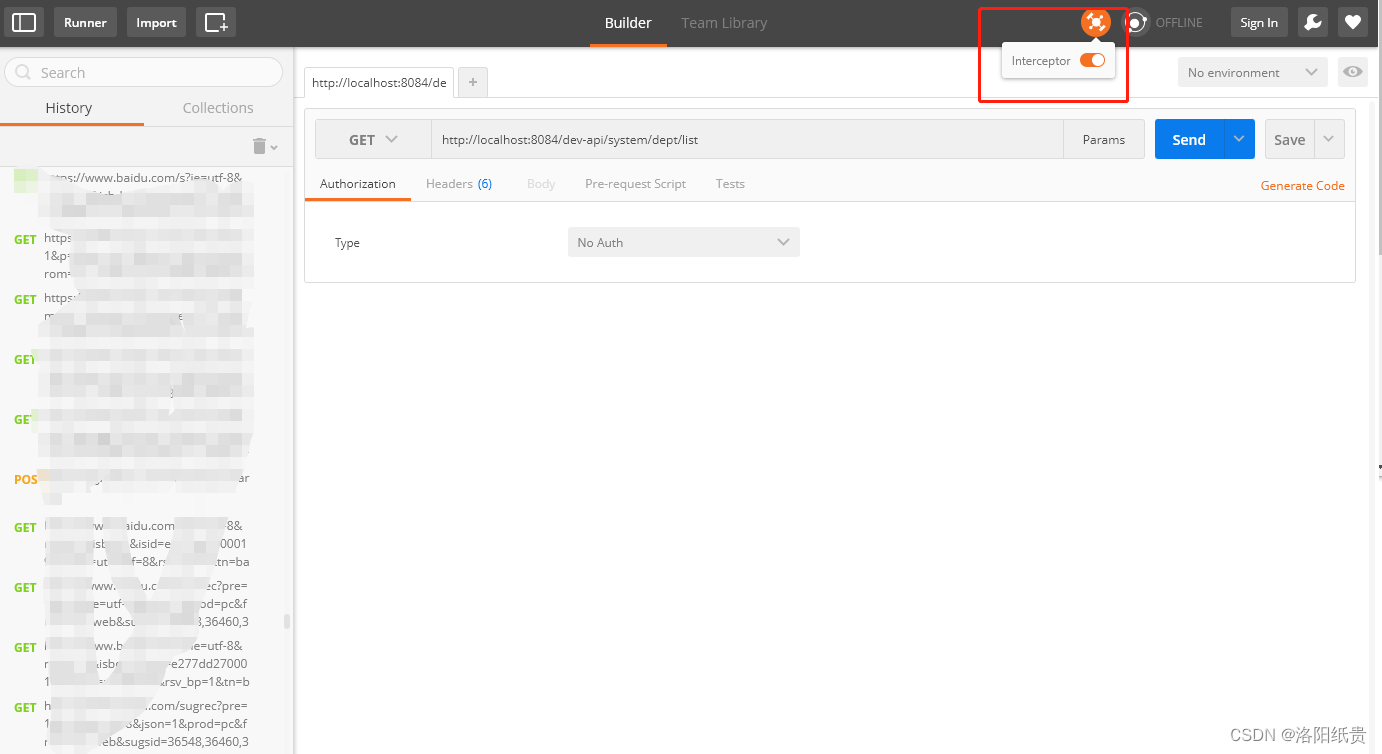

Installation of postman and postman interceptor

Some enterprise interview questions of unity interview

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

![[untitled]](/img/e0/52467a7d7604c1ab77038c25608811.png)

[untitled]

postman和postman interceptor的安装

![[groovy] loop control (number injection function implements loop | times function | upto function | downto function | step function | closure can be written outside as the final parameter)](/img/45/6cb796364efe16d54819ac10fb7d05.jpg)

[groovy] loop control (number injection function implements loop | times function | upto function | downto function | step function | closure can be written outside as the final parameter)

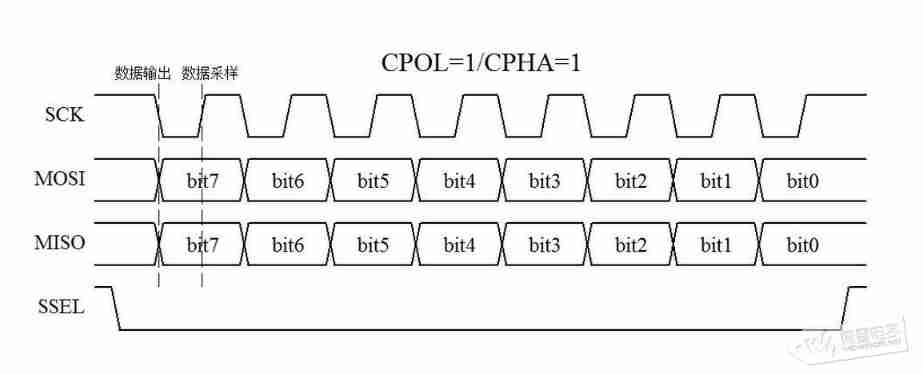

SPI and IIC communication protocol

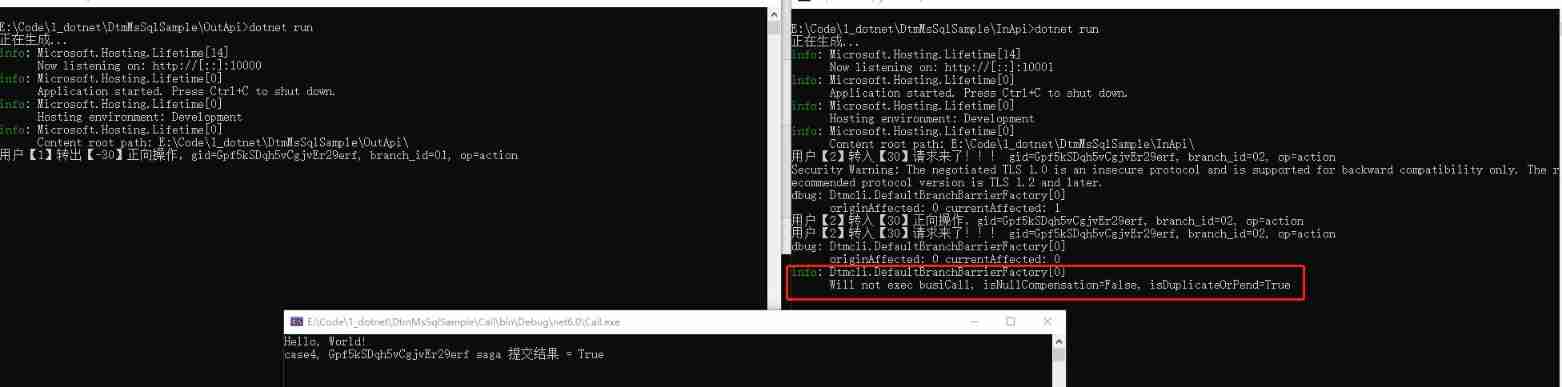

Talk about the SQL server version of DTM sub transaction barrier function

Redis source code analysis: redis cluster

随机推荐

How to make the listbox scroll automatically when adding a new item- How can I have a ListBox auto-scroll when a new item is added?

Why do some programmers change careers before they are 30?

Mongodb common commands

How to learn to get the embedding matrix e # yyds dry goods inventory #

Une question est de savoir si Flink SQL CDC peut définir le parallélisme. Si le parallélisme est supérieur à 1, il y aura un problème d'ordre?

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

Daily question 2 12

Technology sharing swift defense programming

Difference between MotionEvent. getRawX and MotionEvent. getX

Operation flow of UE4 DMX and grandma2 onpc 3.1.2.5

001 chip test

Redis6-01nosql database

【软件逆向-基础知识】分析方法、汇编指令体系结构

Three line by line explanations of the source code of anchor free series network yolox (a total of ten articles, which are guaranteed to be explained line by line. After reading it, you can change the

ICSI213/IECE213 Data Structures

speed or tempo in classical music

[an Xun cup 2019] not file upload

grandMA2 onPC 3.1.2.5的DMX参数摸索

[web Audit - source code disclosure] obtain source code methods and use tools

Leetcode42. connect rainwater