
FBO and RBO disappeared in WebGPU

2022-07-05 02:53:00 【u012804784】


Contents

- 1 FBO and RBO in WebGL
  - 1.1 Framebuffer object (FramebufferObject)
  - 1.2 The actual storage behind color and depth-stencil attachments
  - 1.3 Methods related to FBO/RBO/WebGLTexture

The OpenGL family has left graphics developers a large body of accumulated technique. It includes many "buffers", such as the familiar vertex buffer object (VertexBufferObject, VBO) and framebuffer object (FramebufferObject, FBO).

WebGPU is built on the three modern graphics APIs (Vulkan, Metal and Direct3D 12). After switching to it, these classic buffer objects have "vanished" from the API. In fact, their functions have been redistributed more sensibly across new APIs.

This article is about FBO and RBO, the two objects usually used for off-screen rendering. It explains why these two APIs are gone in WebGPU and what replaces them.

1 FBO and RBO in WebGL

WebGL is essentially a drawing API. By the time gl.drawArrays is issued, we must already have decided where the data will be drawn.

WebGL lets drawArrays draw to one of two places: the canvas or a framebuffer object. As many sources explain, the canvas has a default framebuffer. If you do not explicitly bind a framebuffer object you created (or you bind null), drawing goes to the canvas's framebuffer.

In other words, once you use gl.bindFramebuffer() to bind a framebuffer object you created, drawing no longer goes to the canvas.

This article covers HTMLCanvasElement only, not OffscreenCanvas.

1.1 Framebuffer object (FramebufferObject)

An FBO is easy to create. The FBO itself mostly acts as a manager that keeps track of what is attached to it. The real work is done by the two kinds of attachments it manages:

- Color attachments (1 in WebGL 1; in WebGL 2, COLOR_ATTACHMENT0 to COLOR_ATTACHMENT15 are defined, and the actual limit, gl.MAX_COLOR_ATTACHMENTS, is at least 4)
- Depth-stencil attachment (depth only, stencil only, or both)

MRT (Multiple Render Targets) is the technique of writing output to several color attachments at once. WebGL 1.0 only supports it through an extension, obtained with gl.getExtension('WEBGL_draw_buffers'). WebGL 2.0 supports it natively, which is why the number of color attachments differs between the two.
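The difference can be checked at runtime. The sketch below relies on several assumptions:

- The helper name maxColorAttachments is hypothetical.
- A context that exposes a drawBuffers() method is treated as WebGL 2.
- Otherwise, the WEBGL_draw_buffers extension is tried.
- If neither is available, plain WebGL 1 is assumed, with its single COLOR_ATTACHMENT0.

```javascript
// Hypothetical helper: how many color attachments can an FBO use on this context?
function maxColorAttachments(gl) {
  // WebGL 2 contexts have drawBuffers() natively and report the limit directly
  if (typeof gl.drawBuffers === 'function') {
    return gl.getParameter(gl.MAX_COLOR_ATTACHMENTS)
  }
  // WebGL 1: MRT is only available through the WEBGL_draw_buffers extension
  const ext = gl.getExtension('WEBGL_draw_buffers')
  if (ext) {
    return gl.getParameter(ext.MAX_COLOR_ATTACHMENTS_WEBGL)
  }
  // Plain WebGL 1: only COLOR_ATTACHMENT0 exists
  return 1
}
```

In a browser this would be called as maxColorAttachments(canvas.getContext('webgl2')).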

Both kinds of attachments are set with the following APIs:

```javascript
// Use a texture as color attachment 0
gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, color0Texture, 0)
// ...or use an RBO as color attachment 0
gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.RENDERBUFFER, color0Rbo)

// Use a texture as a depth-only attachment (needs WebGL 2, or WEBGL_depth_texture in WebGL 1)
gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTexture, 0)
// Use an RBO as the combined depth-stencil attachment
gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_STENCIL_ATTACHMENT, gl.RENDERBUFFER, depthStencilRbo)
```

When using MRT, gl.COLOR_ATTACHMENT0, gl.COLOR_ATTACHMENT1 and so on are just numbers. You can therefore index a color attachment with a computed property name, or use the number directly:

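```javascript
console.log(gl.COLOR_ATTACHMENT0) // 36064
// 36065 on a WebGL 2 context; WebGL 1 exposes COLOR_ATTACHMENT1_WEBGL on the extension instead
console.log(gl.COLOR_ATTACHMENT1)

let i = 1
console.log(gl[`COLOR_ATTACHMENT${i}`]) // 36065
console.log(gl.COLOR_ATTACHMENT0 + i)   // 36065, because the enums are consecutive
```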
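Because the enums are consecutive, a small helper can turn an attachment index into its GLenum. It can also build the array that WebGL 2's gl.drawBuffers() (or ext.drawBuffersWEBGL() in WebGL 1) expects for an MRT pass. This is a sketch: both function names are hypothetical, and the default cap of 16 mirrors the highest enum, COLOR_ATTACHMENT15. The real limit still comes from gl.MAX_COLOR_ATTACHMENTS.

```javascript
// COLOR_ATTACHMENT0 is 0x8CE0 (36064); COLOR_ATTACHMENT1..15 follow consecutively
const COLOR_ATTACHMENT0 = 0x8ce0

// Hypothetical helper: map an attachment index to its GLenum, with a bounds check
function colorAttachmentEnum(index, maxAttachments = 16) {
  if (!Number.isInteger(index) || index < 0 || index >= maxAttachments) {
    throw new RangeError(`color attachment index ${index} out of range [0, ${maxAttachments})`)
  }
  return COLOR_ATTACHMENT0 + index
}

// Hypothetical helper: the list passed to gl.drawBuffers() for n attachments
function drawBufferList(n, maxAttachments = 16) {
  return Array.from({ length: n }, (_, i) => colorAttachmentEnum(i, maxAttachments))
}
```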

1.2 The actual storage behind color and depth-stencil attachments

Color and depth-stencil attachments need an explicitly specified data carrier. If WebGL draws into a non-canvas FBO, you have to specify exactly where the drawing ends up.

As the example code in section 1.1 shows, each attachment can use one of the following two as its actual data container:

- Renderbuffer object (WebGLRenderbuffer)
- Texture object (WebGLTexture)

One experienced developer pointed out in a blog post that renderbuffer objects perform slightly better than texture objects. Which one to choose still depends on the specific case.

On most modern GPUs and drivers, this performance difference matters little.

Simply put, use an RBO if the off-screen result does not need to be sampled as a texture in a later draw. Only texture objects can be passed to shaders.

The differences between RBOs and textures as attachments are not large. Since this article mainly compares WebGL and WebGPU, I will not go into them further.

1.3 Methods related to FBO/RBO/WebGLTexture

- gl.framebufferTexture2D(gl.FRAMEBUFFER, attachment, textarget, texture, level): attach a WebGLTexture to an attachment point of the FBO
- gl.framebufferRenderbuffer(gl.FRAMEBUFFER, attachment, gl.RENDERBUFFER, renderbuffer): attach an RBO to an attachment point of the FBO
- gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer): make the framebuffer object the current render target (null means the canvas)
- gl.bindRenderbuffer(gl.RENDERBUFFER, renderbuffer): make the RBO the currently bound renderbuffer
- gl.renderbufferStorage(gl.RENDERBUFFER, internalformat, width, height): set the data format, width and height of the currently bound RBO

The three creation methods are:

- gl.createFramebuffer()
- gl.createRenderbuffer()
- gl.createTexture()

For review, here are the functions for texture parameters, texture binding and data upload:

- gl.texParameteri(target, pname, param): set a parameter of the texture bound to target
- gl.bindTexture(target, texture): bind the texture to target on the currently active texture unit (selected with gl.activeTexture)
- gl.texImage2D(...): upload data to the currently bound texture; the last parameter is the data (passing null only allocates storage)
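As a recap, the methods listed above can be combined into one helper. Each attachment has a role:

- The color attachment is a texture, so a later pass can sample it.
- The depth attachment is a renderbuffer, because it is never sampled.

This is a minimal sketch, not the author's code. The name createOffscreenTarget, the RGBA/UNSIGNED_BYTE color format and the DEPTH_COMPONENT16 depth format are assumptions; those formats are valid in both WebGL 1 and WebGL 2.

```javascript
// Hypothetical helper: texture color attachment + renderbuffer depth attachment
function createOffscreenTarget(gl, width, height) {
  // Color carrier: a texture with allocated storage but no initial data
  const colorTexture = gl.createTexture()
  gl.bindTexture(gl.TEXTURE_2D, colorTexture)
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0, gl.RGBA, gl.UNSIGNED_BYTE, null)
  // No mipmaps: LINEAR keeps the texture complete when it is sampled later
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR)
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE)
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE)

  // Depth carrier: a renderbuffer, since depth is not sampled afterwards
  const depthRbo = gl.createRenderbuffer()
  gl.bindRenderbuffer(gl.RENDERBUFFER, depthRbo)
  gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT16, width, height)

  // The FBO only records which carrier sits on which attachment point
  const fbo = gl.createFramebuffer()
  gl.bindFramebuffer(gl.FRAMEBUFFER, fbo)
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, colorTexture, 0)
  gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.RENDERBUFFER, depthRbo)

  const status = gl.checkFramebufferStatus(gl.FRAMEBUFFER)
  // Restore the default (canvas) framebuffer before returning
  gl.bindFramebuffer(gl.FRAMEBUFFER, null)
  if (status !== gl.FRAMEBUFFER_COMPLETE) {
    throw new Error(`framebuffer incomplete: 0x${status.toString(16)}`)
  }
  return { fbo, colorTexture, depthRbo }
}
```

To render into the target, bind target.fbo with gl.bindFramebuffer and set gl.viewport(0, 0, width, height) before drawing. Afterwards, bind target.colorTexture as an ordinary texture in the next pass.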

2 Equivalent concepts in WebGPU

WebGPU no longer has APIs like WebGLFramebuffer and WebGLRenderbuffer. In other words, you will not find a GPUFramebuffer or GPURenderbuffer type.

However, gl.drawArrays still has an equivalent: the draw call issued by a render pass encoder (call it renderPassEncoder), namely renderPassEncoder.draw.

2.1 The render pass encoder (GPURenderPassEncoder) takes over the FBO's role

Where does WebGPU draw? WebGPU is not tightly coupled to the canvas element, so you must always specify the drawing target explicitly.

From the concepts of programmable passes and command encoding, we know how WebGPU tells the GPU "what to do":

- It sends command buffers (Command Buffer), which are created by a command encoder (GPUCommandEncoder).
- A command buffer consists of several passes.
- The passes related to drawing are called render passes.

A render pass is recorded by a render pass encoder, and each render pass specifies where its rendering results go. This is the counterpart of deciding where WebGL draws. In code, it is the colorAttachments property of the GPURenderPassDescriptor object passed when creating renderPassEncoder:

```javascript
const renderPassEncoder = commandEncoder.beginRenderPass({
  // An array: several color attachments can be set
  colorAttachments: [
    {
      view: textureView,
      // Early drafts used a single loadValue field; the spec now uses clearValue + loadOp
      clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },
      loadOp: 'clear',
      storeOp: 'store',
    },
  ],
})
```

Note that colorAttachments[0].view is textureView, a GPUTextureView. In other words, this render pass draws into a texture.

Usually, if you do not need off-screen drawing or MSAA, you draw to the canvas. After configuring the canvas context, you get the texture it provides as follows:

```javascript
const context = canvas.getContext('webgpu')
// Older drafts used context.getPreferredFormat(gpuAdapter) and passed `size` to configure()
const presentationFormat = navigator.gpu.getPreferredCanvasFormat()
// Drawing-buffer size: client size of the canvas × device pixel ratio, as a two-element array
canvas.width = Math.floor(canvas.clientWidth * window.devicePixelRatio)
canvas.height = Math.floor(canvas.clientHeight * window.devicePixelRatio)
const presentationSize = [canvas.width, canvas.height]

context.configure({
  device: gpuDevice,
  format: presentationFormat,
})

const textureView = context.getCurrentTexture().createView()
```

The snippet above connects the render pass to the on-screen canvas. The canvas provides a GPUTexture, and that texture's GPUTextureView is attached to the render pass.
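The drawing-buffer size used in the canvas setup (client size × device pixel ratio) can be computed with a small helper. This is a sketch under the following assumptions:

- The name canvasPixelSize is hypothetical.
- Rounding down is a design choice.
- The result is clamped to at least 1 pixel, because a zero-sized texture is invalid.
- The upper bound defaults to 8192, WebGPU's default maxTextureDimension2D limit.

```javascript
// Hypothetical helper: pixel size of a canvas's drawing buffer on a high-DPI screen
function canvasPixelSize(clientWidth, clientHeight, dpr, maxDim = 8192) {
  // Clamp to [1, maxDim]: zero-sized textures are invalid, oversized ones exceed the limit
  const toPixels = (cssPx) => Math.min(maxDim, Math.max(1, Math.floor(cssPx * dpr)))
  return [toPixels(clientWidth), toPixels(clientHeight)]
}
```

In a browser, you would call canvasPixelSize(canvas.clientWidth, canvas.clientHeight, window.devicePixelRatio, device.limits.maxTextureDimension2D). Assign the two results to canvas.width and canvas.height before calling getCurrentTexture().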

Strictly speaking, it is the render pass that takes over part of the FBO's role, since a render pass also issues other commands, such as setting pipelines. There is no GPURenderPass type in the API, so GPURenderPassEncoder has to stand in for it.

2.2 Multiple render targets

With multiple render targets, the fragment shader outputs several results (it returns a struct). Several color attachments are then needed to receive the output.

In this case, you configure the targets property of the render pipeline's fragment stage.

The example below covers creating the textures, creating the pipeline and encoding the commands. Two textures serve as the storage for the color attachments:

```javascript
// 1. Create render target textures 1 and 2 and their texture views
const renderTargetTexture1 = device.createTexture({
  size: [/* omitted */],
  usage: GPUTextureUsage.RENDER_ATTACHMENT | GPUTextureUsage.TEXTURE_BINDING,
  format: 'rgba32float',
})
const renderTargetTexture2 = device.createTexture({
  size: [/* omitted */],
  usage: GPUTextureUsage.RENDER_ATTACHMENT | GPUTextureUsage.TEXTURE_BINDING,
  format: 'bgra8unorm',
})
const renderTargetTextureView1 = renderTargetTexture1.createView()
const renderTargetTextureView2 = renderTargetTexture2.createView()

// 2. Create the pipeline; the fragment stage lists one output format per target
const pipeline = device.createRenderPipeline({
  fragment: {
    targets: [
      { format: 'rgba32float' }, // location 0
      { format: 'bgra8unorm' },  // location 1
    ],
    // ... other properties omitted
  },
  // ... other stages omitted
})

// 3. Begin a render pass that writes into both textures
const renderPassEncoder = commandEncoder.beginRenderPass({
  colorAttachments: [
    {
      view: renderTargetTextureView1,
      // ... other parameters
    },
    {
      view: renderTargetTextureView2,
      // ... other parameters
    },
  ],
})
```

The two color attachments now use the two texture views as render targets. The fragment stage of the pipeline declares the formats of the two targets in the same order.
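Because the pipeline's fragment.targets and the render pass's colorAttachments are matched by index, a mismatch is an easy mistake to make. The hypothetical check below compares the two lists. It makes two assumptions:

- It works on formats rather than views, because a GPUTextureView does not expose its format. You would pass the format of the texture each view was created from.
- It ignores null (sparse) entries, which WebGPU also allows.

```javascript
// Hypothetical validation: pipeline target formats vs. the color attachments' formats
function checkColorTargets(pipelineTargets, attachmentFormats) {
  if (pipelineTargets.length !== attachmentFormats.length) {
    return [`expected ${pipelineTargets.length} color attachments, got ${attachmentFormats.length}`]
  }
  const errors = []
  pipelineTargets.forEach((target, i) => {
    if (target.format !== attachmentFormats[i]) {
      errors.push(`location ${i}: pipeline expects ${target.format}, attachment is ${attachmentFormats[i]}`)
    }
  })
  return errors
}
```

With the example above, checkColorTargets(targets, [renderTargetTexture1.format, renderTargetTexture2.format]) should return an empty array.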

The fragment shader code can therefore declare an output struct:

```wgsl
// Current WGSL syntax: struct members end with ',' and the stage attribute is @fragment
struct FragmentStageOutput {
  @location(0) something: vec4<f32>,
  @location(1) another: vec4<f32>,
}

@fragment
fn main(/* inputs omitted */) -> FragmentStageOutput {
  var output: FragmentStageOutput;
  // Two arbitrary values with no particular meaning
  output.something = vec4<f32>(0.156);
  output.another = vec4<f32>(0.67);

  return output;
}
```

The f32 four-component vector something at location 0 is written to a texel of renderTargetTexture1. The f32 four-component vector another at location 1 is written to a texel of renderTargetTexture2.

Note the type difference at location 1:

- In the pipeline's fragment stage, the target format for renderTargetTexture2 is 'bgra8unorm'.
- In the shader, the struct member at location 1 is a vec4<f32>.

WebGPU bridges the two by mapping the f32 output in [0.0, 1.0] onto the 8-bit integer range [0, 255].
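The conversion can be sketched on the CPU. The sketch assumes the usual unorm rule: clamp to [0, 1], scale by 255 and round to the nearest integer. Math.round breaks ties upward, while the GPU's exact tie-breaking is left to the implementation. Both function names are hypothetical.

```javascript
// Sketch of the unorm8 store conversion: clamp to [0, 1], scale by 255, round
function f32ToUnorm8(x) {
  const clamped = Math.min(1, Math.max(0, x))
  return Math.round(clamped * 255)
}

// Reverse mapping, applied when such a texel is sampled back as f32
function unorm8ToF32(byte) {
  return byte / 255
}
```

Under this rule, the 0.67 written to location 1 in the shader above ends up as 171 in each channel of renderTargetTexture2. Values outside [0, 1] are clamped before scaling.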

In fact, without multiple outputs (no MRT), most WebGPU fragment shaders return a single vec4<f32>. A very common canvas texture format is bgra8unorm. As a result, the mapping from [0.0, 1.0] to [0, 255], by scaling by 255 and rounding, happens all the time.
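One consequence of bgra8unorm shows up when such a texture's bytes reach JavaScript. The shader writes (r, g, b, a), but each texel is stored in memory as B, G, R, A. The hypothetical helper below swaps the bytes back into RGBA order, which is what ImageData expects, for example. It assumes tightly packed input, 4 bytes per texel.

```javascript
// Hypothetical helper: convert tightly packed BGRA8 bytes to RGBA8
function bgraToRgba(bytes) {
  const out = new Uint8ClampedArray(bytes.length)
  for (let i = 0; i < bytes.length; i += 4) {
    out[i] = bytes[i + 2]     // R was stored third
    out[i + 1] = bytes[i + 1] // G stays in place
    out[i + 2] = bytes[i]     // B was stored first
    out[i + 3] = bytes[i + 3] // A stays in place
  }
  return out
}
```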

2.3 Depth and stencil attachments

GPURenderPassDescriptor also accepts a depthStencilAttachment, which serves as the depth-stencil attachment. For example:

```javascript
const renderPassDescriptor = {
  // Color attachments omitted
  depthStencilAttachment: {
    view: depthTexture.createView(),
    depthClearValue: 1.0,
    depthLoadOp: 'clear',
    depthStoreOp: 'store',
    // Only if the texture format has a stencil aspect (e.g. 'depth24plus-stencil8'):
    // stencilClearValue: 0,
    // stencilLoadOp: 'clear',
    // stencilStoreOp: 'store',
  },
}
```

As with a single color attachment, you need a texture whose view goes into view. Note that a depth or stencil attachment must use a depth or stencil texture format.
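Which load/store fields are allowed depends on the format's aspects. WebGPU rejects depth operations for a format without depth, and stencil operations for a format without stencil. The hypothetical builder below sets only the fields the format supports. Its format table is an assumption: it lists the core depth/stencil formats plus the optional 'depth32float-stencil8'.

```javascript
// Aspects of the depth/stencil formats this sketch knows about
const DEPTH_STENCIL_FORMATS = {
  'stencil8': { depth: false, stencil: true },
  'depth16unorm': { depth: true, stencil: false },
  'depth24plus': { depth: true, stencil: false },
  'depth24plus-stencil8': { depth: true, stencil: true },
  'depth32float': { depth: true, stencil: false },
  'depth32float-stencil8': { depth: true, stencil: true },
}

// Hypothetical builder: only set the ops the format's aspects allow
function makeDepthStencilAttachment(view, format) {
  const aspects = DEPTH_STENCIL_FORMATS[format]
  if (!aspects) throw new Error(`${format} is not a depth/stencil format`)
  const attachment = { view }
  if (aspects.depth) {
    attachment.depthClearValue = 1.0
    attachment.depthLoadOp = 'clear'
    attachment.depthStoreOp = 'store'
  }
  if (aspects.stencil) {
    attachment.stencilClearValue = 0
    attachment.stencilLoadOp = 'clear'
    attachment.stencilStoreOp = 'store'
  }
  return attachment
}
```

A call would look like makeDepthStencilAttachment(depthTexture.createView(), depthTexture.format).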

Some depth/stencil texture formats belong to optional device features. To use one of them, you must add the corresponding feature when requesting the device object. For example, when this article was written, the "depth24unorm-stencil8" format could only be used after requesting the "depth24unorm-stencil8" feature. That feature has since been removed from the specification; "depth32float-stencil8" works the same way.
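Collecting the features needed for a set of texture formats could look like the sketch below. The helper name is hypothetical, and only a few well-known cases are listed:

- the depth32float-stencil8 format
- the BC, ETC2/EAC and ASTC compressed families

Extend it for other formats as needed.

```javascript
// Hypothetical helper: optional WebGPU features needed for the given texture formats
function requiredFeaturesFor(formats) {
  const features = new Set()
  for (const format of formats) {
    if (format === 'depth32float-stencil8') features.add('depth32float-stencil8')
    else if (format.startsWith('bc')) features.add('texture-compression-bc')
    else if (format.startsWith('etc2') || format.startsWith('eac')) features.add('texture-compression-etc2')
    else if (format.startsWith('astc')) features.add('texture-compression-astc')
  }
  return [...features]
}
```

In a browser, check each entry with adapter.features.has(feature) first, then call adapter.requestDevice({ requiredFeatures: requiredFeaturesFor(formats) }).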

For depth/stencil testing, you also need to configure the depthStencil state of the render pipeline, for example:

```javascript
const renderPipeline = device.createRenderPipeline({
  // ...
  depthStencil: {
    depthWriteEnabled: true,
    depthCompare: 'less',
    format: 'depth24plus',
  },
})
```

2.4 Notes on using non-canvas textures for both kinds of attachments

The depth/stencil texture formats and device features above are not the only requirements. A non-canvas texture used as an attachment must also include RENDER_ATTACHMENT in its usage.

```javascript
const depthTexture = device.createTexture({
  size: presentationSize,
  format: 'depth24plus',
  usage: GPUTextureUsage.RENDER_ATTACHMENT,
})

const renderColorTexture = device.createTexture({
  size: presentationSize,
  format: presentationFormat,
  usage: GPUTextureUsage.RENDER_ATTACHMENT | GPUTextureUsage.COPY_SRC,
})
```
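Usage is a bitmask, so a missing flag can be found with a bitwise AND. The sketch below keeps its own copy of the GPUTextureUsage bit values from the specification so that it runs outside a browser. The helper name missingUsages is hypothetical.

```javascript
// Local mirror of the spec's GPUTextureUsage bit values
const TextureUsage = {
  COPY_SRC: 0x01,
  COPY_DST: 0x02,
  TEXTURE_BINDING: 0x04,
  STORAGE_BINDING: 0x08,
  RENDER_ATTACHMENT: 0x10,
}

// Hypothetical check: which named usages are absent from a usage bitmask
function missingUsages(usage, requiredNames) {
  return requiredNames.filter((name) => (usage & TextureUsage[name]) === 0)
}
```

Compare the two textures above against the usages needed to draw into a texture and then read it back (RENDER_ATTACHMENT and COPY_SRC):

- renderColorTexture's usage passes the check.
- depthTexture's usage would report COPY_SRC as missing.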

3 Reading data

3.1 Reading pixel values from an FBO

Reading pixel values from an FBO means reading the color data of a color attachment into a TypedArray. To read the result of the current FBO (or of the canvas's framebuffer), just call gl.readPixels:

```javascript
//#region Create the FBO and make it the render target
const fb = gl.createFramebuffer()
gl.bindFramebuffer(gl.FRAMEBUFFER, fb)
//#endregion

//#region Create the off-screen container (a texture) and bind it for setup
const texture = gl.createTexture()
gl.bindTexture(gl.TEXTURE_2D, texture)
// Allocate storage without initial data; an attachment without storage leaves the FBO incomplete
gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, imageWidth, imageHeight, 0, gl.RGBA, gl.UNSIGNED_BYTE, null)
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR)
// If it will not be sampled by a shader later, an RBO can be used instead
//#endregion

//#region Attach the texture to color attachment 0
gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, texture, 0)
//#endregion

// ... gl.viewport(0, 0, imageWidth, imageHeight), then render with gl.drawArrays

//#region Read into a TypedArray
const pixels = new Uint8Array(imageWidth * imageHeight * 4)
gl.readPixels(0, 0, imageWidth, imageHeight, gl.RGBA, gl.UNSIGNED_BYTE, pixels)
//#endregion
```

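gl.readPixels() reads pixel values from the currently bound framebuffer into a TypedArray. The source is the framebuffer's read buffer: color attachment 0 by default, and in WebGL 2 another attachment can be selected with gl.readBuffer. This works whether the carrier is a WebGLRenderbuffer or a WebGLTexture.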

The only thing to watch for: if you are writing an engine, the pixel-reading code must run after the draw calls (usually gl.drawArrays or gl.drawElements) have been issued. Otherwise you may not read the expected values.
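One more detail of gl.readPixels(): rows come back starting at the framebuffer's lower-left origin. Row 0 of pixels is therefore the bottom row of the image. When a top-to-bottom layout is needed (for example, for ImageData), the rows can be flipped. The helper name flipRowsY is hypothetical.

```javascript
// Hypothetical helper: reverse row order of tightly packed pixel data
function flipRowsY(pixels, width, height, bytesPerPixel = 4) {
  const rowBytes = width * bytesPerPixel
  const out = new Uint8Array(pixels.length)
  for (let y = 0; y < height; y++) {
    const src = y * rowBytes
    const dst = (height - 1 - y) * rowBytes
    out.set(pixels.subarray(src, src + rowBytes), dst)
  }
  return out
}
```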

3.2 Reading data from a GPUTexture in WebGPU

In WebGPU, reading a render target, that is, reading the pixels of a texture, is relatively simple:

1. Call the command encoder's copyTextureToBuffer method to copy the texture's data into a GPUBuffer.
2. Map the buffer with mapAsync.
3. Read the mapped range as an ArrayBuffer.

```javascript
//#region Create the texture associated with the color attachment
const colorAttachment0Texture = device.createTexture({ /* ... */ })
//#endregion

//#region Create a buffer to hold the texture data (4 bytes per texel, e.g. rgba8unorm)
// copyTextureToBuffer requires bytesPerRow to be a multiple of 256, so rows may be padded
const bytesPerRow = Math.ceil((4 * textureWidth) / 256) * 256
const readPixelsResultBuffer = device.createBuffer({
  usage: GPUBufferUsage.COPY_DST | GPUBufferUsage.MAP_READ,
  size: bytesPerRow * textureHeight,
})
//#endregion

//#region Copy the GPUTexture into the GPUBuffer
const encoder = device.createCommandEncoder()
encoder.copyTextureToBuffer(
  { texture: colorAttachment0Texture },
  { buffer: readPixelsResultBuffer, bytesPerRow },
  [textureWidth, textureHeight],
)
device.queue.submit([encoder.finish()])
//#endregion

//#region Read the pixels
await readPixelsResultBuffer.mapAsync(GPUMapMode.READ)
// Copy the data out before unmapping; consecutive rows are bytesPerRow bytes apart
const pixels = new Uint8Array(readPixelsResultBuffer.getMappedRange()).slice()
readPixelsResultBuffer.unmap()
//#endregion
```

Pay extra attention to the usage flags when copying into a GPUBuffer for reading on the CPU side (that is, in JavaScript):

- The GPUBuffer's usage must include both COPY_DST and MAP_READ.
- The texture's usage must include COPY_SRC.
- A texture associated with a color attachment also needs RENDER_ATTACHMENT.
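Because of the 256-byte bytesPerRow alignment, the mapped data usually contains padding at the end of each row. The sketch below computes the aligned row size and strips the padding to get tightly packed pixels. The function names are hypothetical. By default it assumes 4 bytes per texel, as in rgba8unorm or bgra8unorm.

```javascript
// WebGPU requires bytesPerRow in texture-to-buffer copies to be a multiple of 256
const COPY_BYTES_PER_ROW_ALIGNMENT = 256

function alignedBytesPerRow(width, bytesPerPixel = 4) {
  const unpadded = width * bytesPerPixel
  return Math.ceil(unpadded / COPY_BYTES_PER_ROW_ALIGNMENT) * COPY_BYTES_PER_ROW_ALIGNMENT
}

// Remove per-row padding from mapped readback data
function stripRowPadding(padded, width, height, bytesPerPixel = 4) {
  const paddedRow = alignedBytesPerRow(width, bytesPerPixel)
  const tightRow = width * bytesPerPixel
  const out = new Uint8Array(tightRow * height)
  for (let y = 0; y < height; y++) {
    out.set(padded.subarray(y * paddedRow, y * paddedRow + tightRow), y * tightRow)
  }
  return out
}
```

For example, a 100-pixel-wide RGBA8 texture has 400 bytes of data per row, padded to 512. stripRowPadding(pixels, textureWidth, textureHeight) would turn the buffer read back above into textureWidth × textureHeight × 4 tightly packed bytes.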

4 Summary

From WebGL (that is, the OpenGL ES family) to WebGPU, off-screen rendering and multiple render targets have been upgraded in both interface and usage.

First, the RBO concept is gone; textures are used as drawing targets.

Second, the FBO is replaced by the render pass. GPURenderPassEncoder is now responsible for the FBO's two kinds of attachments.

Because the RBO concept is gone, the distinction between RTT (RenderToTexture) and RTR (RenderToRenderbuffer) no longer exists. Off-screen rendering remains, however. In WebGPU you can use several RenderPasses to produce multiple drawing results. A Texture, as the rendering carrier, can move freely through bind groups into the RenderPipelines of different RenderPasses.

Section 3 briefly covered how to read pixels (color values) from a GPU texture; the most common use of this is GPU picking. As for the legacy FBO concept, it now takes the form of off-screen rendering with RenderPass, most commonly used for visual effects.

边栏推荐

- PHP cli getting input from user and then dumping into variable possible?

- Learn game model 3D characters, come out to find a job?

- Three line by line explanations of the source code of anchor free series network yolox (a total of ten articles, which are guaranteed to be explained line by line. After reading it, you can change the

- Ask, does this ADB MySQL support sqlserver?

- d3js小记

- Azkaban actual combat

- Qrcode: generate QR code from text

- 丸子百度小程序详细配置教程,审核通过。

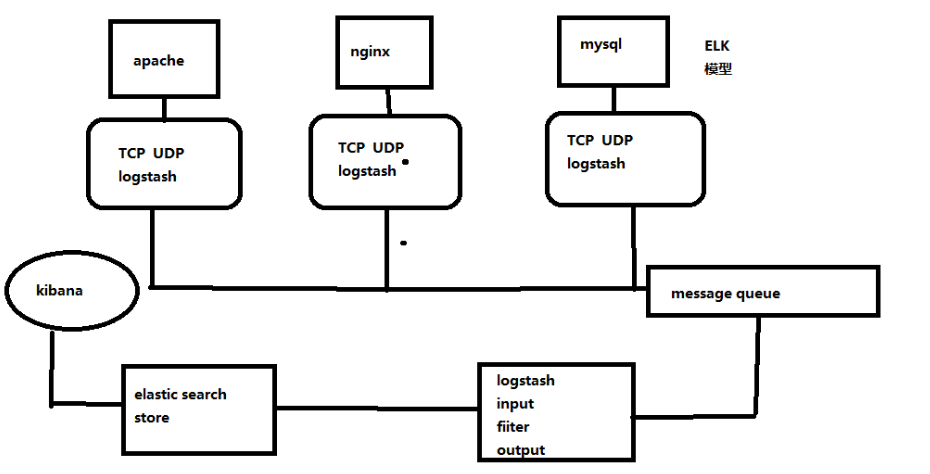

- Elk log analysis system

- [Yu Yue education] National Open University spring 2019 0505-22t basic nursing reference questions

猜你喜欢

el-select,el-option下拉选择框

Vb+access hotel service management system

ELK日志分析系统

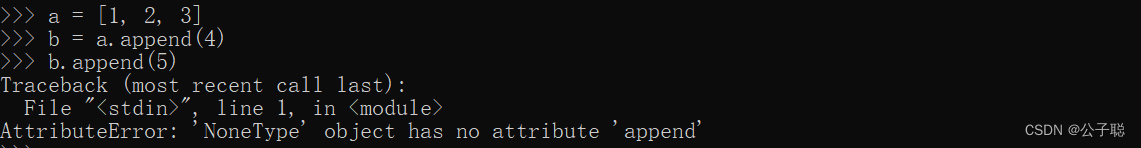

Problem solving: attributeerror: 'nonetype' object has no attribute 'append‘

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

看 TDengine 社区英雄线上发布会,听 TD Hero 聊开发者传奇故事

Asp+access campus network goods trading platform

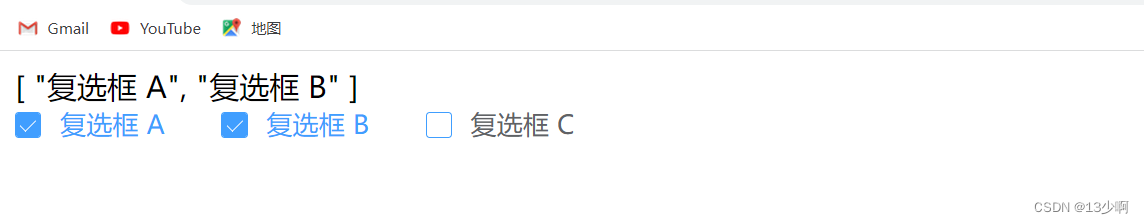

Single box check box

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

College Students' innovation project management system

随机推荐

Medusa installation and simple use

Acwing game 58 [End]

Azkaban实战

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

From task Run get return value - getting return value from task Run

[Yu Yue education] National Open University spring 2019 0505-22t basic nursing reference questions

Cut! 39 year old Ali P9, saved 150million

openresty ngx_ Lua variable operation

Why is this an undefined behavior- Why is this an undefined behavior?

d3js小记

There is a question about whether the parallelism can be set for Flink SQL CDC. If the parallelism is greater than 1, will there be a sequence problem?

Blue bridge - maximum common divisor and minimum common multiple

[200 opencv routines] 99 Modified alpha mean filter

Linux Installation redis

Master Fur

问下,这个ADB mysql支持sqlserver吗?

【LeetCode】501. Mode in binary search tree (2 wrong questions)

Character painting, I use characters to draw a Bing Dwen Dwen

Devtools的简单使用

端口,域名,协议。