
MinIO Distributed File Storage Cluster for Full-Stack Development

2022-07-06 09:22:00 【Heartsuit】

Background

Where does your project store uploaded files?

File storage in our project went through the following evolution:

- Static resource directory. At the time the front end and back end were not separated, so files were saved directly in the project's static resource directory. Before every deployment we had to back up that directory first, otherwise the files would be lost;

- A separate file storage directory on the server. For small projects with few files this approach is usually enough, and this phase lasted a year or two, until the single machine ran out of disk space. Obviously, this approach does not support horizontal scaling;

- Distributed file storage. Once we ran multi-instance clusters and needed high availability, we evaluated distributed file storage options: FastDFS, MinIO, and cloud services (object storage from Qiniu Cloud, Alibaba Cloud, and others). Because FastDFS is complicated to configure, we finally chose MinIO: it is easy to use and scalable.

Basic operations

According to MinIO, it is the world's leading pioneer in object storage: on standard hardware, read/write speeds reach up to 183 GB/s and 171 GB/s respectively. MinIO is used as primary storage for cloud-native applications, which need higher throughput and lower latency than traditional object storage provides. You can expand the namespace by adding more clusters and more racks until your goal is reached. MinIO also follows the architecture and build practices of cloud-native computing and incorporates the latest technologies and concepts of cloud computing.

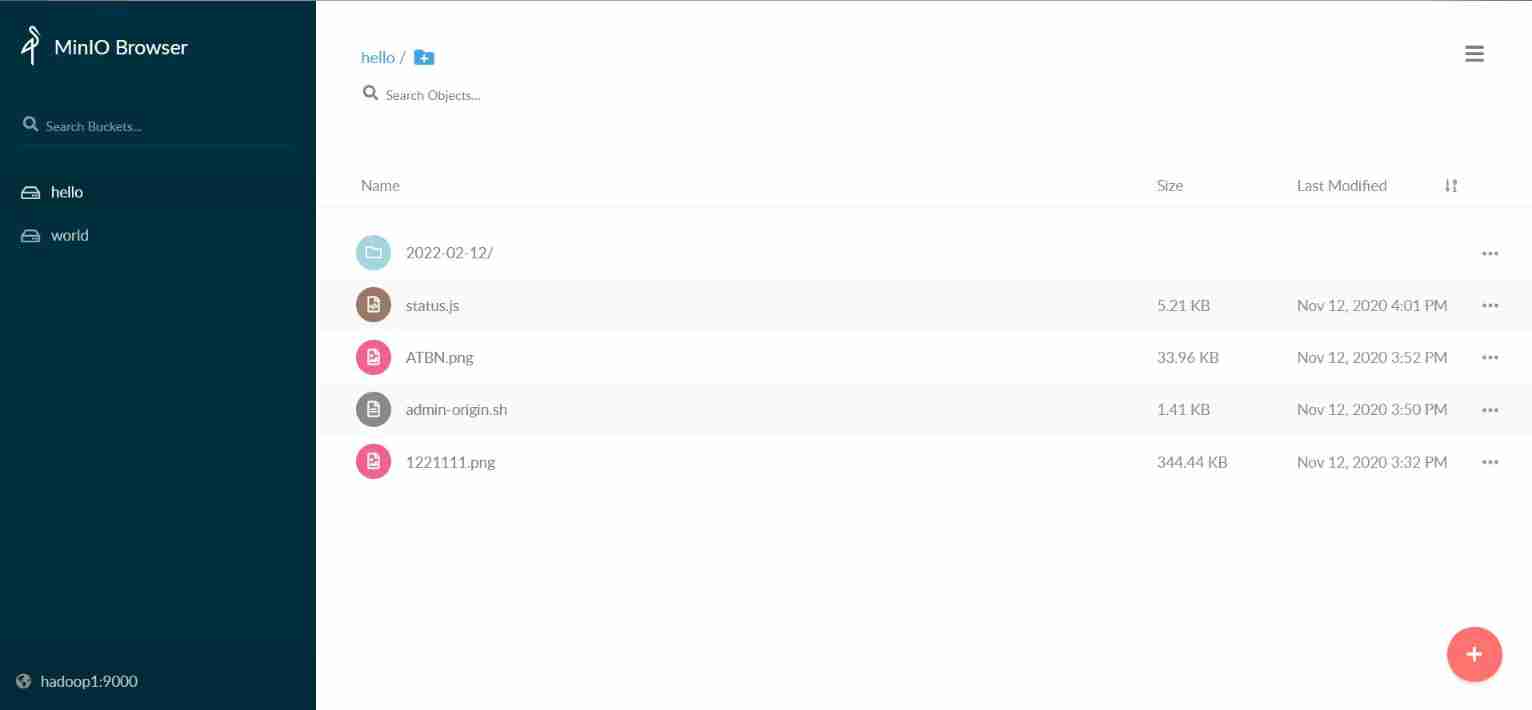

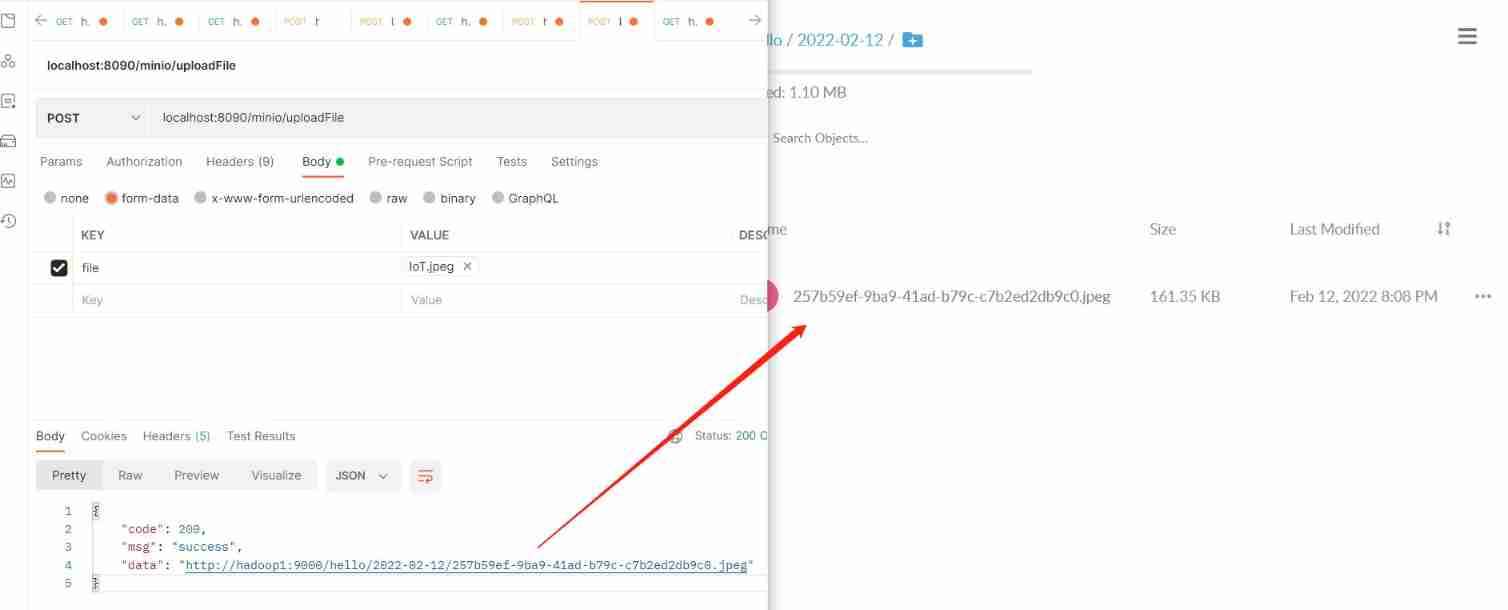

Object storage operations boil down to uploading, downloading, and deleting files, plus bucket operations, so they are only listed here; this post focuses on the high availability and scalability of a distributed MinIO cluster.

- Bucket management;

- Object management (upload, download, delete);

- Presigned object URLs;

- Bucket policy management;
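For the last item, bucket policy management: MinIO accepts S3-style JSON bucket policies. The snippet below is a minimal sketch in plain Java (no SDK call, so it runs on its own). `BucketPolicies`, `allowAnonymous`, and `publicReadOnly` are hypothetical names, not part of the MinIO SDK. The sketch builds a policy that lets anonymous clients download objects from one bucket; the resulting string is what you would then hand to the SDK's bucket-policy call.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper (not part of the MinIO SDK): builds S3-style bucket policy JSON.
final class BucketPolicies {
    private BucketPolicies() {}

    // Grants anonymous principals ("*") the given actions on every object in the bucket.
    // No JSON escaping: valid bucket names only contain a-z, 0-9, '.' and '-'.
    static String allowAnonymous(String bucket, List<String> actions) {
        String actionJson = actions.stream()
                .map(action -> "\"" + action + "\"")
                .collect(Collectors.joining(",", "[", "]"));
        return "{\"Version\":\"2012-10-17\",\"Statement\":[{"
                + "\"Effect\":\"Allow\","
                + "\"Principal\":{\"AWS\":[\"*\"]},"
                + "\"Action\":" + actionJson + ","
                + "\"Resource\":[\"arn:aws:s3:::" + bucket + "/*\"]"
                + "}]}";
    }

    // Public read-only: grants anonymous clients s3:GetObject and nothing else.
    static String publicReadOnly(String bucket) {
        return allowAnonymous(bucket, List.of("s3:GetObject"));
    }

    public static void main(String[] args) {
        System.out.println(publicReadOnly("photos"));
    }
}
```

Plain string concatenation keeps the sketch dependency-free; for policies built from arbitrary input, a JSON library would be the safer choice.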

Note: I use version 7.x for the experiments and demonstrations below. The MinIO admin console in the latest 8.x version looks different, but in our production testing all the interfaces are compatible.

```xml
<dependency>
    <groupId>io.minio</groupId>
    <artifactId>minio</artifactId>
    <version>7.1.4</version>
</dependency>
```

Running a single MinIO instance in Docker

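```bash
# Mount the host directory /opt/minio/data-single at /data, the path passed to "minio server"
docker run -p 9000:9000 \
  --name minio1 \
  -v /opt/minio/data-single:/data \
  -e "MINIO_ACCESS_KEY=AKIAIOSFODNN7EXAMPLE" \
  -e "MINIO_SECRET_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY" \
  minio/minio server /data
```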

Configuring a 4-instance MinIO cluster with Docker Compose

- References

Official documentation: https://docs.min.io/docs/deploy-minio-on-docker-compose.html

- Configuration

With docker-compose, we run four MinIO instances on a single host, with Nginx in front as a reverse proxy that load-balances requests and exposes one unified service.

Two configuration files are involved:

- docker-compose.yaml

- nginx.conf

- docker-compose.yaml

```yaml
version: '3.7'

# starts 4 docker containers running minio server instances.
# using nginx reverse proxy, load balancing, you can access
# it through port 9000.
services:
  minio1:
    image: minio/minio:RELEASE.2020-11-10T21-02-24Z
    volumes:
      - data1-1:/data1
      - data1-2:/data2
    expose:
      - "9000"
    environment:
      MINIO_ACCESS_KEY: minio
      MINIO_SECRET_KEY: minio123
    command: server http://minio{1...4}/data{1...2}
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  minio2:
    image: minio/minio:RELEASE.2020-11-10T21-02-24Z
    volumes:
      - data2-1:/data1
      - data2-2:/data2
    expose:
      - "9000"
    environment:
      MINIO_ACCESS_KEY: minio
      MINIO_SECRET_KEY: minio123
    command: server http://minio{1...4}/data{1...2}
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  minio3:
    image: minio/minio:RELEASE.2020-11-10T21-02-24Z
    volumes:
      - data3-1:/data1
      - data3-2:/data2
    expose:
      - "9000"
    environment:
      MINIO_ACCESS_KEY: minio
      MINIO_SECRET_KEY: minio123
    command: server http://minio{1...4}/data{1...2}
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  minio4:
    image: minio/minio:RELEASE.2020-11-10T21-02-24Z
    volumes:
      - data4-1:/data1
      - data4-2:/data2
    expose:
      - "9000"
    environment:
      MINIO_ACCESS_KEY: minio
      MINIO_SECRET_KEY: minio123
    command: server http://minio{1...4}/data{1...2}
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  nginx:
    image: nginx:1.19.2-alpine
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    ports:
      - "9000:9000"
    depends_on:
      - minio1
      - minio2
      - minio3
      - minio4

## By default this config uses default local driver,
## For custom volumes replace with volume driver configuration.
volumes:
  data1-1:
  data1-2:
  data2-1:
  data2-2:
  data3-1:
  data3-2:
  data4-1:
  data4-2:
```
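In `command: server http://minio{1...4}/data{1...2}`, each `{a...b}` is MinIO's ellipsis notation for a numeric range, so that one line describes 4 hosts × 2 drives = 8 drives, and every instance is started with the same list. As a rough illustration, here is a simplified Java sketch of that expansion. `EllipsisExpander` is a hypothetical helper, not MinIO's parser: it only handles numeric ranges, and the order in which MinIO itself lists the endpoints may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: expands numeric {a...b} ranges the way the compose command reads.
final class EllipsisExpander {
    private static final Pattern RANGE = Pattern.compile("\\{(\\d+)\\.\\.\\.(\\d+)\\}");

    private EllipsisExpander() {}

    // Expands every {a...b} range; the leftmost range varies slowest.
    static List<String> expand(String pattern) {
        Matcher m = RANGE.matcher(pattern);
        List<String> result = new ArrayList<>();
        if (!m.find()) {
            result.add(pattern); // no range left: the pattern is a single endpoint
            return result;
        }
        int from = Integer.parseInt(m.group(1));
        int to = Integer.parseInt(m.group(2));
        String prefix = pattern.substring(0, m.start());
        String suffix = pattern.substring(m.end());
        for (int i = from; i <= to; i++) {
            // Recurse so that any later ranges in the suffix are expanded as well.
            for (String rest : expand(suffix)) {
                result.add(prefix + i + rest);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        expand("http://minio{1...4}/data{1...2}").forEach(System.out::println);
    }
}
```

The expansion yields eight endpoints from http://minio1/data1 to http://minio4/data2, which is the m = 4 servers and n = 2 disks per server used in the availability discussion below.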

- nginx.conf

```nginx
user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    # include /etc/nginx/conf.d/*.conf;

    upstream minio {
        server minio1:9000;
        server minio2:9000;
        server minio3:9000;
        server minio4:9000;
    }

    server {
        listen 9000;
        listen [::]:9000;
        server_name localhost;

        # To allow special characters in headers
        ignore_invalid_headers off;
        # Allow any size file to be uploaded.
        # Set to a value such as 1000m; to restrict file size to a specific value
        client_max_body_size 0;
        # To disable buffering
        proxy_buffering off;

        location / {
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            proxy_connect_timeout 300;
            # Default is HTTP/1, keepalive is only enabled in HTTP/1.1
            proxy_http_version 1.1;
            proxy_set_header Connection "";
            chunked_transfer_encoding off;

            proxy_pass http://minio;
        }
    }
}
```
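The `upstream minio` block lists the four instances with no balancing method and no weights, so Nginx falls back to its default round-robin: successive requests go to minio1, minio2, minio3, minio4 in turn, and a server that fails is temporarily skipped (passive checks controlled by `max_fails`/`fail_timeout`). The Java class below is only a simplified, hypothetical model of that selection for illustration; `RoundRobin` and `pick` are made-up names, and Nginx's real implementation (smooth weighted round-robin with failure tracking) is more involved.

```java
import java.util.List;
import java.util.Set;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of round-robin upstream selection (not Nginx code).
final class RoundRobin {
    private final List<String> servers;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobin(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("no upstream servers");
        }
        this.servers = List.copyOf(servers);
    }

    // Returns the next server in turn, skipping those marked down; null if all are down.
    String pick(Set<String> down) {
        for (int attempt = 0; attempt < servers.size(); attempt++) {
            // floorMod keeps the index valid even after the counter overflows.
            int i = Math.floorMod(next.getAndIncrement(), servers.size());
            String server = servers.get(i);
            if (!down.contains(server)) {
                return server;
            }
        }
        return null;
    }
}
```

With minio3 marked down, a request that would have gone to it is passed on to minio4, which is how the proxy keeps serving while individual instances are stopped.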

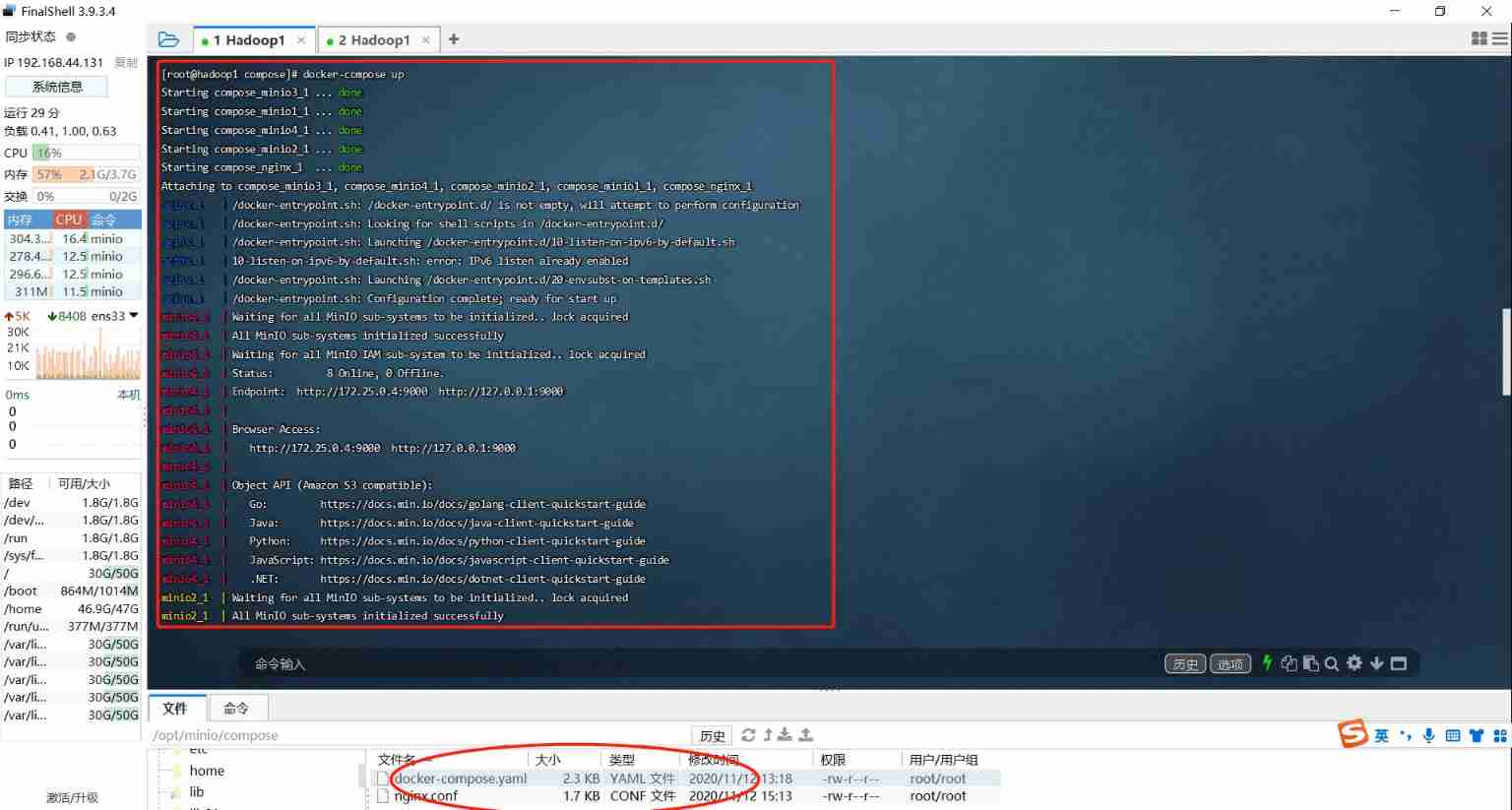

Running the 4-instance MinIO cluster with Docker Compose

Run in the foreground (logs are shown in the terminal): docker-compose up

Run in the background: docker-compose up -d

Stop the services: docker-compose down

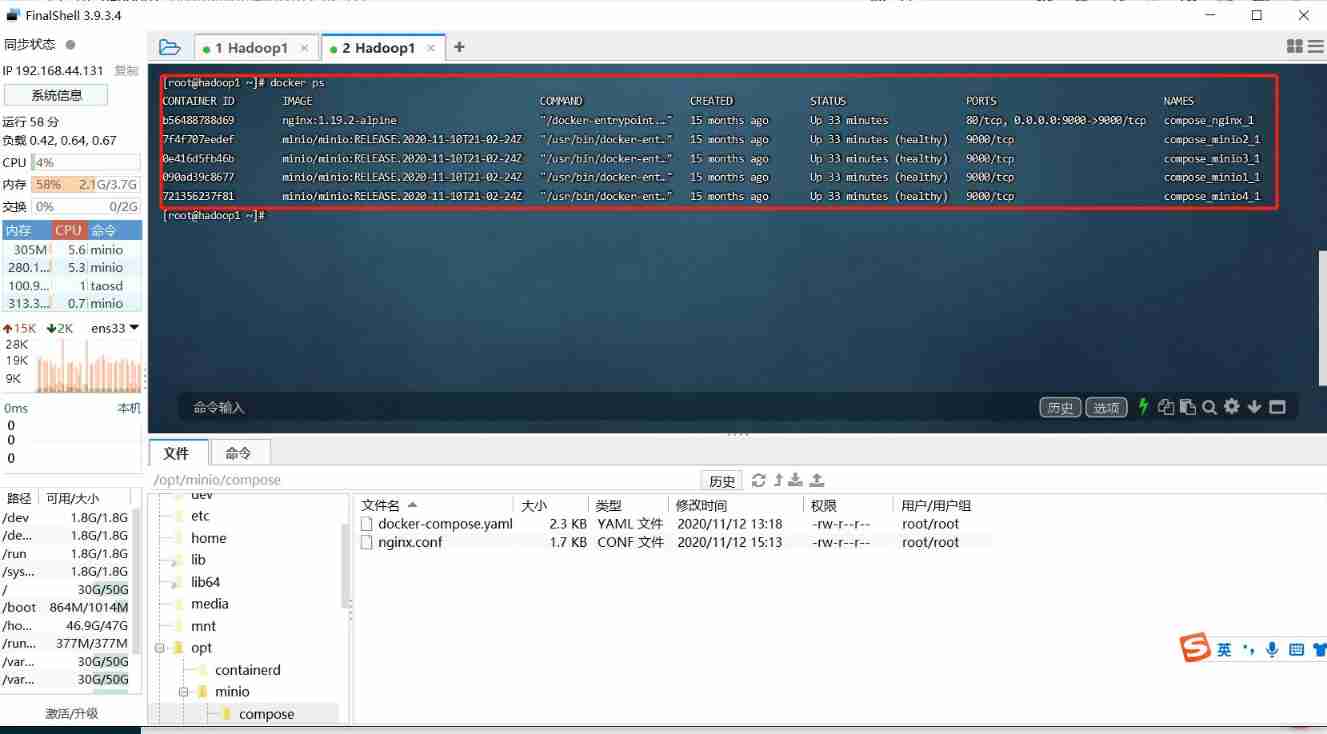

MinIO cluster high-availability test

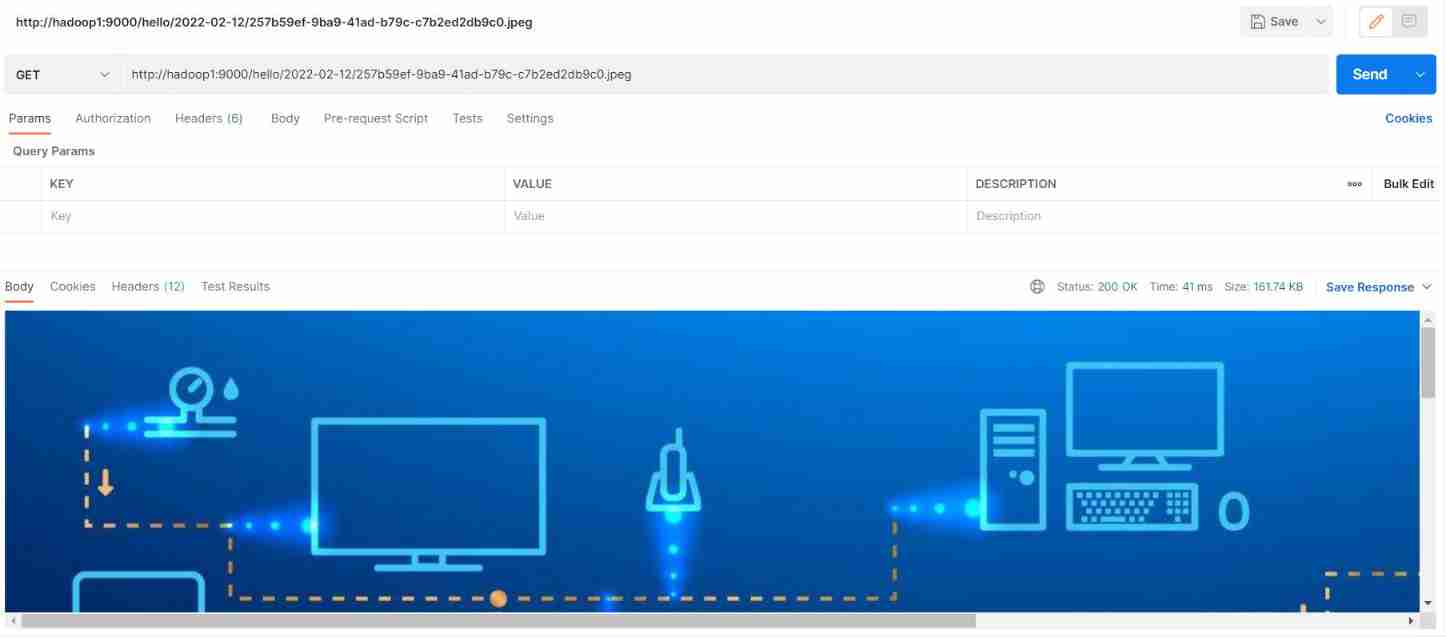

Once the cluster is running, we can observe its availability (whether files can still be downloaded and uploaded) by stopping different numbers of instances.

A stand-alone MinIO server would go down if the server hosting the disks goes offline. In contrast, a distributed MinIO setup with m servers and n disks will have your data safe as long as m/2 servers or m*n/2 or more disks are online.

For example, a 16-server distributed setup with 200 disks per node would continue serving files with up to 4 servers offline in the default configuration; that is, with around 800 disks down, MinIO would continue to read and write objects.

MinIO officially recommends building a cluster with at least four disks. How those disks are spread across machines depends on your needs, for example:

- One machine with four disks

- Two machines with two disks each

- Four machines with one disk each

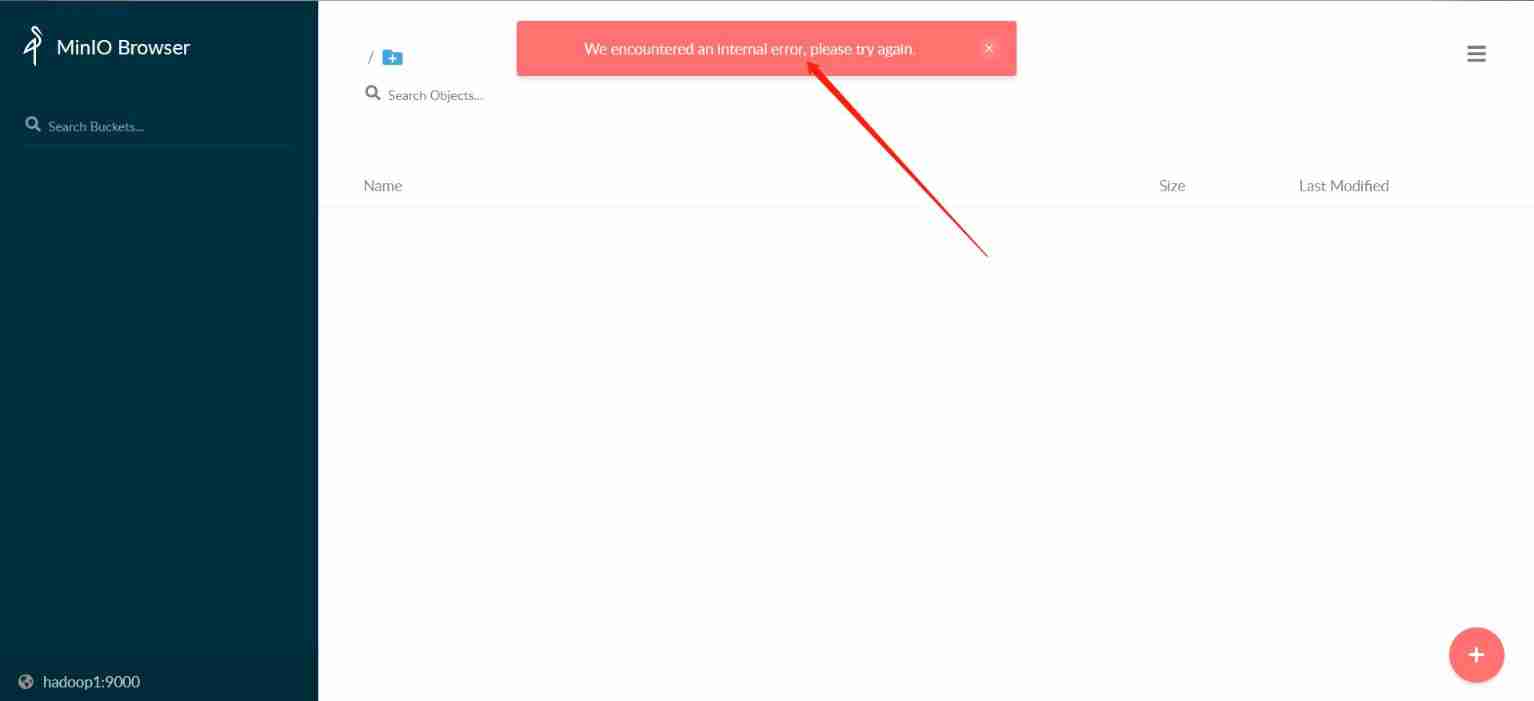

4 instances online

When all 4 instances of the 4-instance cluster are online, files can be both uploaded and downloaded.

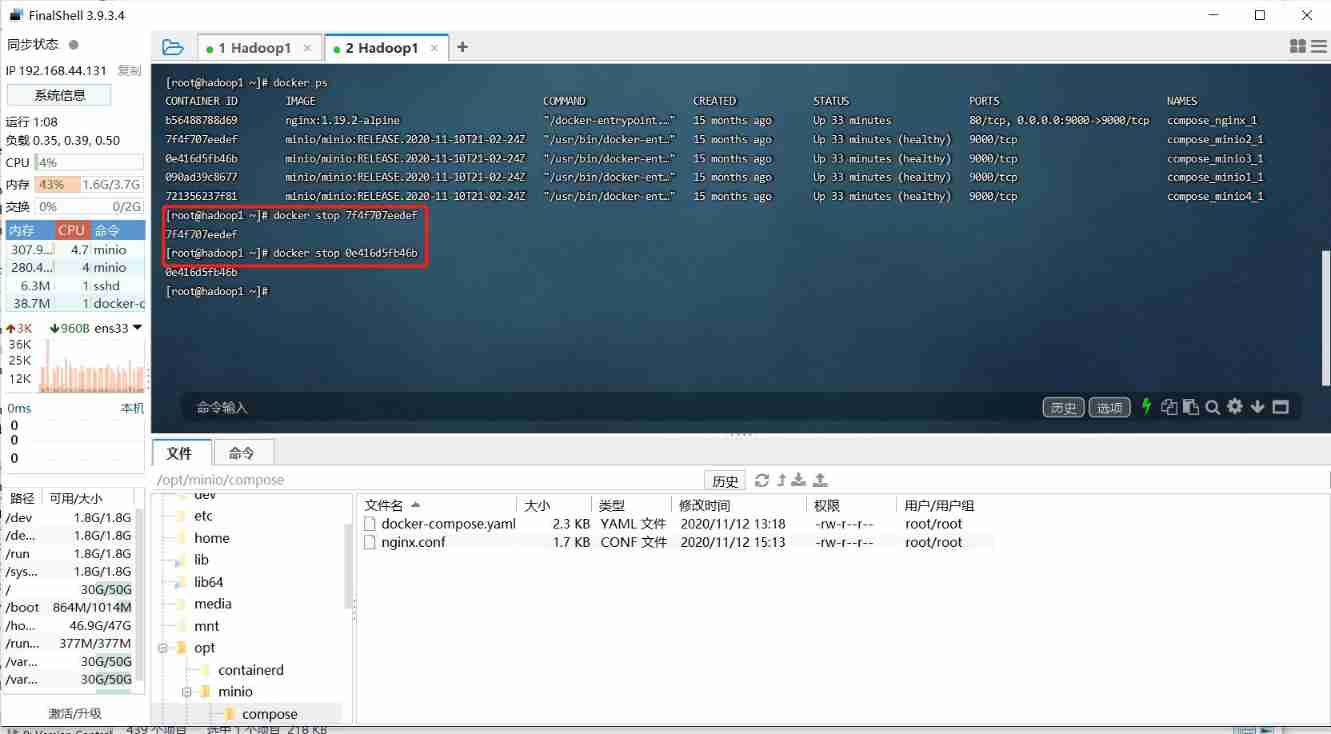

3 instances online

When 3 of the 4 instances are online, files can still be both uploaded and downloaded (that is, although one instance is down, the service keeps working normally: high availability).

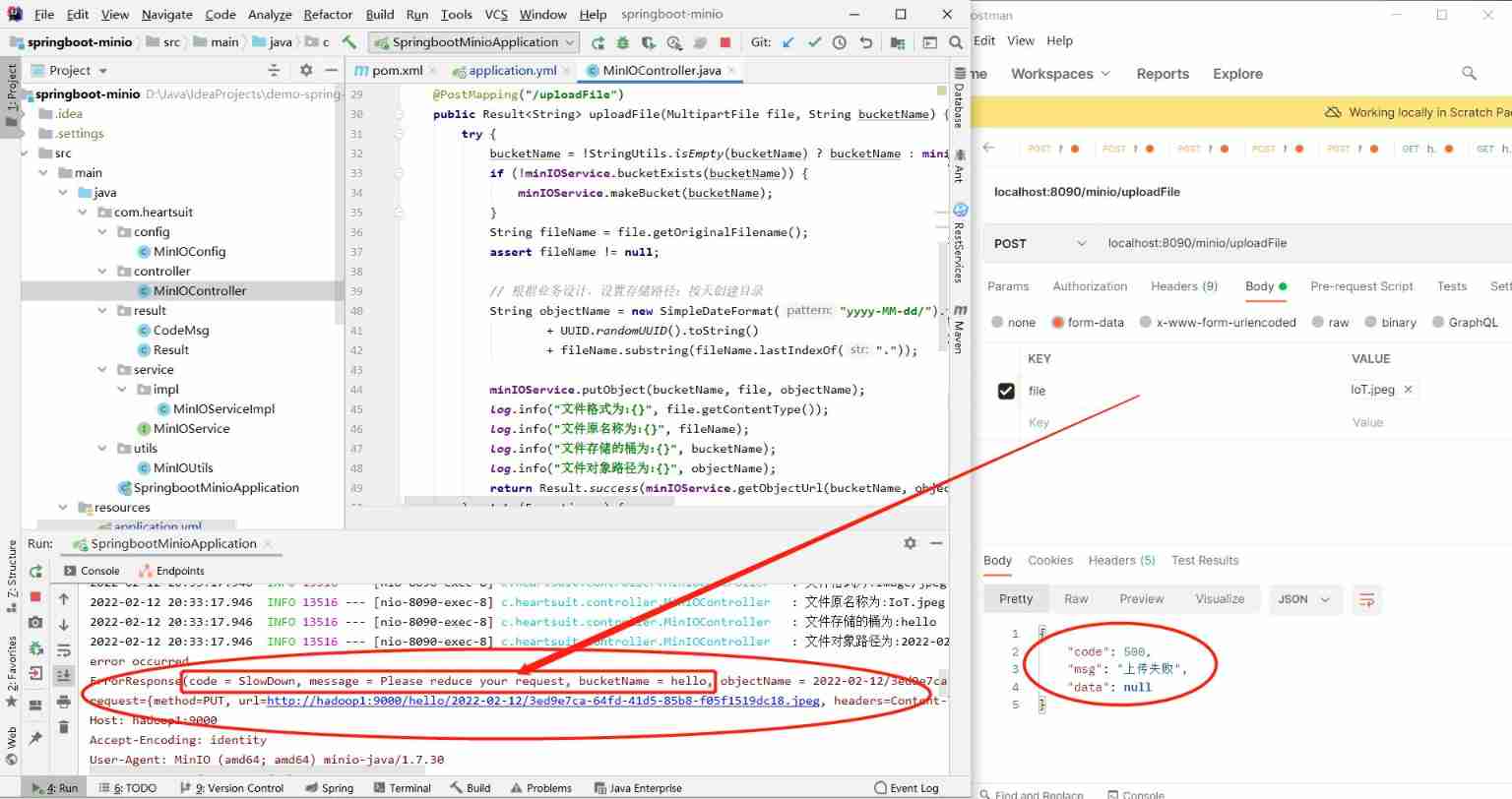

2 instances online

When only 2 of the 4 instances are online, files can be downloaded but not uploaded.

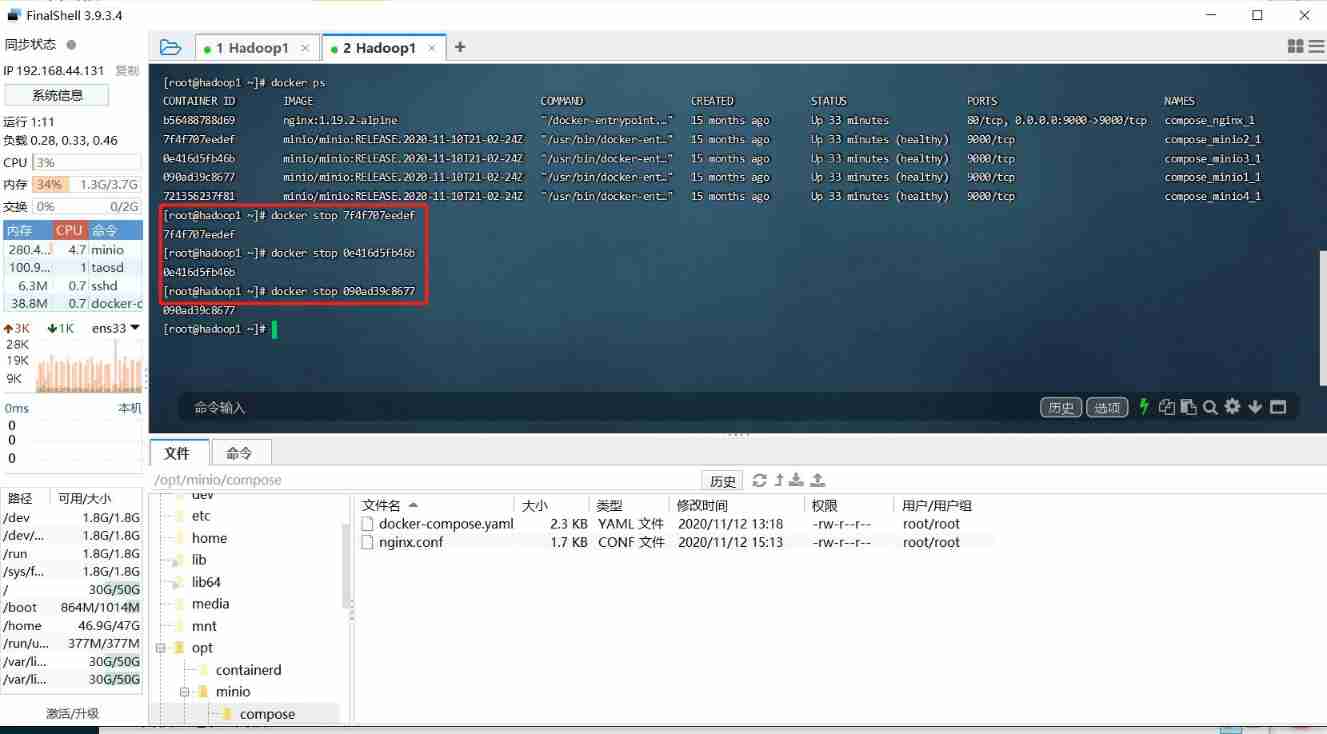

1 instance online

At this point, although 1 service instance is still online, the cluster can no longer provide normal upload or download service: files can be neither uploaded nor downloaded.

We can conclude: in a MinIO cluster of 4 instances, with 3 online both reads and writes work; with 2 online, reads are still guaranteed but writes fail; with only 1 instance left, neither reads nor writes work. This is consistent with the official documentation: reads need at least m/2 servers (or m*n/2 disks) online, and writes need more than m/2 servers (or more than m*n/2 disks) online.
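These observations follow from simple arithmetic. Here m = 4 servers with n = 2 disks each, so there are m*n = 8 disks. Assuming the default erasure-code parity of half the disks used by this MinIO release, reads need at least half of the disks online and writes need one more than half. The Java sketch below encodes that rule; `Quorum`, `canRead`, and `canWrite` are hypothetical names, and the model is a simplification that treats all 8 disks as one erasure set and ignores configurable parity.

```java
// Hypothetical quorum model for the 4-server x 2-disk cluster (assumes parity = disks / 2).
final class Quorum {
    private Quorum() {}

    // Reads succeed while at least half of the disks are online.
    static boolean canRead(int totalDisks, int onlineDisks) {
        return onlineDisks >= totalDisks / 2;
    }

    // Writes need one disk more than half, so two disjoint halves can never both accept writes.
    static boolean canWrite(int totalDisks, int onlineDisks) {
        return onlineDisks >= totalDisks / 2 + 1;
    }

    public static void main(String[] args) {
        int servers = 4;
        int disksPerServer = 2;
        int total = servers * disksPerServer;
        for (int online = servers; online >= 1; online--) {
            int disks = online * disksPerServer;
            System.out.printf("%d server(s) online: read=%b, write=%b%n",
                    online, canRead(total, disks), canWrite(total, disks));
        }
    }
}
```

Running `main` prints read=true, write=true for 4 and 3 servers online, read=true, write=false for 2, and read=false, write=false for 1, matching the test results above.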

Note: After stopping each service, check the MinIO logs to follow how the online status of each instance is monitored; this makes the behavior easier to understand.

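- View the service logs (this opens a shell in the container; 12935e3c6264 is the container ID in my environment, replace it with yours)

```bash
docker exec -it 12935e3c6264 bash
```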

Possible issues

- Spring Boot file upload error: org.springframework.web.multipart.MaxUploadSizeExceededException: Maximum upload size exceeded; nested exception is java.lang.IllegalStateException: org.apache.tomcat.util.http.fileupload.impl.FileSizeLimitExceededException: The field file exceeds its maximum permitted size of 1048576 bytes.

This happens because Spring Boot limits the size of uploaded files, with a default of 1 MB (1048576 bytes). When a user uploads a file larger than 1 MB, the error above is thrown. The limit can be raised with the following configuration:

```yaml
spring:
  servlet:
    multipart:
      maxFileSize: 10MB
      maxRequestSize: 30MB
```

- Running docker exec -it cd34c345960c /bin/bash fails to enter the container and reports: OCI runtime exec failed: exec failed: container_linux.go:349: starting container process caused “exec: “/”: permission denied”: unknown

Solution: change docker exec -it cd34c345960c /bin/bash to docker exec -it cd34c345960c sh or docker exec -it cd34c345960c /bin/sh.

If you have any questions or find any bugs, please feel free to contact me.

Your comments and suggestions are welcome!

边栏推荐

- Kratos战神微服务框架(二)

- 甘肃旅游产品预订增四倍:“绿马”走红,甘肃博物馆周边民宿一房难求

- I-BERT

- 在QWidget上实现窗口阻塞

- [OC-Foundation框架]-<字符串And日期与时间>

- Intel Distiller工具包-量化实现3

- Go redis initialization connection

- IDS cache preheating, avalanche, penetration

- Global and Chinese market of electronic tubes 2022-2028: Research Report on technology, participants, trends, market size and share

- The five basic data structures of redis are in-depth and application scenarios

猜你喜欢

What is MySQL? What is the learning path of MySQL

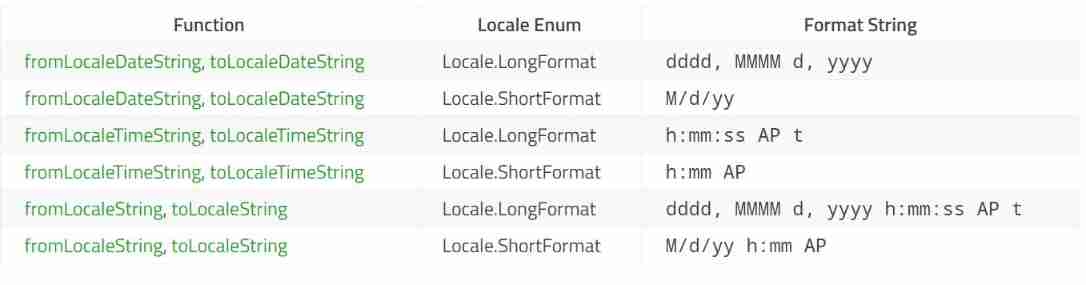

QML type: locale, date

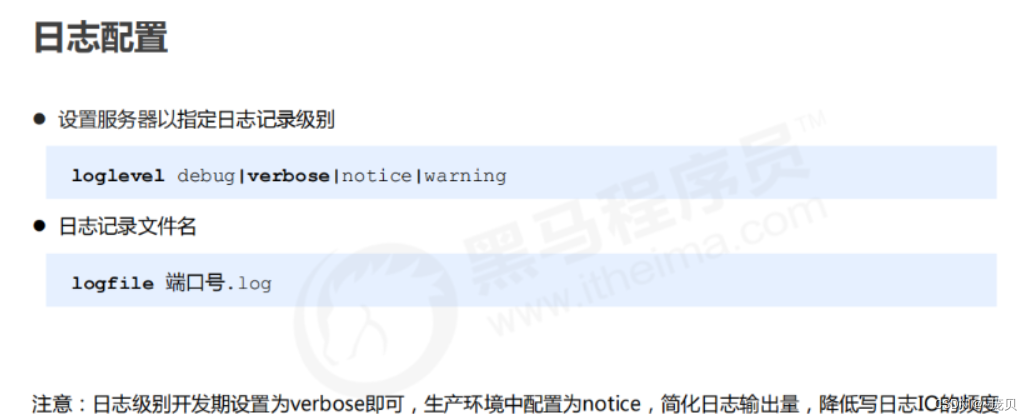

Redis core configuration

运维,放过监控-也放过自己吧

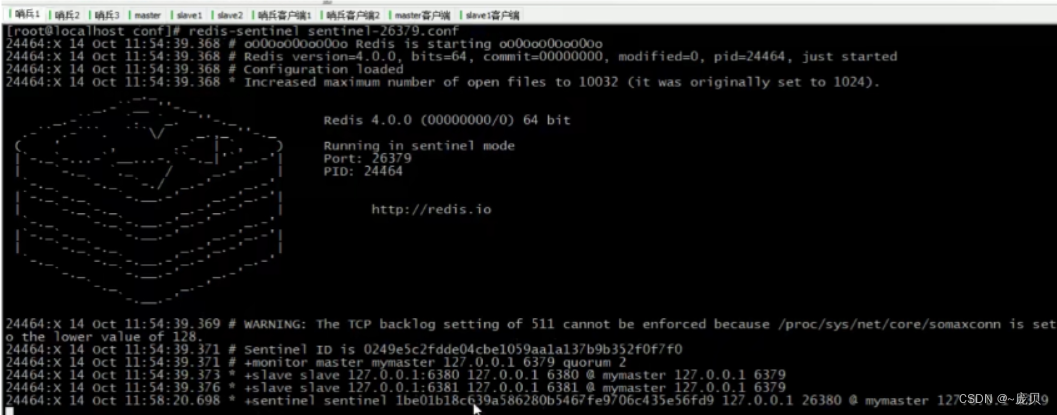

Sentinel mode of redis

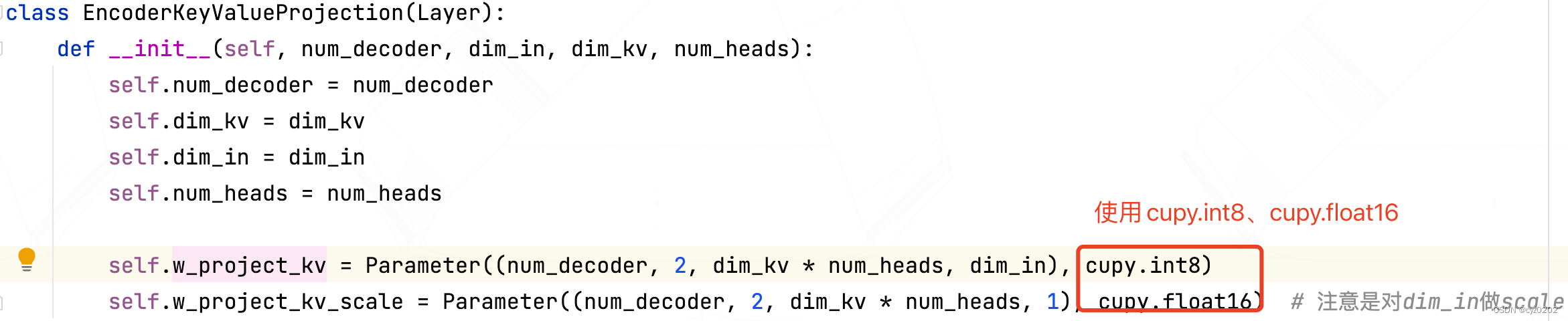

BMINF的后训练量化实现

![[OC]-<UI入门>--常用控件的学习](/img/2c/d317166e90e1efb142b11d4ed9acb7.png)

[OC]-<UI入门>--常用控件的学习

![[oc]- < getting started with UI> -- common controls - prompt dialog box and wait for the prompt (circle)](/img/af/a44c2845c254e4f48abde013344c2b.png)

[oc]- < getting started with UI> -- common controls - prompt dialog box and wait for the prompt (circle)

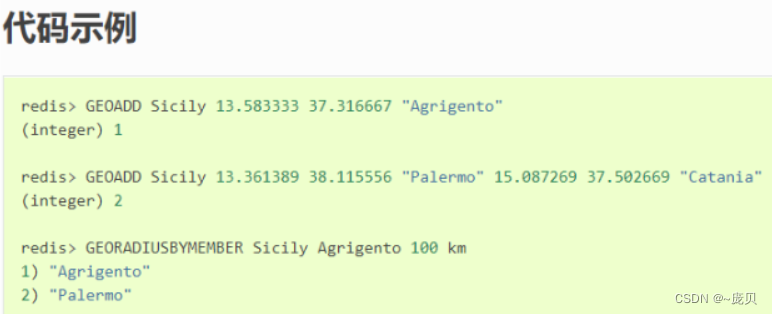

Redis之Geospatial

Pytest's collection use case rules and running specified use cases

随机推荐

requests的深入刨析及封装调用

Intel distiller Toolkit - Quantitative implementation 3

Reids之删除策略

BMINF的後訓練量化實現

What is an R-value reference and what is the difference between it and an l-value?

Redis' performance indicators and monitoring methods

LeetCode:387. The first unique character in the string

Blue Bridge Cup_ Single chip microcomputer_ PWM output

Chapter 1 :Application of Artificial intelligence in Drug Design:Opportunity and Challenges

Kratos ares microservice framework (III)

[oc]- < getting started with UI> -- common controls uibutton

运维,放过监控-也放过自己吧

[oc]- < getting started with UI> -- common controls - prompt dialog box and wait for the prompt (circle)

Kratos战神微服务框架(三)

不同的数据驱动代码执行相同的测试场景

Redis之哨兵模式

AcWing 2456. 记事本

go-redis之初始化連接

Pytest's collection use case rules and running specified use cases

An article takes you to understand the working principle of selenium in detail