
5. Overfitting, dropout, regularization

2022-07-08 01:02:00 【booze-J】


Overfitting

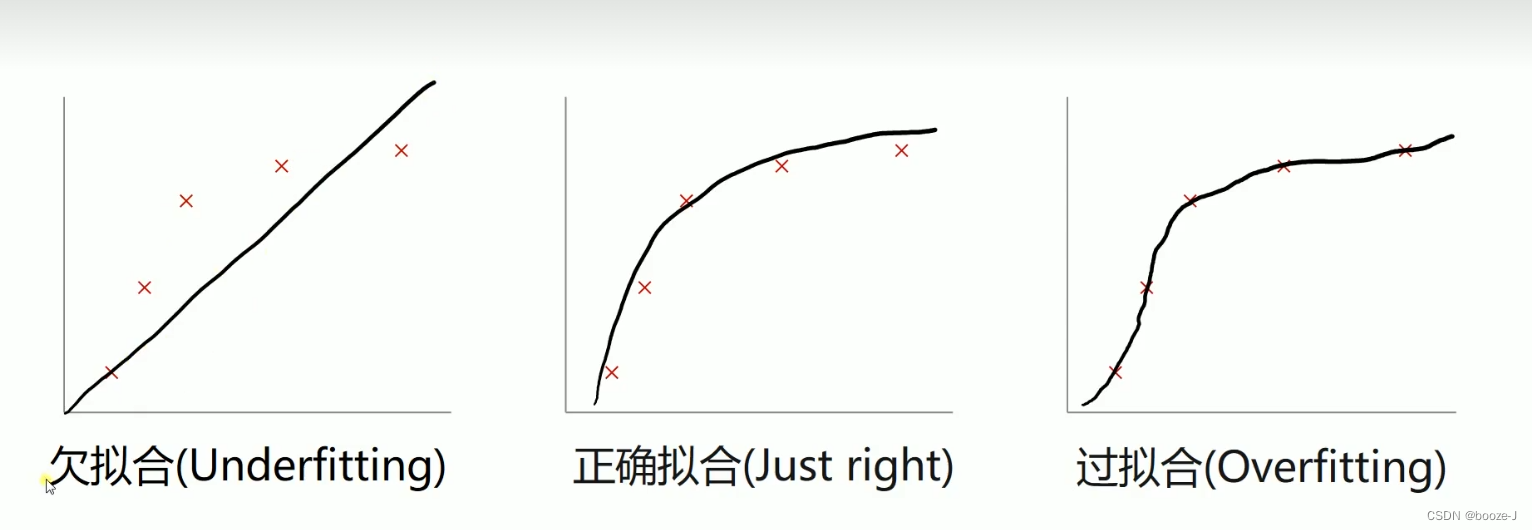

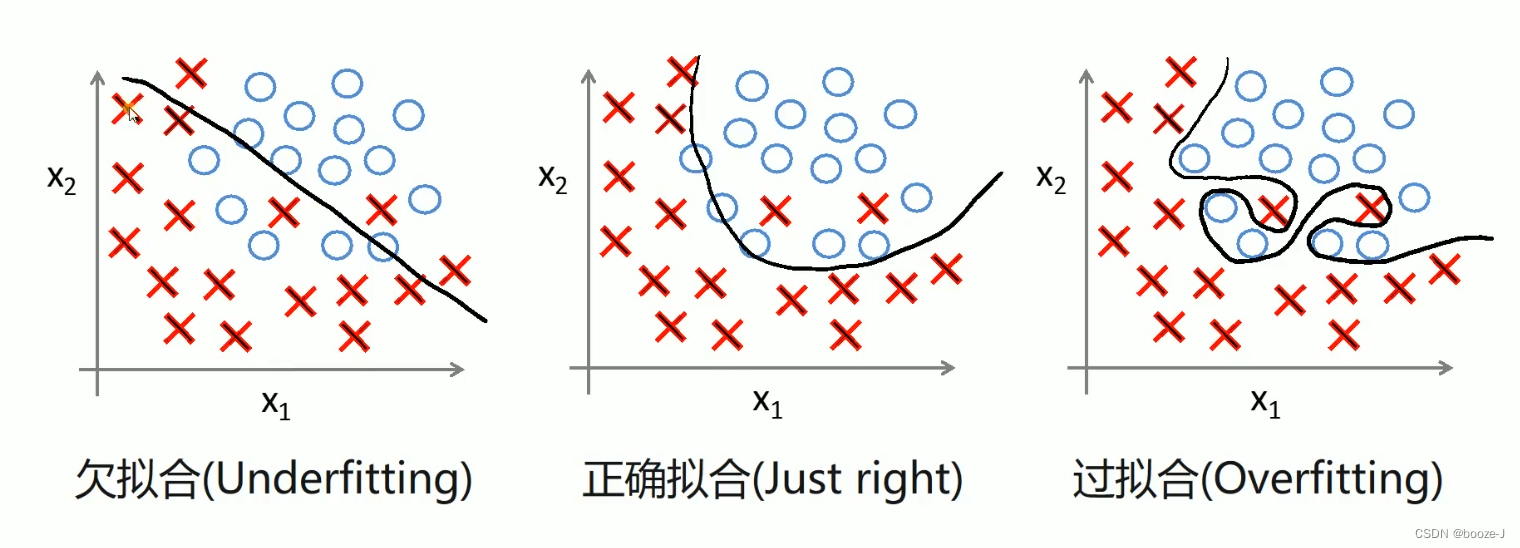

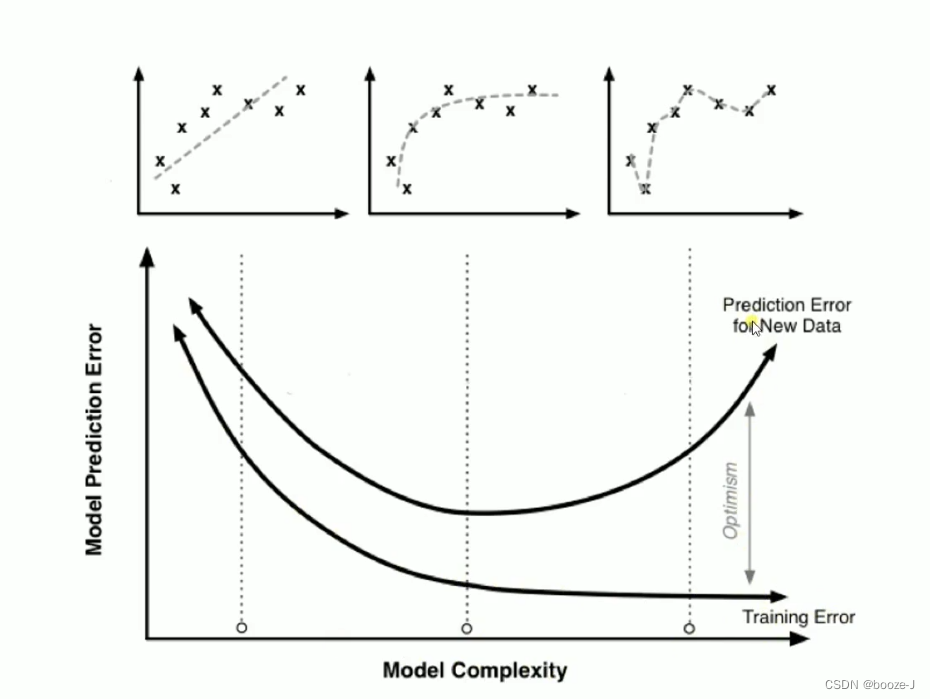

Overfitting leads to larger test error:

As the model structure becomes more and more complex, the training error keeps getting smaller, while the test error first decreases and then increases. This rise in test error is caused by overfitting.

Ideally, the training-error and test-error curves stay relatively close to each other.

Preventing overfitting

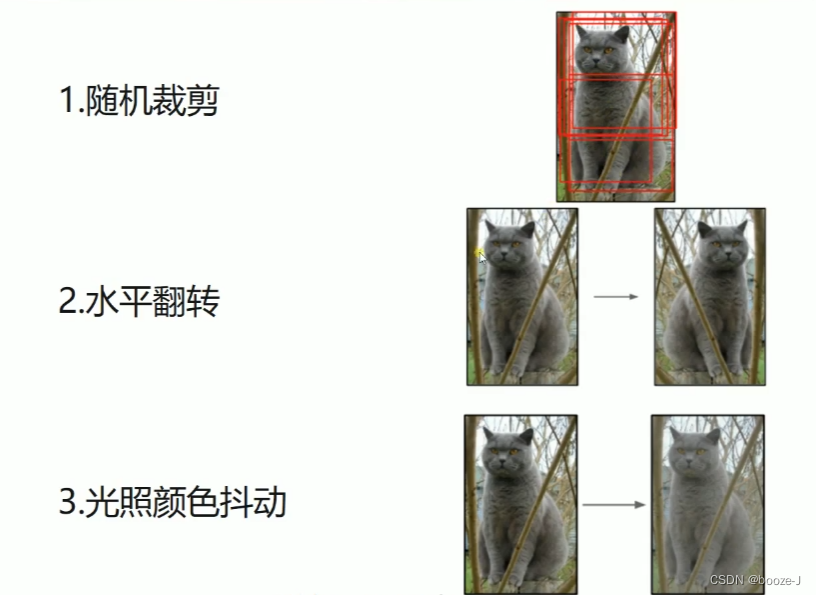

1. Increase the dataset

There is a popular saying in data mining: “Sometimes having more data is better than having a good model.” Generally speaking, the more data that takes part in training, the better the trained model. If there is too little data and the neural network we build is too complex, overfitting becomes much more likely.

2. Early stopping

When training a model, we often set a relatively large number of epochs. Early stopping is a strategy that ends training early to prevent overfitting.

The usual practice is to record the best validation accuracy seen so far. If 10 consecutive epochs fail to reach that best accuracy, we can say the accuracy has stopped improving, and training can stop at that point (early stopping).

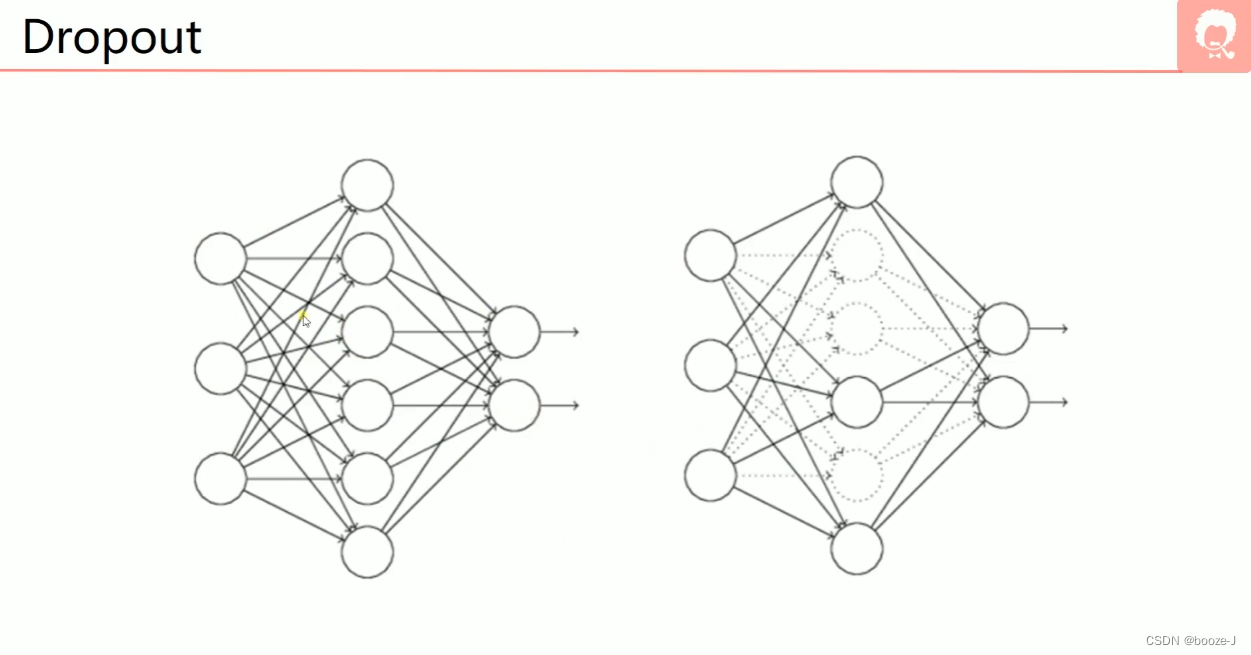
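The patience rule described above can be sketched in plain Python. This is a minimal illustration and not the author's code. The function name `early_stopping_epoch` and the toy accuracy history are hypothetical. The function only decides *when* to stop, given a list of per-epoch validation accuracies. In Keras, the built-in `keras.callbacks.EarlyStopping` callback (with its `monitor` and `patience` arguments) applies the same idea during `model.fit`.

```python
def early_stopping_epoch(val_accuracies, patience=10):
    """Return (stop_epoch, best_epoch, best_accuracy), with epochs counted from 0.

    Training stops as soon as `patience` consecutive epochs fail to beat the
    best validation accuracy recorded so far. If that never happens, training
    runs to the last epoch in the list.
    """
    best_accuracy = float("-inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, accuracy in enumerate(val_accuracies):
        if accuracy > best_accuracy:
            # New best: remember it and reset the patience counter.
            best_accuracy = accuracy
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch, best_accuracy
    return len(val_accuracies) - 1, best_epoch, best_accuracy


# Hypothetical history: accuracy peaks at epoch 3, then plateaus just below it.
history = [0.80, 0.85, 0.88, 0.90] + [0.89] * 12
print(early_stopping_epoch(history))  # (13, 3, 0.9)
```

In practice you would also keep the weights saved at `best_epoch`, because the final epochs before stopping are by definition no better than that one.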

3. Dropout

In each training step, some neurons are switched off at random. Switching a neuron off does not mean removing it from the network. The neuron simply does not take part in that training step. Note that dropout is applied only during training: when the model is tested, all neurons are used and no dropout takes place.
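A minimal sketch of this train/test distinction follows. It assumes *inverted* dropout, the variant used by Keras's `Dropout` layer. Inverted dropout scales the surviving activations by 1 / (1 - rate) during training, so nothing needs rescaling at test time. The function `dropout` and its parameters are hypothetical illustrations that operate on a plain list of activations.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Inverted dropout applied to a list of activations.

    training=True:  each activation is zeroed with probability `rate`; the
                    survivors are scaled by 1 / (1 - rate) so the expected
                    value of every neuron is unchanged.
    training=False: all neurons are used and nothing is dropped.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


hidden = [1.0, 2.0, 3.0, 4.0]
print(dropout(hidden, rate=0.5, training=True, rng=random.Random(0)))  # random mask, kept values doubled
print(dropout(hidden, rate=0.5, training=False))  # [1.0, 2.0, 3.0, 4.0]
```

Because the kept activations are scaled up during training, each neuron's expected output is the same in training and testing. That is why the test-time pass can simply use all neurons unchanged.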

4. Regularization

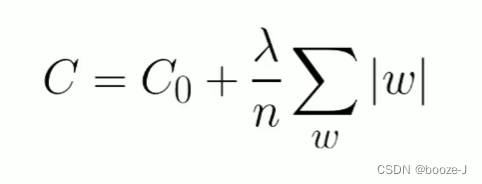

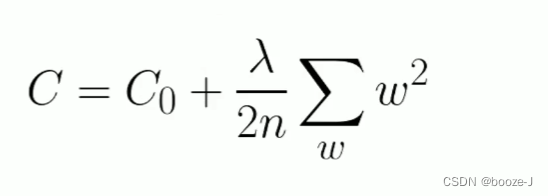

C0 is the original cost function, n is the number of samples, and λ is the coefficient of the regularization term, which weighs the regularization term against the C0 term.
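The usual textbook forms of the two regularized cost functions are shown below for reference, written with the symbols defined above. They assume the formulas in the figures follow this common convention, and the exact constants there may differ. The block also gives each cost's gradient with respect to a weight w:

```latex
\begin{aligned}
\text{L1:}\quad C &= C_0 + \frac{\lambda}{n}\sum_{w}\lvert w\rvert,
  &\quad \frac{\partial C}{\partial w} &= \frac{\partial C_0}{\partial w} + \frac{\lambda}{n}\,\operatorname{sgn}(w)\\
\text{L2:}\quad C &= C_0 + \frac{\lambda}{2n}\sum_{w} w^{2},
  &\quad \frac{\partial C}{\partial w} &= \frac{\partial C_0}{\partial w} + \frac{\lambda}{n}\,w
\end{aligned}
```

The L1 gradient term has the constant magnitude λ/n no matter how small w is, so it keeps pushing small weights all the way to 0. The L2 term is proportional to w, so it shrinks each weight in proportion to its size.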

L1 regularization:

L1 regularization makes the model parameters sparse, pushing many weights to zero.

L2 regularization:

L2 regularization decays the model's weights, driving the parameter values toward 0.
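The sketch below shows the difference between the two penalties by applying one update step from the penalty term alone. The C0 gradient is deliberately omitted. It uses learning rate `lr`, coefficient `lam` (λ) and sample count `n`. For L1 it uses a soft-thresholding (proximal) step, a common way to apply the L1 penalty that clips weights at exactly 0. A plain subgradient step would instead make small weights oscillate around 0. The function names and the toy weights are hypothetical.

```python
import math


def l1_step(weights, lr, lam, n):
    """Apply one soft-thresholding step for the L1 penalty (lam / n) * sum(|w|)."""
    shrink = lr * lam / n  # same shrink amount for every weight
    updated = []
    for w in weights:
        magnitude = max(abs(w) - shrink, 0.0)  # clip at 0 instead of crossing it
        # Keep the original sign; store a plain 0.0 for weights that were zeroed.
        updated.append(math.copysign(magnitude, w) if magnitude > 0.0 else 0.0)
    return updated


def l2_step(weights, lr, lam, n):
    """Apply one gradient step for the L2 penalty (lam / (2 * n)) * sum(w ** 2)."""
    # The penalty gradient is (lam / n) * w, so every weight is scaled by the
    # same factor ("weight decay"). Assuming lr * lam / n < 1, weights shrink
    # toward 0 but never reach it exactly.
    decay = 1.0 - lr * lam / n
    return [w * decay for w in weights]


weights = [0.5, -0.0625, 0.03125, -1.0]  # hypothetical toy weights
print(l1_step(weights, lr=0.5, lam=0.25, n=1))  # [0.375, 0.0, 0.0, -0.875]
print(l2_step(weights, lr=0.5, lam=0.25, n=1))  # [0.4375, -0.0546875, 0.02734375, -0.875]
```

The L1 step subtracts the same amount from every weight, so the two small weights become exactly 0. The L2 step multiplies every weight by the same factor, 0.875, which shrinks them all but leaves none at 0.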

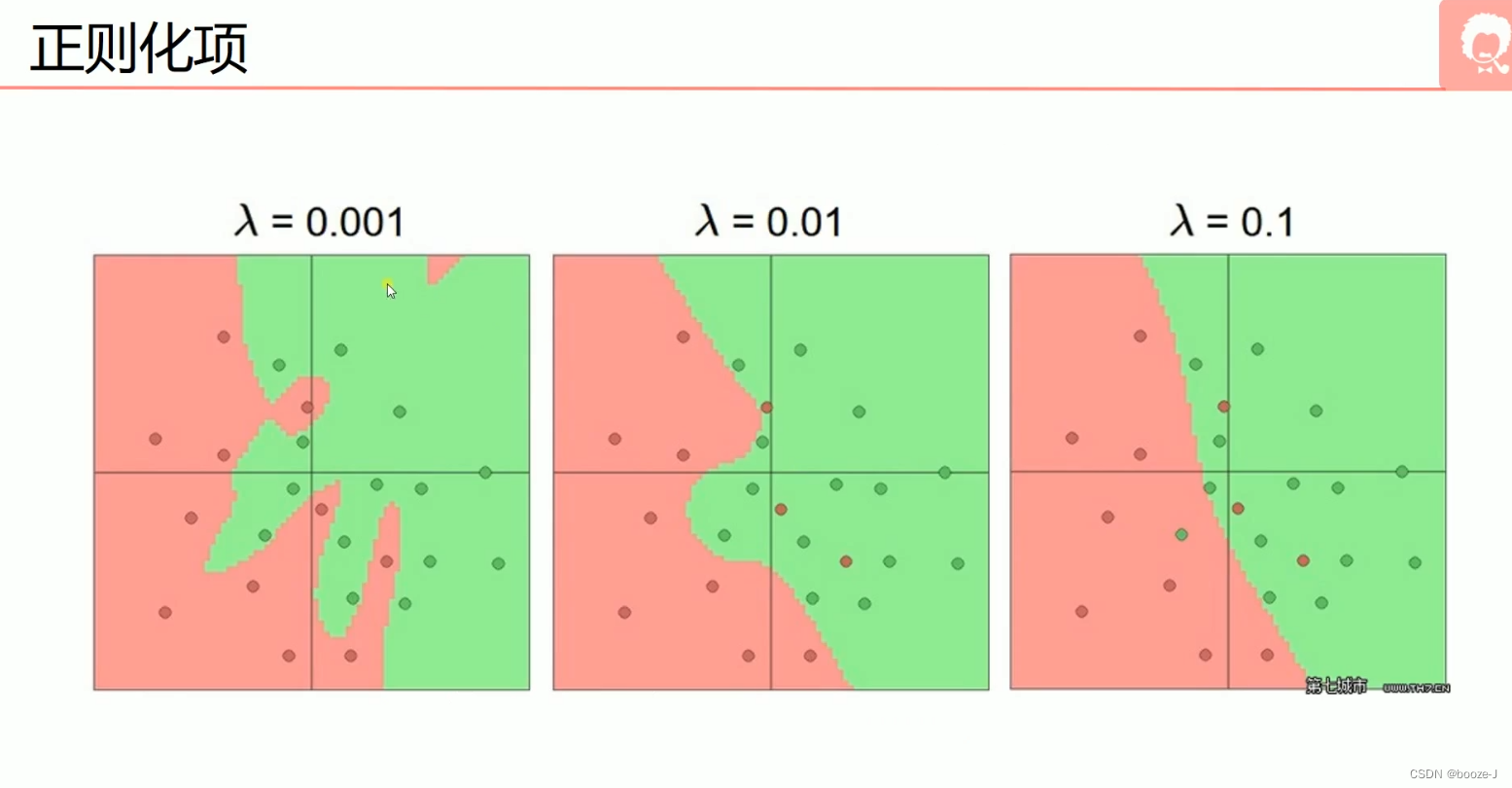

As the figure shows, when λ = 0.001 the model overfits. When λ = 0.01 there is slight overfitting, and when λ = 0.1 there is no overfitting.

边栏推荐

- 13. Model saving and loading

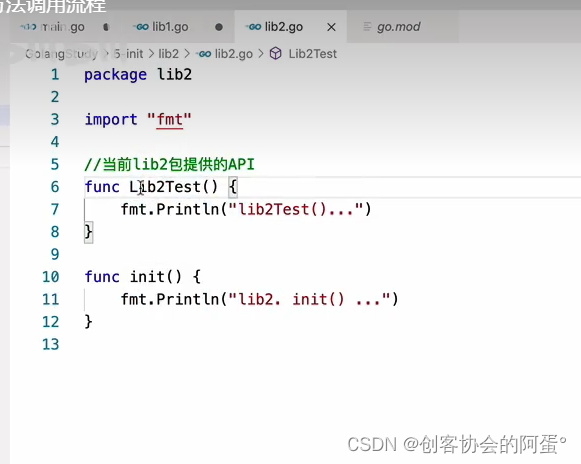

- [Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output

- [Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output

- Which securities company has a low, safe and reliable account opening commission

- 11.递归神经网络RNN

- Introduction to ML regression analysis of AI zhetianchuan

- Lecture 1: the entry node of the link in the linked list

- 牛客基础语法必刷100题之基本类型

- NVIDIA Jetson test installation yolox process record

- Service mesh introduction, istio overview

猜你喜欢

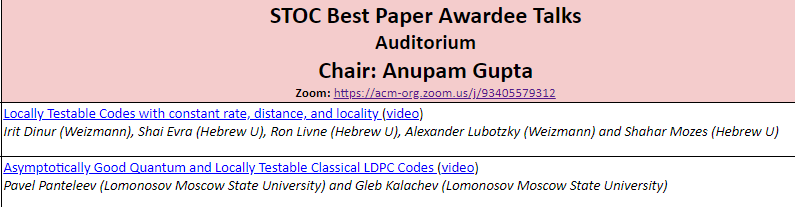

国内首次,3位清华姚班本科生斩获STOC最佳学生论文奖

【GO记录】从零开始GO语言——用GO语言做一个示波器(一)GO语言基础

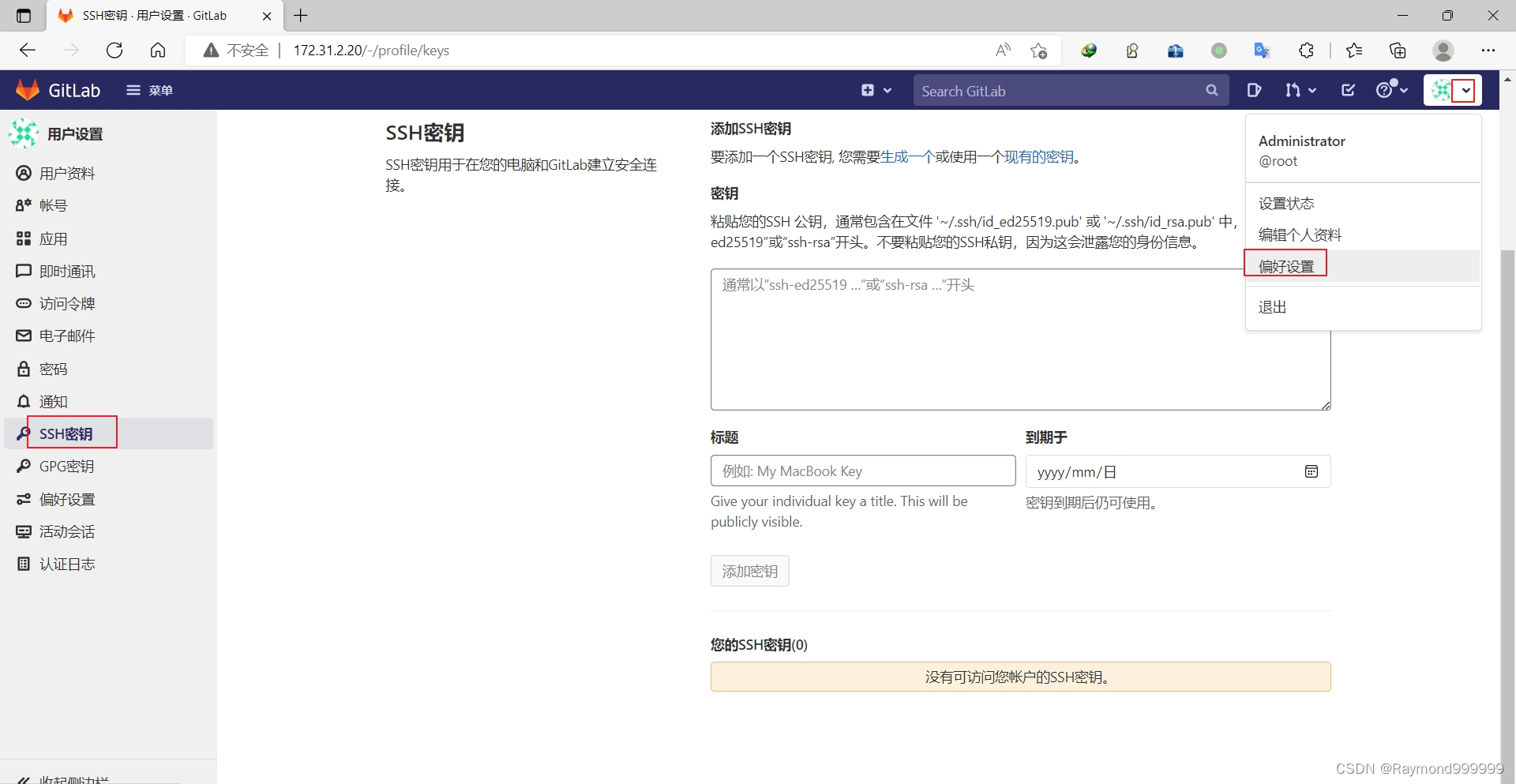

y59.第三章 Kubernetes从入门到精通 -- 持续集成与部署(三二)

Image data preprocessing

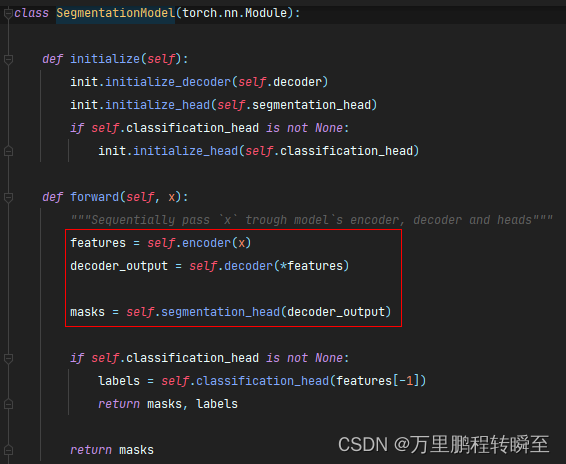

Semantic segmentation model base segmentation_ models_ Detailed introduction to pytorch

完整的模型训练套路

Codeforces Round #804 (Div. 2)(A~D)

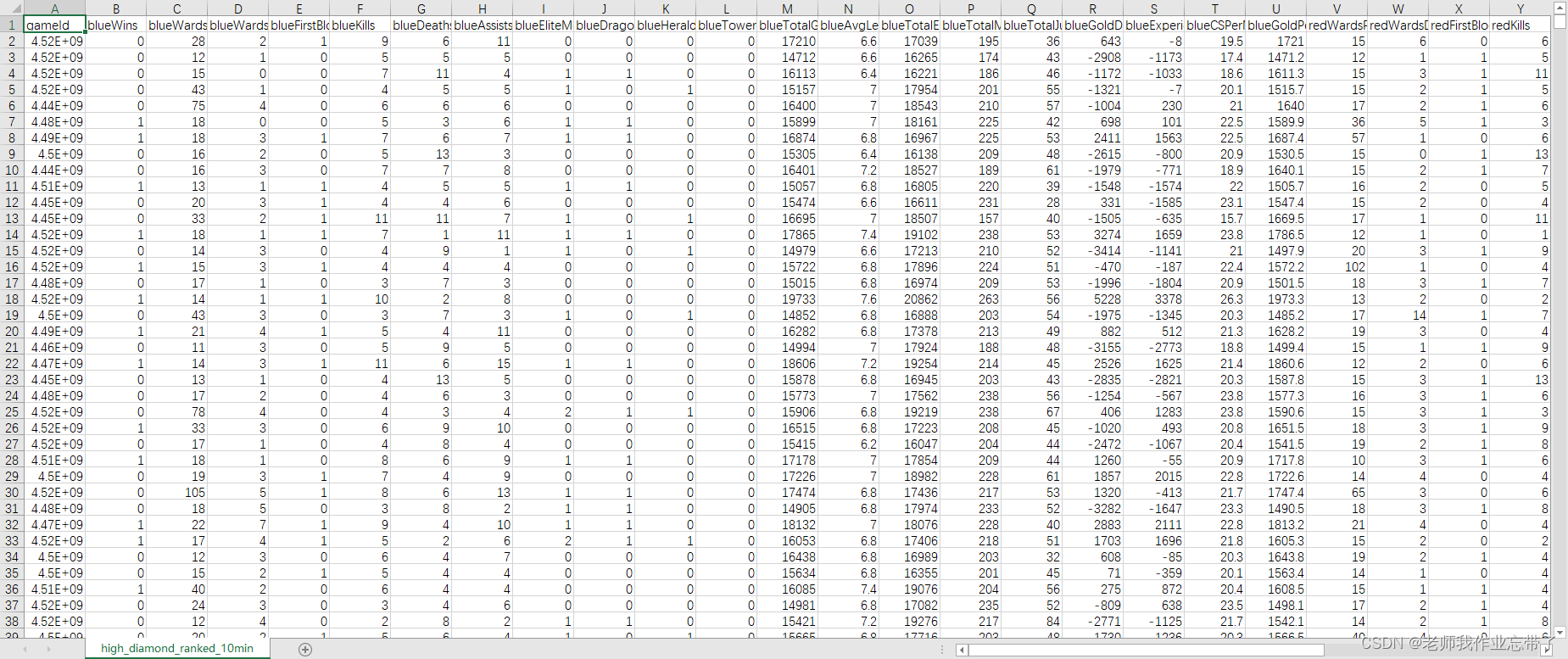

英雄联盟胜负预测--简易肯德基上校

10.CNN应用于手写数字识别

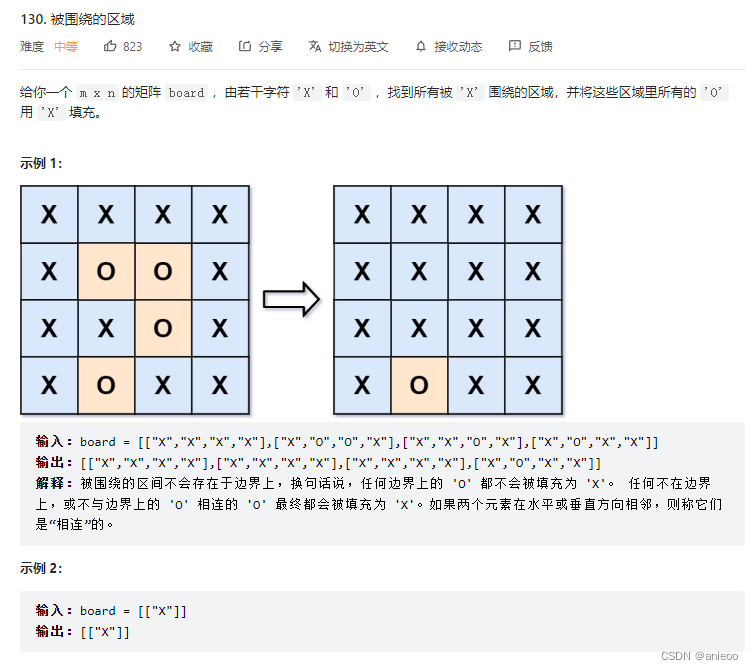

130. Surrounding area

随机推荐

接口测试进阶接口脚本使用—apipost(预/后执行脚本)

8.优化器

7. Regularization application

基于人脸识别实现课堂抬头率检测

Malware detection method based on convolutional neural network

Deep dive kotlin synergy (XXII): flow treatment

Thinkphp内核工单系统源码商业开源版 多用户+多客服+短信+邮件通知

完整的模型训练套路

[OBS] the official configuration is use_ GPU_ Priority effect is true

letcode43:字符串相乘

手机上炒股安全么?

DNS series (I): why does the updated DNS record not take effect?

Cve-2022-28346: Django SQL injection vulnerability

Which securities company has a low, safe and reliable account opening commission

[go record] start go language from scratch -- make an oscilloscope with go language (I) go language foundation

AI zhetianchuan ml novice decision tree

Introduction to paddle - using lenet to realize image classification method II in MNIST

Su embedded training - Day8

New library launched | cnopendata China Time-honored enterprise directory

String usage in C #