
Kafka Connect: synchronizing Kafka data to MySQL

2022-07-08 01:31:00 【W_ Meng_ H】

I. Background information

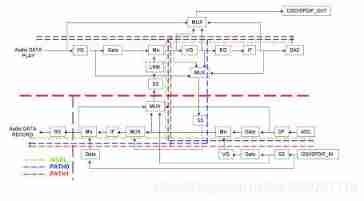

Kafka Connect is used mainly to stream data into and out of Kafka. Source connectors import data from a third-party system into the Kafka brokers, and sink connectors export data from the Kafka brokers into a third-party system.

Official documentation: How to use Kafka Connect - Getting Started | Confluent Documentation

II. Development environment

| Middleware | Version |
| --- | --- |
| zookeeper | 3.7.0 |
| kafka | 2.10-0.10.2.1 |

III. Configure Kafka Connect

1. Go to the config folder of the Kafka installation and modify connect-standalone.properties

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# These are defaults. This file just demonstrates how to override some settings.

bootstrap.servers=kafka-0:9092

# The converters specify the format of data in Kafka and how to translate it into Connect data. Every Connect user will

# need to configure these based on the format they want their data in when loaded from or stored into Kafka

key.converter=org.apache.kafka.connect.json.JsonConverter

value.converter=org.apache.kafka.connect.json.JsonConverter

# Converter-specific settings can be passed in by prefixing the Converter's setting with the converter we want to apply

# it to

key.converter.schemas.enable=false

value.converter.schemas.enable=false

# The internal converter used for offsets and config data is configurable and must be specified, but most users will

# always want to use the built-in default. Offset and config data is never visible outside of Kafka Connect in this format.

internal.key.converter=org.apache.kafka.connect.json.JsonConverter

internal.value.converter=org.apache.kafka.connect.json.JsonConverter

internal.key.converter.schemas.enable=false

internal.value.converter.schemas.enable=false

offset.storage.file.filename=/tmp/connect.offsets

# Flush much faster than normal, which is useful for testing/debugging

offset.flush.interval.ms=10000

# The core configuration

#plugin.path=/home/kafka/plugins

If MySQL data is synchronized to Kafka, the following settings need to be modified:

# The converters specify the format of data in Kafka and how to translate it into Connect data. Every Connect user will

# need to configure these based on the format they want their data in when loaded from or stored into Kafka

key.converter=io.confluent.connect.json.JsonSchemaConverter

value.converter=io.confluent.connect.json.JsonSchemaConverter

# Converter-specific settings can be passed in by prefixing the Converter's setting with the converter we want to apply

# it to

key.converter.schemas.enable=true

value.converter.schemas.enable=true

Note: earlier versions of Kafka Connect do not support the plugin.path setting. With those versions, specify the plug-in location in CLASSPATH instead:

vi /etc/profile

export CLASSPATH=/home/kafka/*

source /etc/profile

2. Modify connect-mysql-source.properties (MySQL to Kafka)

#

# Copyright 2018 Confluent Inc.

#

# Licensed under the Confluent Community License (the "License"); you may not use

# this file except in compliance with the License. You may obtain a copy of the

# License at

#

# http://www.confluent.io/confluent-community-license

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

# WARRANTIES OF ANY KIND, either express or implied. See the License for the

# specific language governing permissions and limitations under the License.

#

# A simple example that copies a table from a MySQL database. The first few settings are

# required for all connectors: a name, the connector class to run, and the maximum number of

# tasks to create:

name=test-source-mysql

connector.class=io.confluent.connect.jdbc.JdbcSourceConnector

tasks.max=1

# The remaining configs are specific to the JDBC source connector. In this example, we connect to the

# MySQL database 'demo' on localhost, use an auto-incrementing column called 'id' to

# detect new rows as they are added, and output to topics prefixed with 'mysql-', e.g.

# the table 'test' will be written to the topic 'mysql-test'.

connection.url=jdbc:mysql://localhost:3306/demo?user=root&password=root

table.whitelist=test

mode=incrementing

incrementing.column.name=id

topic.prefix=mysql-

# Define when identifiers should be quoted in DDL and DML statements.

# The default is 'always' to maintain backward compatibility with prior versions.

# Set this to 'never' to avoid quoting fully-qualified or simple table and column names.

#quote.sql.identifiers=always
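The `mode=incrementing` setting above can be pictured as a polling loop that remembers the largest `id` it has seen and fetches only rows beyond it. The following is a minimal sketch of that idea, not the connector's actual implementation. It uses an in-memory SQLite database in place of MySQL so that it runs without a server; the function name `poll_new_rows` and the `name` column are hypothetical.

```python
import sqlite3

TOPIC_PREFIX = "mysql-"  # mirrors topic.prefix in the config above
TABLE = "test"           # mirrors table.whitelist in the config above


def poll_new_rows(conn, last_id):
    """Return (rows, new_last_id) for rows whose id is greater than last_id."""
    cur = conn.execute(
        f"SELECT id, name FROM {TABLE} WHERE id > ? ORDER BY id ASC", (last_id,)
    )
    rows = cur.fetchall()
    new_last_id = rows[-1][0] if rows else last_id
    return rows, new_last_id


# Stand-in for the MySQL table; the 'name' column is an assumption for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE test (id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT)")
conn.executemany("INSERT INTO test (name) VALUES (?)", [("a",), ("b",)])

topic = TOPIC_PREFIX + TABLE  # topic name the rows would be published to
first_batch, offset = poll_new_rows(conn, 0)

# A row added later is picked up by the next poll; earlier rows are not re-read.
conn.execute("INSERT INTO test (name) VALUES (?)", ("c",))
second_batch, offset = poll_new_rows(conn, offset)
```

Because only the `id` column is compared, this mode detects newly inserted rows but not updates or deletes on rows that were already read.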

Related driver JAR package:

Kafka related driver package - Java documentation resources - CSDN download

3. Modify connect-mysql-sink.properties (Kafka to MySQL)

#

# Copyright 2018 Confluent Inc.

#

# Licensed under the Confluent Community License (the "License"); you may not use

# this file except in compliance with the License. You may obtain a copy of the

# License at

#

# http://www.confluent.io/confluent-community-license

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

# WARRANTIES OF ANY KIND, either express or implied. See the License for the

# specific language governing permissions and limitations under the License.

#

# A simple example that copies from a topic to a MySQL database.

# The first few settings are required for all connectors:

# a name, the connector class to run, and the maximum number of tasks to create:

name=test-sink

connector.class=io.confluent.connect.jdbc.JdbcSinkConnector

tasks.max=1

# The topics to consume from - required for sink connectors like this one

topics=mysql-test_to_kafka

# Configuration specific to the JDBC sink connector.

# We connect to the MySQL database 'demo' on localhost; auto.create=false means the target table must already exist.

connection.url=jdbc:mysql://localhost:3306/demo?user=root&password=root

auto.create=false

pk.mode=record_value

pk.fields=id

table.name.format=test_kafka_to

#delete.enabled=true

# Write mode

insert.mode=upsert

# Define when identifiers should be quoted in DDL and DML statements.

# The default is 'always' to maintain backward compatibility with prior versions.

# Set this to 'never' to avoid quoting fully-qualified or simple table and column names.

#quote.sql.identifiers=always
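With `insert.mode=upsert`, `pk.mode=record_value` and `pk.fields=id`, the key is taken from the `id` field of the record value, and a record whose `id` already exists in the target table updates that row instead of creating a duplicate. A minimal sketch of that behaviour, assuming an in-memory SQLite table as a stand-in for `test_kafka_to` (SQLite 3.24 or later is needed for `ON CONFLICT`; the connector itself generates MySQL-specific SQL) and a hypothetical `name` column:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# auto.create=false in the config above means the table must exist beforehand.
conn.execute("CREATE TABLE test_kafka_to (id INTEGER PRIMARY KEY, name TEXT)")


def upsert(conn, record):
    """Insert the record, or update the existing row that has the same id."""
    conn.execute(
        "INSERT INTO test_kafka_to (id, name) VALUES (:id, :name) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
        record,
    )


# Two records share id=1, so the second one overwrites the first.
upsert(conn, {"id": 1, "name": "first"})
upsert(conn, {"id": 1, "name": "updated"})
upsert(conn, {"id": 2, "name": "second"})

rows = conn.execute("SELECT id, name FROM test_kafka_to ORDER BY id").fetchall()
```

Replaying the same topic therefore leaves the table in the same state, which is the main reason to prefer upsert over plain insert for this kind of synchronization.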

IV. Start command

bin/connect-standalone.sh config/connect-standalone.properties config/connect-mysql-source.properties config/connect-mysql-sink.properties
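Once the worker is running, the state of both connectors can be checked through the Kafka Connect REST interface. A minimal sketch, assuming the worker listens on localhost at the default REST port 8083 (neither is stated in the configuration above) and using the connector names from the two properties files:

```python
import json
import urllib.request

BASE_URL = "http://localhost:8083"  # assumption: local worker, default REST port


def status_url(connector_name, base_url=BASE_URL):
    """Build the status endpoint URL for a connector."""
    return f"{base_url}/connectors/{connector_name}/status"


def fetch_status(connector_name, base_url=BASE_URL, timeout=5):
    """Return the connector status as a dict (requires a running worker)."""
    url = status_url(connector_name, base_url)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    for name in ("test-source-mysql", "test-sink"):
        try:
            print(name, fetch_status(name)["connector"]["state"])
        except OSError as exc:  # worker not reachable or connector not found
            print(name, "unavailable:", exc)
```

A state other than `RUNNING` usually means the connector failed at startup, and the worker log is the place to look for the cause, for example a missing JDBC driver on the plug-in path or CLASSPATH.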

- Chapter VIII integrated learning

- Apt get error

- How to get the first and last days of a given month

- Macro definition and multiple parameters

- Design method and reference circuit of type C to hdmi+ PD + BB + usb3.1 hub (rj45/cf/tf/ sd/ multi port usb3.1 type-A) multifunctional expansion dock

- redis的持久化方式-RDB和AOF 两种持久化机制

- Capstone/cs5210 chip | cs5210 design scheme | cs5210 design data

- 2021-04-12 - new features lambda expression and function functional interface programming

- Gnuradio transmits video and displays it in real time using VLC

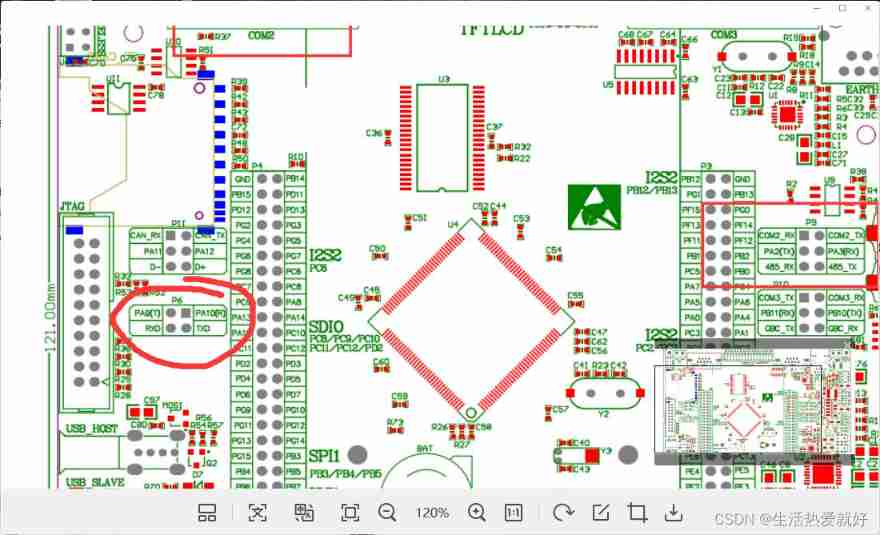

- Study notes of single chip microcomputer and embedded system

猜你喜欢

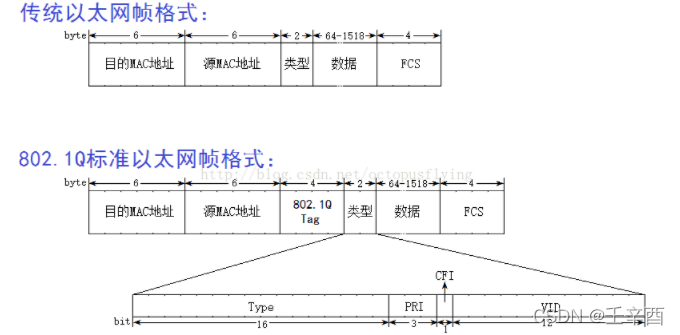

Led serial communication

break net

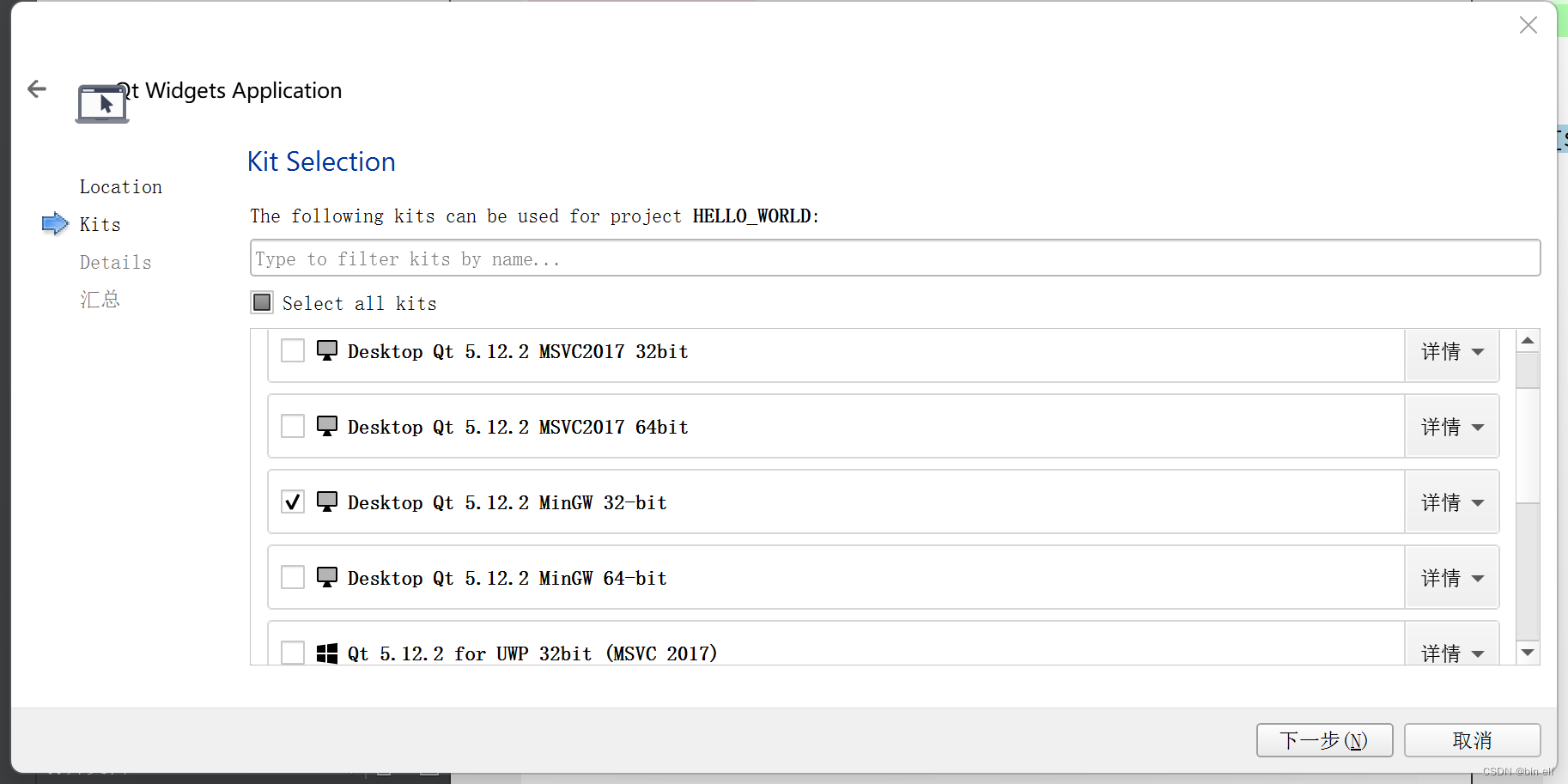

QT build with built-in application framework -- Hello World -- use min GW 32bit

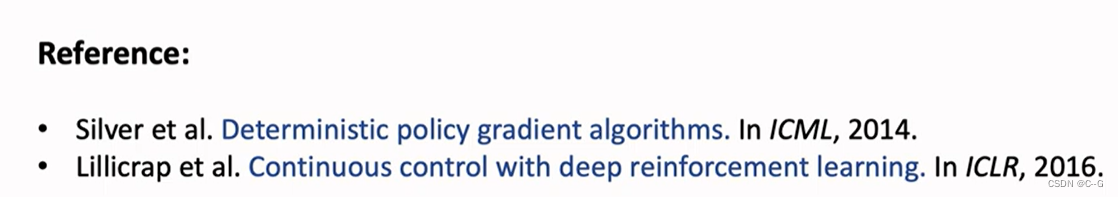

5. Discrete control and continuous control

![Gnuradio operation error: error thread [thread per block [12]: < block OFDM_ cyclic_ prefixer(8)>]: Buffer too small](/img/ab/066923f1aa1e8dd8dcc572cb60a25d.jpg)

Gnuradio operation error: error thread [thread per block [12]: < block OFDM_ cyclic_ prefixer(8)>]: Buffer too small

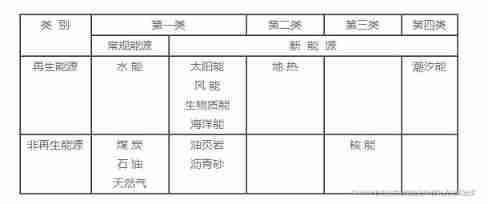

Definition and classification of energy

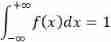

The difference between distribution function and probability density function of random variables

Taiwan Xinchuang sss1700 latest Chinese specification | sss1700 latest Chinese specification | sss1700datasheet Chinese explanation

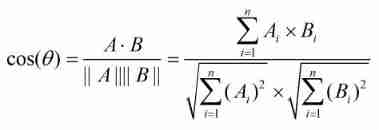

Matlab code about cosine similarity

2022 high voltage electrician examination skills and high voltage electrician reexamination examination

随机推荐

4、策略学习

Mat file usage

Ag9310meq ag9310mfq angle two USB type C to HDMI audio and video data conversion function chips parameter difference and design circuit reference

Redis 主从复制

[loss function] entropy / relative entropy / cross entropy

FIR filter of IQ signal after AD phase discrimination

解决报错:npm WARN config global `--global`, `--local` are deprecated. Use `--location=global` instead.

Gnuradio3.9.4 create OOT module instances

2021-04-12 - new features lambda expression and function functional interface programming

QT build with built-in application framework -- Hello World -- use min GW 32bit

General configuration toolbox

High quality USB sound card / audio chip sss1700 | sss1700 design 96 kHz 24 bit sampling rate USB headset microphone scheme | sss1700 Chinese design scheme explanation

2021 Shanghai safety officer C certificate examination registration and analysis of Shanghai safety officer C certificate search

Plot function drawing of MATLAB

Kaptcha generates verification code on Web page

About snake equation (5)

从Starfish OS持续对SFO的通缩消耗,长远看SFO的价值

qt--将程序打包--不要安装qt-可以直接运行

The usage of rand function in MATLAB

Measure the voltage with analog input (taking Arduino as an example, the range is about 1KV)