[Loss function] Entropy / relative entropy / cross entropy

2022-07-08 01:21:00 【Ice cream and Mousse Cake】

An intuitive, though not rigorous, summary:

Entropy: the expected amount of information over all possible outcomes of a random variable.

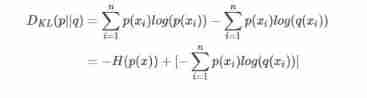

Relative entropy (KL divergence): the difference in the amount of information between the true distribution and the predicted distribution (true minus predicted). The smaller the value, the closer the prediction is to the true distribution.
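For reference, the standard discrete definitions can be written as below. The notation is an assumption made here, not taken from the original post: $p$ is the true distribution, $q$ is the predicted distribution, and the logarithm base is left unspecified (natural log gives nats, base 2 gives bits).

$$
H(p) = -\sum_i p(x_i)\,\log p(x_i),
\qquad
D_{KL}(p \,\|\, q) = \sum_i p(x_i)\,\log\frac{p(x_i)}{q(x_i)}
$$

$D_{KL}(p \,\|\, q)$ is zero when $q = p$ and positive otherwise, which is why a smaller value means a better prediction.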

Cross entropy: obtained by rearranging the KL divergence formula. Expanding the KL divergence gives two terms: the first is the negative entropy of the true distribution, and the second is the cross entropy. Because the entropy of the true distribution is constant during optimization, minimizing the cross entropy is equivalent to minimizing the KL divergence, so it is more convenient to compute the loss directly with cross entropy.
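The relationship above can be checked numerically. The following is a minimal sketch, not code from the original post: the function names and the example distributions `p`, `q_good`, and `q_bad` are made up for illustration, natural logarithms are used, and `q` is assumed to be nonzero wherever `p` is nonzero.

```python
import math

def entropy(p):
    # H(p) = -sum p_i * log(p_i); terms with p_i == 0 contribute nothing
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # H(p, q) = -sum p_i * log(q_i); assumes q_i > 0 wherever p_i > 0
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    # D_KL(p || q) = sum p_i * log(p_i / q_i); same assumption on q
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical true distribution and two hypothetical predictions
p = [0.7, 0.2, 0.1]
q_good = [0.6, 0.3, 0.1]   # close to p
q_bad = [0.1, 0.2, 0.7]    # far from p

for name, q in [("q_good", q_good), ("q_bad", q_bad)]:
    kl = kl_divergence(p, q)
    ce = cross_entropy(p, q)
    # KL divergence equals cross entropy minus the (constant) entropy of p
    print(f"{name}: KL={kl:.4f}  CE={ce:.4f}  CE - H(p)={ce - entropy(p):.4f}")
```

Since `entropy(p)` does not depend on the prediction, the two predictions are ranked the same way by KL divergence and by cross entropy, which is the reason cross entropy alone is enough as a loss.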

These blog notes are kept for my own review and organization. If you spot any mistakes, please point them out. Thank you!

Reference

https://blog.csdn.net/tsyccnh/article/details/79163834

边栏推荐

- Capstone/cs5210 chip | cs5210 design scheme | cs5210 design data

- Complete model verification (test, demo) routine

- Basic implementation of pie chart

- Solve the error: NPM warn config global ` --global`, `--local` are deprecated Use `--location=global` instead.

- Led serial communication

- Ag9310 same function alternative | cs5261 replaces ag9310type-c to HDMI single switch screen alternative | low BOM replaces ag9310 design

- Arm bare metal

- 2022 examination for safety production management personnel of hazardous chemical production units and new version of examination questions for safety production management personnel of hazardous chem

- 14.绘制网络模型结构

- Su embedded training - Day5

猜你喜欢

Two methods for full screen adaptation of background pictures, background size: cover; Or (background size: 100% 100%;)

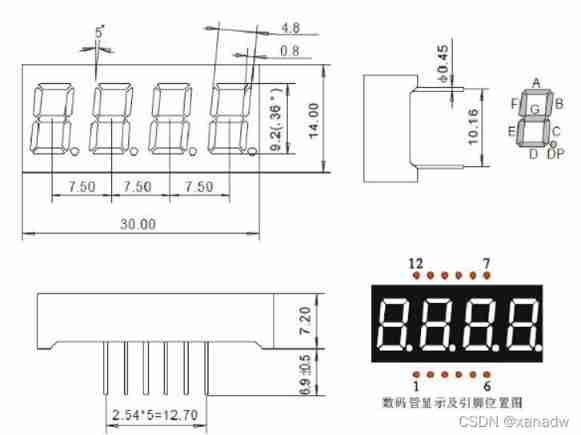

Four digit nixie tube display multi digit timing

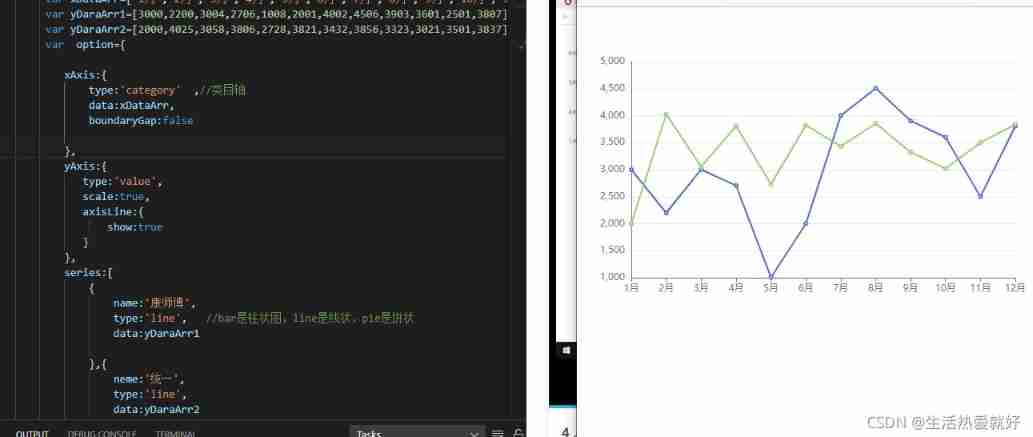

Basic realization of line chart (II)

A speed Limited large file transmission tool for every major network disk

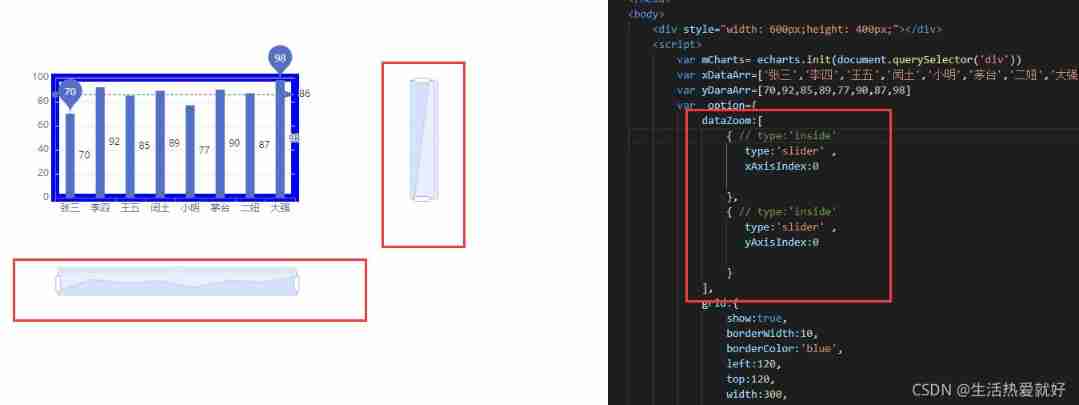

Common configurations in rectangular coordinate system

Vscode is added to the right-click function menu

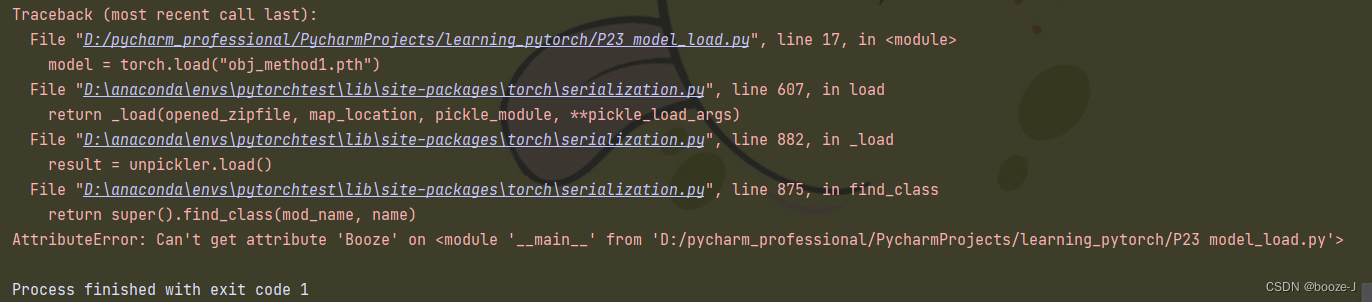

Saving and reading of network model

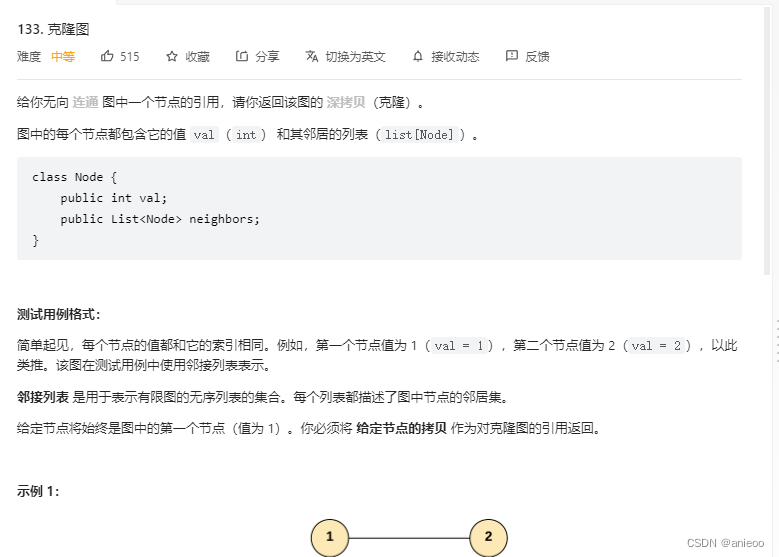

133. 克隆图

Chapter 16 intensive learning

2022 tea master (intermediate) examination questions and tea master (intermediate) examination skills

随机推荐

4. Strategic Learning

C#中string用法

2022 refrigeration and air conditioning equipment operation examination questions and refrigeration and air conditioning equipment operation examination skills

Chapter VIII integrated learning

12. RNN is applied to handwritten digit recognition

Su embedded training - C language programming practice (implementation of address book)

Get started quickly using the local testing tool postman

General configuration tooltip

Using GPU to train network model

14. Draw network model structure

Cs5212an design display to VGA HD adapter products | display to VGA Hd 1080p adapter products

11. Recurrent neural network RNN

Basic implementation of pie chart

Leetcode notes No.21

Design method and application of ag9311maq and ag9311mcq in USB type-C docking station or converter

Redis 主从复制

Multi purpose signal modulation generation system based on environmental optical signal detection and user-defined signal rules

Leetcode notes No.7

2022 operation certificate examination for main principals of hazardous chemical business units and main principals of hazardous chemical business units

Several frequently used OCR document scanning tools | no watermark | avoid IQ tax