当前位置:网站首页>Lesson 7 tensorflow realizes convolutional neural network

Lesson 7 tensorflow realizes convolutional neural network

2022-07-06 06:34:00 【Yi Xiaoxia】

TensorFlow Realize convolutional neural network

One This section introduces

1.1 Knowledge point

1、 Convolution neural network introduction ;

2、TensorFlow practice CNN The Internet ;

Two Course content

2.1 Basic introduction of convolutional neural network

Convolution neural network is a neural network model constructed by convolution structure , Its characteristic is local perception 、 Weight sharing , Pooling reduces parameters and hierarchies .

Its basic structure includes input layer 、 Convolution layer 、 Downsampling and fully connected output layer . Each layer is convoluted by the convolution check image , The calculated matrix is called a characteristic graph , The area mapped by the feature map in the original image is called receptive field . generally speaking , The receptive field size of the first convolution layer is equal to the convolution kernel size , The receptive field size of the subsequent convolution layer is related to the size and step size of each convolution kernel before . Next, we will introduce the basic concepts of convolution kernel and step size .

2.1.1 Convolution kernel and step size

Convolution kernel includes convolution kernel size 、 The number of input channels and the number of output channels . Such as (5,5,32,64) You mean for 64 individual 32 The tunnel 5*5 Convolution kernel and input are convoluted to obtain 64 A convolution result . Among them, the calculation of convolution is carried out by multiplying and summing elements one by one . The length of each convolution movement is its convolution step .

In the concept of convolution kernel , Also used. padding. That is, in order to solve the problems of smaller and smaller images and loss of boundary information in convolution operation .Padding It can be divided into the following two kinds :

(1)valid padding: No processing , Use only the original image , Convolution kernel is not allowed to exceed the boundary of the original image ;

(2)same padding: Fill in , Allow the convolution kernel to exceed the boundary of the original image , And make the size of the convolution result consistent with the original .

2.1.2 pooling Pooling

The role of the pooling layer is to reduce the dimensions of each feature , In order to reduce the amount of calculation . Generally speaking, the pool layer is often located behind each convolution layer , It is used to reduce calculation and prevent over fitting . The calculation method of pooling layer is similar to that of convolution layer , It is the way of multiplying and adding each element , The difference is that the convolution kernel has training parameters , The pool layer has no training parameters , The purpose is only to reduce the calculation . The pool layer is divided into the following two categories :

(1) Maximum pooling (max pooling): Select the largest element from the window correction diagram ;

(2) The average pooling (average pooling): Calculate the average from the window characteristic graph

2.1.3 CNN characteristic

Local receptive field : Convolution neural network extracts local features by using convolution kernel , Then the global information can be obtained by synthesizing the regional characteristics of different neurons at a deep level , It can also reduce the number of connections .

Weight sharing : Sharing parameters between different neurons can reduce the calculation of the model , And weight sharing is to use the same convolution kernel to convolute the image , In this way, all neurons in the convolution layer can detect the same features in different positions of the image , Its main function is also to detect the same type of features in different locations , That is, the image can be translated in a small range , Translation invariance .

3、 ... and Experimental tests

3.1 predefined

First import the library :

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

(1) Then read the data set of handwritten fonts , And turn it into onehot code , That is, different image features are represented by different coding methods .

mnist = input_data.read_data_sets('MNIST_data_bak/', one_hot=True)

(2) Initialize the calculation session context , stay TensorFlow The calculation of the number in depends on the structure of the session :

sess = tf.InteractiveSession()

(3) Define W Variable , Initialization according to the positive distribution , The standard deviation is set to 0.1:

def weight_variable(shape):

initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)

(4) Definition b Variable , Initialize to a constant :

def bias_variable(shape):

initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)

(5) Define the convolution function , among x For the input image data ,w Is the parameter of convolution , among stride Step size defined for ,padding Use same The way :

def conv2d(x, W):

return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

(6) Define pooling layer functions , among x For the input image data ,w Is the parameter of convolution , among stride Step size defined for ( In order to achieve the purpose of compressing data , Use steps of 2),padding Use same The way :

def max_pool_2x2(x):

return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

3.2 Model structure construction

(1)TensorFlow You need to use placeholders for input and output , Because the input image size is 2828, Therefore, one-dimensional input vector needs to be transformed into a two-dimensional picture structure , From 1784 The original form of 28*28 Structure .

x = tf.placeholder(tf.float32, [None, 784])

y_ = tf.placeholder(tf.float32, [None, 10])

x_image = tf.reshape(x, [-1, 28, 28, 1])

(2) Define the first convolution layer , there [3,3, 1, 32] The size of the convolution kernel is 33,1 A color channel ,32 Different convolution kernels , And then use conv2d Function convolution operation , And add an offset term , Then use ReLU The activation function is nonlinear , Last , Use the maximum pooling function max_pool_22 Pool the output of convolution .

W_conv1 = weight_variable([3, 3, 1, 32])

b_conv1 = bias_variable([32])

h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

h_pool1 = max_pool_2x2(h_conv1)

(3) Define the second convolution layer , there [5,5,32,64] The size of the convolution kernel is 55,32 Input channels ,64 Convolution kernels with different outputs , And then use conv2d Function convolution operation , And add an offset term , Then use ReLU The activation function is nonlinear , Last , Use the maximum pooling function max_pool_22 Pool the output of convolution .

W_conv2 = weight_variable([5, 5, 32, 64])

b_conv2 = bias_variable([64])

h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

h_pool2 = max_pool_2x2(h_conv2)

(4) Because I've gone through two steps 22 Maximum pooling of , So the side length is just 1/4 了 , Picture size by 2828 Turned into 77, After two pooling , Each pool transformation 1/2. The number of convolution kernels in the second convolution layer is 64, Its output is tensor The size is 77*64. We use tf.reshape Function to the output of the second convolution layer tensor To deform , Turn it into a one-dimensional vector . Then connect a full connectivity layer , The implied node is 1024, And use ReLU Activation function , Feature combination .

W_fc1 = weight_variable([7 * 7 * 64, 1024])

b_fc1 = bias_variable([1024])

h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])

h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

(5) Use softmax Activate the function to classify , Add and classify the whole connection layer .

W_fc2 = weight_variable([1024, 10])

b_fc2 = bias_variable([10])

y_conv = tf.nn.softmax(tf.matmul(h_fc1, W_fc2) + b_fc2)

3.3 Optimizer and loss function

Here we use Adam Loss function , The learning rate is 0.01, Use cross entropy loss for classification .

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y_conv),

reduction_indices=[1]))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

3.4 Model iteration training and evaluation

(1) Set the accuracy of model calculation .

correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

(2) Model iteration training

accuracys=[]

tf.global_variables_initializer().run()

for i in range(1000):

batch = mnist.train.next_batch(50)

if i % 10 == 0:

train_accuracy = accuracy.eval(feed_dict={x: batch[0], y_: batch[1]})

print("step :%d, |training accuracy :%g" % (i, train_accuracy))

accuracys.append(train_accuracy)

train_step.run(feed_dict={x: batch[0], y_: batch[1]})

3.5 Visual drawing

Draw the evaluation chart according to the accuracy .

import matplotlib.pyplot as plt

plt.plot(accuracys)

plt.show()

边栏推荐

- 【MQTT从入门到提高系列 | 01】从0到1快速搭建MQTT测试环境

- Redis core technology and basic architecture of actual combat: what does a key value database contain?

- How much is it to translate Chinese into English for one minute?

- 基於JEECG-BOOT的list頁面的地址欄參數傳遞

- Error getting a new connection Cause: org. apache. commons. dbcp. SQLNestedException

- Address bar parameter transmission of list page based on jeecg-boot

- Drug disease association prediction based on multi-scale heterogeneous network topology information and multiple attributes

- Black cat takes you to learn EMMC Protocol Part 10: EMMC read and write operation details (read & write)

- Chinese English comparison: you can do this Best of luck

- How to extract login cookies when JMeter performs interface testing

猜你喜欢

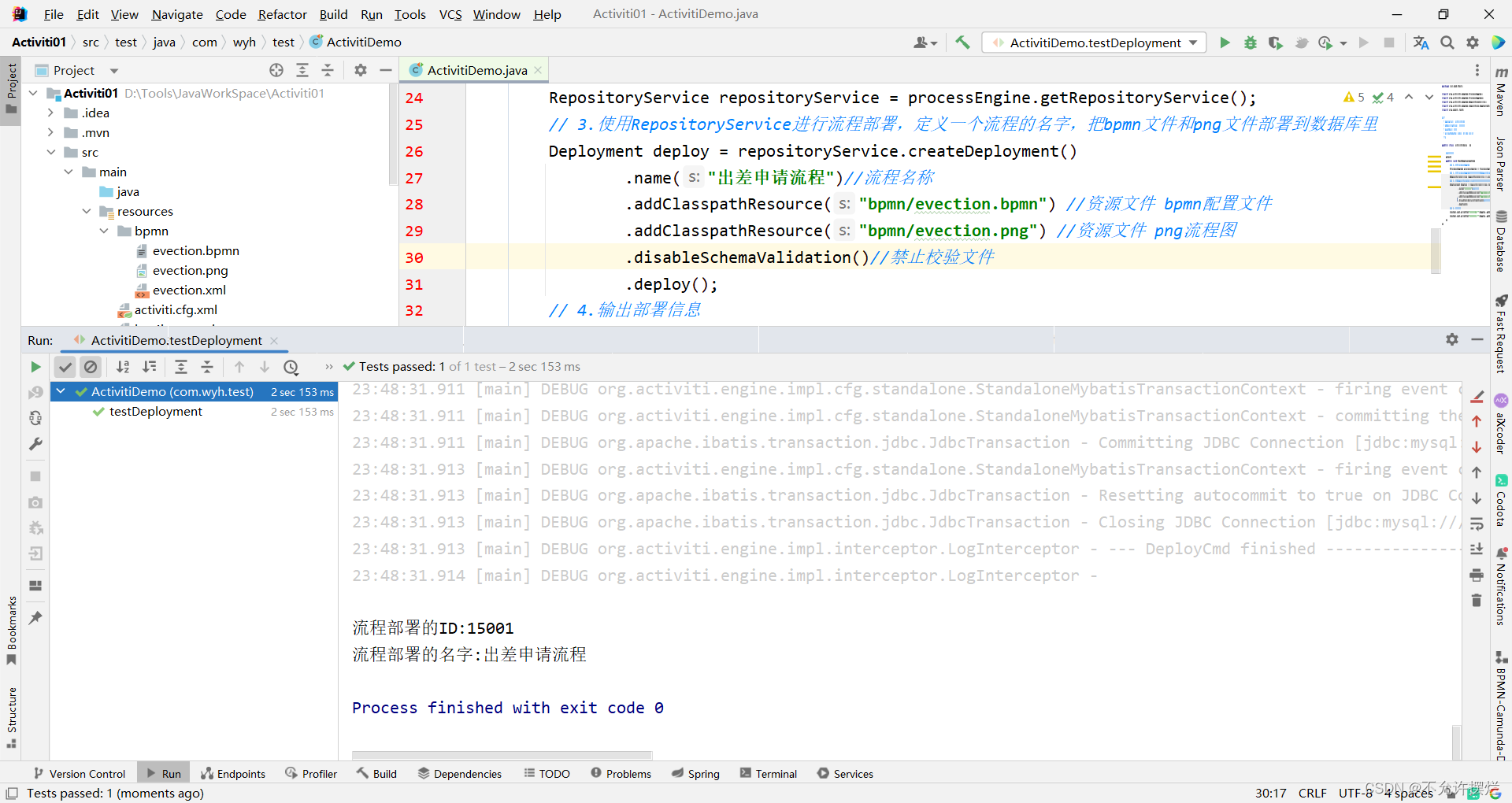

org.activiti.bpmn.exceptions.XMLException: cvc-complex-type.2.4.a: 发现了以元素 ‘outgoing‘ 开头的无效内容

How to translate professional papers and write English abstracts better

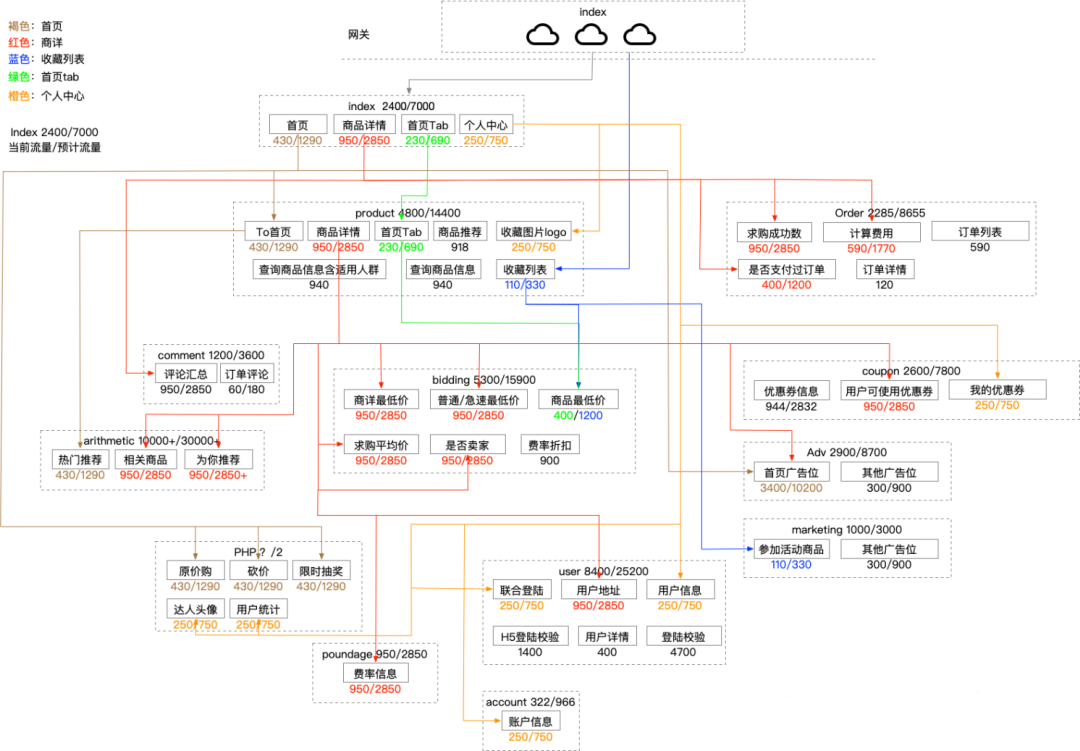

Full link voltage measurement: building three models

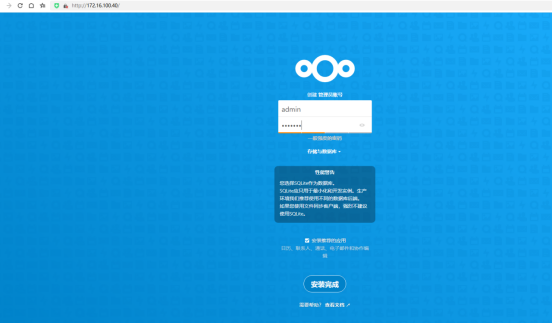

私人云盘部署

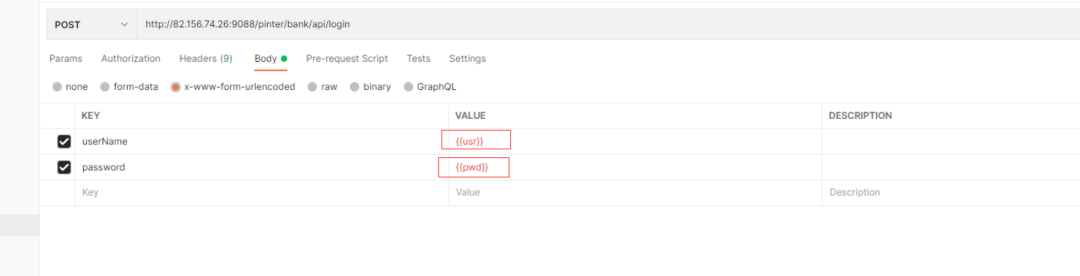

Postman core function analysis - parameterization and test report

翻译生物医学说明书,英译中怎样效果佳

What are the characteristics of trademark translation and how to translate it?

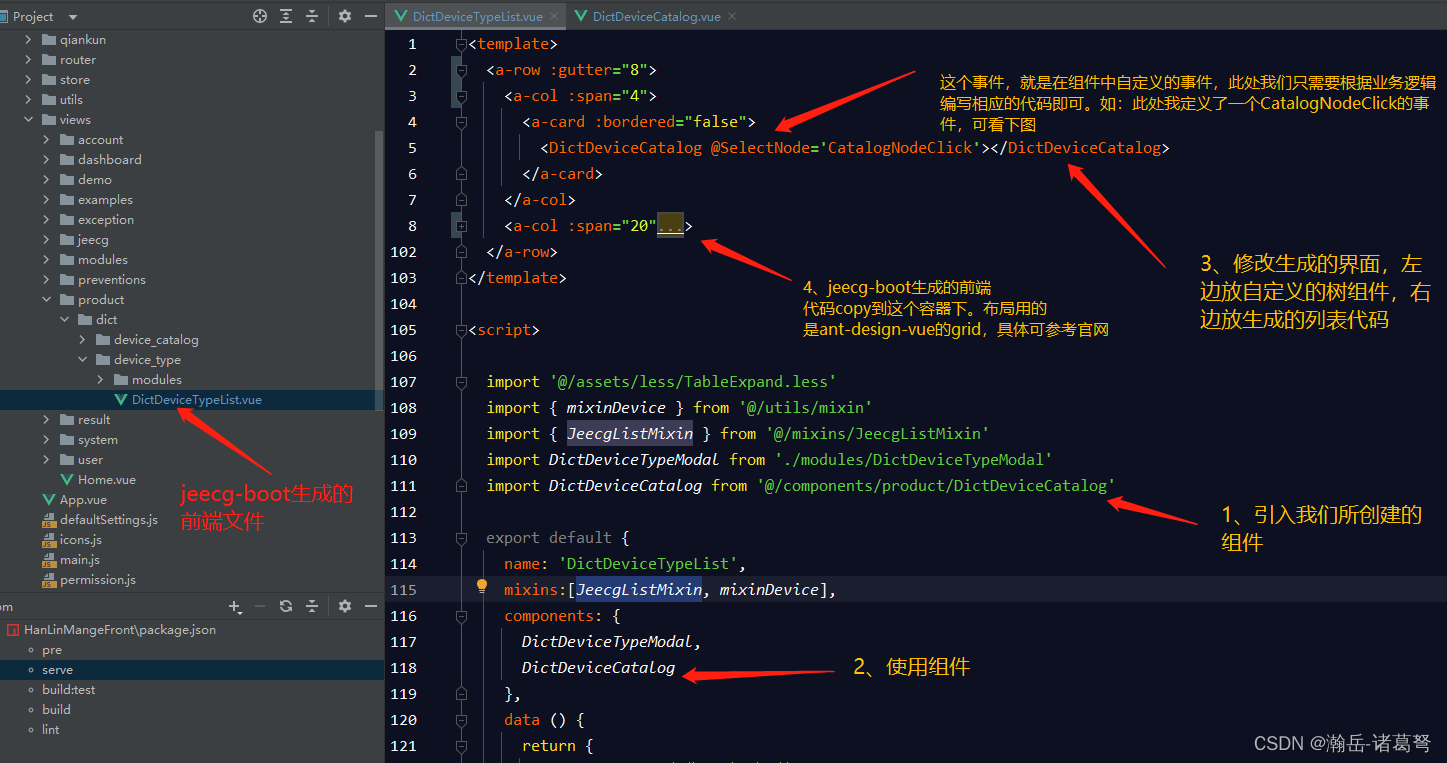

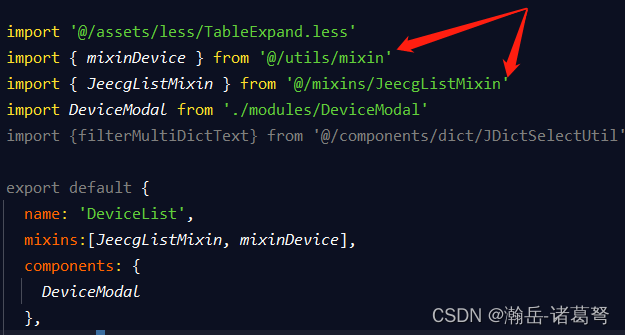

在JEECG-boot代码生成的基础上修改list页面(结合自定义的组件)

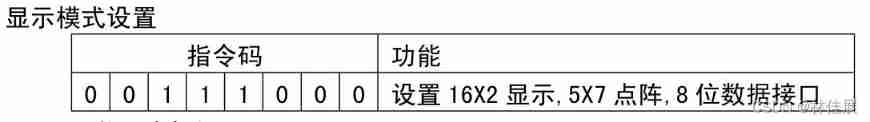

Lecture 8: 1602 LCD (Guo Tianxiang)

Mise en œuvre d’une fonction complexe d’ajout, de suppression et de modification basée sur jeecg - boot

随机推荐

Cobalt strike feature modification

Engineering organisms containing artificial metalloenzymes perform unnatural biosynthesis

模拟卷Leetcode【普通】1061. 按字典序排列最小的等效字符串

mysql按照首字母排序

MySQL is sorted alphabetically

keil MDK中删除添加到watch1中的变量

sourceInsight中文乱码

Simulation volume leetcode [general] 1219 Golden Miner

A 27-year-old without a diploma, wants to work hard on self-study programming, and has the opportunity to become a programmer?

Simulation volume leetcode [general] 1314 Matrix area and

如何做好金融文献翻译?

How to do a good job in financial literature translation?

查询字段个数

Biomedical localization translation services

LeetCode 1200. Minimum absolute difference

Py06 字典 映射 字典嵌套 键不存在测试 键排序

模拟卷Leetcode【普通】1249. 移除无效的括号

How much is it to translate Chinese into English for one minute?

Distributed system basic (V) protocol (I)

My daily learning records / learning methods