
PyTorch Basics

2022-07-06 11:28:00 【Sweet potato】

Install PyTorch

pip install torch

1. What is PyTorch

PyTorch is a Python-based scientific computing package with a NumPy-like interface. It serves its users in two ways:

- As a replacement for NumPy that gives users access to the computing power of GPUs

- As a deep learning platform that offers maximum flexibility and speed

2. Basic elements

- Tensor: similar to NumPy's ndarray data structure. The biggest difference is that a Tensor can use GPU acceleration.

2.1 Creating matrices

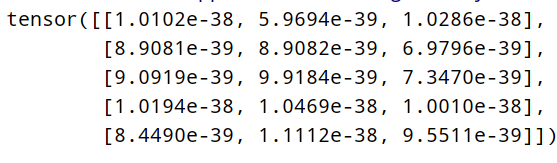

- Create an uninitialized matrix

import torch

x = torch.empty(5, 3)

print(x)

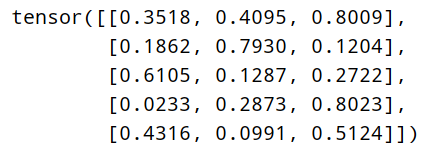

- Create a randomly initialized matrix

torch.rand draws values uniformly from the interval [0, 1). For values that follow the standard Gaussian distribution, use torch.randn instead.

import torch

x = torch.rand(5, 3)

print(x)

Note: compare the initialized and uninitialized matrices. An uninitialized matrix holds no defined values when it is declared. Whatever happens to be in the memory allocated to it shows up as its contents, so the data is essentially meaningless.
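The difference between these constructors can be checked directly. The following is a minimal sketch, assuming only that torch is installed; the variable names are illustrative and not from the original post.

```python
import torch

# torch.empty allocates memory without writing to it, so its values are arbitrary.
uninit = torch.empty(5, 3)

# torch.rand samples uniformly from [0, 1).
uniform = torch.rand(5, 3)

# torch.randn samples from a standard normal distribution (mean 0, variance 1).
normal = torch.randn(5, 3)

print(uninit.shape, uniform.shape, normal.shape)

# Every uniform sample lies in [0, 1); normal samples may be negative or exceed 1.
in_range = bool((uniform >= 0).all()) and bool((uniform < 1).all())
print(in_range)
```

Only the shape of the uninitialized tensor is predictable; its contents should always be overwritten before use.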

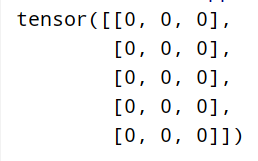

- Create an all-zero matrix with element type long

import torch

x = torch.zeros(5, 3, dtype=torch.long)

print(x)

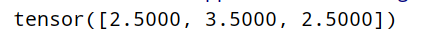

- Create tensors from data

import torch

x = torch.tensor([2.5, 3.5, 2.5])

print(x)

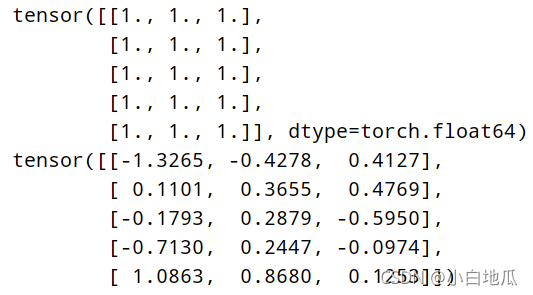

- Create a new tensor of the same size from an existing tensor

x = torch.zeros(5, 3, dtype=torch.long)

# Use the new_ones method to get a tensor filled with ones

x = x.new_ones(5, 3, dtype=torch.double)

print(x)

# Use randn_like to get a new tensor with the same size as x, filled with random values

y = torch.randn_like(x, dtype=torch.float)

print(y)

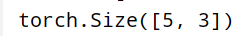

- Get the size of the tensor

x.size()

Note: the return value, torch.Size, is a tuple and supports all tuple operations.
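Because the size is a tuple, it can be unpacked and indexed like any other tuple. A short sketch (the names rows and cols are illustrative):

```python
import torch

x = torch.zeros(5, 3)

# torch.Size is a subclass of tuple, so unpacking and indexing both work.
rows, cols = x.size()
print(rows, cols)

print(x.size()[0])
print(isinstance(x.size(), tuple))

# x.shape is an attribute that returns the same torch.Size object type.
print(x.shape == x.size())
```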

3. Basic operations

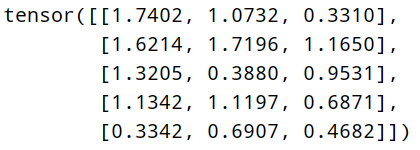

- Addition operation

result = torch.empty(5, 3)

x = torch.rand(5, 3)

y = torch.rand(5, 3)

print(x + y) # Method 1: the + operator

print(torch.add(x, y)) # Method 2: torch.add

torch.add(x, y, out=result) # Method 2, variant: write the sum into a preallocated tensor

print(result)

y.add_(x) # Method 3: in-place addition, which modifies y

print(y)

All of these approaches give the same result.
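The equivalence can be verified with torch.equal rather than by reading printed output. This is a minimal sketch; y is cloned before the in-place call so that the original y stays available for comparison, which is a choice made for this example only.

```python
import torch

x = torch.rand(5, 3)
y = torch.rand(5, 3)

expected = x + y                 # operator form
via_function = torch.add(x, y)   # function form

result = torch.empty(5, 3)
torch.add(x, y, out=result)      # writes the sum into a preallocated tensor

y_inplace = y.clone()            # clone so that y itself is left untouched
y_inplace.add_(x)                # trailing underscore: modifies the tensor in place

print(torch.equal(expected, via_function))
print(torch.equal(expected, result))
print(torch.equal(expected, y_inplace))
```

Methods whose names end in an underscore, such as add_, overwrite the tensor they are called on.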

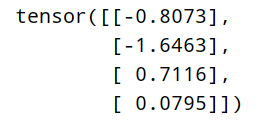

- Slicing a tensor

x = torch.randn(4, 4)

print(x[:, :1])
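Slicing follows NumPy conventions. The sketch below uses torch.arange instead of random values so the shapes and contents are easy to inspect; that substitution is an assumption made for readability.

```python
import torch

x = torch.arange(16).view(4, 4)

# A slice keeps the dimension: all rows, first column, shape (4, 1).
first_col = x[:, :1]

# An integer index drops the dimension: shape (4,).
flat_col = x[:, 0]

print(first_col.shape, flat_col.shape)
print(flat_col.tolist())
```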

- Change the shape of the tensor

x = torch.randn(4, 4)

# Tensor.view() requires that the total number of elements stays unchanged

y = x.view(16)

# -1 means that dimension is inferred from the remaining ones

z = x.view(-1, 8)

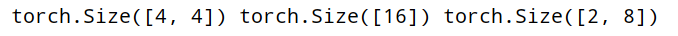

print(x.size(), y.size(), z.size())

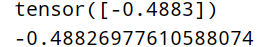
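Two properties of view are worth checking: the result shares storage with the original tensor, and a shape with a different element count is rejected. A minimal sketch under those assumptions:

```python
import torch

x = torch.randn(4, 4)
y = x.view(16)
z = x.view(-1, 8)     # -1 is inferred as 16 / 8 = 2

print(y.size(), z.size())

# view does not copy data: writing through y is visible in x.
y[0] = 100.0
print(x[0, 0].item())

# A shape whose element count differs from 16 raises RuntimeError.
view_failed = False
try:
    x.view(5, 3)
except RuntimeError:
    view_failed = True
print(view_failed)
```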

- If a tensor contains only one element, .item() returns that value as a Python number

x = torch.randn(1)

print(x)

print(x.item())
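The following sketch shows the returned type and what happens when the tensor has more than one element. The exception class has differed between PyTorch releases, so both RuntimeError and ValueError are caught here as a precaution.

```python
import torch

x = torch.tensor([3.5])
value = x.item()
print(type(value), value)

# .item() only works on single-element tensors.
item_failed = False
try:
    torch.ones(2).item()
except (RuntimeError, ValueError):
    item_failed = True
print(item_failed)
```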

4. Converting between Torch Tensors and NumPy arrays

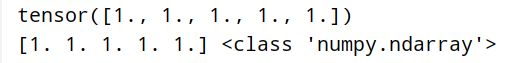

- A Torch Tensor on the CPU and the NumPy array converted from it share the same underlying memory, so changing one also changes the other

a = torch.ones(5)

print(a)

b = a.numpy()

print(b, type(b))

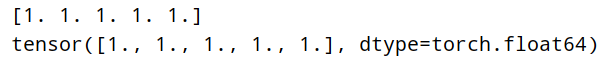

import numpy

a = numpy.ones(5)

b = torch.from_numpy(a)

print(a)

print(b)
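The shared memory can be observed by updating one object in place and reading the other. A minimal sketch, assuming CPU tensors and that numpy is installed alongside torch:

```python
import numpy as np
import torch

a = torch.ones(5)
b = a.numpy()          # b shares memory with the CPU tensor a
a.add_(1)              # in-place update on the tensor
print(b)               # the NumPy array reflects the change

c = np.ones(5)
d = torch.from_numpy(c)
np.add(c, 1, out=c)    # in-place update on the array
print(d)               # the tensor reflects the change
```

Note that an out-of-place operation such as a = a + 1 creates a new tensor and breaks the link with b.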

5. Moving tensors between GPU and CPU

x = torch.randn(1)

# If a GPU and CUDA are available on the machine

if torch.cuda.is_available():

    # Define a device object; here it is set to CUDA, i.e. the GPU

    device = torch.device("cuda")

    # Create a Tensor directly on the GPU

    y = torch.ones_like(x, device=device)

    # Move the tensor x from the CPU to the GPU

    x = x.to(device)

    # x and y are both on the GPU, so they can be added

    z = x + y

    # The tensor z is now on the GPU

    print(z)

    # z can also be moved back to the CPU, changing its element type at the same time

    print(z.to("cpu", torch.double))
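A common variation is to pick the device once and fall back to the CPU when CUDA is unavailable, so the same code runs on either kind of machine. This is a sketch of that pattern, not something the original post prescribes:

```python
import torch

# Fall back to the CPU when no CUDA device is available.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.randn(1).to(device)
y = torch.ones_like(x)          # ones_like inherits the device and dtype of x
z = x + y                       # both operands are on the same device

# Move the result to the CPU and convert it to double in one call.
result = z.to("cpu", torch.double)
print(z.device, result.device, result.dtype)
```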

边栏推荐

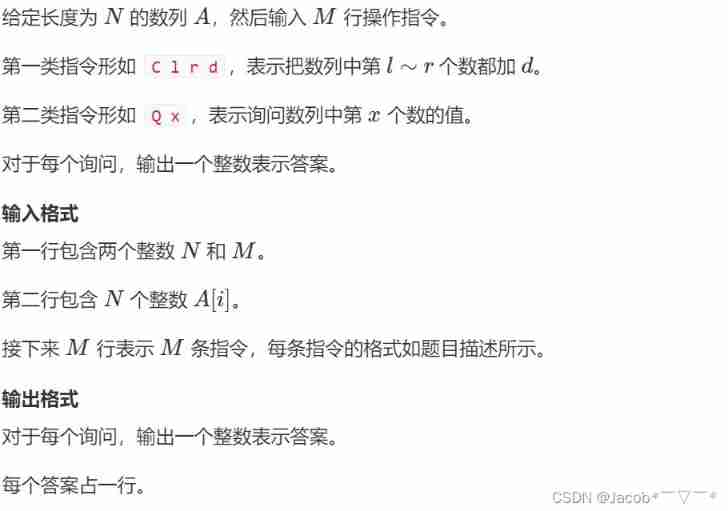

- 数数字游戏

- Use dapr to shorten software development cycle and improve production efficiency

- How to set up voice recognition on the computer with shortcut keys

- QT creator runs the Valgrind tool on external applications

- MySQL and C language connection (vs2019 version)

- ES6 let 和 const 命令

- Dotnet replaces asp Net core's underlying communication is the IPC Library of named pipes

- About string immutability

- When using lambda to pass parameters in a loop, the parameters are always the same value

- [AGC009D]Uninity

猜你喜欢

vs2019 使用向导生成一个MFC应用程序

AcWing 242. A simple integer problem (tree array + difference)

When you open the browser, you will also open mango TV, Tiktok and other websites outside the home page

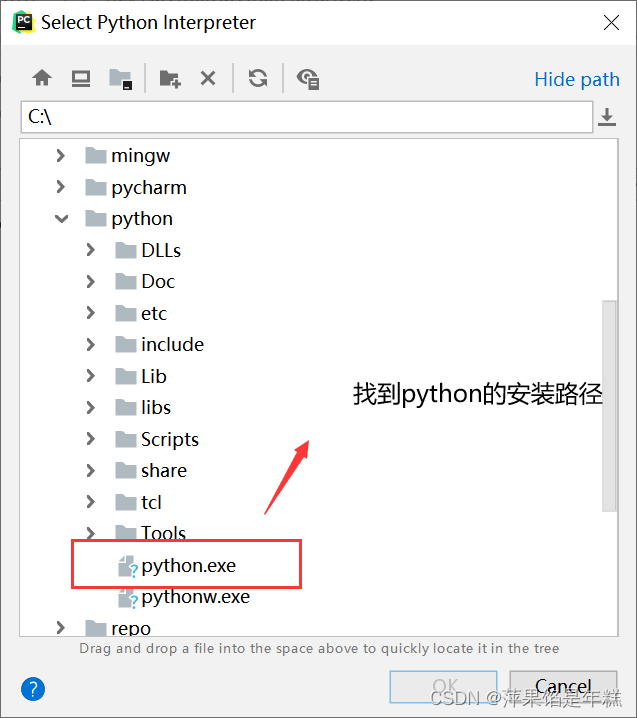

PyCharm中无法调用numpy,报错ModuleNotFoundError: No module named ‘numpy‘

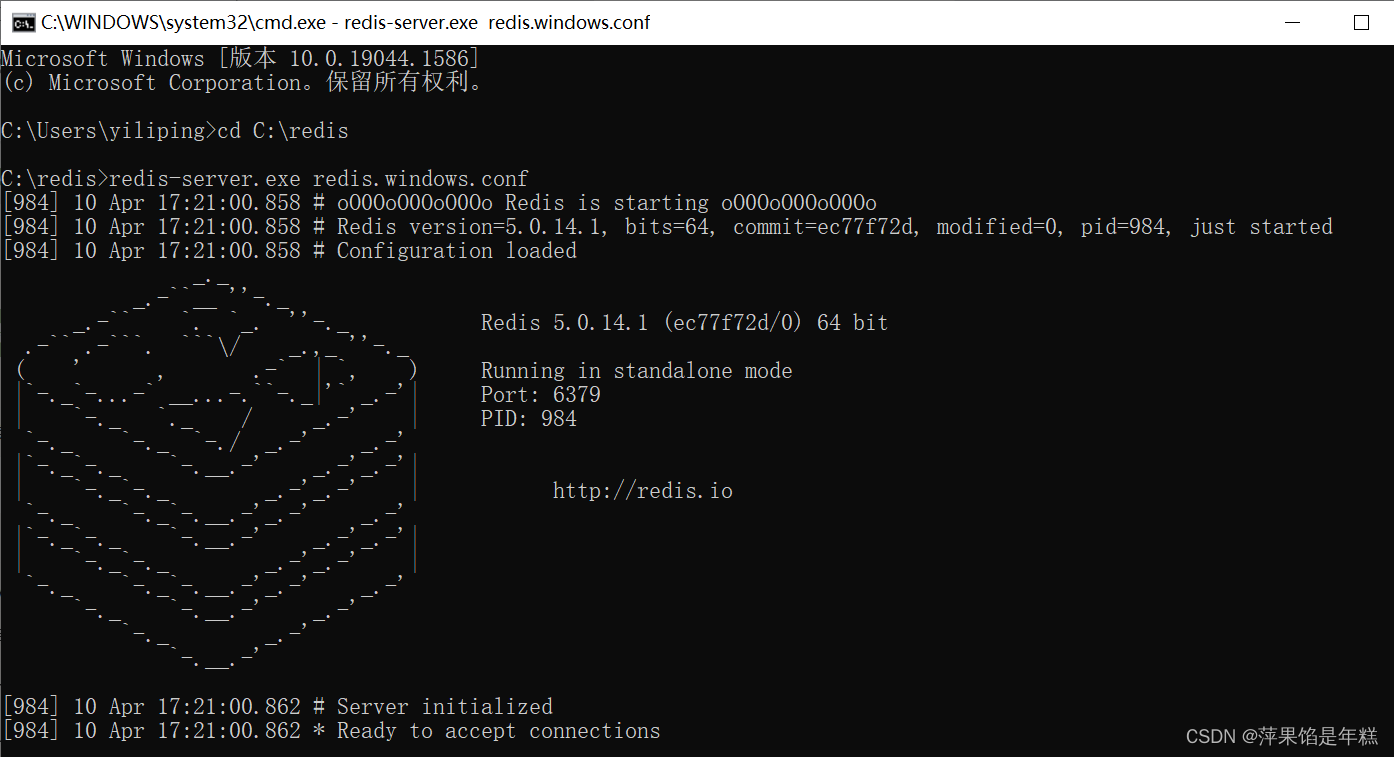

Install mongdb tutorial and redis tutorial under Windows

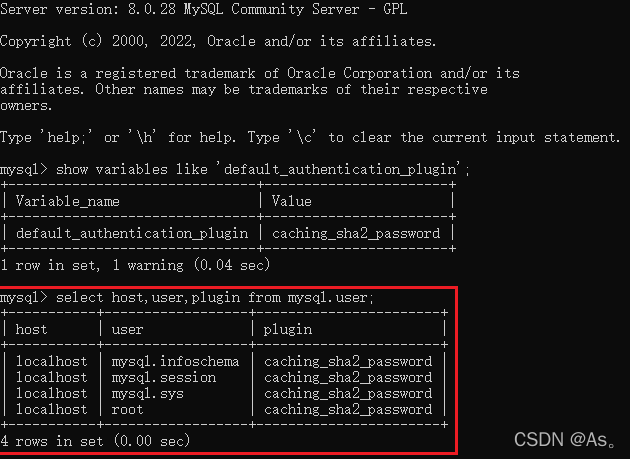

Error connecting to MySQL database: 2059 - authentication plugin 'caching_ sha2_ The solution of 'password'

QT creator create button

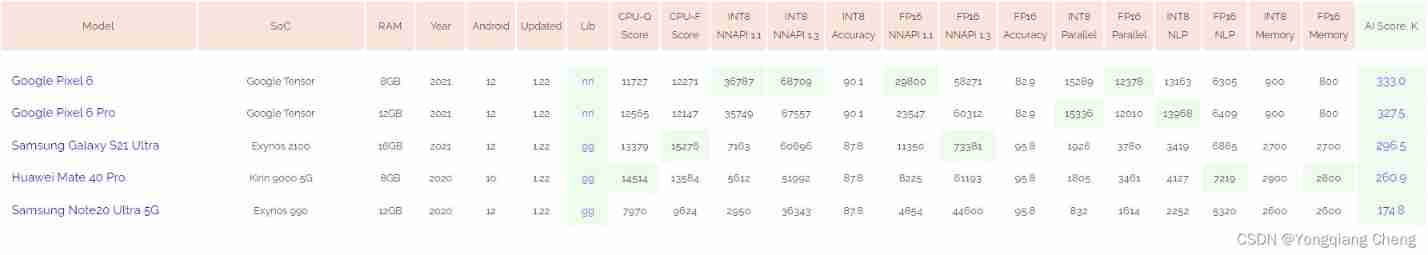

AI benchmark V5 ranking

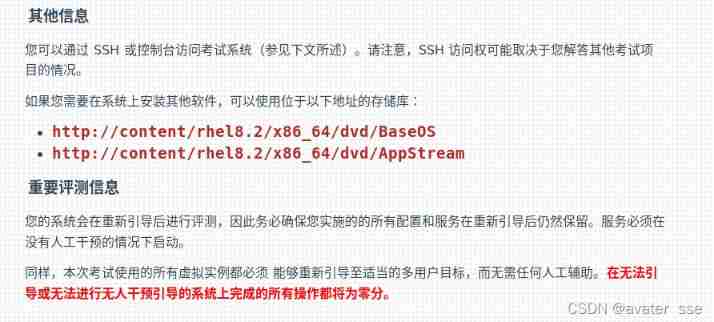

Rhcsa certification exam exercise (configured on the first host)

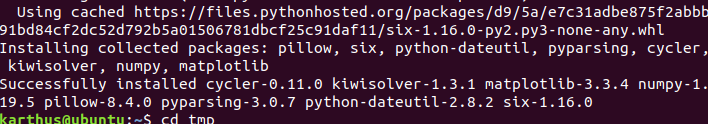

Solve the problem of installing failed building wheel for pilot

随机推荐

Integration test practice (1) theoretical basis

Number game

软件测试-面试题分享

引入了junit为什么还是用不了@Test注解

Deoldify project problem - omp:error 15:initializing libiomp5md dll,but found libiomp5md. dll already initialized.

2019腾讯暑期实习生正式笔试

Introduction to the easy copy module

QT creator runs the Valgrind tool on external applications

Error reporting solution - io UnsupportedOperation: can‘t do nonzero end-relative seeks

[Blue Bridge Cup 2017 preliminary] buns make up

Project practice - background employee information management (add, delete, modify, check, login and exit)

AcWing 1298.曹冲养猪 题解

AcWing 1294. Cherry Blossom explanation

yarn安装与使用

基于apache-jena的知识问答

neo4j安装教程

Why can't I use the @test annotation after introducing JUnit

L2-007 家庭房产 (25 分)

QT creator shape

Nanny level problem setting tutorial