Industrial Deployment II: Reproducing the PicoDet Network Structure on YOLOv5-Lite

2022-07-08 02:20:00 【pogg_】

[GiantPandaCV Introduction] This post covers only the network structure of PicoDet. To some extent, PicoDet has refreshed the industry's state of the art (SOTA) for lightweight mobile-side models, and that is what interests me most about it. This article migrates the PicoDet network structure to the YOLOv5 platform. Because the migrated model is anchor-based, its performance may differ somewhat from the native model. The native model's performance metrics are shown below.

1. Introduction to PicoDet

The PicoDet paper was released in November, and I reproduced the model structure shortly afterwards. However, I had no GPU, so I never got around to testing how well the reproduced model performs. I still have no GPU to train it, so I am releasing the code first. I had some free time this weekend, so I dug out the earlier code, cleaned it up, and will walk through the reproduction approach here:

1.1 ESNet

ESNet, short for Enhanced ShuffleNet, evolved from Megvii's ShuffleNetV2 (see the figure below).

For stride=2, ESNet differs from ShuffleNetV2 in two ways. First, it adds a 3x3 depthwise separable conv where ShuffleNetV2 performs its channel shuffle, after the two branches are concatenated. Second, it adds an SE module to one of the branches.

For stride=1, which is arguably ESNet's biggest change, the branch containing depthwise separable conv + pointwise conv becomes ghost conv + SE module + pointwise conv. This needs some care: if the channel counts are even slightly misaligned, the concat and the channel shuffle will fail.
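To illustrate that channel bookkeeping, the sketch below tracks only the (C, H, W) shape through both block types. `es_block_out_shape` is a hypothetical helper and is not part of the reproduction. It assumes the branch layout described in this section: every branch emits `oup // 2` channels, and the stride-2 branches use a 3x3 depthwise conv with padding 1.

```python
def es_block_out_shape(c_in, c_out, h, w, stride):
    """(C, H, W) after one ES block, following ES_Bottleneck's channel bookkeeping."""
    half = c_out // 2  # branch_features: each branch emits c_out // 2 channels
    if stride == 1:
        # x.chunk(2, dim=1) feeds c_in // 2 channels into branch3, which is built for `half`
        if c_in % 2 != 0 or c_in // 2 != half:
            raise ValueError(f"stride=1: {c_in} input channels cannot feed a {half}-channel branch3")
        # cat(x1, branch3(x2)) keeps the spatial size; the channel total is even for the shuffle
        return (c_in // 2 + half, h, w)
    if stride == 2:
        # cat(branch1, branch2) yields 2 * half channels, but branch4 is built for c_out
        if 2 * half != c_out:
            raise ValueError(f"stride=2: concat gives {2 * half} channels, branch4 expects {c_out}")
        # 3x3 depthwise conv, stride 2, padding 1: out = (n + 2 - 3) // 2 + 1
        return (c_out, (h - 1) // 2 + 1, (w - 1) // 2 + 1)
    raise ValueError("only stride 1 and 2 are implemented")


print(es_block_out_shape(116, 232, 40, 40, 2))  # (232, 20, 20)
print(es_block_out_shape(232, 232, 20, 20, 1))  # (232, 20, 20)
```

In other words, a stride=1 block must keep its channel count unchanged. For example, `es_block_out_shape(232, 116, 20, 20, 1)` raises instead of returning a shape.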

Let's go through it branch by branch and module by module:

First is the SE attention module. It follows the MobileNet series and uses hard_sigmoid as its activation function:

Reproduction code:

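import torch.nn as nn


class ES_SEModule(nn.Module):
    def __init__(self, channel, reduction=4):
        super().__init__()
        # squeeze: global average pooling to a 1x1 map per channel
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        # excitation: two 1x1 convs, reducing by `reduction` and then restoring
        self.conv1 = nn.Conv2d(
            in_channels=channel,
            out_channels=channel // reduction,
            kernel_size=1,
            stride=1,
            padding=0)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(
            in_channels=channel // reduction,
            out_channels=channel,
            kernel_size=1,
            stride=1,
            padding=0)
        # hard sigmoid gate, as in the MobileNet series
        self.hardsigmoid = nn.Hardsigmoid()

    def forward(self, x):
        w = self.hardsigmoid(self.conv2(self.relu(self.conv1(self.avg_pool(x)))))
        return x * w  # reweight each input channel by its gate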
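For intuition, here is a dependency-free sketch of what the SE module computes. The feature map is stored as plain Python lists. The two 1x1 convs are written as matrix-vector products, because a 1x1 conv on a 1x1 pooled map reduces to exactly that. `hard_sigmoid` and `se_gate` are illustrative names and are not part of the reproduction; the gate uses the same curve as `torch.nn.Hardsigmoid`.

```python
def hard_sigmoid(x):
    # same piecewise-linear curve as torch.nn.Hardsigmoid: relu6(x + 3) / 6
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


def se_gate(fmap, w1, b1, w2, b2):
    """SE on a C-channel map given as C flat lists of H*W values.

    w1/b1 stand in for conv1 (C -> C // reduction), w2/b2 for conv2 (C // reduction -> C).
    """
    # squeeze: global average pooling, one scalar per channel
    z = [sum(ch) / len(ch) for ch in fmap]
    # conv1 on a 1x1 map is a matrix-vector product, followed by ReLU
    s = [max(0.0, sum(w * v for w, v in zip(row, z)) + b) for row, b in zip(w1, b1)]
    # conv2 back to C values, squashed into [0, 1] by the hard sigmoid
    g = [hard_sigmoid(sum(w * v for w, v in zip(row, s)) + b) for row, b in zip(w2, b2)]
    # excite: identity * x, i.e. scale every value of channel c by g[c]
    return [[v * gc for v in ch] for ch, gc in zip(fmap, g)]


# 2 channels, reduction 2 -> one hidden unit; biases chosen so the gates are exactly 1 and 0
fmap = [[1.0, 1.0], [2.0, 2.0]]
print(se_gate(fmap, w1=[[0.0, 0.0]], b1=[0.0], w2=[[0.0], [0.0]], b2=[3.0, -3.0]))
# [[1.0, 1.0], [0.0, 0.0]]
```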

As for the Ghost block: compared with a traditional convolution, GhostNet works in two steps. It first uses an ordinary convolution to produce a feature map with fewer channels. It then applies a cheap operation to generate additional feature maps from it. Finally, it concatenates the feature maps into the new output.

Code:

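import torch
import torch.nn as nn

from models.common import Conv  # YOLOv5's Conv: Conv2d (bias=False) + BatchNorm2d + SiLU


class GhostConv(nn.Module):
    # Based on GhostConv in yolov5's models/common.py
    def __init__(self, c1, c2, k=3, s=1, g=1, act=True):  # ch_in, ch_out, kernel, stride, groups
        super().__init__()
        c_ = c2 // 2  # hidden channels
        # step 1: ordinary 1x1 conv producing only half of the output channels
        self.cv1 = Conv(c1, c_, 1, s, None, g, act)
        # step 2: cheap depthwise k x k conv (groups=c_) generating the other half;
        # stride 1 so both halves keep the same spatial size for the concat
        self.cv2 = Conv(c_, c_, k, 1, None, c_, act)

    def forward(self, x):
        y = self.cv1(x)
        return torch.cat((y, self.cv2(y)), dim=1)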
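To put a number on the "cheap operation", the sketch below counts conv weights for the GhostConv variant above, which is a 1x1 primary conv plus a depthwise k×k conv. It compares them against an ordinary 3x3 conv with the same input and output channels, which is an assumed baseline. The helper names are hypothetical and BatchNorm parameters are ignored. The convs are assumed bias-free, as in YOLOv5's `Conv`.

```python
def conv_weights(c_in, c_out, k, groups=1):
    # weight count of a bias-free Conv2d; BatchNorm parameters are ignored
    return (c_in // groups) * c_out * k * k


def ghost_conv_weights(c1, c2, k=3):
    # mirrors the GhostConv above: 1x1 primary conv, then a depthwise k x k "cheap operation"
    c_ = c2 // 2
    primary = conv_weights(c1, c_, 1)
    cheap = conv_weights(c_, c_, k, groups=c_)
    return primary + cheap


standard = conv_weights(116, 116, 3)     # ordinary 3x3 conv, 116 -> 116 channels
ghost = ghost_conv_weights(116, 116, 3)  # GhostConv with the same in/out channels
print(standard, ghost, round(standard / ghost, 1))  # 121104 7250 16.7
```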

For stride=2, branch1 is an ordinary depthwise separable conv:

The other branch, branch2, consists of a pointwise conv, an SE module and a depthwise separable conv:

branch4 applies a depthwise separable convolution to the concatenation of branch1 and branch2:

Combining these modules gives the complete stride=2 block:

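def forward(self, x):
    # branch1 and branch2 both see the full input and both downsample by 2
    x1 = torch.cat((self.branch1(x), self.branch2(x)), dim=1)
    # branch4: depthwise separable conv that fuses the concatenated channels
    out = self.branch4(x1)
    return out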

branch3 combines the most modules: ghost conv + identity + SE module + pointwise conv:

Combining these modules gives the complete stride=1 block:

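def forward(self, x):
    # channel split: cut the input tensor into two halves along the channel dim
    x1, x2 = x.chunk(2, dim=1)
    # x1 passes through unchanged; x2 goes through branch3; then concat the two
    x3 = torch.cat((x1, self.branch3(x2)), dim=1)
    # channel shuffle reassembles the tensor so the two halves exchange information
    out = channel_shuffle(x3, 2)
    return out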
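`channel_shuffle` itself is not shown in this excerpt. This sketch assumes it is the usual ShuffleNetV2 version, which reshapes to `(N, groups, C // groups, H, W)`, swaps the two channel axes and flattens back. It reproduces only the index arithmetic in plain Python, so you can see which input channel lands in each output slot. `channel_shuffle_order` is a hypothetical helper.

```python
def channel_shuffle_order(num_channels, groups):
    """Input-channel index that lands in each output slot after channel_shuffle(x, groups)."""
    if num_channels % groups != 0:
        raise ValueError("num_channels must be divisible by groups")
    per_group = num_channels // groups
    # view as (groups, per_group), transpose to (per_group, groups), flatten row by row
    return [g * per_group + i for i in range(per_group) for g in range(groups)]


# stride=1 with 8 channels: slots 0-3 hold x1 (identity), slots 4-7 hold branch3(x2)
print(channel_shuffle_order(8, 2))  # [0, 4, 1, 5, 2, 6, 3, 7]
```

After the shuffle, identity channels and branch3 channels alternate. As a result, the next block's `chunk(2, dim=1)` sends a mix of both halves into its own branch3.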

We can draw a diagram to trace how the tensors flow, taking the most complex case, stride=1, as an example:

The reproduction code is as follows:

# build ES_Bottleneck
# -------------------------------------------------------------------------
import torch
import torch.nn as nn

# Conv (Conv2d + BatchNorm2d + SiLU) is YOLOv5's Conv; this listing assumes it sits
# in models/common.py next to that definition.


def channel_shuffle(x, groups):
    # ShuffleNetV2 channel shuffle: interleave the channels of the `groups` groups
    batchsize, num_channels, height, width = x.size()
    channels_per_group = num_channels // groups
    x = x.view(batchsize, groups, channels_per_group, height, width)
    x = torch.transpose(x, 1, 2).contiguous()
    return x.view(batchsize, -1, height, width)


class GhostConv(nn.Module):
    # Ghost Convolution https://github.com/huawei-noah/ghostnet
    def __init__(self, c1, c2, k=3, s=1, g=1, act=True):  # ch_in, ch_out, kernel, stride, groups
        super().__init__()
        c_ = c2 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, 1, s, None, g, act)
        self.cv2 = Conv(c_, c_, k, 1, None, c_, act)  # stride 1: both halves must match for the concat

    def forward(self, x):
        y = self.cv1(x)
        return torch.cat((y, self.cv2(y)), dim=1)


class ES_SEModule(nn.Module):
    def __init__(self, channel, reduction=4):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv1 = nn.Conv2d(
            in_channels=channel,
            out_channels=channel // reduction,
            kernel_size=1,
            stride=1,
            padding=0)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(
            in_channels=channel // reduction,
            out_channels=channel,
            kernel_size=1,
            stride=1,
            padding=0)
        self.hardsigmoid = nn.Hardsigmoid()

    def forward(self, x):
        identity = x
        x = self.avg_pool(x)     # squeeze to (N, C, 1, 1)
        x = self.conv1(x)
        x = self.relu(x)
        x = self.conv2(x)
        x = self.hardsigmoid(x)  # per-channel gates in [0, 1]
        out = identity * x       # excite: reweight the input channels
        return out


class ES_Bottleneck(nn.Module):
    def __init__(self, inp, oup, stride):
        super(ES_Bottleneck, self).__init__()
        # forward() only handles stride 1 and 2; any other value would leave `out` undefined
        if stride not in (1, 2):
            raise ValueError('illegal stride value')
        self.stride = stride
        branch_features = oup // 2
        # assert (self.stride != 1) or (inp == branch_features << 1)

        if self.stride > 1:
            # Branch 1, used by the stride=2 ES_Bottleneck
            self.branch1 = nn.Sequential(
                self.depthwise_conv(inp, inp, kernel_size=3, stride=self.stride, padding=1),
                nn.BatchNorm2d(inp),
                nn.Conv2d(inp, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
                nn.BatchNorm2d(branch_features),
                nn.Hardswish(inplace=True),
            )

        self.branch2 = nn.Sequential(
            # Branch 2, used by the stride=2 ES_Bottleneck
            nn.Conv2d(inp if (self.stride > 1) else branch_features,
                      branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.ReLU(inplace=True),
            self.depthwise_conv(branch_features, branch_features, kernel_size=3, stride=self.stride, padding=1),
            nn.BatchNorm2d(branch_features),
            ES_SEModule(branch_features),
            nn.Conv2d(branch_features, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.Hardswish(inplace=True),
        )

        self.branch3 = nn.Sequential(
            # Branch 3, used by the stride=1 ES_Bottleneck
            GhostConv(branch_features, branch_features, 3, 1),
            ES_SEModule(branch_features),
            nn.Conv2d(branch_features, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.Hardswish(inplace=True),
        )

        self.branch4 = nn.Sequential(
            # Branch 4: the final depthwise separable conv of the stride=2 ES_Bottleneck
            self.depthwise_conv(oup, oup, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(oup),
            nn.Conv2d(oup, oup, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(oup),
            nn.Hardswish(inplace=True),
        )

    @staticmethod
    def depthwise_conv(i, o, kernel_size=3, stride=1, padding=0, bias=False):
        return nn.Conv2d(i, o, kernel_size, stride, padding, bias=bias, groups=i)

    @staticmethod
    def conv1x1(i, o, kernel_size=1, stride=1, padding=0, bias=False):
        return nn.Conv2d(i, o, kernel_size, stride, padding, bias=bias)

    def forward(self, x):
        if self.stride == 1:
            x1, x2 = x.chunk(2, dim=1)
            x3 = torch.cat((x1, self.branch3(x2)), dim=1)
            out = channel_shuffle(x3, 2)
        elif self.stride == 2:
            x1 = torch.cat((self.branch1(x), self.branch2(x)), dim=1)
            out = self.branch4(x1)
        return out

# ES_Bottleneck end
# -------------------------------------------------------------------------

1.2 CSP-PAN

The neck is relatively simple: its main ops are depthwise separable conv + CSPNet + PANet.
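Depthwise separable convs carry most of the neck, and a quick multiply-accumulate (MAC) count shows why. The sketch assumes that a DWConvblock is roughly a k×k depthwise conv followed by a 1x1 pointwise conv; its exact layers are not shown here. It uses the shapes of layer 18 in the YAML at 640×640 input, where a 5×5, stride-2 block maps 116 channels at 80×80 to 116 channels at 40×40. Bias and BatchNorm are ignored, and the helper names are hypothetical.

```python
def conv_macs(c_in, c_out, k, h_out, w_out, groups=1):
    # multiply-accumulates of a k x k conv producing an h_out x w_out output map
    return h_out * w_out * (c_in // groups) * c_out * k * k


def dw_separable_macs(c_in, c_out, k, h_out, w_out):
    depthwise = conv_macs(c_in, c_in, k, h_out, w_out, groups=c_in)  # one k x k filter per channel
    pointwise = conv_macs(c_in, c_out, 1, h_out, w_out)              # 1x1 conv mixes channels
    return depthwise + pointwise


standard = conv_macs(116, 116, 5, 40, 40)
separable = dw_separable_macs(116, 116, 5, 40, 40)
print(standard, separable, round(standard / separable, 1))  # 538240000 26169600 20.6
```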

The YAML structure of CSP-PAN:

# CSP-PAN
head:
  [ [ -1, 1, Conv, [ 232, 1, 1 ] ], # 7
    [ [ -1, 6 ], 1, Concat, [ 1 ] ], # cat backbone P5
    [ -1, 1, BottleneckCSP, [ 232, False ] ], # 9 (P5/32)
    [ -1, 1, Conv, [ 116, 1, 1 ] ], # 10
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 4 ], 1, Concat, [ 1 ] ], # cat backbone P4
    [ -1, 1, BottleneckCSP, [ 116, False ] ], # 13
    [ -1, 1, Conv, [ 116, 1, 1 ] ], # 14
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 2 ], 1, Concat, [ 1 ] ], # cat backbone P3
    [ -1, 1, BottleneckCSP, [ 116, False ] ], # 17 (P3/8-small)
    [ -1, 1, DWConvblock, [ 116, 5, 2 ] ], # 18
    [ [ -1, 14 ], 1, Concat, [ 1 ] ], # cat head P4
    [ -1, 1, BottleneckCSP, [ 116, False ] ], # 20 (P4/16-medium)
    [ -1, 1, DWConvblock, [ 232, 5, 2 ] ],
    [ [ -1, 10 ], 1, Concat, [ 1 ] ], # cat head P5
    [ -1, 1, BottleneckCSP, [ 232, False ] ], # 23 (P5/32-large)
    [ [ -1, 7 ], 1, Concat, [ 1 ] ], # cat head P5 (layer 7)
    [ -1, 1, DWConvblock, [ 464, 5, 2 ] ], # 25 (P6/64-xlarge)
    [ [ 17, 20, 23, 25 ], 1, Detect, [ nc, anchors ] ], # Detect(P3, P4, P5, P6)
  ]
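With four inputs to `Detect`, the head predicts on one more grid than a standard three-scale YOLOv5 model. The sketch below works out the grid sizes at 640×640 input. It assumes strides of 8/16/32/64 for P3 to P6 and YOLOv5's usual 3 anchors per level; the anchor settings are not shown in this excerpt. Because the largest stride is now 64, the input side length should be a multiple of 64, and 640 = 10 × 64 qualifies. `detect_grids` is a hypothetical helper.

```python
def detect_grids(img_size, strides=(8, 16, 32, 64)):
    """Grid side length for each Detect input (P3/8, P4/16, P5/32, P6/64)."""
    if img_size % max(strides) != 0:
        raise ValueError(f"img_size should be a multiple of {max(strides)}")
    return [img_size // s for s in strides]


ANCHORS_PER_LEVEL = 3  # assumption: YOLOv5's default anchor layout
grids = detect_grids(640)
cells = sum(g * g for g in grids)
print(grids, cells, cells * ANCHORS_PER_LEVEL)  # [80, 40, 20, 10] 8500 25500
```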

The overall model structure, with params and FLOPs at 640×640 input:

The code will be updated in my personal repo. Stars (and free use) are welcome!

https://github.com/ppogg/YOLOv5-Lite

边栏推荐

- Clickhouse principle analysis and application practice "reading notes (8)

- #797div3 A---C

- Unity 射线与碰撞范围检测【踩坑记录】

- Yolo fast+dnn+flask realizes streaming and streaming on mobile terminals and displays them on the web

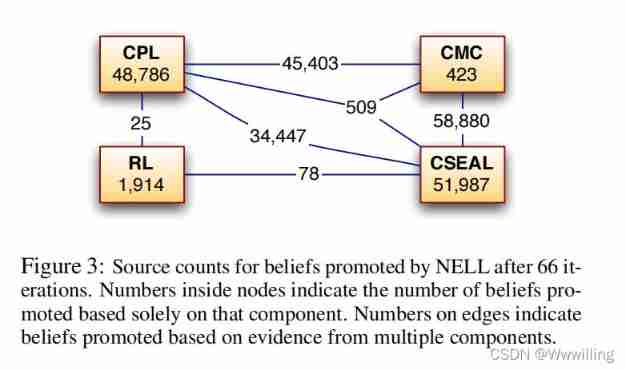

- Random walk reasoning and learning in large-scale knowledge base

- Mqtt x newsletter 2022-06 | v1.8.0 release, new mqtt CLI and mqtt websocket tools

- Spock单元测试框架介绍及在美团优选的实践_第三章(void无返回值方法mock方式)

- Deep understanding of cross entropy loss function

- 实现前缀树

- COMSOL --- construction of micro resistance beam model --- final temperature distribution and deformation --- addition of materials

猜你喜欢

Xmeter newsletter 2022-06 enterprise v3.2.3 release, error log and test report chart optimization

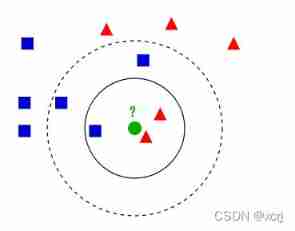

Ml self realization /knn/ classification / weightlessness

From starfish OS' continued deflationary consumption of SFO, the value of SFO in the long run

Opengl/webgl shader development getting started guide

XXL job of distributed timed tasks

Monthly observation of internet medical field in May 2022

牛熊周期与加密的未来如何演变?看看红杉资本怎么说

Towards an endless language learning framework

Neural network and deep learning-5-perceptron-pytorch

Learn face detection from scratch: retinaface (including magic modified ghostnet+mbv2)

随机推荐

How to use diffusion models for interpolation—— Principle analysis and code practice

Force buckle 5_ 876. Intermediate node of linked list

Keras深度学习实战——基于Inception v3实现性别分类

Ml backward propagation

很多小夥伴不太了解ORM框架的底層原理,這不,冰河帶你10分鐘手擼一個極簡版ORM框架(趕快收藏吧)

入侵检测——Uniscan

The circuit is shown in the figure, r1=2k Ω, r2=2k Ω, r3=4k Ω, rf=4k Ω. Find the expression of the relationship between output and input.

Mqtt x newsletter 2022-06 | v1.8.0 release, new mqtt CLI and mqtt websocket tools

In depth understanding of the se module of the attention mechanism in CV

【每日一题】648. 单词替换

XXL job of distributed timed tasks

Spock单元测试框架介绍及在美团优选的实践_第四章(Exception异常处理mock方式)

UFS Power Management 介绍

VIM use

非分区表转换成分区表以及注意事项

leetcode 866. Prime Palindrome | 866. 回文素数

Unity 射线与碰撞范围检测【踩坑记录】

Analysis ideas after discovering that the on duty equipment is attacked

Opengl/webgl shader development getting started guide

电路如图,R1=2kΩ,R2=2kΩ,R3=4kΩ,Rf=4kΩ。求输出与输入关系表达式。