Interview questions

How do you ensure that messages are not consumed more than once? In other words, how do you guarantee idempotent message consumption?

What the interviewer is looking for

This is a very common question, and the two phrasings above are often asked together. Whenever you consume messages, you have to consider: can a message be consumed more than once? Can that be avoided? If not, how do you keep the system from misbehaving when it happens? This is a fundamental problem in the MQ field. What the interviewer is really asking is how you guarantee idempotence when using a message queue, which is something your architecture has to account for.

Analyzing the question

When answering, don't freeze up at the words "duplicate messages". Start by explaining how duplicate consumption can happen in the first place.

First, RabbitMQ, RocketMQ, and Kafka can all deliver the same message more than once, and that is normal. Preventing duplicate processing is usually not something the MQ guarantees; it is up to us, the developers of the consuming application. Let's take Kafka as an example of how duplicate consumption happens.

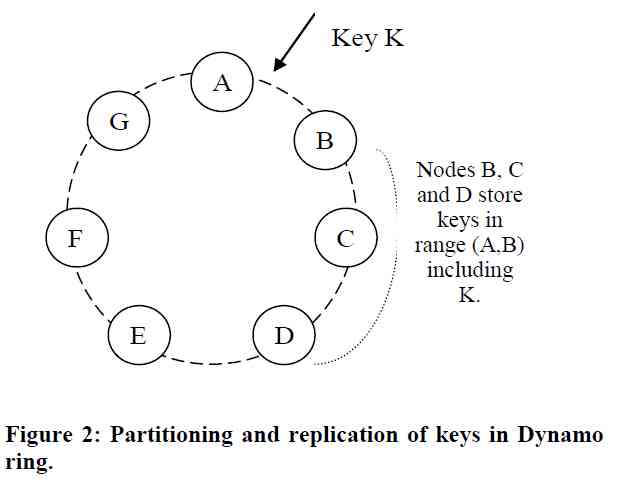

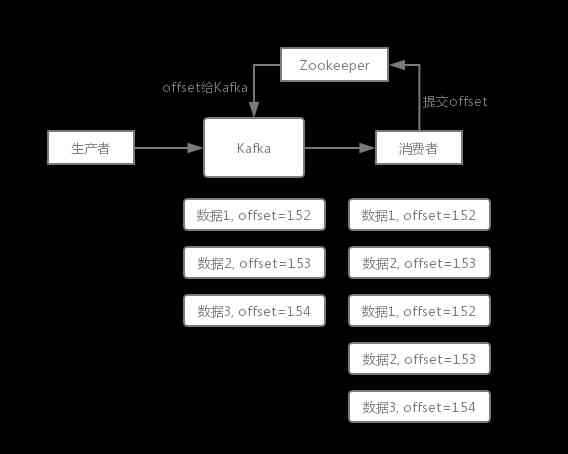

Kafka has the concept of an offset. Every message written to Kafka is assigned an offset, which is its sequence number. After a consumer processes messages, it periodically commits the offset it has consumed up to, which amounts to saying: "I've already consumed these. If I restart, let me continue from the last committed offset."

But accidents happen. One situation we ran into often in production is restarting the system. It depends on how you restart: if you are in a hurry and simply kill the process and start it again, the consumer may have processed some messages without having committed their offsets yet. After the restart, those messages will be consumed a second time.

Here's an example.

Suppose data 1, 2, and 3 are written to Kafka in that order. Kafka assigns each of them an offset, its sequence number; say the offsets are 152, 153, and 154. The consumer reads from Kafka in the same order. Now suppose the consumer has just processed the message at offset 153 and is about to commit its offset to ZooKeeper when the consumer process is restarted. The offsets for data 1 and 2 were never committed, so Kafka does not know you have already consumed offset 153. After the restart, the consumer effectively tells Kafka: "Give me everything after the last position I committed." Because the earlier offsets were never committed successfully, data 1 and 2 are delivered again, and if the consumer does not deduplicate them, they are consumed twice.
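To make the scenario concrete, here is a toy simulation. It does not use the real Kafka client API: `run_consumer`, the dictionary standing in for a partition, and the list standing in for the database are all hypothetical. Following Kafka's convention, the committed value is the offset of the next message to read.

```python
def run_consumer(partition, committed, sink, crash_after=None):
    """Process messages from `committed` onward and return the new committed offset.

    `partition` maps offset -> payload, `sink` records side effects (e.g. DB writes).
    If `crash_after` is set, the process is 'killed' right after handling that
    offset, before it can commit, so the stored offset stays unchanged.
    """
    position = committed
    for offset in sorted(o for o in partition if o >= committed):
        sink.append(partition[offset])  # do the work first...
        position = offset + 1
        if offset == crash_after:
            return committed            # ...but die before committing
    return position                     # commit: next offset to read


partition = {152: "data 1", 153: "data 2", 154: "data 3"}
db = []
committed = 152

committed = run_consumer(partition, committed, db, crash_after=153)  # killed after 153
committed = run_consumer(partition, committed, db)                   # restart from 152
print(db)         # ['data 1', 'data 2', 'data 1', 'data 2', 'data 3']
print(committed)  # 155
```

Because processing happens before the commit, a crash in between can only cause redelivery, never loss. That is at-least-once delivery, which is why the consumer itself has to tolerate duplicates.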

Note: newer versions of Kafka have moved offset storage from ZooKeeper to the Kafka brokers, which store committed offsets in the internal topic __consumer_offsets.

If the consumer's job is to write one row to the database for each message, data 1 and 2 may end up inserted twice, and the data will be wrong.

Duplicate consumption itself is not the scary part. What is scary is not having thought about duplicate consumption and how to guarantee idempotence.

Here's an example. Suppose your system consumes a message and inserts a row into the database. If the same message is delivered twice and you insert two rows, the data is wrong. But if, on the second delivery, you check whether you have already consumed that message and simply discard it if so, only one row is kept and the data stays correct.

If the same message is consumed twice but the database still contains only one row, the system is idempotent.
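The check-before-insert idea can be sketched with Python's built-in `sqlite3` module. The `orders` table, the `msg_id` column, and the message format are hypothetical; they assume each message carries an ID that identifies it.

```python
import sqlite3


def make_db():
    # Hypothetical schema: one row per consumed message, tagged with its message ID.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (msg_id TEXT, amount INTEGER)")
    return conn


def consume_naive(conn, msg):
    # Blindly inserts: every redelivery adds another row.
    conn.execute("INSERT INTO orders VALUES (?, ?)", (msg["id"], msg["amount"]))


def consume_checked(conn, msg):
    # Look the message up first; if a row already exists, drop the duplicate.
    seen = conn.execute(
        "SELECT 1 FROM orders WHERE msg_id = ?", (msg["id"],)
    ).fetchone()
    if seen is None:
        conn.execute("INSERT INTO orders VALUES (?, ?)", (msg["id"], msg["amount"]))


def row_count(conn):
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


msg = {"id": "order-1001", "amount": 50}
naive_db, checked_db = make_db(), make_db()
for _ in range(2):  # the same message is delivered twice
    consume_naive(naive_db, msg)
    consume_checked(checked_db, msg)
print(row_count(naive_db), row_count(checked_db))  # 2 1
```

Note that the separate "check" and "insert" steps are not atomic: two consumers handling the same message at the same moment could both see no row and both insert. A unique constraint in the database closes that gap.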

Idempotence, in plain terms: the same piece of data or the same request may be processed many times, and you must make sure the resulting data is the same as if it had been processed once, with nothing going wrong.
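A small illustration of the definition, using a hypothetical account balance: an operation that sets a value is idempotent, while one that adds to a value is not. The function names and message fields are assumptions made for this sketch.

```python
def apply_set(state, msg):
    # "Set balance to X": replaying the message leaves the same result.
    state["balance"] = msg["new_balance"]


def apply_increment(state, msg):
    # "Add X to balance": every replay changes the result.
    state["balance"] += msg["delta"]


set_msg = {"new_balance": 150}
inc_msg = {"delta": 50}
a = {"balance": 100}
b = {"balance": 100}
for _ in range(3):  # each message is delivered three times
    apply_set(a, set_msg)
    apply_increment(b, inc_msg)
print(a["balance"], b["balance"])  # 150 250
```

Replaying `set_msg` any number of times gives 150, the same as applying it once; replaying `inc_msg` keeps drifting, so a consumer of that kind of message needs explicit deduplication.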

That brings us to the second question: how do you guarantee idempotent consumption from a message queue?

In practice, it depends on your business logic. Here are a few ideas:

- If you are writing data to a database, first look it up by primary key. If the row already exists, don't insert it again; just update it.

- If you are writing to Redis, there is no problem: every write is a SET, which is naturally idempotent.

- If neither of the above applies, it gets a bit more complicated. Have the producer attach a globally unique ID to every message, something like an order ID. When consuming, look that ID up in, for example, Redis to see whether it has been consumed before. If it hasn't, process the message and then write the ID to Redis. If it has, skip the message, so the same message is never processed twice.

- Rely on a unique key in the database so that duplicate data cannot be inserted twice. Because of the unique-key constraint, inserting a duplicate only raises an error, and no dirty data ends up in the database.
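The unique-key approach from the last idea can be sketched with `sqlite3` as a stand-in for a production database. The `orders` table and `msg_id` key are hypothetical; with MySQL or PostgreSQL you would catch your driver's duplicate-key error instead of `sqlite3.IntegrityError`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The unique key (here the primary key on msg_id) is what rejects duplicates.
conn.execute("CREATE TABLE orders (msg_id TEXT PRIMARY KEY, amount INTEGER)")


def consume(msg):
    """Insert the row; return False if the database rejected it as a duplicate."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "INSERT INTO orders (msg_id, amount) VALUES (?, ?)",
                (msg["id"], msg["amount"]),
            )
        return True
    except sqlite3.IntegrityError:
        # Duplicate delivery: the constraint fired and nothing was written.
        return False


msg = {"id": "order-1001", "amount": 50}
print(consume(msg), consume(msg))  # True False
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
```

Unlike a separate "check, then insert", the constraint is enforced by the database itself, so it still holds when several consumers process the same message concurrently. For the Redis-based variant, Redis's `SET` command with the `NX` option similarly records an ID only if it is not already present, in a single atomic step.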

Of course, how to make MQ consumption idempotent ultimately has to be decided in light of your specific business.
