当前位置:网站首页>Realsense d435 d435i d415 depth camera obtains RGB map, left and right infrared camera map, depth map and IMU data under ROS

Realsense d435 d435i d415 depth camera obtains RGB map, left and right infrared camera map, depth map and IMU data under ROS

2022-07-04 10:13:00 【Wildcraner】

First of all, you should make sure that your camera driver is installed , Environment configuration can be seen in another article of mine :https://blog.csdn.net/weixin_46195203/article/details/119205851

** First step :** Create a new file informationread( The recommended file name here is :informationread, In this way, there is no need to modify it separately Makelist The information in the )

** The second step :** stay informationread Create two new files under the folder, which are build and src One more CMakeLists.txt file

** The third step :** stay CMakeLists.txt Copy the code in the file

cmake_minimum_required(VERSION 3.1.0)

project(alldata)

set( CMAKE_CXX_COMPILER "g++" )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++11 -O3" )

# Enable C++11

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_CXX_STANDARD_REQUIRED TRUE)

FIND_PACKAGE(OpenCV REQUIRED)

FIND_PACKAGE(realsense2 REQUIRED)

include_directories( ${

OpenCV_INCLUDE_DIRS} )

include_directories( ${

realsense2_INCLUDE_DIRS})

add_executable(informationread src/GetAlldata.cpp)

target_link_libraries(informationread ${

OpenCV_LIBS} ${

realsense2_LIBRARY})

** Step four :** Copy the following code to a .cpp file Put it in src In file ( It is suggested that cpp The file named :GetAlldata.cpp, In this way, there is no need to modify it separately Makelist The information in the )

#include <librealsense2/rs.hpp>

// include OpenCV header file

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

#define width 640

#define height 480

#define fps 30

int main(int argc, char** argv) try

{

// judge whether devices is exist or not

rs2::context ctx;

auto list = ctx.query_devices(); // Get a snapshot of currently connected devices

if (list.size() == 0)

throw std::runtime_error("No device detected. Is it plugged in?");

rs2::device dev = list.front();

//

rs2::frameset frames;

//Contruct a pipeline which abstracts the device

rs2::pipeline pipe;// Establish a communication pipeline //https://baike.so.com/doc/1559953-1649001.html pipeline The explanation of

//Create a configuration for configuring the pipeline with a non default profile

rs2::config cfg;

//Add desired streams to configuration

cfg.enable_stream(RS2_STREAM_COLOR, width, height, RS2_FORMAT_BGR8, fps);// Color images

cfg.enable_stream(RS2_STREAM_DEPTH, width, height, RS2_FORMAT_Z16,fps);// Depth image

cfg.enable_stream(RS2_STREAM_INFRARED, 1, width, height, RS2_FORMAT_Y8, fps);// Infrared image 1

cfg.enable_stream(RS2_STREAM_INFRARED, 2, width, height, RS2_FORMAT_Y8, fps);// Infrared image 2

// If you want to get imu Data uncomment the following two lines of code , And modify it imu Assignment

//cfg.enable_stream(RS2_STREAM_ACCEL, RS2_FORMAT_MOTION_XYZ32F);// gyroscope

//cfg.enable_stream(RS2_STREAM_GYRO, RS2_FORMAT_MOTION_XYZ32F);// gyroscope

int imu = 0;// If you want to get imu data , This is set to 1

// get depth scale

// float depth_scale = get_depth_scale(profile.get_device());

// start stream

pipe.start(cfg);// Instructs the pipeline to start the flow with the requested configuration

while(1)

{

frames = pipe.wait_for_frames();// Wait for all configured flow generation frameworks

// Align to depth

rs2::align align_to_depth(RS2_STREAM_DEPTH);

frames = align_to_depth.process(frames);

// Get imu data

if(imu){

if (rs2::motion_frame accel_frame = frames.first_or_default(RS2_STREAM_ACCEL))

{

rs2_vector accel_sample = accel_frame.get_motion_data();

std::cout << "Accel:" << accel_sample.x << ", " << accel_sample.y << ", " << accel_sample.z << std::endl;

}

if (rs2::motion_frame gyro_frame = frames.first_or_default(RS2_STREAM_GYRO))

{

rs2_vector gyro_sample = gyro_frame.get_motion_data();

std::cout << "Gyro:" << gyro_sample.x << ", " << gyro_sample.y << ", " << gyro_sample.z << std::endl;

}

}

//Get each frame

rs2::frame color_frame = frames.get_color_frame();

rs2::frame depth_frame = frames.get_depth_frame();

rs2::video_frame ir_frame_left = frames.get_infrared_frame(1);

rs2::video_frame ir_frame_right = frames.get_infrared_frame(2);

// Creating OpenCV Matrix from a color image

Mat color(Size(width, height), CV_8UC3, (void*)color_frame.get_data(), Mat::AUTO_STEP);

Mat pic_right(Size(width,height), CV_8UC1, (void*)ir_frame_right.get_data());

Mat pic_left(Size(width,height), CV_8UC1, (void*)ir_frame_left.get_data());

Mat pic_depth(Size(width,height), CV_16U, (void*)depth_frame.get_data(), Mat::AUTO_STEP);

// Display in a GUI

namedWindow("Display Image", WINDOW_AUTOSIZE );

imshow("Display Image", color);

waitKey(1);

imshow("Display depth", pic_depth*15);

waitKey(1);

imshow("Display pic_left", pic_left);

waitKey(1);

imshow("Display pic_right",pic_right);

waitKey(1);

}

return 0;

}

// error

catch (const rs2::error & e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception& e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

The default in the code is D415 perhaps D435 This one doesn't imu The camera has no output imu Information

If you want imu Information Follow the instructions to make minor modifications .

** Step four :** stay build Open the command window in the file and enter

cmake ..

make -j4

./informationread

Now you can see the real-time running results

边栏推荐

- 浅谈Multus CNI

- Pcl:: fromrosmsg alarm failed to find match for field 'intensity'

- Service developers publish services based on EDAs

- QTreeView+自定义Model实现示例

- If the uniapp is less than 1000, it will be displayed according to the original number. If the number exceeds 1000, it will be converted into 10w+ 1.3k+ display

- C # use smtpclient The sendasync method fails to send mail, and always returns canceled

- Hands on deep learning (32) -- fully connected convolutional neural network FCN

- Hands on deep learning (41) -- Deep recurrent neural network (deep RNN)

- C language pointer classic interview question - the first bullet

- uniapp 处理过去时间对比现在时间的时间差 如刚刚、几分钟前,几小时前,几个月前

猜你喜欢

Application of safety monitoring in zhizhilu Denggan reservoir area

Servlet基本原理与常见API方法的应用

【Day1】 deep-learning-basics

Hands on deep learning (38) -- realize RNN from scratch

Hands on deep learning (41) -- Deep recurrent neural network (deep RNN)

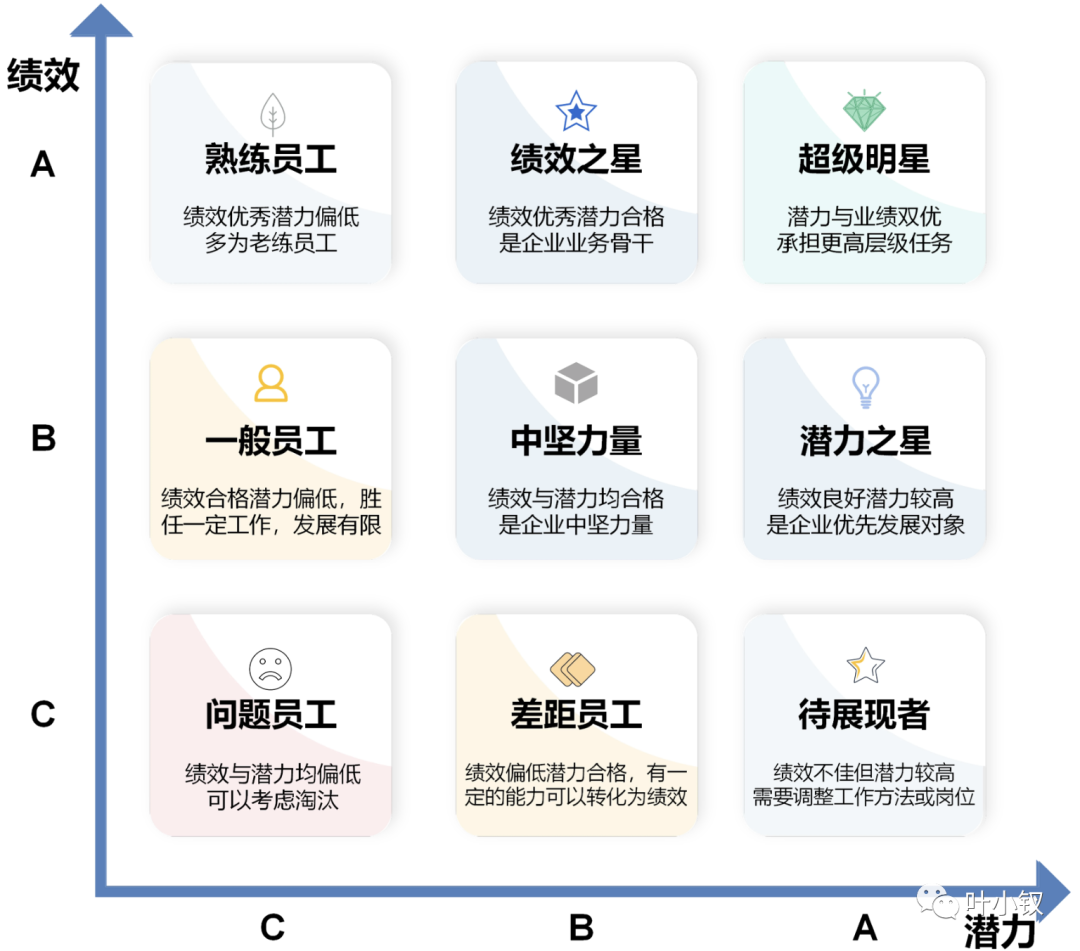

技术管理进阶——如何设计并跟进不同层级同学的绩效

Reasons and solutions for the 8-hour difference in mongodb data date display

法向量点云旋转

For programmers, if it hurts the most...

What are the advantages of automation?

随机推荐

Kotlin 集合操作汇总

Hands on deep learning (44) -- seq2seq principle and Implementation

Lavel document reading notes -how to use @auth and @guest directives in lavel

C # use gdi+ to add text to the picture and make the text adaptive to the rectangular area

技术管理进阶——如何设计并跟进不同层级同学的绩效

Use C to extract all text in PDF files (support.Net core)

lolcat

Exercise 7-4 find out the elements that are not common to two arrays (20 points)

C # use ffmpeg for audio transcoding

C # use smtpclient The sendasync method fails to send mail, and always returns canceled

Hands on deep learning (34) -- sequence model

入职中国平安三周年的一些总结

AUTOSAR from getting started to mastering 100 lectures (106) - SOA in domain controllers

Write a mobile date selector component by yourself

libmysqlclient.so.20: cannot open shared object file: No such file or directory

C language pointer classic interview question - the first bullet

Safety reinforcement learning based on linear function approximation safe RL with linear function approximation translation 2

自动化的优点有哪些?

Reprint: summation formula of proportional series and its derivation process

Check 15 developer tools of Alibaba