
Open3D learning notes 3 [sampling and voxelization]

2022-07-02 07:54:00 【Silent clouds】

Open3D learning notes: voxelization

I. Some background knowledge

1. Reading a ply file as a mesh

```python
import open3d as o3d

mesh = o3d.io.read_triangle_mesh("mode/Fantasy Dragon.ply")
mesh.compute_vertex_normals()  # vertex normals are needed for shaded rendering
```

2. Rotation matrix

A 3D model is transformed with two parameters, a rotation R and a translation T. In the view used here, the coordinate system is: z points up, y points to the right, and x points out of the screen toward the viewer. Coordinates are transformed with the transform method, which takes a 4×4 homogeneous matrix of the form [[R, T], [0, 1]]: R is the 3×3 rotation matrix, T is the 3×1 translation vector, and the last row is [0, 0, 0, 1].
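The matrix passed to transform can be assembled from R and T by hand. The NumPy sketch below is independent of Open3D, and the helper names make_transform and apply_transform are hypothetical. It builds [[R, T], [0, 1]] for a 90° rotation about z followed by a shift of 1 along x, then applies it to points in homogeneous coordinates, which amounts to computing R·p + T:

```python
import numpy as np

def make_transform(R, T):
    """Assemble the 4x4 homogeneous matrix [[R, T], [0, 1]]."""
    M = np.eye(4)
    M[:3, :3] = R   # 3x3 rotation block
    M[:3, 3] = T    # translation column
    return M

def apply_transform(M, points):
    """Apply a 4x4 transform to an (N, 3) array of points."""
    homo = np.hstack([points, np.ones((len(points), 1))])  # (N, 4) with w = 1
    return (homo @ M.T)[:, :3]                             # back to (N, 3)

# 90-degree rotation about the z axis, then a shift of +1 along x
theta = np.pi / 2
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
T = np.array([1.0, 0.0, 0.0])
M = make_transform(R, T)

pts = np.array([[1.0, 0.0, 0.0],
                [0.0, 1.0, 0.0]])
moved = apply_transform(M, pts)
print(np.round(moved, 6))
```

The point (1, 0, 0) is rotated onto (0, 1, 0) and then shifted to (1, 1, 0); (0, 1, 0) is rotated onto (-1, 0, 0) and shifted to the origin.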

Reading a ply file normally (as a point cloud):

```python
import open3d as o3d

pcd = o3d.io.read_point_cloud("mode/Fantasy Dragon.ply")
o3d.visualization.draw_geometries([pcd], width=1280, height=720)
```

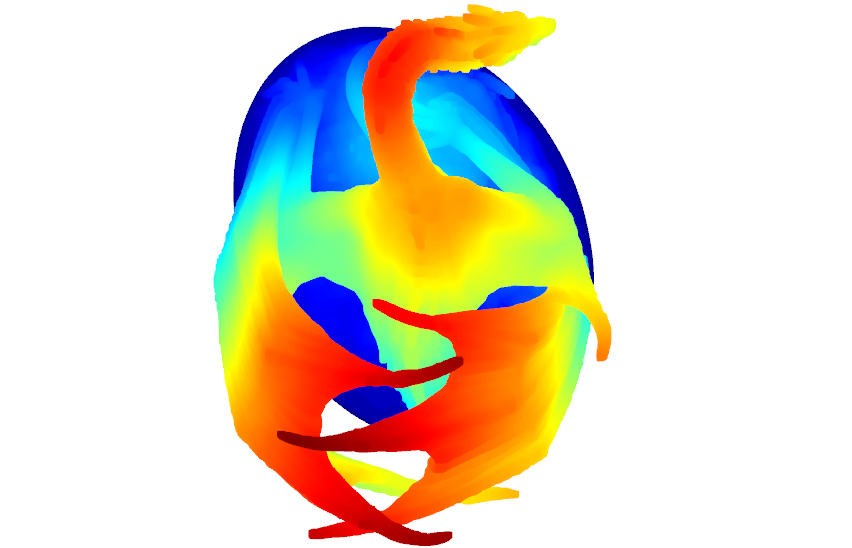

The display effect is as shown in the figure :

Use the transform function to lay the model horizontally with its head facing the screen. This means the original z axis becomes the y axis, the y axis becomes the x axis, and the x axis becomes the z axis, so the code is:

```python
import open3d as o3d

mesh = o3d.io.read_triangle_mesh("mode/Fantasy Dragon.ply")
mesh.compute_vertex_normals()

# Axis permutation with no translation: new x = old y, new y = old z, new z = old x
mesh.transform([[0, 1, 0, 0],
                [0, 0, 1, 0],
                [1, 0, 0, 0],
                [0, 0, 0, 1]])
o3d.visualization.draw_geometries([mesh], width=1280, height=720)
```

Result:
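The matrix above is a pure axis permutation with no translation. A quick NumPy check, independent of Open3D, confirms that its 3×3 block is a proper rotation (orthogonal with determinant +1, so the model is not mirrored) and that it maps old y to new x, old z to new y, and old x to new z:

```python
import numpy as np

# The 4x4 matrix passed to mesh.transform() in the example above
M = np.array([[0, 1, 0, 0],
              [0, 0, 1, 0],
              [1, 0, 0, 0],
              [0, 0, 0, 1]], dtype=float)
R = M[:3, :3]

# A proper rotation is orthogonal and has determinant +1 (no mirroring)
is_orthogonal = np.allclose(R @ R.T, np.eye(3))
det = np.linalg.det(R)

# Transform a point with distinct coordinates to see where each one lands
p = np.array([1.0, 2.0, 3.0, 1.0])   # (x, y, z, w = 1)
q = M @ p
print(q[:3])   # → [2. 3. 1.]  i.e. new (x, y, z) = old (y, z, x)
```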

II. Ways to convert to a point cloud

1. Converting the vertices to a NumPy array and rebuilding a point cloud

```python
import open3d as o3d
import numpy as np

mesh = o3d.io.read_triangle_mesh("mode/Fantasy Dragon.ply")
mesh.compute_vertex_normals()

# View the mesh vertices as an (N, 3) NumPy array
v_mesh = np.asarray(mesh.vertices)

# Build a new point cloud whose points are those vertices
pcd = o3d.geometry.PointCloud()
pcd.points = o3d.utility.Vector3dVector(v_mesh)
o3d.visualization.draw_geometries([pcd], width=1280, height=720)
```

This works well for ply files, but for triangle meshes such as STL files the converted result is less satisfactory:

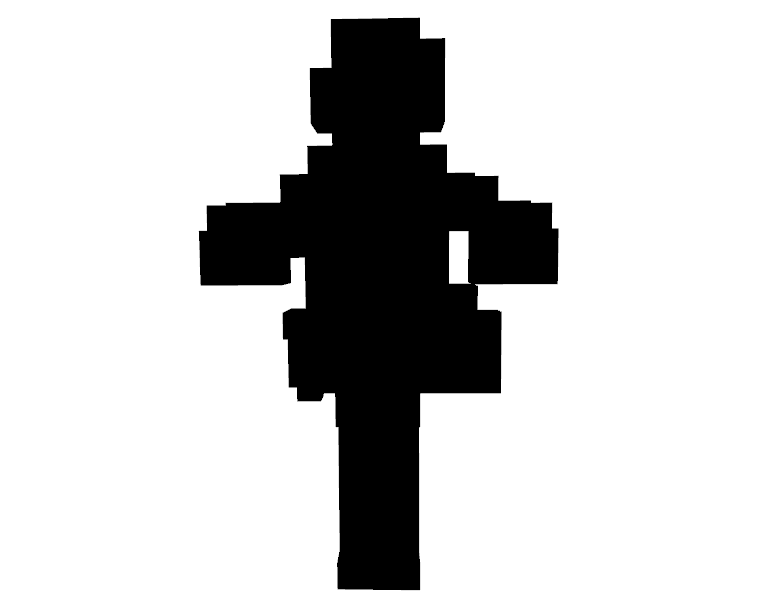
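A likely reason (an interpretation, not stated in the original notes) is that a vertex-only point cloud has exactly one point per vertex, so its density follows the tessellation rather than the surface area. STL models exported from CAD tools often cover flat regions with a few large triangles. A toy NumPy illustration with a hypothetical 10 × 10 square stored as two triangles:

```python
import numpy as np

# Hypothetical flat 10 x 10 square stored as two large triangles
vertices = np.array([[0.0, 0.0, 0.0],
                     [10.0, 0.0, 0.0],
                     [10.0, 10.0, 0.0],
                     [0.0, 10.0, 0.0]])
triangles = np.array([[0, 1, 2],
                      [0, 2, 3]])

def triangle_areas(v, t):
    """Area of each triangle: half the norm of the cross product of two edges."""
    a, b, c = v[t[:, 0]], v[t[:, 1]], v[t[:, 2]]
    return 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)

total_area = triangle_areas(vertices, triangles).sum()
# Turning mesh.vertices into points yields one point per vertex, whatever the area
points_per_unit_area = len(vertices) / total_area
print(len(vertices), total_area, points_per_unit_area)
```

Only 4 points describe 100 square units of surface, which is why sampling the surface, as in the next part, gives a denser and more even result.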

2. Sampling

Open3D provides a sampling method in which you choose the number of sample points, which simplifies the model.

```python
import open3d as o3d

mesh = o3d.io.read_triangle_mesh("mode/ganyu.STL")
mesh.compute_vertex_normals()

# Sample 10,000 points uniformly over the mesh surface
pcd = mesh.sample_points_uniformly(number_of_points=10000)
o3d.visualization.draw_geometries([pcd], width=1280, height=720)
```
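Uniform surface sampling is commonly done in two steps: pick a triangle with probability proportional to its area, then pick a uniform point inside it using barycentric coordinates (the square-root trick). The NumPy sketch below implements that standard technique on a hypothetical two-triangle square. It illustrates the idea behind sample_points_uniformly and is not Open3D's internal code; the helper name sample_uniform is hypothetical.

```python
import numpy as np

def sample_uniform(vertices, triangles, n, rng):
    """Sample n points uniformly over a triangle mesh surface (sketch)."""
    a = vertices[triangles[:, 0]]
    b = vertices[triangles[:, 1]]
    c = vertices[triangles[:, 2]]
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    # 1) choose a triangle with probability proportional to its area
    idx = rng.choice(len(triangles), size=n, p=areas / areas.sum())
    # 2) choose a uniform point inside it: sqrt(r1) avoids clustering at vertex a
    r1 = np.sqrt(rng.random(n))[:, None]
    r2 = rng.random(n)[:, None]
    return (1 - r1) * a[idx] + r1 * (1 - r2) * b[idx] + r1 * r2 * c[idx]

# Hypothetical 10 x 10 square made of two triangles
vertices = np.array([[0, 0, 0], [10, 0, 0], [10, 10, 0], [0, 10, 0]], dtype=float)
triangles = np.array([[0, 1, 2], [0, 2, 3]])
pts = sample_uniform(vertices, triangles, 10000, np.random.default_rng(0))
print(pts.shape)
```

Because both triangles have the same area, roughly half of the samples land in each, and the points cover the square evenly regardless of how coarse the triangulation is.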

III. Voxelization

Voxelization simplifies the model by turning it into a regular grid of cubes (voxels).

Converting a triangle mesh to a voxel grid

```python
import open3d as o3d
import numpy as np

print("Load an STL triangle mesh and voxelize it")
mesh = o3d.io.read_triangle_mesh("mode/ganyu.STL")
mesh.compute_vertex_normals()

# Scale about the center so the longest bounding-box side has length 1
mesh.scale(1 / np.max(mesh.get_max_bound() - mesh.get_min_bound()), center=mesh.get_center())

voxel_grid = o3d.geometry.VoxelGrid.create_from_triangle_mesh(mesh, voxel_size=0.05)
o3d.visualization.draw_geometries([voxel_grid], width=1280, height=720)
```
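The scale call normalizes the model so that its longest bounding-box side becomes 1, which turns voxel_size into a fraction of the model size: with voxel_size=0.05 there are about 1 / 0.05 = 20 voxels along the longest side. A NumPy sketch of that arithmetic on a hypothetical box-shaped model; using the centroid as the scaling center is an assumption, and it does not affect the extents.

```python
import numpy as np

def normalize_to_unit(points):
    """Scale points about their centroid so the longest bounding-box side is 1."""
    extent = points.max(axis=0) - points.min(axis=0)
    s = 1.0 / np.max(extent)
    center = points.mean(axis=0)           # assumption: centroid as the scaling center
    return (points - center) * s + center   # p' = s * (p - c) + c

# Hypothetical box-shaped model: the 8 corners of a 300 x 120 x 80 box
corners = np.array([[x, y, z] for x in (0, 300) for y in (0, 120) for z in (0, 80)], dtype=float)
unit = normalize_to_unit(corners)

extent = unit.max(axis=0) - unit.min(axis=0)   # about [1.0, 0.4, 0.267]
voxel_size = 0.05
print(np.round(extent / voxel_size, 2))        # voxels spanned along x, y, z
```

Halving voxel_size doubles the resolution along each axis and so roughly multiplies the number of voxels by eight for a solid region, or by four for a surface.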

Generating a voxel grid from a point cloud

```python
import open3d as o3d
import numpy as np

print("Load a ply point cloud and voxelize it")
pcd = o3d.io.read_point_cloud("mode/Fantasy Dragon.ply")
pcd.scale(1 / np.max(pcd.get_max_bound() - pcd.get_min_bound()), center=pcd.get_center())

# One random RGB color per point: the array needs exactly as many rows as there are points
pcd.colors = o3d.utility.Vector3dVector(np.random.uniform(0, 1, size=(len(pcd.points), 3)))

print("voxelization")
voxel_grid = o3d.geometry.VoxelGrid.create_from_point_cloud(pcd, voxel_size=0.05)
o3d.visualization.draw_geometries([voxel_grid], width=1280, height=720)
```
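Conceptually, a voxel grid built from a point cloud keeps one cell for every voxel that contains at least one point, colored from the points that fall into it. The NumPy sketch below computes occupied voxel indices as floor((p - origin) / voxel_size) and averages the colors per voxel. Assumptions: the origin is the point cloud's minimum corner and colors are averaged; Open3D's exact origin convention may differ, and the helper name occupied_voxels is hypothetical.

```python
import numpy as np

def occupied_voxels(points, voxel_size, colors=None):
    """Integer indices of occupied voxels, plus the mean color per voxel (sketch)."""
    origin = points.min(axis=0)   # assumption: grid anchored at the minimum corner
    idx = np.floor((points - origin) / voxel_size).astype(int)
    keys, inverse = np.unique(idx, axis=0, return_inverse=True)
    inverse = inverse.ravel()     # keep 1-D across NumPy versions
    if colors is None:
        return keys, None
    sums = np.zeros((len(keys), 3))
    np.add.at(sums, inverse, colors)                      # sum colors per voxel
    counts = np.bincount(inverse, minlength=len(keys))[:, None]
    return keys, sums / counts

pts = np.array([[0.00, 0.00, 0.00],
                [0.01, 0.02, 0.00],   # same voxel as the first point
                [0.07, 0.00, 0.00],   # next voxel along x
                [0.00, 0.00, 0.12]])  # two voxels up along z
cols = np.array([[1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0],
                 [0.0, 1.0, 0.0],
                 [1.0, 1.0, 1.0]])
keys, mean_cols = occupied_voxels(pts, 0.05, cols)
print(keys)
print(mean_cols)
```

Four points collapse into three voxels, and the voxel shared by the red and blue points gets their average color.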

IV. Vertex normal estimation

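```python
import open3d as o3d
import numpy as np

# Same point cloud and normalization as in the voxelization example
pcd = o3d.io.read_point_cloud("mode/Fantasy Dragon.ply")
pcd.scale(1 / np.max(pcd.get_max_bound() - pcd.get_min_bound()), center=pcd.get_center())

# Downsample with a 0.05 voxel, then estimate one normal per remaining point
voxel_down_pcd = pcd.voxel_down_sample(voxel_size=0.05)
voxel_down_pcd.estimate_normals(
    search_param=o3d.geometry.KDTreeSearchParamHybrid(radius=0.1, max_nn=30))
o3d.visualization.draw_geometries([voxel_down_pcd], point_show_normal=True, width=1280, height=720)
```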
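voxel_down_sample replaces all points inside each occupied voxel with a single averaged point. The NumPy sketch below reproduces that averaging idea for positions only. Assumptions: the grid is anchored at the coordinate origin (Open3D's grid origin may differ), the input is hypothetical random data in a unit cube, and the function name is a stand-in, not Open3D's API.

```python
import numpy as np

def voxel_down_sample(points, voxel_size):
    """Replace all points inside each voxel by their centroid (sketch)."""
    idx = np.floor(points / voxel_size).astype(np.int64)   # grid anchored at the origin
    _, inverse, counts = np.unique(idx, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.ravel()                               # keep 1-D across NumPy versions
    sums = np.zeros((len(counts), 3))
    np.add.at(sums, inverse, points)                        # sum positions per voxel
    return sums / counts[:, None]

rng = np.random.default_rng(0)
dense = rng.random((20000, 3))            # hypothetical points in a unit cube
sparse = voxel_down_sample(dense, 0.05)   # at most 20**3 = 8000 voxels
print(len(dense), "->", len(sparse))
```

The output can never have more points than there are occupied voxels, which is how voxel_size controls the density of the downsampled cloud.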

estimate_normals computes a normal for every point. For each point it finds the neighboring points and applies covariance analysis to find their principal axes; the normal is the axis along which the neighbors vary least.

The function takes a KDTreeSearchParamHybrid object as its search parameter, whose two key settings are the search radius and the maximum number of nearest neighbors. radius=0.1, max_nn=30 means searching within a radius of 0.1 in the model's units (10 cm if the units are meters) and considering at most 30 neighbors to save computation time.
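The covariance analysis boils down to: gather the neighbors, compute their 3×3 covariance matrix, and take the eigenvector with the smallest eigenvalue as the normal. The brute-force NumPy sketch below mimics the hybrid rule (at most max_nn neighbors, all within radius) without a KD-tree, on hypothetical noisy points from the plane z = 0. It illustrates the technique and is not Open3D's implementation; in particular it does not orient the normals.

```python
import numpy as np

def estimate_normals(points, radius=0.1, max_nn=30):
    """PCA normals with a hybrid search: neighbors within radius, at most max_nn."""
    normals = np.zeros_like(points)
    for i, p in enumerate(points):
        d = np.linalg.norm(points - p, axis=1)     # brute force instead of a KD-tree
        order = np.argsort(d)[:max_nn]             # the max_nn closest points
        nbrs = points[order][d[order] <= radius]   # keep only those inside the radius
        if len(nbrs) < 3:
            continue                               # too few neighbors: leave a zero normal
        cov = np.cov(nbrs.T)                       # 3x3 covariance of the neighborhood
        eigvals, eigvecs = np.linalg.eigh(cov)     # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]                 # direction of least variance
    return normals

rng = np.random.default_rng(0)
# Hypothetical data: 500 slightly noisy points on the plane z = 0
pts = np.column_stack([rng.random(500), rng.random(500), 0.001 * rng.standard_normal(500)])
n = estimate_normals(pts)
print(np.round(np.abs(n[:3]), 3))   # the sign is ambiguous, so compare |n| with (0, 0, 1)
```

PCA alone only gives the normal's line, not its sign, which is why estimated normals may need a separate orientation step.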

Reading the normals

```python
import numpy as np

# voxel_down_pcd comes from the normal-estimation example above
print("Print the first normal:")
print(voxel_down_pcd.normals[0])
# Print the first normal:
# [ 0.51941952 0.82116269 -0.23642166]

# Print the first ten normals
print(np.asarray(voxel_down_pcd.normals)[:10, :])
```

V. Things to note

- A pcd file is a point-cloud format. A ply file can be read either as a mesh or as a point cloud: read it with read_triangle_mesh to process it as a triangle mesh, or with read_point_cloud to process it directly as point cloud data.

- If you sample a triangle mesh directly and then estimate normals, the normals can come out wrong, with all of them pointing in the same direction.

- To avoid these normal errors, sample with the sample_points_poisson_disk() method.
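Poisson-disk sampling differs from uniform sampling in that no two samples are closer than a minimum distance, which gives evenly spaced points. Open3D's sample_points_poisson_disk is documented as using sample elimination (Yuksel, 2015); the sketch below instead uses the simpler dart-throwing technique on a 2D unit square, only to show the spacing property. The function names, the 200-sample target, and the 0.04 minimum distance are hypothetical choices.

```python
import numpy as np

def dart_throwing(n_target, r_min, rng, max_tries=100_000):
    """Accept random candidates only if they are at least r_min from all accepted ones."""
    pts = np.empty((n_target, 2))
    count = 0
    for _ in range(max_tries):
        c = rng.random(2)
        if count == 0 or np.min(np.linalg.norm(pts[:count] - c, axis=1)) >= r_min:
            pts[count] = c
            count += 1
            if count == n_target:
                break
    return pts[:count]

def min_pairwise_distance(pts):
    """Smallest distance between any two distinct points."""
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    return d.min()

rng = np.random.default_rng(0)
uniform = rng.random((200, 2))
poisson = dart_throwing(200, 0.04, rng)
print(min_pairwise_distance(uniform), min_pairwise_distance(poisson))
```

Uniform samples routinely land almost on top of each other, while the Poisson-disk set keeps every pair at least r_min apart.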

边栏推荐

- EKLAVYA -- 利用神经网络推断二进制文件中函数的参数

- 解决latex图片浮动的问题

- Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

- Convert timestamp into milliseconds and format time in PHP

- MoCO ——Momentum Contrast for Unsupervised Visual Representation Learning

- Latex formula normal and italic

- What if a new window always pops up when opening a folder on a laptop

- 程序的内存模型

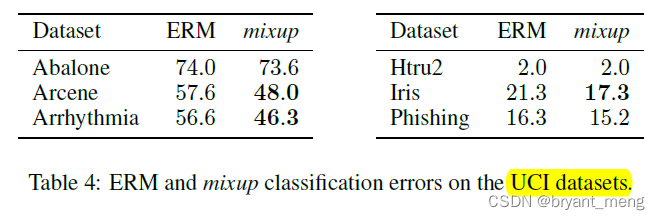

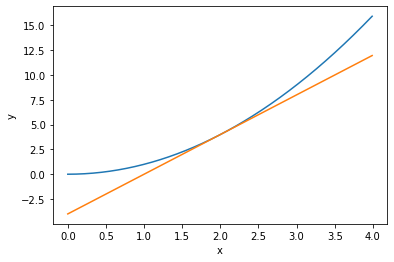

- 【Mixup】《Mixup:Beyond Empirical Risk Minimization》

- 【深度学习系列(八)】:Transoform原理及实战之原理篇

猜你喜欢

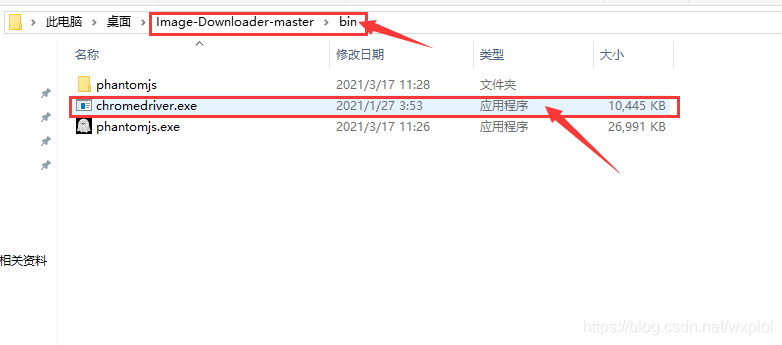

Installation and use of image data crawling tool Image Downloader

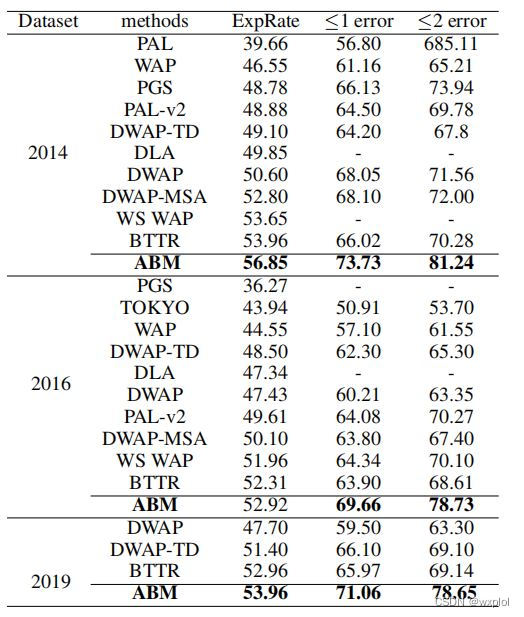

ABM thesis translation

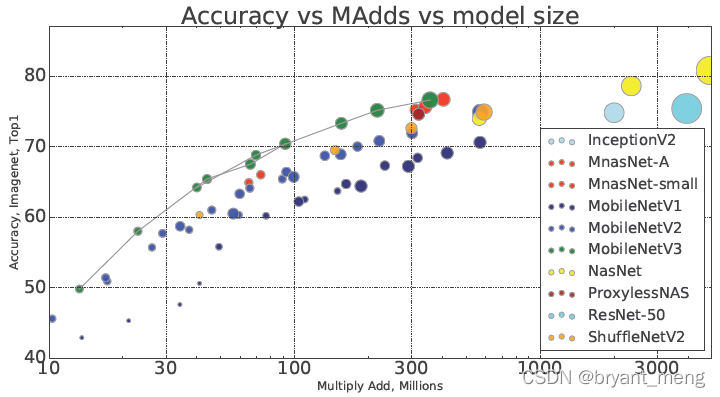

【MobileNet V3】《Searching for MobileNetV3》

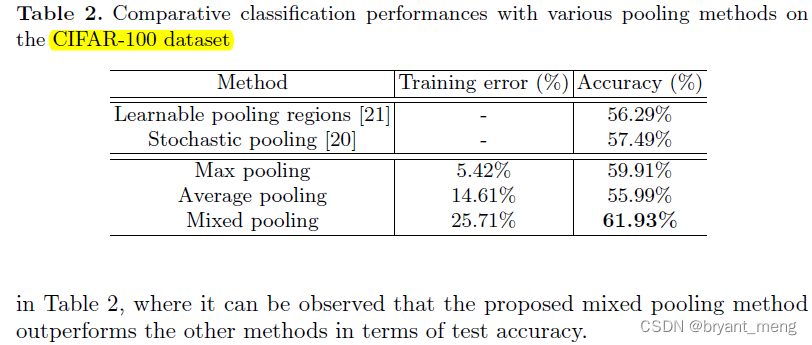

【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

Mmdetection trains its own data set -- export coco format of cvat annotation file and related operations

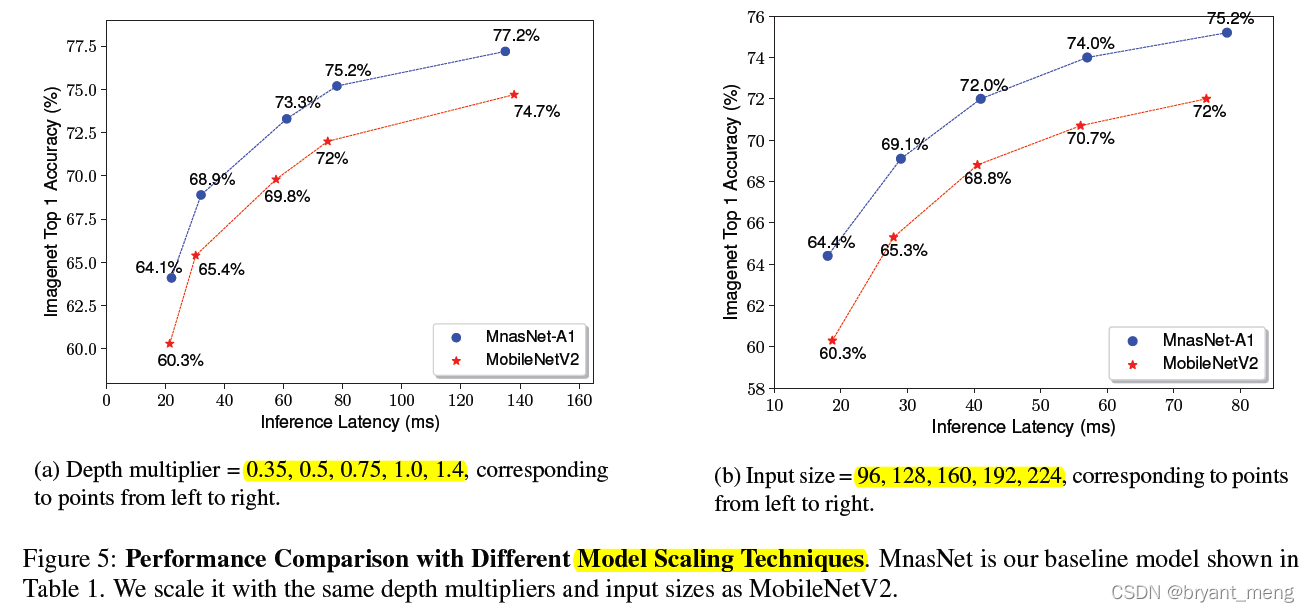

【MnasNet】《MnasNet:Platform-Aware Neural Architecture Search for Mobile》

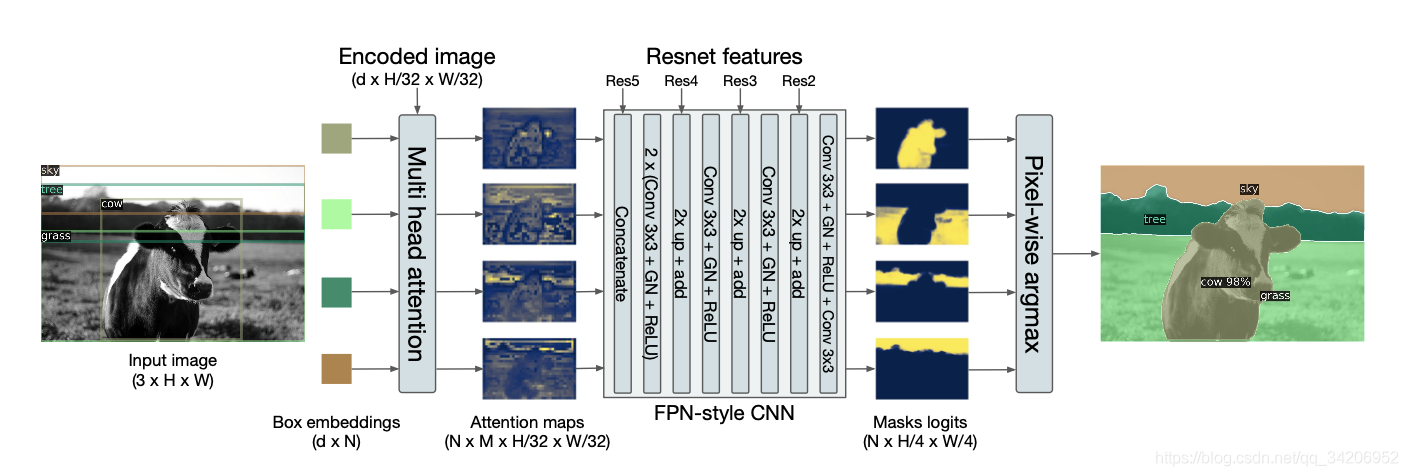

利用Transformer来进行目标检测和语义分割

![[mixup] mixup: Beyond Imperial Risk Minimization](/img/14/8d6a76b79a2317fa619e6b7bf87f88.png)

[mixup] mixup: Beyond Imperial Risk Minimization

【Mixup】《Mixup:Beyond Empirical Risk Minimization》

【学习笔记】反向误差传播之数值微分

随机推荐

Execution of procedures

【Programming】

Hystrix dashboard cannot find hystrix Stream solution

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

[Sparse to Dense] Sparse to Dense: Depth Prediction from Sparse Depth samples and a Single Image

【DIoU】《Distance-IoU Loss:Faster and Better Learning for Bounding Box Regression》

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

Label propagation

[learning notes] numerical differentiation of back error propagation

【Paper Reading】

Apple added the first iPad with lightning interface to the list of retro products

【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

Thesis writing tip2

【MnasNet】《MnasNet:Platform-Aware Neural Architecture Search for Mobile》

open3d学习笔记二【文件读写】

【学习笔记】Matlab自编高斯平滑器+Sobel算子求导

【BiSeNet】《BiSeNet:Bilateral Segmentation Network for Real-time Semantic Segmentation》

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

【Wing Loss】《Wing Loss for Robust Facial Landmark Localisation with Convolutional Neural Networks》