当前位置:网站首页>Design and implementation of spark offline development framework

Design and implementation of spark offline development framework

2022-07-07 23:28:00 【Microservice technology sharing】

One 、 background

With Spark And the continuous development of its community ,Spark Its own technology is also maturing ,Spark The advantages in technical architecture and performance are becoming more and more obvious , At present, most companies tend to use... In big data processing Spark.Spark Support multi language development , Such as Scala、Java、Sql、Python etc. .

Spark SQL Use standard data connections , And Hive compatible , Easy to communicate with other languages API Integrate , Express clearly 、 Easy to use 、 The cost of learning is low , It is the preferred language for developers to develop simple data processing , But for complex data processing 、 Development of data analysis , Use SQL Development seems inadequate , Maintenance costs are also very high , Using high-level language processing will be more efficient .

In daily data warehouse development work , In addition to our development work , It also involves a large number of data backtracking tasks . For innovative businesses , The caliber changes frequently 、 Rapid business iteration , Backtracking of data warehouse is very common , It's very common to go back a few months or even a year , But the traditional way of backtracking task is very inefficient , It also requires human resources to pay close attention to the status of each task .

In view of the current situation , We developed a set of Spark Offline development framework , As shown in the following table , We give examples of existing problems and solutions . The implementation of the framework not only makes the development simple and efficient , And the data backtracking work does not need any development , Complete a lot of backtracking work quickly and efficiently .

Two 、 framework design

The framework is designed to encapsulate repetitive work , Make development simple . The frame is as shown in the figure 2-1 Shown , It is divided into three parts , Basic framework 、 Extensible tools and Applications , Developers only need to focus on the application to realize code development simply and quickly .

2.1 Basic framework

In the basic framework , We implement the separation mechanism between code and configuration for all types of applications , Resource allocation is unified to XML It is saved in the form of a file and processed by the framework . The framework will use the resource size according to the tasks configured by the developer , It's done SparkSession、SparkContext、SparkConf The creation of , At the same time, common environment variables are loaded , Developed a general UDF function ( As in common use url Parameter analysis, etc ). among Application Parent class for all applications , The processing flow is shown in the figure , Developers just need to write the green part .

at present , The common environment variables supported by the offline framework are shown in the following table .

2.2 Extensible tools

Extensible tools contain a large number of tool classes , Serve the application and basic framework , Commonly used , Configuration file parsing class , Such as resolving task resource parameters ; Database tool class , Used to read and write database ; Date tool class , Used to add and subtract dates 、 transformation 、 Identify and resolve environment variables, etc . Common tool modules serving applications can be collectively referred to as extensible tools , No more details here .

2.3 Applications

2.3.1 SQL application

about SQL application , Just create SQL Just code and resource configuration , The application class is unique ( The implemented ), There is one and only one , For all SQL Application and use , Developers don't need to care . The following configuration ,class Unique class name for all applications , Developers should be concerned about path Medium sql The code and conf Should be sql Size of resources used .

<?xml version="1.0" encoding="UTF-8"?>

<project name="test">

<class>com.way.app.instance.SqlExecutor</class>

<path>sql File path </path>

<!-- sparksession conf -->

<conf>

<spark.executor.memory>1G</spark.executor.memory>

<spark.executor.cores>2</spark.executor.cores>

<spark.driver.memory>1G</spark.driver.memory>

<spark.executor.instances>20</spark.executor.instances>

</conf>

</project>

2.3.2 Java application

For complex data processing ,SQL When the code does not meet the requirements , We also support Java Programming , And SQL The difference is , Developers need to create new application classes , Inherit Application Parent class and implement run The method can ,run In the method, the developer only needs to pay attention to the data processing logic , For general purpose SparkSession、SparkContext No need to pay attention to the creation and closing of , The framework also helps developers encapsulate the input of code 、 Output logic , For input type , Framework support HDFS File input 、SQL Input and other input types , Developers only need to call the relevant processing functions .

Here's a simple Java Data processing applications , As you can see from the configuration file , You still need to configure the resource size , But with SQL The difference is , Developers need to customize the corresponding Java class (class Parameters ), And the input of the application (input Parameters ) And output parameters (output Parameters ), The input in this application is SQL Code , Output is HDFS file . from Test Class implementation can see , Developers only need three steps : Get input data 、 Logical processing 、 Results output , You can complete the coding .

<?xml version="1.0" encoding="UTF-8"?>

<project name="ecommerce_dwd_hanwuji_click_incr_day_domain">

<class>com.way.app.instance.ecommerce.Test</class>

<input>

<type>table</type>

<sql>select

clk_url,

clk_num

from test_table

where event_day='{DATE}'

and click_pv > 0

and is_ubs_spam=0

</sql>

</input>

<output>

<type>afs_kp</type>

<path>test/event_day={DATE}</path>

</output>

<conf>

<spark.executor.memory>2G</spark.executor.memory>

<spark.executor.cores>2</spark.executor.cores>

<spark.driver.memory>2G</spark.driver.memory>

<spark.executor.instances>10</spark.executor.instances>

</conf>

</project>

package com.way.app.instance.ecommerce;import com.way.app.Application;import org.apache.spark.api.java.JavaRDD;import org.apache.spark.sql.Row;import java.util.Map;import org.apache.spark.api.java.function.FilterFunction;import org.apache.spark.sql.Dataset;public class Test extends Application { @Override public void run() { // Input Map<String, String> input = (Map<String, String>) property.get("input"); Dataset<Row> ds = sparkSession.sql(getInput(input)).toDF("url", "num"); // Logical processing ( Simply filter out url Logs with some sites ) JavaRDD<String> outRdd = ds.filter((FilterFunction<Row>) row -> { String url = row.getAs("url").toString(); return url.contains(".jd.com") || url.contains(".suning.com") || url.contains("pin.suning.com") || url.contains(".taobao.com") || url.contains("detail.tmall.hk") || url.contains(".amazon.cn") || url.contains(".kongfz.com") || url.contains(".gome.com.cn") || url.contains(".kaola.com") || url.contains(".dangdang.com") || url.contains("aisite.wejianzhan.com") || url.contains("w.weipaitang.com"); }) .toJavaRDD() .map(row -> row.mkString("\001")); // Output Map<String, String> output = (Map<String, String>) property.get("output"); outRdd.saveAsTextFile(getOutPut(output)); }}

2.3.3 Data backtracking application

The application of data backtracking is to solve the problem of fast backtracking 、 Release manpower and develop , It's very convenient to use , Developers don't need to refactor the task code , And SQL Apply the same , The backtracking application class is the only class ( The implemented ), There is one and only one , For all backtracking tasks , And support a variety of backtracking schemes .

2.3.3.1 The project design

In the process of daily backtracking, it is found that , A backtracking task is a serious waste of time , No matter how the task is submitted , All need to go through the following process of implementing environmental application and preparation :

stay client Submit application, First client towards RS Apply to start ApplicationMaster

RS First find one at random NodeManager start-up ApplicationMaster

ApplicationMaster towards RS Apply to start Executor Resources for

RS Return a batch of resources to ApplicationMaster

ApplicationMaster Connect Executor

each Executor Reverse register with ApplicationMaster

ApplicationMaster send out task、 monitor task perform , Recovery results

The time taken by this process is collectively referred to as execution environment preparation , After we submit the task , Go through the following three processes :

Perform environmental preparation

Start executing code

Release resources

Performing environmental preparation usually involves 5-20 Minutes of waiting time , The resource situation of the queue fluctuates up and down at that time , The failure rate is 10% about , The failure reason is due to queue 、 The Internet 、 Force majeure caused by insufficient resources ; The failure rate of code execution is usually 5% about , Usually due to node instability 、 Network and other factors lead to . The backtracking application of offline development framework is considered from two aspects of saving time and manpower , Design scheme drawing 2-3 Shown .

In terms of backtracking time : The first of all backtracking subtasks 、 The third step is to compress the time into one time , That is, environmental preparation and release once each , Execute backtracking code multiple times . If the developer's backtracking task is 30 Subtask , The time saved is 5-20 Minutes by 29, so , The more backtracking subtasks , The more obvious the backtracking effect is .

In terms of manual intervention , First of all , Developers don't need to develop additional 、 Add backtracking configuration . second , The number of tasks started by the offline framework backtracking application is far less than that of the traditional backtracking scheme , In an effort to 2-3 For example , The backtracking task is in serial backtracking mode , After using the framework, you only need to pay attention to the execution state of one task , The traditional method requires manual maintenance N The execution status of a task .

Last , We are using the offline development framework to trace back a year of serial tasks , The execution of the code only needs 5 About minutes , We found that , The backtracking framework is not ideal for offline development tasks ( That is, the shortest time allocated to resources 、 All subtasks have no failure 、 It can be started serially at one time 365 God ), The time required is 2.5 God , But the task of backtracking using an offline development framework , In the worst case ( That is, the maximum time allocated to resources , Task failure occurs 10%), It only needs 6 You can finish it in an hour , Efficiency improvement 90% above , And basically no human attention .

2.3.3.2 Function is introduced

Breakpoint continuation

Use Spark Calculation , When we enjoy the fast speed of its calculation , It will inevitably encounter instability , The node is down 、 Network connection failed 、 Task failures caused by resource problems are common , Back tracking tasks often take months 、 Even a year , The task is huge , It is particularly important to continue to trace back from the breakpoint after failure . In offline framework design , The successful part of the task backtracking process is recorded , Breakpoint resuming will be performed after the task fails and restarts .

Backtracking order

In the backtracking task , Usually, we will determine the backtracking order according to the business needs , For example, for incremental data with new and old users , Because the current date data depends on historical data , So we usually go back from history to now . But when there is no such need , Generally speaking , Tracing back first can quickly meet the business side's understanding of the current data indicators , We usually go back to history from now on . In offline framework design , Developers can choose the backtracking order according to business needs .

Parallel backtracking

Usually , Backtracking tasks have lower priority than routine tasks , In the case of limited resources , In the process of backtracking, it cannot be opened all at once , So as not to occupy a lot of resources and affect routine tasks , So the offline framework defaults to serial backtracking . Of course, when resources are sufficient , We can choose the appropriate parallel backtracking . Offline development framework supports a certain degree of concurrency , Developers can easily trace back tasks .

2.3.3.3 Create a backtracking task

The use of backtracking application is very convenient , Developers don't need to develop new code , Use routine code , Configure the backtracking scheme , As shown in the following code ,

class The parameter is the unique class of backtracking application , Required parameters , All backtracking tasks do not need to change .

type The parameter is backtracking application type , The default is sql, If the application type is java, be type Value should be java Class name .

path The parameter is backtracking code path , Required parameters , No default , Usually the same as routine task code , There is no need to modify .

limitdate The parameter is the deadline for backtracking , Required parameters , No default .

startdate The parameter is backtracking start date , Required parameters , No default , If the task enters breakpoint continuation or turns on parallel backtracking , The parameter is invalid .

order The parameter is backtracking order , The default is reverse order . The duty of 1 Time is in positive order , Is worth - 1 Time is in reverse order .

distance The parameter is backtracking step , The framework defaults to serial backtracking , But it also supports parallel backtracking , This parameter is mainly used to support parallel backtracking , When the parameter exists and the value is not - 1 when , The backtracking start date is the base date . Such as starting two parallel tasks , The execution range of the task is from the base date to the base date plus step size or limitdate, If the base date plus step size is later than limitdate, It is to take limitdate, Otherwise, vice versa .

file The parameter is backtracking log file , Required parameters , No default , Used to record the date when the backtracking was successful , When the task fails to restart again ,startdate The next date in the log file will prevail .

conf The parameters are the same as other applications , Configure the resource occupation of this backtracking task .

<?xml version="1.0" encoding="UTF-8"?><project name="ecommerce_ads_others_order_retain_incr_day"> <class>com.way.app.instance.ecommerce.Huisu</class> <type>sql</type> <path>/sql/ecommerce/ecommerce_ads_others_order_retain_incr_day.sql</path> <limitdate>20220404</limitdate> <startdate>20210101</startdate> <order>1</order> <distance>-1</distance> <file>/user/ecommerce_ads_others_order_retain_incr_day/process</file> <conf> <spark.executor.memory>1G</spark.executor.memory> <spark.executor.cores>2</spark.executor.cores> <spark.executor.instances>30</spark.executor.instances> <spark.yarn.maxAppAttempts>1</spark.yarn.maxAppAttempts> </conf></project>

3、 ... and 、 Usage mode

3.1 Introduction

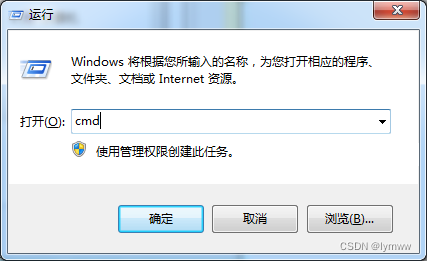

When using offline framework development , Developers only need to focus on the data logic processing part , After the development is completed and packaged , Submit for execution , For each application, the main class is the same , As mentioned above, it is Application Parent class , Does not vary with application , The only change is the parameters that the parent class needs to receive , This parameter is the relative path of the applied configuration file .

3.2 Use comparison

The comparison before and after using the offline framework is shown below .

Four 、 expectation

at present , Offline development framework only supports SQL、Java Language code development , but Spark There are far more than these two languages supported , We need to continue to upgrade the framework and support multilingual development , Make it easier for developers 、 Fast big data development .

边栏推荐

- Oracle-数据库的备份与恢复

- USB (XIV) 2022-04-12

- 深入理解Mysql锁与事务隔离级别

- USB (XV) 2022-04-14

- One week learning summary of STL Standard Template Library

- First week of July

- 进度播报|广州地铁七号线全线29台盾构机全部完成始发

- Deep understanding of MySQL lock and transaction isolation level

- UE4_ Use of ue5 blueprint command node (turn on / off screen response log publish full screen display)

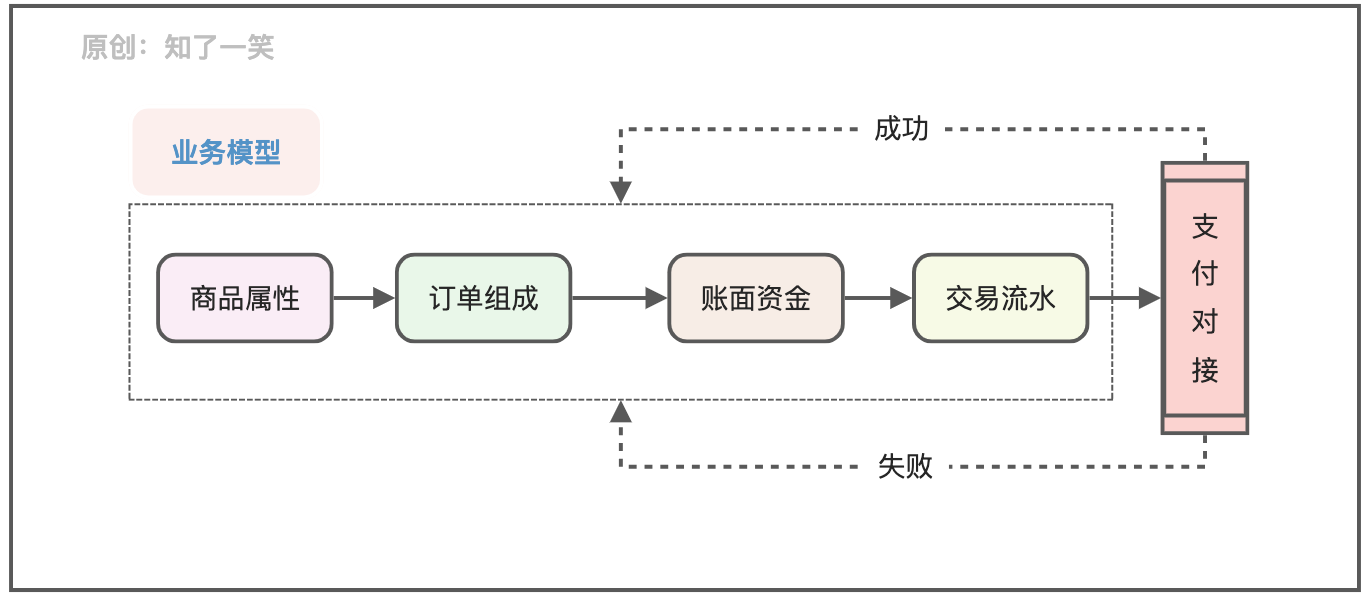

- 聊聊支付流程的设计与实现逻辑

猜你喜欢

Solution of intelligent supply chain collaboration platform in electronic equipment industry: solve inefficiency and enable digital upgrading of industry

![Ros2 topic (03): the difference between ros1 and ros2 [02]](/img/12/244ea30b5b141a0f47a54c08f4fe9f.png)

Ros2 topic (03): the difference between ros1 and ros2 [02]

聊聊支付流程的设计与实现逻辑

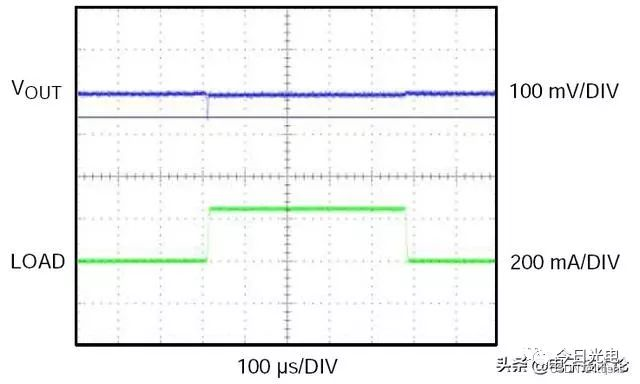

Puce à tension stabilisée LDO - schéma de bloc interne et paramètres de sélection du modèle

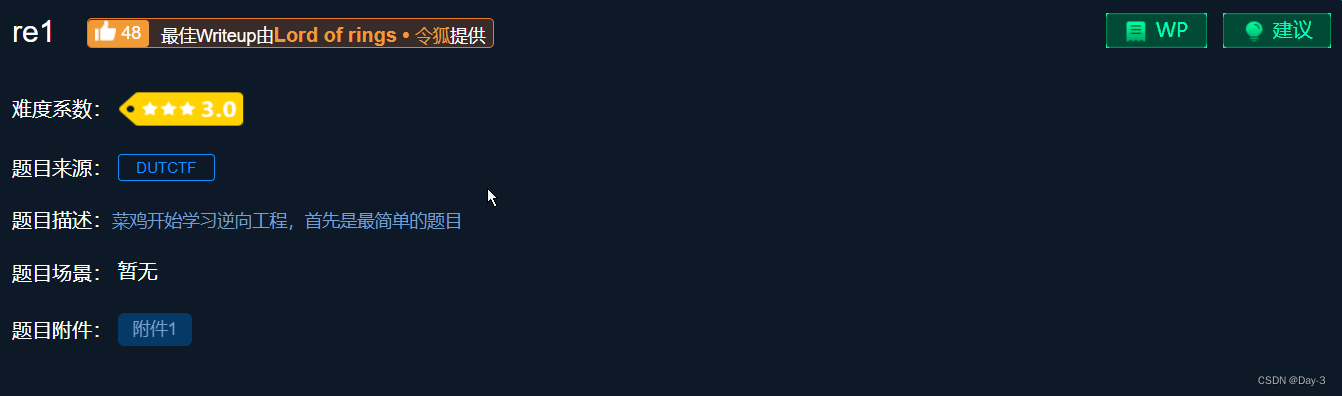

re1攻防世界逆向

S2b2b mall solution of intelligent supply chain in packaging industry: opening up a new ecosystem of e-commerce consumption

When copying something from the USB flash disk, an error volume error is reported. Please run CHKDSK

2022注册测绘师备考开始 还在不知所措?手把手教你怎么考?

The efficient s2b2c e-commerce system helps electronic material enterprises improve their adaptability in this way

Mysql索引优化实战二

随机推荐

Cloud native data warehouse analyticdb MySQL user manual

Three questions TDM

ROS2专题(03):ROS1和ROS2的区别【02】

Gee (III): calculate the correlation coefficient between two bands and the corresponding p value

Oracle database backup and recovery

HDU 4747 Mex「建议收藏」

CAIP2021 初赛VP

统计电影票房排名前10的电影并存入还有一个文件

移动端异构运算技术 - GPU OpenCL 编程(基础篇)

Caip2021 preliminary VP

高级程序员必知必会,一文详解MySQL主从同步原理,推荐收藏

Experience sharing of system architecture designers in preparing for the exam: the direction of paper writing

建筑建材行业SRM供应商云协同管理平台解决方案,实现业务应用可扩展可配置

七月第一周

B_QuRT_User_Guide(39)

Deep understanding of MySQL lock and transaction isolation level

USB (十八)2022-04-17

Coreseek: the second step is index building and testing

生鲜行业数字化采购管理系统:助力生鲜企业解决采购难题,全程线上化采购执行

做自媒体视频剪辑怎么赚钱呢?