
Intelligent Object Detection 59: Focal Loss in PyTorch Explained and Implemented in YoloV4

2022-07-05 07:08:00 【Bubbliiiing】


Preface

I added Focal Loss to my YoloV4 repository to see how it performs. I have long heard that Focal Loss is useless in the YOLO series, but practice is the only way to find out, and many people have asked about it, so it is worth adding.

What Is Focal Loss

Focal Loss is a way of computing the loss. It has two important properties:

1. It controls the weights of positive and negative samples.

2. It controls the weights of easy and hard samples.

Positive and negative samples are defined as follows:

Object detection is essentially dense sampling: thousands of prior boxes (anchors), or feature points, are generated over an image, and each ground-truth box is matched to some of them. Prior boxes that are matched are positive samples; those that are not are negative samples.

Easy and hard samples are defined as follows:

Suppose we have a binary classification problem in which sample 1 and sample 2 both belong to class 1. The network predicts that sample 1 belongs to class 1 with probability 0.9 and that sample 2 belongs to class 1 with probability 0.6. The first prediction is more accurate, so sample 1 is an easy sample; the second is less accurate, so sample 2 is a hard sample.
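To put numbers on this example, the sketch below computes plain binary cross-entropy for the two samples. Because both are labeled 1, the loss for each is simply -log(p). The function name `binary_ce_positive` is a hypothetical helper for illustration only.

```python
import math

# For a sample whose true class is 1, binary cross-entropy reduces to -log(p),
# where p is the predicted probability of class 1.
def binary_ce_positive(p: float) -> float:
    return -math.log(p)

easy = binary_ce_positive(0.9)  # sample 1: confident, correct prediction
hard = binary_ce_positive(0.6)  # sample 2: less confident prediction

print(f"easy sample loss: {easy:.4f}")  # easy sample loss: 0.1054
print(f"hard sample loss: {hard:.4f}")  # hard sample loss: 0.5108
```

Note that the easy sample still produces a nonzero loss. When an image contributes thousands of such easy samples, these small terms add up, which is what the reweighting below is meant to address.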

The two kinds of weighting are achieved as follows.

I. Controlling the weights of positive and negative samples

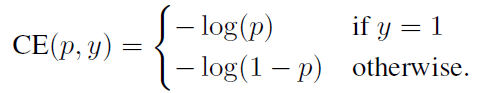

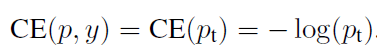

The commonly used cross-entropy loss, taking binary classification as an example, is:

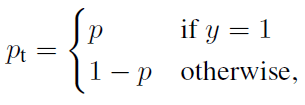

The cross-entropy loss can be written more compactly using $p_t$,

where:

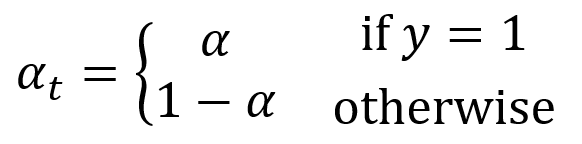
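For reference, the standard binary cross-entropy, the definition of $p_t$, and cross-entropy rewritten in terms of $p_t$ are written out below in text form. These are the standard definitions from the Focal Loss formulation; $p$ is the predicted probability of class 1 and $y$ is the label.

```latex
\mathrm{CE}(p, y) =
\begin{cases}
-\log(p) & \text{if } y = 1 \\
-\log(1 - p) & \text{otherwise}
\end{cases}
\qquad
p_t =
\begin{cases}
p & \text{if } y = 1 \\
1 - p & \text{otherwise}
\end{cases}
\qquad
\mathrm{CE}(p, y) = \mathrm{CE}(p_t) = -\log(p_t)
```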

To reduce the impact of negative samples, a coefficient $\alpha_t$ can be placed in front of the conventional loss function. It is defined in the same way as $p_t$:

When label = 1, $\alpha_t = \alpha$;

otherwise, $\alpha_t = 1 - \alpha$.

$\alpha$ ranges from 0 to 1, so by choosing $\alpha$ we can control how much positive and negative samples each contribute to the loss.
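A minimal sketch of the α-balanced cross-entropy, $-\alpha_t \log(p_t)$, for a single sample. The helper name `alpha_balanced_ce` and the value α = 0.75 are illustrative assumptions: with α > 0.5 negatives are down-weighted relative to positives, matching the goal stated above. (The Focal Loss paper itself reports α = 0.25 working best once γ = 2 is also applied, because γ already suppresses the easy negatives.)

```python
import math

def alpha_balanced_ce(p: float, y: int, alpha: float) -> float:
    """Alpha-balanced binary cross-entropy: -alpha_t * log(p_t).

    p     -- predicted probability that the sample belongs to class 1
    y     -- ground-truth label (1 = positive, 0 = negative)
    alpha -- weight for positives; negatives receive 1 - alpha
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * math.log(p_t)

# Both samples below have p_t = 0.6, so they differ only in alpha_t.
# alpha = 0.75 is an illustrative value, not a recommended setting.
pos = alpha_balanced_ce(0.6, 1, alpha=0.75)  # weighted by 0.75
neg = alpha_balanced_ce(0.4, 0, alpha=0.75)  # weighted by 0.25
print(pos, neg)
```

Since the two samples are equally well classified, the only difference in their losses comes from $\alpha_t$.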

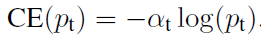

That is, the α-balanced cross-entropy is $\mathrm{CE}(p_t) = -\alpha_t \log(p_t)$.

II. Controlling the weights of easy and hard samples

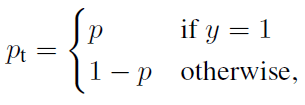

If a sample belongs to a certain class, the higher the predicted probability of that class, the easier the sample is to classify. In binary classification, positive samples are labeled 1, negative samples are labeled 0, and p is the predicted probability that a sample belongs to class 1.

For a positive sample, the larger 1 - p is, the harder the sample is to classify.

For a negative sample, the larger p is, the harder the sample is to classify.

$p_t$ is defined as follows: $p_t = p$ when the label is 1, and $p_t = 1 - p$ otherwise.

Therefore $1 - p_t$ tells us how hard each sample is to classify: it is large for hard samples and small for easy ones.
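The rule can be checked directly: $1 - p_t$ covers both the positive case (1 - p) and the negative case (p) with one expression. `difficulty` is a hypothetical helper name used only for this sketch.

```python
def difficulty(p: float, y: int) -> float:
    """Return 1 - p_t: close to 0 for easy samples, close to 1 for hard ones."""
    p_t = p if y == 1 else 1.0 - p
    return 1.0 - p_t

print(difficulty(0.9, 1))  # easy positive: predicted 0.9 for its true class
print(difficulty(0.6, 1))  # harder positive
print(difficulty(0.8, 0))  # hard negative: predicts 0.8 for class 1, but label is 0
```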

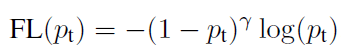

The specific implementation is as follows .

among :

( 1 − p t ) γ (1-p_{t})^{γ} (1−pt)γ

It is the degree to which each sample is easy to distinguish , γ γ γ It's called modulation coefficient

1. When $p_t \to 0$ (a hard sample), the modulating factor tends to 1, so the sample contributes heavily to the total loss. When $p_t \to 1$ (an easy sample), the modulating factor tends to 0, so the sample contributes very little to the total loss.

2. When $\gamma = 0$, focal loss reduces to the standard cross-entropy loss; adjusting $\gamma$ changes how strongly the modulating factor acts.
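Both points can be checked numerically on the two samples from the earlier example (p = 0.9 and p = 0.6, both labeled 1, so $p_t = p$). The helper `focal_term` is hypothetical, and α is left out here to isolate the effect of the modulating factor.

```python
import math

def focal_term(p_t: float, gamma: float) -> float:
    """Focal loss without alpha: -(1 - p_t)**gamma * log(p_t)."""
    return -((1.0 - p_t) ** gamma) * math.log(p_t)

for gamma in (0.0, 2.0):
    easy = focal_term(0.9, gamma)  # easy sample
    hard = focal_term(0.6, gamma)  # hard sample
    print(f"gamma={gamma}: easy={easy:.4f}, hard={hard:.4f}, hard/easy={hard / easy:.1f}")
```

With $\gamma = 0$ this is plain cross-entropy and the hard sample's loss is about 4.8 times the easy one's. With $\gamma = 2$ the easy sample's loss shrinks by a factor of 100 (since $0.1^2 = 0.01$) while the hard sample's shrinks only by a factor of 6.25 ($0.4^2 = 0.16$), so the ratio grows to about 77.6 and training focuses on the hard sample.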

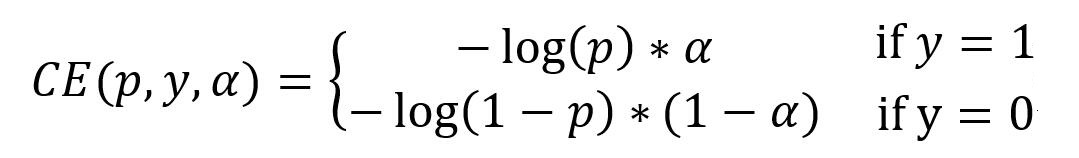

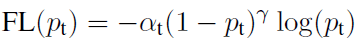

III. Combining the two weighting schemes

Multiplying the two factors together controls both the weights of positive and negative samples and the weights of easy and hard samples at the same time.
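Written out with the $p_t$ and $\alpha_t$ defined earlier, the combined focal loss is:

```latex
\mathrm{FL}(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t)
```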

Implementation

This article uses the PyTorch version of YoloV4 as an example. The repository is at:

https://github.com/bubbliiiing/yolov4-pytorch

First, locate the part of the YoloV4 loss that distinguishes positive from negative samples. The YoloV4 loss consists of three parts:

loss_loc (bounding-box regression loss)

loss_conf (objectness confidence loss)

loss_cls (classification loss)

Of these, the part that distinguishes positive and negative samples is loss_conf (the objectness confidence loss), so that is where Focal Loss is added.

First, obtain the probability p used in the formula. prediction holds the raw prediction at each feature point; take the confidence channel and apply sigmoid to get p:

conf = torch.sigmoid(prediction[..., 4])
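To see what this line does, it can be run on a dummy tensor. The layout assumed below (batch, anchors per cell, grid height, grid width, then 5 + num_classes box attributes with objectness at index 4) is an illustrative assumption consistent with the `[..., 4]` indexing, not code copied from the repository; the sizes are arbitrary.

```python
import torch

# Hypothetical sizes: batch 2, 3 anchors per cell, a 13x13 grid,
# and 5 + num_classes channels (x, y, w, h, objectness, class scores).
num_classes = 20
prediction = torch.randn(2, 3, 13, 13, 5 + num_classes)

# Channel 4 holds the raw objectness logit; sigmoid maps it into (0, 1).
conf = torch.sigmoid(prediction[..., 4])
print(conf.shape)  # torch.Size([2, 3, 13, 13])
```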

Next, balance positive and negative samples with the parameter alpha:

torch.where(obj_mask, torch.ones_like(conf) * self.alpha, torch.ones_like(conf) * (1 - self.alpha))

Then balance easy and hard samples with the parameter gamma:

torch.where(obj_mask, torch.ones_like(conf) - conf, conf) ** self.gamma
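The two `torch.where` expressions can be evaluated on a few hand-picked values to see the per-element weights. The tensors and the values alpha = 0.25, gamma = 2.0 are illustrative assumptions; in the repository they come from the model output, the target assignment, and the loss object's settings.

```python
import torch

alpha, gamma = 0.25, 2.0  # illustrative values only

conf = torch.tensor([0.9, 0.6, 0.1, 0.8])            # predicted objectness
obj_mask = torch.tensor([True, True, False, False])  # first two cells are positives

# alpha_t: alpha for positives, 1 - alpha for negatives
alpha_t = torch.where(obj_mask, torch.ones_like(conf) * alpha, torch.ones_like(conf) * (1 - alpha))
# (1 - p_t)^gamma: (1 - conf)^gamma for positives, conf^gamma for negatives
modulating = torch.where(obj_mask, torch.ones_like(conf) - conf, conf) ** gamma

print(alpha_t)     # 0.25 for the positives, 0.75 for the negatives
print(modulating)  # roughly 0.01, 0.16, 0.01, 0.64
```

The easy negative (conf = 0.1 on a background cell) gets a modulating factor of about 0.01, while the hard negative (conf = 0.8) keeps about 0.64 of its loss.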

Finally, multiply the product of the two weights by the original cross-entropy loss:

ratio = torch.where(obj_mask, torch.ones_like(conf) * self.alpha, torch.ones_like(conf) * (1 - self.alpha)) * torch.where(obj_mask, torch.ones_like(conf) - conf, conf) ** self.gamma

loss_conf = torch.mean((self.BCELoss(conf, obj_mask.type_as(conf)) * ratio)[noobj_mask.bool() | obj_mask])
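Putting the pieces together, here is a self-contained sketch of the focal confidence loss as a standalone function. The name `focal_conf_loss`, the default values, and the clipped element-wise BCE (standing in for `self.BCELoss`, assumed to be element-wise with no reduction) are assumptions for illustration, not the repository's exact code.

```python
import torch

def focal_conf_loss(conf, obj_mask, noobj_mask, alpha=0.25, gamma=2.0, eps=1e-7):
    """Focal loss on objectness, following the ratio / loss_conf lines above.

    conf       -- sigmoid objectness probabilities, any shape
    obj_mask   -- bool tensor, True where a ground-truth box is assigned (positives)
    noobj_mask -- tensor, nonzero where the cell counts as a negative
    """
    # Clipped element-wise binary cross-entropy (stand-in for self.BCELoss).
    pred = conf.clamp(eps, 1.0 - eps)
    target = obj_mask.type_as(conf)
    bce = -target * torch.log(pred) - (1.0 - target) * torch.log(1.0 - pred)

    ones = torch.ones_like(conf)
    ratio = torch.where(obj_mask, ones * alpha, ones * (1 - alpha)) * \
        torch.where(obj_mask, ones - conf, conf) ** gamma

    # Average over positives and negatives only; ignored cells are excluded.
    keep = noobj_mask.bool() | obj_mask
    return torch.mean((bce * ratio)[keep])

conf = torch.tensor([0.9, 0.6, 0.1, 0.8, 0.5])
obj_mask = torch.tensor([True, True, False, False, False])
noobj_mask = torch.tensor([0.0, 0.0, 1.0, 1.0, 0.0])  # the last cell is ignored
loss = focal_conf_loss(conf, obj_mask, noobj_mask)
print(loss.item())
```

As in the original line, `ratio` is not detached, so gradients also flow through the weighting term. Setting `gamma=0.0` and `alpha=0.5` reduces the result to half of the plain mean BCE over the kept cells, which is a quick sanity check.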

边栏推荐

猜你喜欢

随机推荐

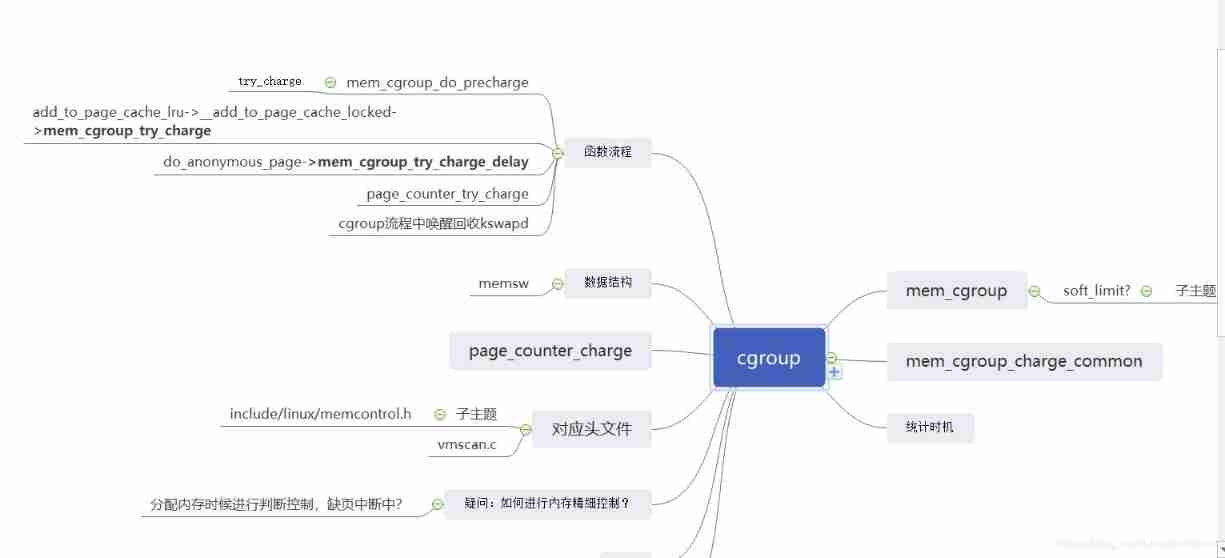

三体目标管理笔记

[software testing] 03 -- overview of software testing

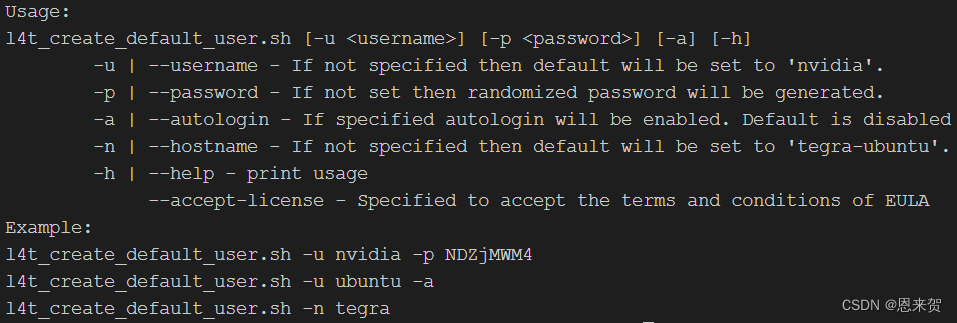

Xavier CPU & GPU 高负载功耗测试

Build a microservice cluster environment locally and learn to deploy automatically

【软件测试】06 -- 软件测试的基本流程

PowerManagerService(一)— 初始化

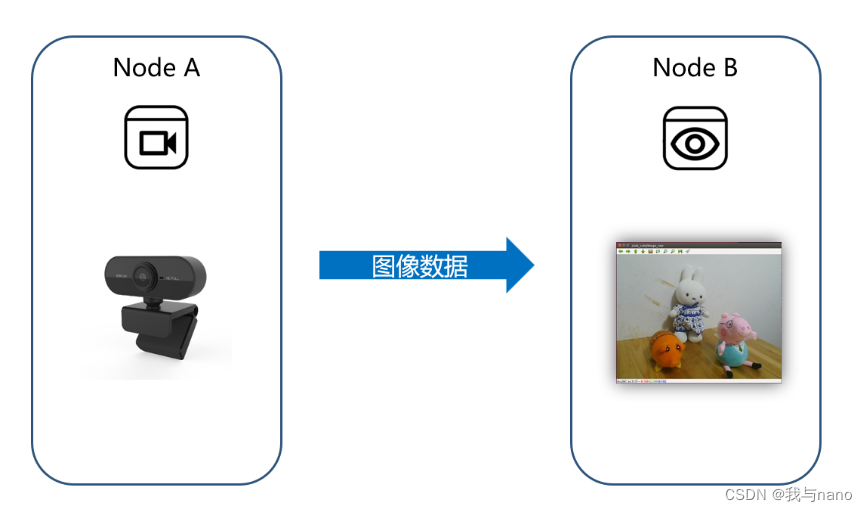

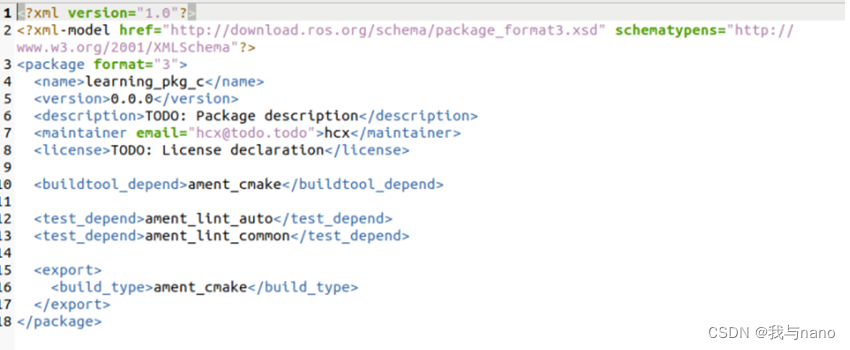

ROS2——ROS2对比ROS1(二)

Concurrent programming - how to interrupt / stop a running thread?

Edge calculation data sorting

Instruction execution time

【软件测试】05 -- 软件测试的原则

Architecture

小米笔试真题一

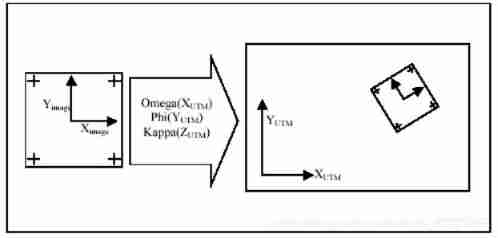

Interpretation of the earliest sketches - image translation work sketchygan

ROS2——初识ROS2(一)

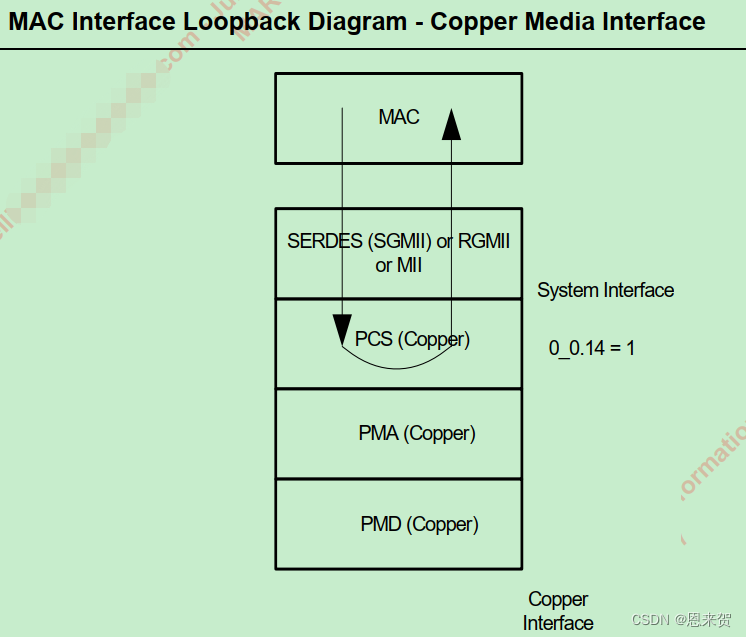

PHY drive commissioning --- mdio/mdc interface Clause 22 and 45 (I)

Ros2 - Service Service (IX)

并发编程 — 如何中断/停止一个运行中的线程?

【MySQL8.0不支持表名大写-对应方案】

数学分析_笔记_第8章:重积分