
PyTorch (VI): Model tuning tricks

2022-07-07 08:12:00 【CyrusMay】


1. Regularization

1.1 L1 Regularization

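import torch
from torch import nn

# Fall back to the CPU when no GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

MLP = nn.Sequential(
    nn.Linear(128, 64),
    nn.ReLU(inplace=True),
    nn.Linear(64, 32),
    nn.ReLU(inplace=True),
    nn.Linear(32, 10),
)
MLP.to(device)

loss_classify = nn.CrossEntropyLoss().to(device)

# Placeholder batch for illustration: 16 samples, 128 features, 10 classes
x = torch.randn(16, 128, device=device)
y = torch.randint(0, 10, (16,), device=device)

# L1 norm: sum of the absolute values of all parameters
l1_loss = 0
for param in MLP.parameters():
    l1_loss += torch.sum(torch.abs(param))

# The criterion must be called on (logits, labels) before the penalty is added
loss = loss_classify(MLP(x), y) + l1_loss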
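In practice the L1 penalty is usually multiplied by a small coefficient so that it does not swamp the classification loss. The following is a minimal sketch of one full training step under that assumption. The coefficient `l1_lambda`, the helper `l1_penalty`, the smaller model, and the random batch are all illustrative and not part of the original post.

```python
import torch
from torch import nn

torch.manual_seed(0)

model = nn.Sequential(nn.Linear(128, 64), nn.ReLU(), nn.Linear(64, 10))
criterion = nn.CrossEntropyLoss()
opt = torch.optim.SGD(model.parameters(), lr=0.01)

# Placeholder batch (assumption): 16 samples, 128 features, 10 classes.
x = torch.randn(16, 128)
y = torch.randint(0, 10, (16,))

l1_lambda = 1e-4  # hypothetical penalty strength, to be tuned per task


def l1_penalty(module: nn.Module) -> torch.Tensor:
    """Sum of the absolute values of all parameters of the module."""
    return sum(p.abs().sum() for p in module.parameters())


opt.zero_grad()
data_loss = criterion(model(x), y)                 # classification term
loss = data_loss + l1_lambda * l1_penalty(model)   # add the scaled L1 term
loss.backward()
opt.step()
```

A larger `l1_lambda` pushes more weights toward zero, at the cost of fitting the training data less closely.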

1.2 L2 Regularization

import torch
from torch import nn

# Fall back to the CPU when no GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

MLP = nn.Sequential(
    nn.Linear(128, 64),
    nn.ReLU(inplace=True),
    nn.Linear(64, 32),
    nn.ReLU(inplace=True),
    nn.Linear(32, 10),
)
MLP.to(device)

# L2 norm: weight_decay applies the L2 penalty inside the optimizer update
opt = torch.optim.SGD(MLP.parameters(), lr=0.001, weight_decay=0.1)

loss = nn.CrossEntropyLoss().to(device)

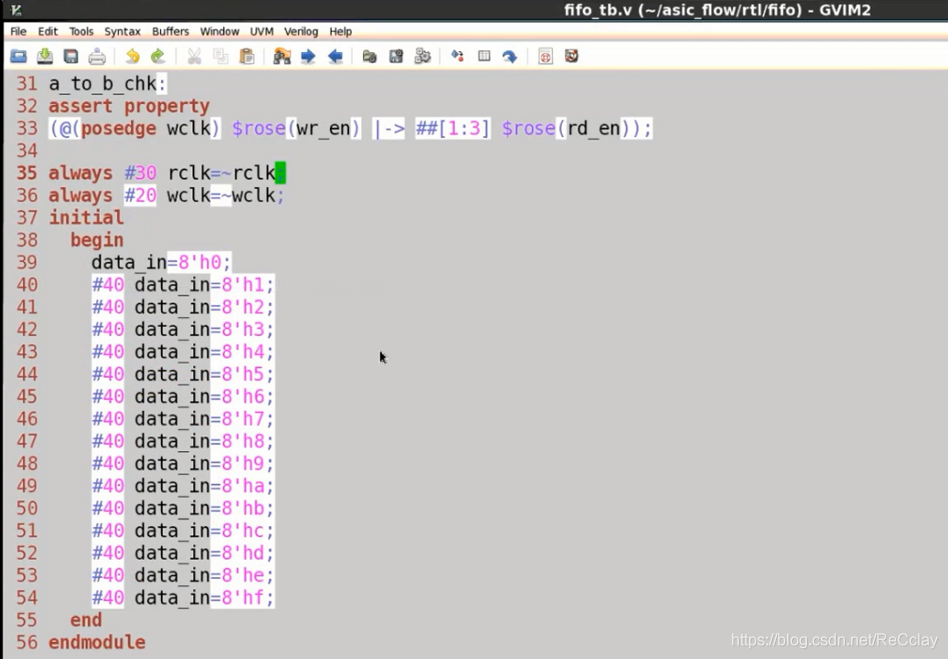

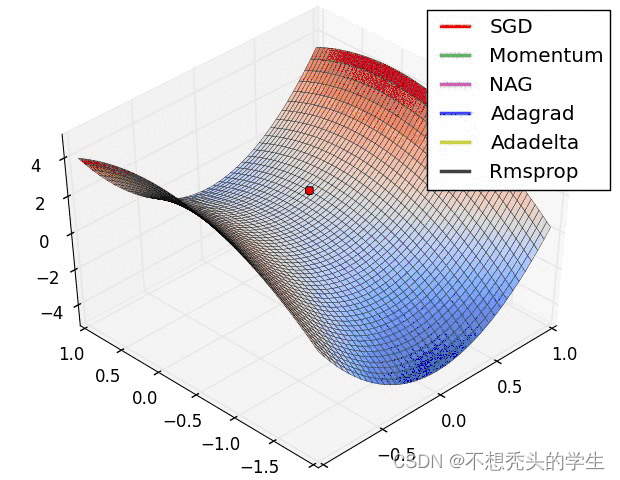
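To make the link between `weight_decay` and an L2 penalty concrete, the sketch below takes one plain SGD step in two ways: once with `weight_decay=wd`, and once with the term `(wd / 2) * ||w||^2` added to the loss by hand. For plain SGD the two updates should coincide. The toy linear layer and the random batch are assumptions made only for this illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
lr, wd = 0.1, 0.1

# Two identical toy layers (assumption, not from the original post).
net_a = nn.Linear(4, 2)
net_b = nn.Linear(4, 2)
net_b.load_state_dict(net_a.state_dict())

x = torch.randn(8, 4)
y = torch.randint(0, 2, (8,))
criterion = nn.CrossEntropyLoss()

# (a) L2 through the optimizer's weight_decay argument.
opt_a = torch.optim.SGD(net_a.parameters(), lr=lr, weight_decay=wd)
opt_a.zero_grad()
criterion(net_a(x), y).backward()
opt_a.step()

# (b) Explicit penalty (wd / 2) * ||w||^2 added to the loss, no weight_decay.
opt_b = torch.optim.SGD(net_b.parameters(), lr=lr)
opt_b.zero_grad()
l2_penalty = sum(p.pow(2).sum() for p in net_b.parameters())
(criterion(net_b(x), y) + 0.5 * wd * l2_penalty).backward()
opt_b.step()

# Compare the updated parameters of both variants.
same = all(
    torch.allclose(pa, pb, atol=1e-6)
    for pa, pb in zip(net_a.parameters(), net_b.parameters())
)
print(same)
```

Note that `weight_decay` is applied to every parameter handed to the optimizer, biases included, which is why the manual penalty sums over all parameters as well.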

2. Momentum and learning rate decay

2.1 Momentum

opt = torch.optim.SGD(model.parameters(),lr=0.001,momentum=0.78,weight_decay=0.1)
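As a rough illustration of what the `momentum` argument does, the sketch below replays the update by hand: a velocity accumulates past gradients (`v <- momentum * v + grad`) and the parameter moves along it (`w <- w - lr * v`). It then compares the result with `torch.optim.SGD`. The toy parameter and the quadratic loss are assumptions for the example, and `weight_decay` is left out to keep the rule readable.

```python
import torch

lr, mu = 0.1, 0.78

# Toy parameter and loss f(w) = sum(w^2) (assumptions for illustration).
w = torch.tensor([1.0, -2.0], requires_grad=True)
opt = torch.optim.SGD([w], lr=lr, momentum=mu)

w_manual = w.detach().clone()
velocity = torch.zeros_like(w_manual)

for _ in range(5):
    # Reference update performed by the optimizer.
    opt.zero_grad()
    (w ** 2).sum().backward()
    opt.step()

    # Manual update: the gradient of sum(w^2) is 2 * w.
    grad = 2 * w_manual
    velocity = mu * velocity + grad
    w_manual = w_manual - lr * velocity

print(torch.allclose(w.detach(), w_manual, atol=1e-6))
```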

2.2 Learning rate tuning

- torch.optim.lr_scheduler.ReduceLROnPlateau(): lowers the learning rate when the monitored loss stops decreasing.

- torch.optim.lr_scheduler.StepLR(): multiplies the learning rate by gamma every step_size epochs.

opt = torch.optim.SGD(net.parameters(), lr=1)
lr_scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer=opt, mode="min", factor=0.1, patience=10)

for epoch in range(1000):
    loss_val = train(...)
    lr_scheduler.step(loss_val)  # monitor the loss
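The behavior of `ReduceLROnPlateau` can be observed without a real training loop by feeding it a hand-written sequence of losses. This is only a sketch: the toy model, the short `patience=2`, and the `fake_losses` list are assumptions chosen so that the effect shows up within a few epochs.

```python
import torch
from torch import nn

net = nn.Linear(4, 2)  # toy model (assumption)
opt = torch.optim.SGD(net.parameters(), lr=1.0)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    opt, mode="min", factor=0.1, patience=2
)

# Hypothetical validation losses: improve for three epochs, then stall.
fake_losses = [1.0, 0.8, 0.6, 0.6, 0.6, 0.6, 0.6]

lrs = []
for loss_val in fake_losses:
    scheduler.step(loss_val)                 # report the monitored value
    lrs.append(opt.param_groups[0]["lr"])    # learning rate after this epoch

print(lrs)
```

The rate stays at its initial value while the loss improves and is multiplied by `factor` only after the loss has failed to improve for more than `patience` consecutive epochs.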

opt = torch.optim.SGD(net.parameters(), lr=1)
lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer=opt, step_size=30, gamma=0.1)

for epoch in range(1000):
    train(...)
    lr_scheduler.step()  # step once per epoch, after the optimizer updates
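To see the schedule that `StepLR` produces, the learning rate can be recorded epoch by epoch. In this sketch a bare `opt.step()` stands in for the real training epoch, and the toy model is an assumption; only the scheduler arguments match the snippet above.

```python
import torch
from torch import nn

net = nn.Linear(4, 2)  # toy model (assumption)
opt = torch.optim.SGD(net.parameters(), lr=1.0)
scheduler = torch.optim.lr_scheduler.StepLR(opt, step_size=30, gamma=0.1)

lrs = []
for epoch in range(90):
    lrs.append(opt.param_groups[0]["lr"])  # rate used during this epoch
    opt.step()        # stands in for the real training epoch
    scheduler.step()  # advance the schedule after the optimizer update

print(lrs[0], lrs[30], lrs[60])
```

The rate is constant within each block of 30 epochs and shrinks by a factor of 10 at epochs 30 and 60.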

3. Early Stopping
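The original post gives no code for this section. One common way to implement early stopping is to track the best validation loss and stop once it has not improved for a fixed number of epochs. The sketch below follows that idea; the `EarlyStopping` class, its `patience` and `min_delta` parameters, and the list of validation losses are all hypothetical and written only for illustration.

```python
class EarlyStopping:
    """Signal a stop when the loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss      # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1      # no improvement this epoch
        return self.bad_epochs >= self.patience


# Hypothetical validation losses standing in for a real validation loop.
val_losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.68, 0.50]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(val_losses):
    if stopper.step(val_loss):
        stopped_at = epoch
        break

print(stopped_at, stopper.best)
```

In a real training loop the model weights would typically be saved whenever `best` improves, so that the best checkpoint can be restored after stopping.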

4. Dropout

model = nn.Sequential(
    nn.Linear(256, 128),
    nn.Dropout(p=0.5),
    nn.ReLU(),
)
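Dropout behaves differently in training and evaluation mode, so the model should be switched with `train()` and `eval()` at the right moments. The sketch below shows the difference on a standalone `nn.Dropout` layer; the all-ones input tensor is an assumption made to keep the effect easy to read.

```python
import torch
from torch import nn

torch.manual_seed(0)

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()          # training mode: elements are zeroed at random
out_train = drop(x)   # survivors are scaled by 1 / (1 - p) = 2

drop.eval()           # evaluation mode: dropout is a no-op
out_eval = drop(x)

print(out_train.unique(), torch.equal(out_eval, x))
```

Calling `model.eval()` before validation or inference disables dropout for every `nn.Dropout` layer inside the model, and `model.train()` turns it back on.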

by CyrusMay 2022 07 03

边栏推荐

- Quick analysis of Intranet penetration helps the foreign trade management industry cope with a variety of challenges

- Complex network modeling (I)

- buureservewp(2)

- 数据库实时同步利器——CDC(变化数据捕获技术)

- Blob object introduction

- eBPF Cilium实战(1) - 基于团队的网络隔离

- 电池、电机技术受到很大关注,反而电控技术却很少被提及?

- Register of assembly language by Wang Shuang

- JS copy picture to clipboard read clipboard

- 在Rainbond中一键部署高可用 EMQX 集群

猜你喜欢

随机推荐

[untitled]

Qinglong panel -- Huahua reading

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after conne

拓维信息使用 Rainbond 的云原生落地实践

JSON data flattening pd json_ normalize

JS cross browser parsing XML application

面试题(CAS)

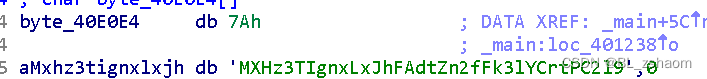

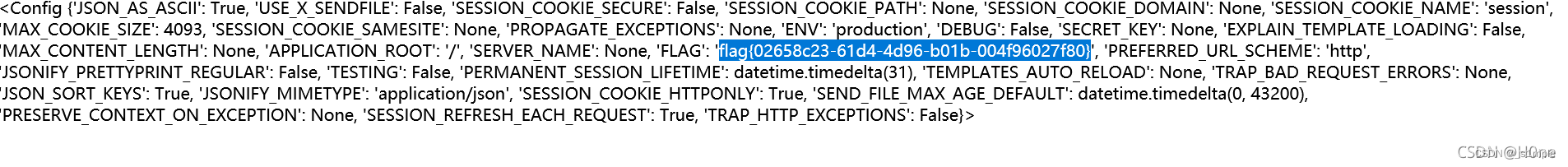

Bugku CTF daily one question chessboard with only black chess

Call pytorch API to complete linear regression

力扣(LeetCode)187. 重复的DNA序列(2022.07.06)

Example of file segmentation

Paddlepaddle 29 dynamically modify the network structure without model definition code (relu changes to prelu, conv2d changes to conv3d, 2D semantic segmentation model changes to 3D semantic segmentat

解析创新教育体系中的创客教育

LeetCode简单题之字符串中最大的 3 位相同数字

OpenVSCode云端IDE加入Rainbond一体化开发体系

Implementation of replacement function of shell script

Use of JMeter

ROS bridge notes (05) - Carla_ ackermann_ Control function package (convert Ackermann messages into carlaegovehiclecontrol messages)

eBPF Cilium实战(1) - 基于团队的网络隔离

Merging binary trees by recursion