
[Binocular Vision] Binocular Rectification

2022-07-02 07:48:00 【Silent clouds】

1. Binocular calibration

Stereo rectification needs the intrinsic parameters of both cameras and the rotation and translation between them. These are obtained by binocular (stereo) calibration. For the calibration procedure, see:

https://blog.csdn.net/qq_38236355/article/details/89280633

https://blog.csdn.net/qingfengxiaosong/article/details/109897053

or search online for a stereo calibration tutorial.

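MATLAB's stereo calibration tool (the Stereo Camera Calibrator app) is recommended. Select the 3-coefficient radial distortion option: the extraction script reads `RadialDistortion(3)`, which only exists when 3 radial coefficients are estimated.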
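For reference, the coefficients being calibrated belong to the radial plus tangential (Brown-Conrady) lens model, which MATLAB and OpenCV both use: k1, k2, k3 are radial terms and p1, p2 are tangential terms. The sketch below applies that model to one point. It assumes normalized camera coordinates and zero skew. The function names `distort_point` and `project` are illustrative and are not part of either library. Choosing "2 coefficients" just means k3 is fixed at 0.

```python
def distort_point(x, y, k1, k2, p1, p2, k3):
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion
    to a point (x, y) in normalized camera coordinates (x = X/Z, y = Y/Z)."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return x_d, y_d


def project(x, y, fx, fy, cx, cy, dist):
    """Distort a normalized point, then map it to pixels with a zero-skew intrinsic matrix.
    dist is ordered like OpenCV: (k1, k2, p1, p2, k3)."""
    k1, k2, p1, p2, k3 = dist
    x_d, y_d = distort_point(x, y, k1, k2, p1, p2, k3)
    return fx * x_d + cx, fy * y_d + cy


if __name__ == "__main__":
    # Left-camera values from this article (p1 = p2 = 0 there).
    dist = (-0.261575534517449, 0.0622298171820726, 0.0, 0.0, -0.00638628534161724)
    print(project(0.5, 0.5, 443.305413261701, 445.685585080218,
                  473.481578105186, 481.627083907456, dist))
```

Because k1 is negative here, points far from the image center are pulled inward (barrel distortion). This is the fisheye-like effect that the rectification maps later undo.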

After exporting the calibration result to the MATLAB workspace as `stereoParams`, use the following script to write the parameters to `out.xlsx`:

```matlab
% Write the stereoParams exported by the calibrator to out.xlsx
% (labels in column A, values starting in column B).
rowName = cell(1,10);
rowName{1,1} = 'Translation vector';
rowName{1,2} = 'Rotation matrix';
rowName{1,3} = 'Camera 1 intrinsic matrix';
rowName{1,4} = 'Camera 1 radial distortion';
rowName{1,5} = 'Camera 1 tangential distortion';
rowName{1,6} = 'Camera 2 intrinsic matrix';
rowName{1,7} = 'Camera 2 radial distortion';
rowName{1,8} = 'Camera 2 tangential distortion';
rowName{1,9} = 'Camera 1 distortion vector';
rowName{1,10} = 'Camera 2 distortion vector';

xlswrite('out.xlsx',rowName(1,1),1,'A1');
xlswrite('out.xlsx',rowName(1,2),1,'A2');
xlswrite('out.xlsx',rowName(1,3),1,'A5');
xlswrite('out.xlsx',rowName(1,4),1,'A8');
xlswrite('out.xlsx',rowName(1,5),1,'A9');
xlswrite('out.xlsx',rowName(1,6),1,'A10');
xlswrite('out.xlsx',rowName(1,7),1,'A13');
xlswrite('out.xlsx',rowName(1,8),1,'A14');
xlswrite('out.xlsx',rowName(1,9),1,'A15');
xlswrite('out.xlsx',rowName(1,10),1,'A16');

% MATLAB stores the rotation and intrinsic matrices transposed relative to OpenCV,
% so they are transposed (.') before writing.
xlswrite('out.xlsx',stereoParams.TranslationOfCamera2,1,'B1');                    % translation vector
xlswrite('out.xlsx',stereoParams.RotationOfCamera2.',1,'B2');                     % rotation matrix
xlswrite('out.xlsx',stereoParams.CameraParameters1.IntrinsicMatrix.',1,'B5');     % camera 1 intrinsic matrix
xlswrite('out.xlsx',stereoParams.CameraParameters1.RadialDistortion,1,'B8');      % camera 1 k1 k2 k3 (positions 1,2,5 of the OpenCV vector)
xlswrite('out.xlsx',stereoParams.CameraParameters1.TangentialDistortion,1,'B9');  % camera 1 p1 p2 (positions 3,4)
xlswrite('out.xlsx',stereoParams.CameraParameters2.IntrinsicMatrix.',1,'B10');    % camera 2 intrinsic matrix
xlswrite('out.xlsx',stereoParams.CameraParameters2.RadialDistortion,1,'B13');     % camera 2 k1 k2 k3 (positions 1,2,5)
xlswrite('out.xlsx',stereoParams.CameraParameters2.TangentialDistortion,1,'B14'); % camera 2 p1 p2 (positions 3,4)

% Full distortion vectors in OpenCV order: k1 k2 p1 p2 k3
xlswrite('out.xlsx',[stereoParams.CameraParameters1.RadialDistortion(1:2), stereoParams.CameraParameters1.TangentialDistortion, ...
    stereoParams.CameraParameters1.RadialDistortion(3)],1,'B15'); % camera 1 distortion vector
xlswrite('out.xlsx',[stereoParams.CameraParameters2.RadialDistortion(1:2), stereoParams.CameraParameters2.TangentialDistortion, ...
    stereoParams.CameraParameters2.RadialDistortion(3)],1,'B16'); % camera 2 distortion vector
```
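The `.'` transposes in the script are needed because, as we understand the legacy MATLAB convention, `stereoParameters` uses row vectors. A point moves from camera 1 to camera 2 as `x2 = x1 * R + t`. OpenCV uses column vectors instead: `x2 = R @ x1 + t`. The sketch below converts one camera's MATLAB-layout values into the arrays OpenCV expects and checks that the two rotation conventions agree once `R_opencv = R_matlab.T`. All helper names are hypothetical, and only numpy is assumed.

```python
import numpy as np


def matlab_to_opencv(intrinsic_matlab, radial, tangential):
    """Convert one camera's MATLAB-layout parameters to OpenCV arrays.

    intrinsic_matlab: 3x3 IntrinsicMatrix as stored by MATLAB (the transpose of K)
    radial: [k1 k2] or [k1 k2 k3]; tangential: [p1 p2]
    """
    K = np.asarray(intrinsic_matlab, dtype=float).T
    k = list(radial) + [0.0] * (3 - len(radial))  # k3 = 0 when only 2 coefficients were estimated
    p1, p2 = tangential
    dist = np.array([[k[0], k[1], p1, p2, k[2]]])  # OpenCV order: k1 k2 p1 p2 k3
    return K, dist


def camera1_to_camera2_matlab(x1, R_matlab, t):
    """Row-vector convention (legacy MATLAB): x2 = x1 * R + t."""
    return np.asarray(x1, dtype=float) @ np.asarray(R_matlab, dtype=float) + t


def camera1_to_camera2_opencv(x1, R, t):
    """Column-vector convention (OpenCV): x2 = R @ x1 + t."""
    return np.asarray(R, dtype=float) @ np.asarray(x1, dtype=float) + t


if __name__ == "__main__":
    a = np.deg2rad(10.0)
    R_cv = np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a), np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])
    t = np.array([-71.0, -0.5, -0.3])
    x1 = np.array([100.0, 20.0, 1000.0])
    # Both conventions give the same point when R_matlab = R_cv.T
    print(np.allclose(camera1_to_camera2_matlab(x1, R_cv.T, t),
                      camera1_to_camera2_opencv(x1, R_cv, t)))
```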

2. Python binocular rectification

Create a new Python script, save it as `camera_config.py` (the test script below imports it under that name), and enter the following code:

```python
import cv2
import numpy as np

# Left camera intrinsic matrix
left_camera_matrix = np.array([[443.305413261701, 0., 473.481578105186],
                               [0., 445.685585080218, 481.627083907456],
                               [0., 0., 1.]])
# Left camera distortion coefficients: k1 k2 p1 p2 k3
left_distortion = np.array([[-0.261575534517449, 0.0622298171820726, 0., 0., -0.00638628534161724]])

# Right camera intrinsic matrix
right_camera_matrix = np.array([[441.452616156177, 0., 484.276702473006],
                                [0., 444.350924943458, 465.054536507021],
                                [0., 0., 1.]])
# Right camera distortion coefficients: k1 k2 p1 p2 k3
right_distortion = np.array([[-0.257761221642368, 0.0592089672793365, 0., 0., -0.00576090991058531]])

# Rotation of camera 2 relative to camera 1 (already transposed by the MATLAB script)
R = np.array([[0.999837210893742, -0.00477934325693493, 0.017398551383822],
              [0.00490062605211919, 0.999963944810228, -0.0069349076319899],
              [-0.0173647797717217, 0.00701904249875521, 0.999824583347439]])

# Translation of camera 2 relative to camera 1 (same unit as the checkerboard square size)
T = np.array([-71.0439056359403, -0.474467959947789, -0.27989811881883])

size = (960, 960)  # size of ONE camera image: (width, height)

# Stereo rectification
R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(
    left_camera_matrix, left_distortion,
    right_camera_matrix, right_distortion,
    size, R, T)

# Undistortion + rectification lookup maps, later applied with cv2.remap
left_map1, left_map2 = cv2.initUndistortRectifyMap(
    left_camera_matrix, left_distortion, R1, P1, size, cv2.CV_16SC2)
right_map1, right_map2 = cv2.initUndistortRectifyMap(
    right_camera_matrix, right_distortion, R2, P2, size, cv2.CV_16SC2)
```
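`cv2.stereoRectify` also returns the rectified projection matrices and the disparity-to-depth matrix `Q`, which the script above does not use yet. According to the OpenCV documentation, for a horizontal pair P2 has the form `[[f, 0, cx2, Tx*f], [0, f, cy, 0], [0, 0, 1, 0]]`. That means the rectified baseline can be read off as a quick sanity check. `Q` maps a pixel plus its disparity to a 3-D point. The helper names below are hypothetical, and only numpy is assumed.

```python
import numpy as np


def rectified_baseline(P2):
    """Baseline of a horizontally rectified pair, read from P2 = [[f, 0, cx2, Tx*f], ...].
    Returns -Tx, which is positive when camera 2 lies to the right (Tx < 0)."""
    P2 = np.asarray(P2, dtype=float)
    return -P2[0, 3] / P2[0, 0]


def disparity_to_point(x, y, d, Q):
    """Reproject one pixel (x, y) with disparity d to 3-D using the 4x4 Q matrix
    (homogeneous result divided by W)."""
    X, Y, Z, W = np.asarray(Q, dtype=float) @ np.array([x, y, d, 1.0])
    return np.array([X, Y, Z]) / W
```

With the values in `camera_config.py`, the magnitude of `rectified_baseline(camera_config.P2)` should be close to `np.linalg.norm(camera_config.T)`, since rectification only rotates the cameras. For a whole disparity map, OpenCV's `cv2.reprojectImageTo3D(disparity, Q)` applies the same Q per pixel.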

Replace these values with the parameters from your own calibration.
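Instead of copying numbers by hand, the values could be read straight from `out.xlsx`. The sketch below assumes the exact cell layout produced by the MATLAB script (labels in column A, values from column B, rows 1 to 16). It also assumes that pandas and openpyxl are installed. `parse_out_grid` and `load_out_xlsx` are hypothetical helper names. The rotation and intrinsic matrices in the sheet were already transposed by MATLAB, so they are used as-is.

```python
import numpy as np


def parse_out_grid(grid):
    """Slice the out.xlsx layout (0-based rows; column B = index 1) into OpenCV arrays."""
    g = np.asarray(grid, dtype=object)

    def block(r0, r1, c0, c1):
        return g[r0:r1, c0:c1].astype(float)

    return {
        "T": block(0, 1, 1, 4).ravel(),            # B1:D1
        "R": block(1, 4, 1, 4),                     # B2:D4
        "left_camera_matrix": block(4, 7, 1, 4),    # B5:D7
        "right_camera_matrix": block(9, 12, 1, 4),  # B10:D12
        "left_distortion": block(14, 15, 1, 6),     # B15:F15 -> shape (1, 5)
        "right_distortion": block(15, 16, 1, 6),    # B16:F16 -> shape (1, 5)
    }


def load_out_xlsx(path="out.xlsx"):
    """Read the whole first sheet without a header row, then slice it."""
    import pandas as pd  # reading .xlsx files also requires openpyxl

    sheet = pd.read_excel(path, header=None)
    return parse_out_grid(sheet.to_numpy())
```

In `camera_config.py`, the hard-coded arrays could then be replaced with, for example, `params = load_out_xlsx("out.xlsx")` and `R = params["R"]`.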

Next, write a script to check the rectification result:

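```python
import cv2
import numpy as np

import camera_config  # the rectification script above, saved as camera_config.py

w = 1920  # width of the side-by-side stereo frame (two 960-pixel images)
h = 960

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, w)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, h)

key = -1
ww = int(w / 2)       # width of one camera image
jiange = int(h / 10)  # spacing between the horizontal guide lines

while key != 27:  # Esc quits
    ret, img = cap.read()
    if not ret:
        break  # no frame: stop instead of spinning forever

    imgLeft = img[:, :ww]
    imgRight = img[:, ww:w]

    left_remap = cv2.remap(imgLeft, camera_config.left_map1, camera_config.left_map2, cv2.INTER_LINEAR)
    right_remap = cv2.remap(imgRight, camera_config.right_map1, camera_config.right_map2, cv2.INTER_LINEAR)

    out = np.hstack([left_remap, right_remap])
    # After rectification, corresponding points should lie on the same horizontal line
    for i in range(10):
        cv2.line(out, (0, jiange * i), (w, jiange * i), (255, 0, 0), 2)

    cv2.imshow("frame", out)
    key = cv2.waitKey(10) & 0xFF

cap.release()
cv2.destroyAllWindows()
```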
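The guide lines give a visual check. To put a number on it, you can match features between the two rectified images and measure how far apart the matched points are vertically. In a well-rectified pair this should be close to zero. The sketch below uses OpenCV's ORB detector with a brute-force Hamming matcher. That choice of matcher is our assumption, not part of the original method. `match_orb` and `row_alignment_error` are hypothetical helper names. The median is reported next to the mean because a few wrong matches can inflate the mean.

```python
import numpy as np


def match_orb(left_gray, right_gray, n_features=1000):
    """Match ORB features between the rectified left and right images (needs OpenCV)."""
    import cv2  # imported here so row_alignment_error works without OpenCV

    orb = cv2.ORB_create(nfeatures=n_features)
    kp1, des1 = orb.detectAndCompute(left_gray, None)
    kp2, des2 = orb.detectAndCompute(right_gray, None)
    if des1 is None or des2 is None:
        return [], []
    matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    matches = matcher.match(des1, des2)
    pts_left = [kp1[m.queryIdx].pt for m in matches]
    pts_right = [kp2[m.trainIdx].pt for m in matches]
    return pts_left, pts_right


def row_alignment_error(pts_left, pts_right):
    """Median and mean |y_left - y_right| in pixels over matched point pairs."""
    pl = np.asarray(pts_left, dtype=float).reshape(-1, 2)
    pr = np.asarray(pts_right, dtype=float).reshape(-1, 2)
    dy = np.abs(pl[:, 1] - pr[:, 1])
    if dy.size == 0:
        return float("nan"), float("nan")
    return float(np.median(dy)), float(np.mean(dy))
```

Inside the test loop, this could be called as `row_alignment_error(*match_orb(cv2.cvtColor(left_remap, cv2.COLOR_BGR2GRAY), cv2.cvtColor(right_remap, cv2.COLOR_BGR2GRAY)))`. Running the same check on `imgLeft` and `imgRight` shows the error before rectification for comparison.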

The result looks like this:

Before rectification (a low-quality camera with a strong fisheye effect, not suitable for practical use):

After rectification:

边栏推荐

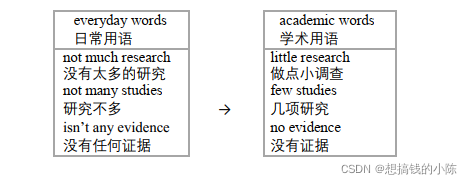

- 【Mixup】《Mixup:Beyond Empirical Risk Minimization》

- 【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

- 【Paper Reading】

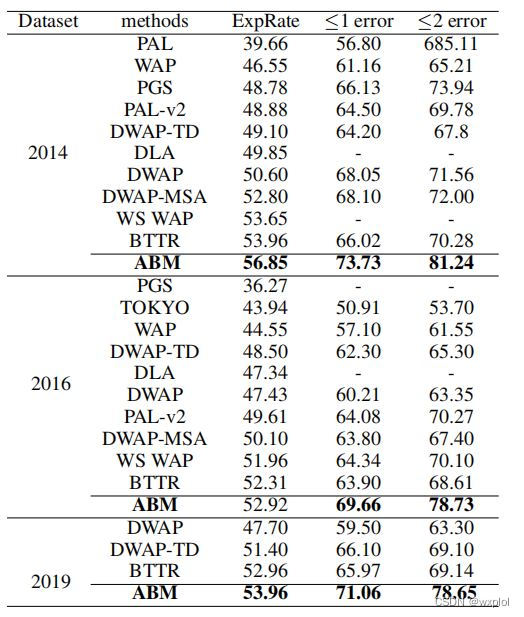

- Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

- 【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

- How do vision transformer work?【论文解读】

- PointNet理解(PointNet实现第4步)

- 【FastDepth】《FastDepth:Fast Monocular Depth Estimation on Embedded Systems》

- 基于pytorch的YOLOv5单张图片检测实现

- [CVPR‘22 Oral2] TAN: Temporal Alignment Networks for Long-term Video

猜你喜欢

【Random Erasing】《Random Erasing Data Augmentation》

【双目视觉】双目立体匹配

Faster-ILOD、maskrcnn_ Benchmark training coco data set and problem summary

![[mixup] mixup: Beyond Imperial Risk Minimization](/img/14/8d6a76b79a2317fa619e6b7bf87f88.png)

[mixup] mixup: Beyond Imperial Risk Minimization

【FastDepth】《FastDepth:Fast Monocular Depth Estimation on Embedded Systems》

论文写作tip2

ABM thesis translation

Use Baidu network disk to upload data to the server

Mmdetection trains its own data set -- export coco format of cvat annotation file and related operations

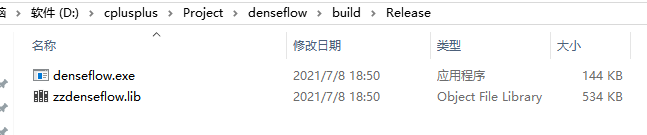

win10+vs2017+denseflow编译

随机推荐

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

[Sparse to Dense] Sparse to Dense: Depth Prediction from Sparse Depth samples and a Single Image

点云数据理解(PointNet实现第3步)

Memory model of program

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

Record of problems in the construction process of IOD and detectron2

Alpha Beta Pruning in Adversarial Search

【MagNet】《Progressive Semantic Segmentation》

MMDetection安装问题

【Random Erasing】《Random Erasing Data Augmentation》

Apple added the first iPad with lightning interface to the list of retro products

程序的执行

How to turn on night mode on laptop

【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

常见的机器学习相关评价指标

程序的内存模型

conda常用命令

【Paper Reading】

MoCO ——Momentum Contrast for Unsupervised Visual Representation Learning

Proof and understanding of pointnet principle