
Object detection for long-tailed distributions -- Balanced Group Softmax

2022-07-02 07:57:00 【MezereonXP】

Handling long-tailed object detection: Balanced Group Softmax


This post introduces a CVPR 2020 paper titled "Overcoming Classifier Imbalance for Long-tail Object Detection with Balanced Group Softmax". It addresses the problem of long-tailed data distributions in object detection, and the proposed solution is quite simple.

Long-tailed data

First, long-tailed data is widespread. Take the COCO and LVIS datasets as two examples, as shown in the figure below:

The horizontal axis is the category index (categories sorted by sample count), and the vertical axis is the number of samples in the corresponding category.

Both datasets show an obvious long-tailed distribution: a few head categories account for most of the samples, while many tail categories have very few.
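To make the shape of such a curve concrete, here is a minimal sketch that counts instances per category and sorts them from most to least frequent, which is the quantity the plot shows. The category names and counts are made up for illustration and do not come from COCO or LVIS.

```python
from collections import Counter


def sorted_category_counts(labels):
    """Return (category, count) pairs sorted from most to least frequent."""
    counts = Counter(labels)
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=True)


# Toy annotation list (made-up data): one entry per annotated instance.
toy_labels = ["person"] * 1200 + ["car"] * 300 + ["kite"] * 25 + ["unicycle"] * 3

ranked = sorted_category_counts(toy_labels)
for index, (name, count) in enumerate(ranked):
    # index plays the role of the horizontal axis, count the vertical axis
    print(index, name, count)
```

Plotting `count` against `index` for a real dataset would give the kind of rapidly decaying curve described above.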

Previous methods for handling long-tailed distributions

Here are some related works, grouped by category:

- Data re-sampling

  - Over-sampling the tail data: Borderline-SMOTE: a new over-sampling method in imbalanced data sets learning

  - Under-sampling the head data: class imbalance, and cost sensitivity: why under-sampling beats over-sampling

  - Class-balanced sampling: Exploring the limits of weakly supervised pretraining

- Cost-sensitive learning

  - Adjusting the loss so that different categories receive different weights

These methods are usually sensitive to hyperparameters, and they perform poorly when transferred to detection frameworks, because the classification task and the detection task differ.
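As a rough illustration of the two families, the sketch below computes inverse-frequency loss weights (cost-sensitive learning) and per-instance sampling probabilities that draw every category equally often (class-balanced sampling). These are generic textbook variants chosen for illustration, not the specific procedures of the works cited above; the function names are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-category loss weights proportional to 1 / count, scaled to mean 1."""
    counts = Counter(labels)
    raw = {category: 1.0 / n for category, n in counts.items()}
    mean = sum(raw.values()) / len(raw)
    return {category: w / mean for category, w in raw.items()}


def class_balanced_sample_probs(labels):
    """Per-instance sampling probabilities giving each category equal total mass."""
    counts = Counter(labels)
    num_categories = len(counts)
    return [1.0 / (num_categories * counts[y]) for y in labels]


# Toy data (made up): 8 head instances and 2 tail instances.
toy_labels = ["head"] * 8 + ["tail"] * 2
weights = inverse_frequency_weights(toy_labels)
probs = class_balanced_sample_probs(toy_labels)
print(weights)
print(sum(probs[:8]), sum(probs[8:]))
```

In both cases the strength of the correction depends directly on the count statistics, which gives some intuition for why such schemes need careful tuning.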

Balanced Group Softmax

Here is the overall framework of the algorithm:

As shown in the figure above, in the training phase the categories are divided into groups, a separate softmax is computed within each group, and the cross-entropy loss is then computed for each group.

For grouping, the paper uses 0, 10, 100, 1000, and +inf as the split points on the number of training samples per category.
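A minimal sketch of this binning step follows. It assumes half-open bins of the form [lower, upper) and 1-based group indices, so that group 1 holds the rarest categories; both conventions, as well as the name `assign_group`, are assumptions made for illustration.

```python
from bisect import bisect_right

# Split points quoted in the text; +inf closes the last bin.
BOUNDARIES = [0, 10, 100, 1000, float("inf")]


def assign_group(instance_count, boundaries=BOUNDARIES):
    """Return the 1-based index n of the bin [boundaries[n-1], boundaries[n])."""
    if instance_count < boundaries[0]:
        raise ValueError("instance count must be non-negative")
    # bisect_right counts how many boundaries are <= instance_count
    return bisect_right(boundaries, instance_count)


# Toy per-category instance counts (made-up numbers).
toy_counts = {"unicycle": 3, "kite": 25, "car": 300, "person": 1200}
groups = {name: assign_group(count) for name, count in toy_counts.items()}
print(groups)
```

With these split points every category lands in one of four groups, from fewer than 10 training samples up to 1000 or more.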

An extra "other" category is added to each group, so that when the target category does not belong to a group, the ground truth for that group is set to other.
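The target construction can be sketched as follows. Placing the extra slot last in each group and the helper name `build_group_targets` are illustrative assumptions, not details taken from the paper.

```python
OTHER = "other"


def build_group_targets(groups, target_category):
    """For each group, return the index of its ground-truth slot.

    `groups` is a list of category-name lists. An extra "other" slot is
    appended to every group (last position, by assumption) and becomes the
    target whenever `target_category` is not a member of that group.
    """
    targets = []
    for members in groups:
        slots = list(members) + [OTHER]
        label = target_category if target_category in members else OTHER
        targets.append(slots.index(label))
    return targets


# Toy grouping (made-up categories): three groups of different sizes.
toy_groups = [["unicycle", "kite"], ["car"], ["person"]]
targets = build_group_targets(toy_groups, "car")
print(targets)
```

For a sample labeled "car", only the second group gets a real category as its target; the other two groups are trained to predict other.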

The final loss takes the form:

$$\mathcal{L}_k=-\sum_{n=0}^{N}\sum_{i\in \mathcal{G}_n}y_i^n\log (p_i^n)$$

where $N$ is the number of groups, $\mathcal{G}_n$ is the set of categories in the $n$-th group, $p_i^n$ is the probability output by the model, and $y_i^n$ is the label.
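The loss can be sketched in plain Python for a single sample. The sketch assumes one-hot labels within each group, so the inner sum reduces to the negative log-probability of the target slot; `group_logits` and `group_targets` are hypothetical inputs holding, for each group, the raw scores (including the other slot) and the index of the ground-truth slot.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def group_softmax_loss(group_logits, group_targets):
    """Sum of per-group cross-entropy losses for one sample.

    With one-hot labels y, -sum_i y_i * log(p_i) equals -log(p_target),
    so each group contributes the negative log-probability of its target.
    """
    loss = 0.0
    for logits, target in zip(group_logits, group_targets):
        probs = softmax(logits)  # softmax is computed inside the group only
        loss -= math.log(probs[target])
    return loss


# Toy example (made-up scores): three groups with 3, 2 and 2 slots.
toy_logits = [[0.0, 0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
toy_targets = [2, 0, 1]
loss = group_softmax_loss(toy_logits, toy_targets)
print(loss)
```

Because each softmax is normalized only over the slots of its own group, the scores of categories in one group do not compete directly with those of categories in another group.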

Evaluation

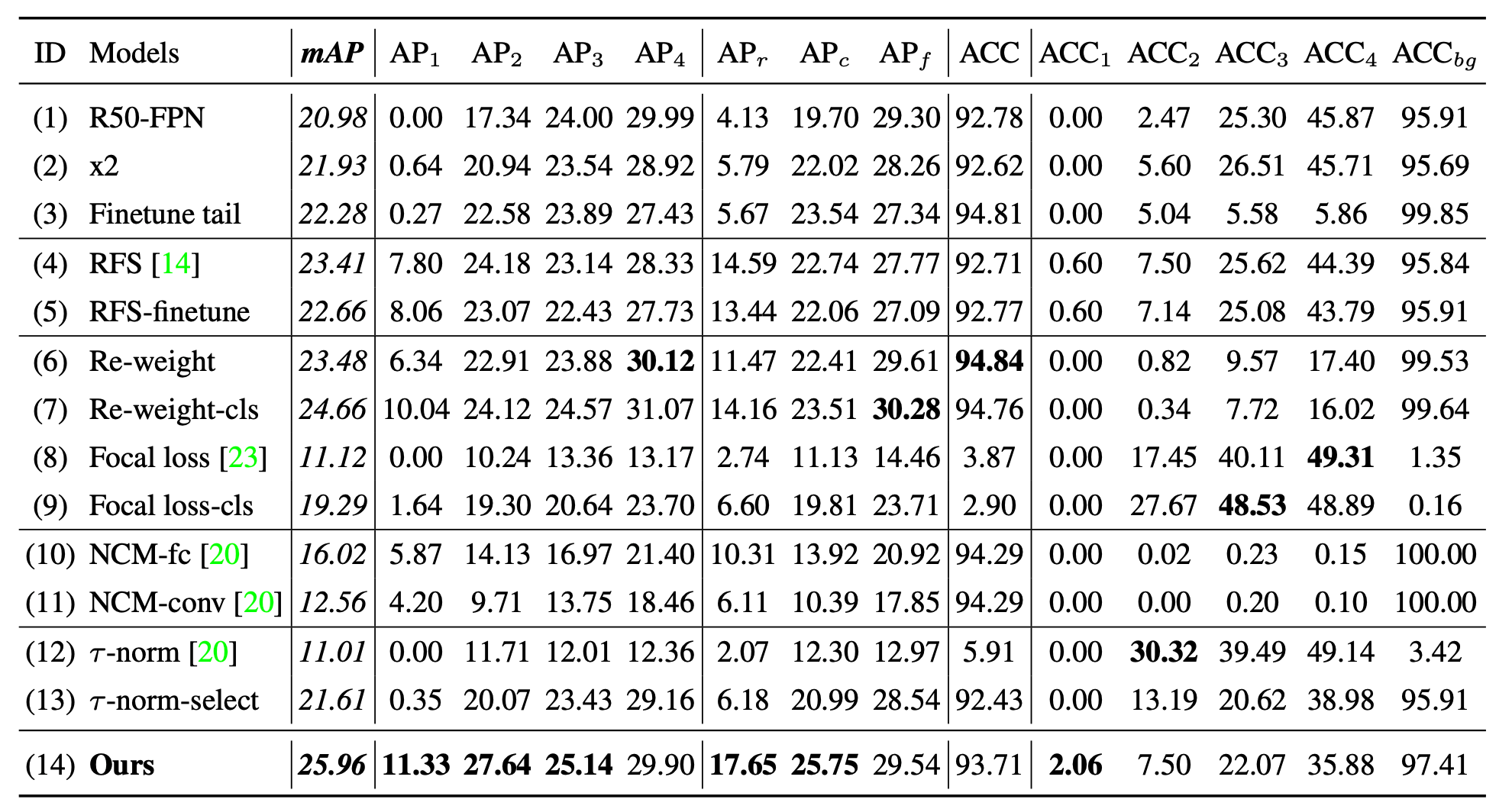

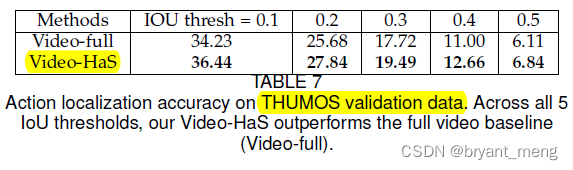

Here is an accuracy table for a comprehensive comparison:

The subscript of AP corresponds to the index of the group. On the tail categories, that is, $AP_1$ and $ACC_1$, the method reaches state-of-the-art performance.

边栏推荐

- open3d学习笔记二【文件读写】

- 【学习笔记】反向误差传播之数值微分

- How to clean up logs on notebook computers to improve the response speed of web pages

- 程序的内存模型

- [in depth learning series (8)]: principles of transform and actual combat

- 【MnasNet】《MnasNet:Platform-Aware Neural Architecture Search for Mobile》

- Execution of procedures

- Faster-ILOD、maskrcnn_ Benchmark trains its own VOC data set and problem summary

- I'll show you why you don't need to log in every time you use Taobao, jd.com, etc?

- 【MobileNet V3】《Searching for MobileNetV3》

猜你喜欢

Common CNN network innovations

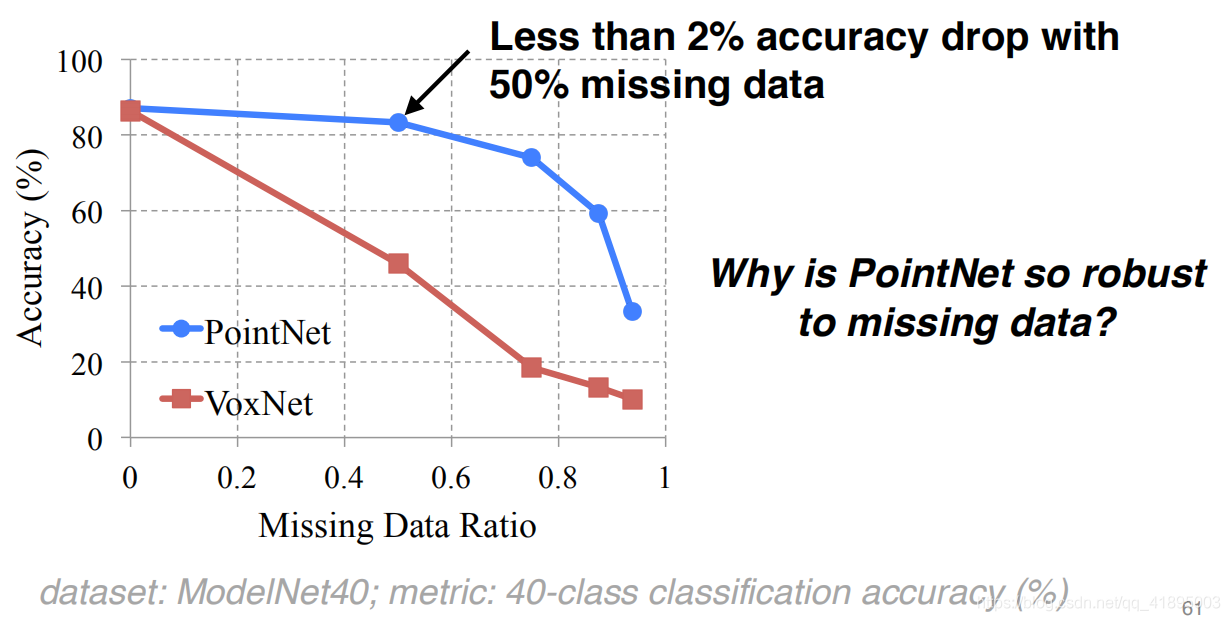

Pointnet understanding (step 4 of pointnet Implementation)

【Programming】

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

Network metering - transport layer

label propagation 标签传播

Hystrix dashboard cannot find hystrix Stream solution

【Random Erasing】《Random Erasing Data Augmentation》

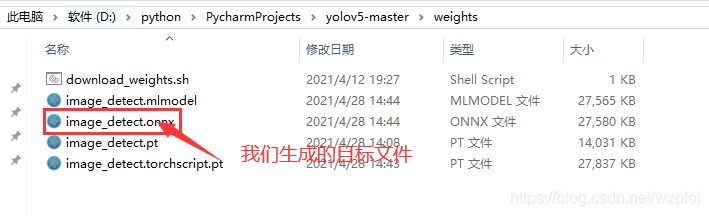

Implementation of yolov5 single image detection based on onnxruntime

Feature Engineering: summary of common feature transformation methods

随机推荐

Look for we media materials from four aspects to ensure your creative inspiration

【学习笔记】Matlab自编高斯平滑器+Sobel算子求导

超时停靠视频生成

联邦学习下的数据逆向攻击 -- GradInversion

针对语义分割的真实世界的对抗样本攻击

【Paper Reading】

【Random Erasing】《Random Erasing Data Augmentation》

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

What if the laptop task manager is gray and unavailable

Handwritten call, apply, bind

【Mixup】《Mixup:Beyond Empirical Risk Minimization》

[mixup] mixup: Beyond Imperial Risk Minimization

服务器的内网可以访问,外网却不能访问的问题

Open3D学习笔记一【初窥门径,文件读取】

【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》

Traditional target detection notes 1__ Viola Jones

【Random Erasing】《Random Erasing Data Augmentation》

Win10 solves the problem that Internet Explorer cannot be installed

Label propagation