How gensim freezes some word vectors for incremental training

2022-07-02 07:52:00 【MezereonXP】

Gensim is a Python library for topic modeling and for generating word vectors.

For NLP preprocessing tasks, it lets you generate word vectors easily and quickly.

For example, with Word2Vec we can generate word vectors in a few lines of code:

```python
import gensim
from numpy import float32 as REAL
import numpy as np

# Word2Vec expects a list of tokenized sentences (a list of lists of words),
# not a flat list of strings
word_list = [["I", "love", "you", "."]]

model = gensim.models.Word2Vec(sentences=word_list, vector_size=200, window=10, min_count=1, workers=4)

# Print the word vector for "I"
print(model.wv["I"])

# Save the model
model.save("w2v.out")
```
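Gensim's `Word2Vec` expects `sentences` to be an iterable of token lists. A bare string is itself iterable, so a flat list of strings would be split into single characters. The pure-Python sketch below mimics how a vocabulary scan walks the corpus (outer loop over sentences, inner loop over tokens) to show the difference. `count_tokens` is a hypothetical helper, not a gensim function.

```python
from collections import Counter


def count_tokens(sentences):
    """Count tokens the way a word2vec vocabulary scan iterates a corpus:
    outer loop over sentences, inner loop over the items of each sentence."""
    counts = Counter()
    for sentence in sentences:
        for token in sentence:
            counts[token] += 1
    return counts


flat = ["I", "love", "you", "."]        # wrong: each string is a "sentence"
nested = [["I", "love", "you", "."]]    # right: one sentence of four tokens

print(sorted(count_tokens(flat)))    # ['.', 'I', 'e', 'l', 'o', 'u', 'v', 'y']
print(sorted(count_tokens(nested)))  # ['.', 'I', 'love', 'you']
```

With the flat list, `"love"` never becomes a vocabulary entry, so `model.wv["love"]` would fail. `model.wv["I"]` only works by accident because `"I"` is a single character.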

I use Gensim to generate word vectors, and I ran into the following need: I already have a trained word-vector model, and I want to extend its vocabulary without changing the vectors of the words it already contains.

Gensim's documentation does not describe how to freeze word vectors, but its source code contains an experimental attribute that can help:

```python
# EXPERIMENTAL lockf feature; create minimal no-op lockf arrays (1 element of 1.0)
# advanced users should directly resize/adjust as desired after any vocab growth
self.wv.vectors_lockf = np.ones(1, dtype=REAL)
# 0.0 values suppress word-backprop-updates; 1.0 allows
```

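This code is in gensim's `word2vec.py`. As the comments say, each word's entry in `vectors_lockf` controls whether training updates its vector: 0.0 suppresses the updates, and 1.0 allows them.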
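As I read gensim 4.x's Cython training routines, each update to an input word vector is multiplied by that word's lock factor, which is looked up as `vectors_lockf[index % len(vectors_lockf)]`. The modulo is why the default one-element array acts as a single global factor. The toy sketch below reproduces only that scaling rule in plain Python. `apply_update` is a hypothetical helper, and the vectors and gradient are arbitrary illustrative values, not gensim's actual training loop.

```python
def apply_update(vectors, lockf, index, grad):
    """Add `grad` to vectors[index], scaled by the word's lock factor.

    The factor is looked up modulo len(lockf), so a one-element lockf
    applies the same factor to every word."""
    factor = lockf[index % len(lockf)]
    vectors[index] = [v + factor * g for v, g in zip(vectors[index], grad)]


vectors = [[0.0, 1.0], [2.0, 3.0]]
grad = [0.5, 0.5]

# Default: a one-element array of 1.0, so every word is trainable
apply_update(vectors, [1.0], 0, grad)
print(vectors[0])  # [0.5, 1.5]

# Per-word mask: word 0 frozen (0.0), word 1 trainable (1.0)
lockf = [0.0, 1.0]
apply_update(vectors, lockf, 0, grad)
apply_update(vectors, lockf, 1, grad)
print(vectors)  # [[0.5, 1.5], [2.5, 3.5]]
```

Because the factor is a plain multiplier, a per-word array is all that is needed to freeze some words and train others.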

We can therefore use `vectors_lockf` to meet our needs. Here is the corresponding code:

```python
import gensim
import numpy as np
from numpy import float32 as REAL

# Load the old word-vector model
model = gensim.models.Word2Vec.load("w2v.out")
old_key = set(model.wv.index_to_key)

# The new corpus, again as a list of tokenized sentences
new_word_list = [["You", "are", "a", "good", "man", "."]]
model.build_vocab(new_word_list, update=True)

# Get the size of the updated vocabulary
length = len(model.wv.index_to_key)

# Freeze all the previous words (lock factor 0.0) ...
model.wv.vectors_lockf = np.zeros(length, dtype=REAL)
# ... and let only the newly added words train (lock factor 1.0)
for i, k in enumerate(model.wv.index_to_key):
    if k not in old_key:
        model.wv.vectors_lockf[i] = 1.0

model.train(new_word_list, total_examples=model.corpus_count, epochs=model.epochs)
model.save("w2v-new.out")
```
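The mask-building loop can also be written as a small reusable helper. In the sketch below, `make_lockf_mask` is a hypothetical name, not part of gensim. It returns plain floats so it runs without gensim. In the real script you would wrap the result as `np.array(mask, dtype=REAL)` before assigning it to `model.wv.vectors_lockf`. The vocabulary list is illustrative. As far as I can tell, gensim 4.x's `build_vocab(..., update=True)` appends new words to the end of `index_to_key`, so old words keep their indices and stay aligned with their existing rows in `model.wv.vectors`.

```python
def make_lockf_mask(index_to_key, old_keys, frozen=0.0, trainable=1.0):
    """Return one lock factor per vocabulary entry, in index order:
    `frozen` for words already in the old model,
    `trainable` for words added by the vocabulary update."""
    old = set(old_keys)
    return [frozen if key in old else trainable for key in index_to_key]


# Illustrative vocabulary after build_vocab(update=True): old words first, new ones appended
index_to_key = ["I", "love", "you", ".", "You", "are", "a", "good", "man"]
old_keys = ["I", "love", "you", "."]
print(make_lockf_mask(index_to_key, old_keys))
# [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
```

Because the lock factor scales each update, passing a `frozen` value between 0 and 1 would damp the old vectors' updates rather than block them entirely.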

This freezes the vectors of the existing words, so any models we have already trained on the old word vectors are not affected.
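To check the freeze in practice, one could copy the old words' vectors before calling `train`, for example `before = {k: model.wv[k].copy() for k in old_key}`, and compare them afterwards. The `.copy()` matters because `model.wv[k]` returns a view into the vectors array. The comparison step is sketched below in plain Python. `unchanged_keys` is a hypothetical helper, and the snapshots are toy values rather than real model output. As far as I know, `vectors_lockf` only protects the input word vectors in `model.wv`. The output-layer weights (for example `syn1neg`) are still updated, which is fine when only `model.wv` is reused downstream.

```python
import math


def unchanged_keys(before, after, tol=1e-7):
    """Return the keys whose vectors match (within `tol`) in both snapshots.

    `before` and `after` map each word to a sequence of floats."""
    same = []
    for key, old_vec in before.items():
        new_vec = after[key]
        if len(old_vec) == len(new_vec) and all(
            math.isclose(a, b, rel_tol=0.0, abs_tol=tol) for a, b in zip(old_vec, new_vec)
        ):
            same.append(key)
    return same


# Toy snapshots: "I" was frozen, "man" was trainable and moved
before = {"I": [0.25, -0.5], "man": [0.0, 0.0]}
after = {"I": [0.25, -0.5], "man": [0.125, -0.0625]}
print(unchanged_keys(before, after))  # ['I']
```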

边栏推荐

- open3d学习笔记四【表面重建】

- Comparison of chat Chinese corpus (attach links to various resources)

- One book 1078: sum of fractional sequences

- label propagation 标签传播

- Mmdetection model fine tuning

- TimeCLR: A self-supervised contrastive learning framework for univariate time series representation

- ModuleNotFoundError: No module named ‘pytest‘

- 论文写作tip2

- 【Random Erasing】《Random Erasing Data Augmentation》

- 【MobileNet V3】《Searching for MobileNetV3》

猜你喜欢

【DIoU】《Distance-IoU Loss:Faster and Better Learning for Bounding Box Regression》

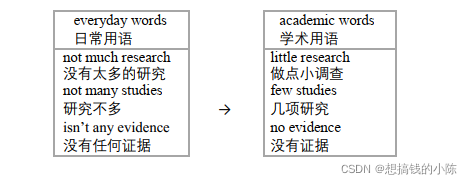

Thesis writing tip2

【Wing Loss】《Wing Loss for Robust Facial Landmark Localisation with Convolutional Neural Networks》

【Programming】

Sorting out dialectics of nature

open3d学习笔记四【表面重建】

Regular expressions in MySQL

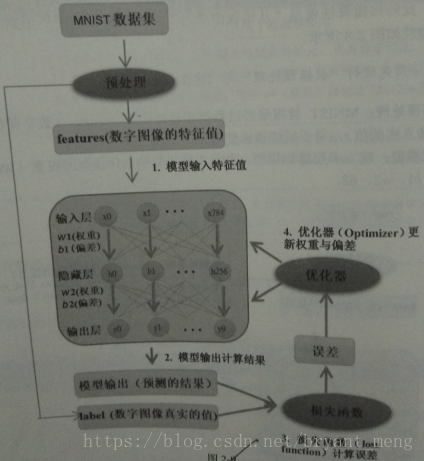

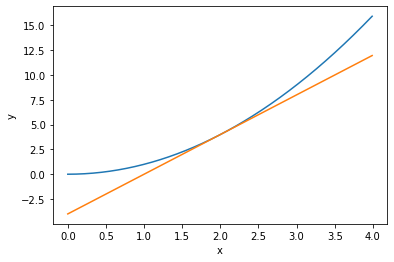

【学习笔记】反向误差传播之数值微分

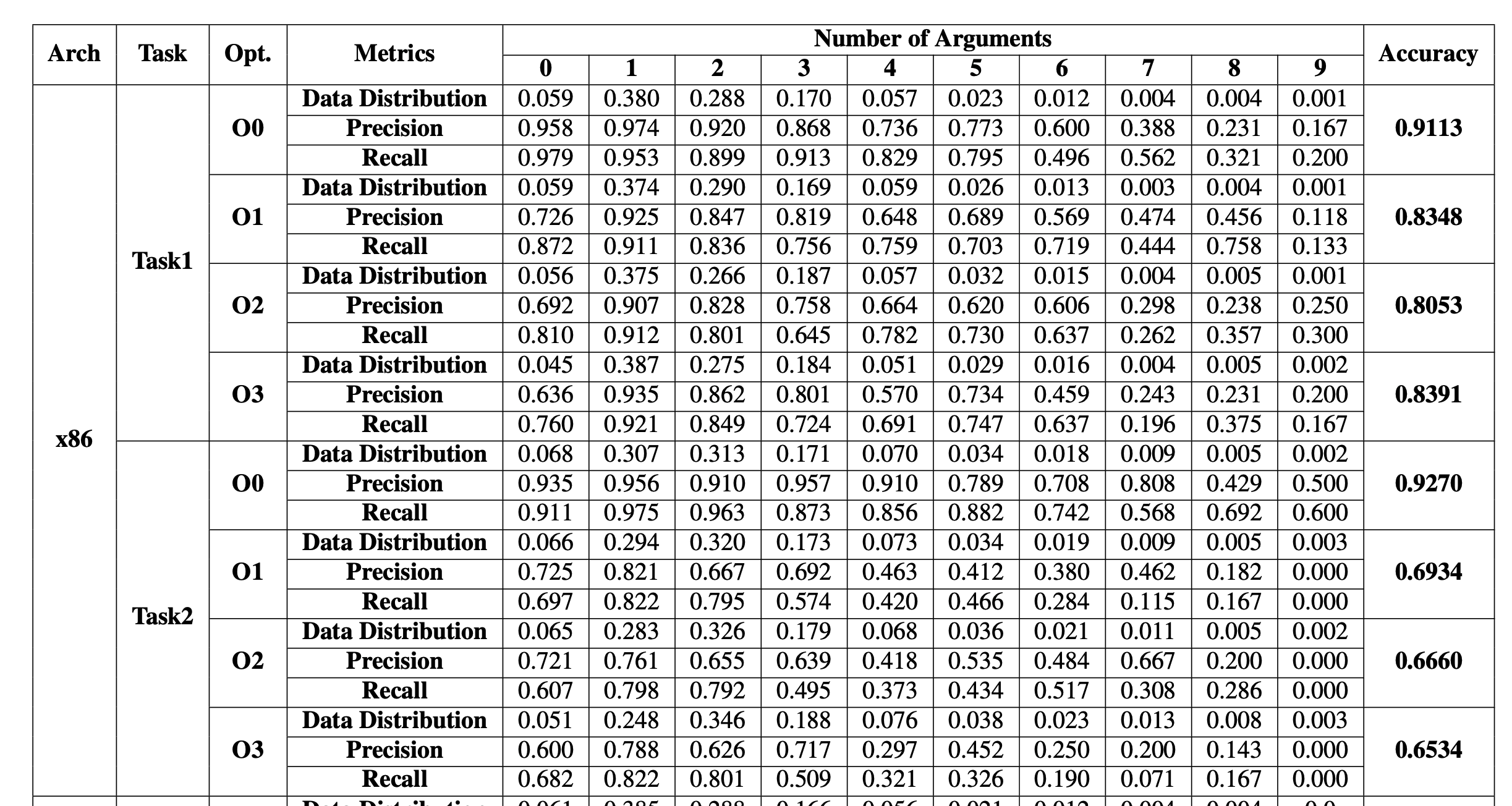

EKLAVYA -- 利用神经网络推断二进制文件中函数的参数

win10+vs2017+denseflow编译

随机推荐

【Paper Reading】

Timeout docking video generation

【多模态】CLIP模型

Open3D学习笔记一【初窥门径,文件读取】

【Batch】learning notes

【Cascade FPD】《Deep Convolutional Network Cascade for Facial Point Detection》

Conversion of numerical amount into capital figures in PHP

【Batch】learning notes

How do vision transformer work? [interpretation of the paper]

Generate random 6-bit invitation code in PHP

Faster-ILOD、maskrcnn_ Benchmark training coco data set and problem summary

C#与MySQL数据库连接

A slide with two tables will help you quickly understand the target detection

【Paper Reading】

Implementation of yolov5 single image detection based on pytorch

Calculate the total in the tree structure data in PHP

【MnasNet】《MnasNet:Platform-Aware Neural Architecture Search for Mobile》

Thesis tips

Calculate the difference in days, months, and years between two dates in PHP

Win10 solves the problem that Internet Explorer cannot be installed