Back up a TiDB cluster to a persistent volume

2022-07-07 21:25:00 【Tianxiang shop】

This document describes how to back up the data of a TiDB cluster running on Kubernetes to a persistent volume. "Persistent volume" here means any persistent volume type supported by Kubernetes. The examples in this document back up data to Network File System (NFS) storage.

The backup method described here is implemented through the CustomResourceDefinition (CRD) of TiDB Operator. Under the hood it uses the BR tool to read the cluster data and then stores the backup data on a persistent volume. BR stands for Backup & Restore. It is a command-line tool for distributed backup and restore of TiDB cluster data.

Usage scenarios

If you have the following backup requirements, consider using BR to back up TiDB cluster data to persistent volumes, either as an ad-hoc backup or as a scheduled full backup:

- The amount of data to back up is large and the backup needs to be fast

- You need the backup data directly as SST files (key-value pairs)

If you have other backup requirements, see the introduction to backup and restore to choose a suitable backup method.

Note

- BR supports only TiDB v3.1 and later versions.

- Data backed up with BR can be restored only to a TiDB database. It cannot be restored to other databases.

Ad-hoc backup

Ad-hoc backup supports both full backup and incremental backup. An ad-hoc backup is described by a `Backup` custom resource (CR) object, and TiDB Operator performs the backup according to this `Backup` object. If an error occurs during the backup, the process is not retried automatically and you need to handle the failure manually.

The example in this document backs up the data of the TiDB cluster `demo1`, which is deployed in the Kubernetes namespace `test1`. The procedure is as follows.

Step 1: Prepare the ad-hoc backup environment

Download the file backup-rbac.yaml to the server that performs the backup.

Run the following command to create the RBAC resources required for the backup in the `test1` namespace:

    kubectl apply -f backup-rbac.yaml -n test1

Confirm that the NFS server used to store the backup data is reachable from the Kubernetes cluster, and that TiKV mounts the same NFS shared directory at the same local path as the backup job. TiKV can mount NFS with a configuration like the following:

    spec:
      tikv:
        additionalVolumes:
        # Specify volume types that are supported by Kubernetes, Ref: https://kubernetes.io/docs/concepts/storage/persistent-volumes/#types-of-persistent-volumes
        - name: nfs
          nfs:
            server: 192.168.0.2
            path: /nfs
        additionalVolumeMounts:
        # This must match `name` in `additionalVolumes`
        - name: nfs
          mountPath: /nfs

If your TiDB version is earlier than v4.0.8, you also need to complete the following two tasks. If your TiDB version is v4.0.8 or later, skip them.

- Make sure that you have the `SELECT` and `UPDATE` privileges on the `mysql.tidb` table of the database to be backed up. These privileges are used to adjust the GC time before and after the backup.

- Create the `backup-demo1-tidb-secret` secret, which stores the password of the user that accesses the TiDB cluster:

      kubectl create secret generic backup-demo1-tidb-secret --from-literal=password=${password} --namespace=test1
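The version rule above (extra privileges and a secret are needed only below v4.0.8) can be checked in a script before the secret is created. The following is a minimal sketch, not part of TiDB Operator: the function name `needs_tidb_secret` is hypothetical, it assumes version strings of the form `vMAJOR.MINOR.PATCH` with an optional suffix such as `-rc.1`, and it does not cover the separate TiDB Operator version condition.

```shell
# Hypothetical helper: succeed (exit status 0) when the given TiDB version
# is earlier than v4.0.8, which is the case where the mysql.tidb privileges
# and backup-demo1-tidb-secret are required.
needs_tidb_secret() {
  version=${1#v}              # drop the leading "v" if present
  old_ifs=$IFS
  IFS=.
  set -- $version             # split on dots into $1 $2 $3
  IFS=$old_ifs
  major=${1:-0}
  minor=${2:-0}
  patch=${3:-0}
  patch=${patch%%-*}          # strip a suffix such as "-rc"

  if [ "$major" -ne 4 ]; then
    [ "$major" -lt 4 ]        # v3.x is earlier, v5.x and later are not
    return $?
  fi
  if [ "$minor" -ne 0 ]; then
    return 1                  # v4.1 or later
  fi
  [ "$patch" -lt 8 ]          # v4.0.0 to v4.0.7 are earlier
}
```

For example, `needs_tidb_secret v4.0.7 && echo "create the secret first"` prints the reminder, while the same call with `v4.0.8` prints nothing.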

Step 2: Back up data to the persistent volume

Create the `Backup` CR to back up the data to NFS:

    kubectl apply -f backup-nfs.yaml

The content of `backup-nfs.yaml` is as follows. This example performs a full backup of the TiDB cluster data to NFS:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Backup
    metadata:
      name: demo1-backup-nfs
      namespace: test1
    spec:
      # # backupType: full
      # # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      # from:
      #   host: ${tidb-host}
      #   port: ${tidb-port}
      #   user: ${tidb-user}
      #   secretName: backup-demo1-tidb-secret
      br:
        cluster: demo1
        clusterNamespace: test1
        # logLevel: info
        # statusAddr: ${status-addr}
        # concurrency: 4
        # rateLimit: 0
        # checksum: true
        # options:
        # - --lastbackupts=420134118382108673
      local:
        prefix: backup-nfs
        volume:
          name: nfs
          nfs:
            server: ${nfs_server_ip}
            path: /nfs
        volumeMount:
          name: nfs
          mountPath: /nfs

When you configure `backup-nfs.yaml`, note the following:

- For an incremental backup, you only need to specify the timestamp of the last backup with `--lastbackupts` in `spec.br.options`. For the restrictions on incremental backup, see the document on using BR to back up and restore.

- `.spec.local` holds the persistent volume configuration. For details, see the Local storage fields reference.

- Some parameters in `.spec.br` can be omitted, such as `logLevel`, `statusAddr`, `concurrency`, `rateLimit`, `checksum`, and `timeAgo`. For more about the `.spec.br` fields, see the BR fields reference.

- If your TiDB version is v4.0.8 or later, BR adjusts the `tikv_gc_life_time` parameter automatically, so you do not need to configure the `spec.tikvGCLifeTime` and `spec.from` fields.

- For more about the `Backup` CR fields, see the Backup CR fields reference.

After the `Backup` CR is created, TiDB Operator starts the backup automatically based on it. You can check the backup status with the following command:

    kubectl get bk -n test1 -owide
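Because a failed backup is not retried automatically, it can help to poll the `Backup` object until it finishes instead of checking by hand. The sketch below is one possible approach, not an official tool: the function name `wait_for_backup` and its defaults are hypothetical, and it assumes that the `Backup` object reports its progress in `.status.conditions` with the condition types `Complete` and `Failed`.

```shell
# Hypothetical helper: poll a Backup CR until it completes or fails.
# Usage: wait_for_backup NAME NAMESPACE [ATTEMPTS] [INTERVAL_SECONDS]
# Returns 0 on completion, 1 on failure, 2 on timeout.
wait_for_backup() {
  name=$1
  namespace=$2
  attempts=${3:-60}
  interval=${4:-10}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Assumption: the CR exposes conditions of type Complete and Failed.
    complete=$(kubectl get bk "$name" -n "$namespace" \
      -o jsonpath='{.status.conditions[?(@.type=="Complete")].status}')
    failed=$(kubectl get bk "$name" -n "$namespace" \
      -o jsonpath='{.status.conditions[?(@.type=="Failed")].status}')
    if [ "$complete" = "True" ]; then
      echo "backup $name completed"
      return 0
    fi
    if [ "$failed" = "True" ]; then
      echo "backup $name failed; manual handling is required" >&2
      return 1
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "timed out waiting for backup $name" >&2
  return 2
}
```

For the example in this document, the call would be `wait_for_backup demo1-backup-nfs test1`. The distinct return codes let a surrounding script tell a failed backup apart from one that is still running when the wait ends.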

Backup examples

Back up all cluster data

Back up the data of a single database

Back up the data of a single table

Back up the data of multiple tables using the table filter
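The manifests for these examples are not reproduced here. As a hedged sketch, the scenarios that restrict the backup differ from the full-cluster manifest only in a table filter list under `spec`. The helper below prints such a fragment. It assumes that the `Backup` CR accepts a `spec.tableFilter` list of filter patterns as described in the Backup CR fields reference; the function name `table_filter_fragment` and the names `db1`, `table1`, and `table2` are hypothetical.

```shell
# Hypothetical helper: print a tableFilter fragment (indented for placement
# under `spec:`) containing one entry per pattern given as an argument.
table_filter_fragment() {
  printf '  tableFilter:\n'
  for pattern in "$@"; do
    printf '  - "%s"\n' "$pattern"
  done
}

# A single database would use one wildcard pattern:
#   table_filter_fragment 'db1.*'
# A single table would use one fully qualified name:
#   table_filter_fragment 'db1.table1'
# Several tables would list each name:
#   table_filter_fragment 'db1.table1' 'db1.table2'
```

Backing up all cluster data needs no filter at all, which is why the full-backup manifest above contains none.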

Scheduled full backup

You can set a backup policy to back up the TiDB cluster on a schedule, together with a retention policy that keeps the number of backups from growing without bound. A scheduled full backup is described by a custom `BackupSchedule` CR object. A full backup is triggered at each scheduled time point, and under the hood each scheduled full backup is performed as an ad-hoc full backup. The steps to create a scheduled full backup are as follows:

Step 1: Prepare the scheduled full backup environment

This is the same as preparing the ad-hoc backup environment.

Step 2: Back up data to the persistent volume on a schedule

Create the `BackupSchedule` CR to enable scheduled full backup of the TiDB cluster and back up the data to NFS:

    kubectl apply -f backup-schedule-nfs.yaml

The content of `backup-schedule-nfs.yaml` is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: BackupSchedule
    metadata:
      name: demo1-backup-schedule-nfs
      namespace: test1
    spec:
      #maxBackups: 5
      #pause: true
      maxReservedTime: "3h"
      schedule: "*/2 * * * *"
      backupTemplate:
        # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
        # from:
        #   host: ${tidb_host}
        #   port: ${tidb_port}
        #   user: ${tidb_user}
        #   secretName: backup-demo1-tidb-secret
        br:
          cluster: demo1
          clusterNamespace: test1
          # logLevel: info
          # statusAddr: ${status-addr}
          # concurrency: 4
          # rateLimit: 0
          # checksum: true
        local:
          prefix: backup-nfs
          volume:
            name: nfs
            nfs:
              server: ${nfs_server_ip}
              path: /nfs
          volumeMount:
            name: nfs
            mountPath: /nfs

As the `backup-schedule-nfs.yaml` example shows, the `backupSchedule` configuration consists of two parts. One part is the configuration specific to `backupSchedule`, and the other part is `backupTemplate`. For the `backupSchedule`-specific items, see the BackupSchedule CR fields reference. `backupTemplate` specifies the cluster and remote storage configuration. Its fields are the same as those of `spec` in the `Backup` CR, so see the Backup CR fields reference for details.

After the scheduled full backup is created, check its status with the following command:

    kubectl get bks -n test1 -owide

Check all the backups that belong to the scheduled full backup:

    kubectl get bk -l tidb.pingcap.com/backup-schedule=demo1-backup-schedule-nfs -n test1
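In the example schedule, the cron expression `*/2 * * * *` triggers a backup every 2 minutes and `maxReservedTime: "3h"` keeps backups for 3 hours, so the number of retained backups should settle at roughly 180 / 2 = 90. The sketch below turns that arithmetic into a quick sanity check against the cluster. Both function names are hypothetical, and the estimate assumes that every scheduled backup succeeds and that retention is governed only by `maxReservedTime`.

```shell
# Hypothetical helper: estimate how many backups stay on the volume.
# $1 = retention window in minutes, $2 = minutes between two backups.
expected_retained_backups() {
  echo $(( $1 / $2 ))
}

# Hypothetical helper: count the Backup objects created by a schedule,
# using the same label selector as the command above.
# $1 = BackupSchedule name, $2 = namespace.
count_scheduled_backups() {
  kubectl get bk -l "tidb.pingcap.com/backup-schedule=$1" -n "$2" -o name \
    | wc -l | tr -d ' '
}
```

Comparing `expected_retained_backups 180 2` with `count_scheduled_backups demo1-backup-schedule-nfs test1` after the schedule has run for more than 3 hours gives a rough indication of whether old backups are being cleaned up as intended.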

边栏推荐

- Unity3d 4.3.4f1 execution project

- 单词反转实现「建议收藏」

- Word inversion implements "suggestions collection"

- How to meet the dual needs of security and confidentiality of medical devices?

- 【OpenCV 例程200篇】223. 特征提取之多边形拟合(cv.approxPolyDP)

- The little money made by the program ape is a P!

- 95年专注安全这一件事 沃尔沃未来聚焦智能驾驶与电气化领域安全

- 华泰证券可以做到万一佣金吗,万一开户安全嘛

- Contour layout of margin

- C language helps you understand pointers from multiple perspectives (1. Character pointers 2. Array pointers and pointer arrays, array parameter passing and pointer parameter passing 3. Function point

猜你喜欢

Goal: do not exclude yaml syntax. Try to get started quickly

![[C language] advanced pointer --- do you really understand pointer?](/img/ee/79c0646d4f1bfda9543345b9da0f25.png)

[C language] advanced pointer --- do you really understand pointer?

SQL注入报错注入函数图文详解

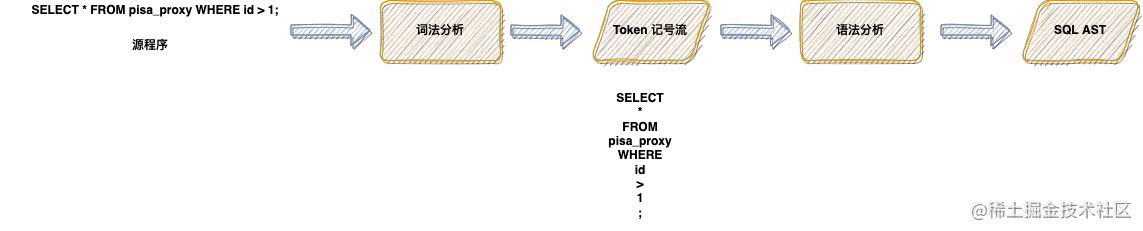

Lex & yacc of Pisa proxy SQL parsing

使用枚举实现英文转盲文

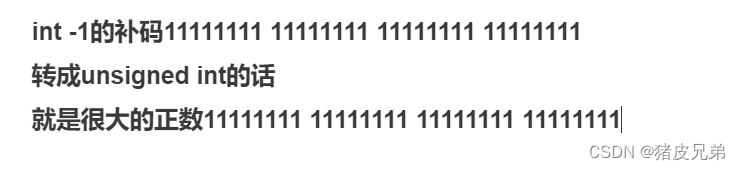

Ten thousand word summary data storage, three knowledge points

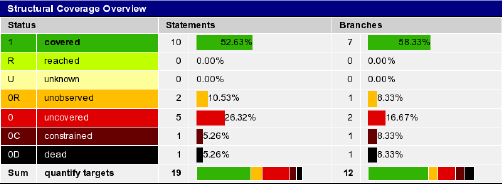

How does codesonar help UAVs find software defects?

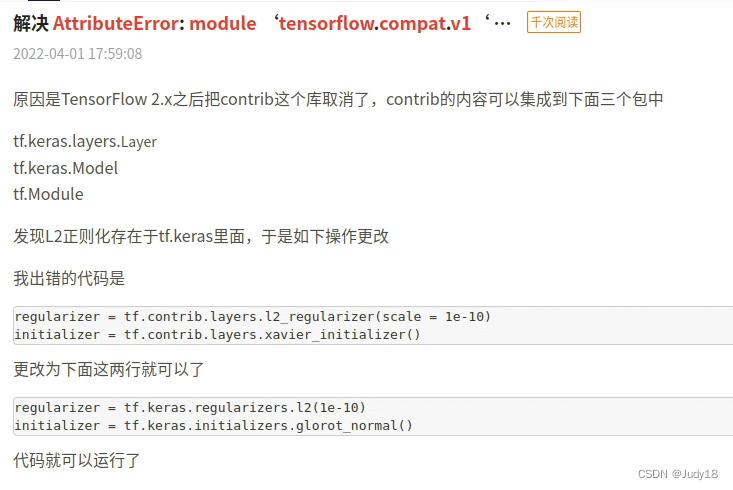

Tensorflow2. How to run under x 1 Code of X

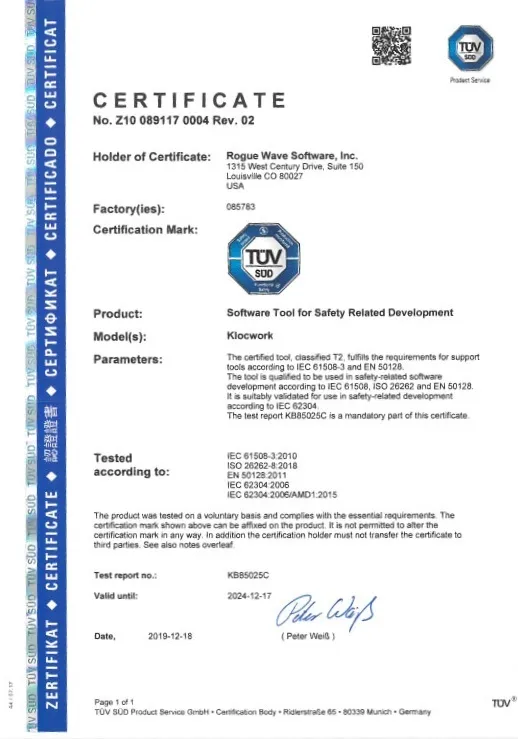

Klocwork code static analysis tool

The new version of onespin 360 DV has been released, refreshing the experience of FPGA formal verification function

随机推荐

Codeforces round 296 (Div. 2) A. playing with paper[easy to understand]

How does codesonar help UAVs find software defects?

201215-03-19 - cocos2dx memory management - specific explanation "recommended collection"

AADL inspector fault tree safety analysis module

万字总结数据存储,三大知识点

An overview of the latest research progress of "efficient deep segmentation of labels" at Shanghai Jiaotong University, which comprehensively expounds the deep segmentation methods of unsupervised, ro

神兵利器——敏感文件发现工具

恶魔奶爸 A1 语音听力初挑战

Klocwork code static analysis tool

Problems encountered in installing mysql8 for Ubuntu and the detailed installation process

HOJ 2245 浮游三角胞(数学啊 )

华泰证券可以做到万一佣金吗,万一开户安全嘛

Small guide for rapid formation of manipulator (12): inverse kinematics analysis

Numerical method for solving optimal control problem (0) -- Definition

uva 12230 – Crossing Rivers(概率)「建议收藏」

【函数递归】简单递归的5个经典例子,你都会吗?

Introduction to referer and referer policy

Make this crmeb single merchant wechat mall system popular, so easy to use!

Demon daddy C

Usage of MySQL subquery keywords (exists)