Microsoft Research, UIUC & Google Research | Adversarially Trained Actor Critic for Offline Reinforcement Learning

2022-07-06 03:12:00 【Zhiyuan community】

猜你喜欢

Deeply analyze the chain 2+1 mode, and subvert the traditional thinking of selling goods?

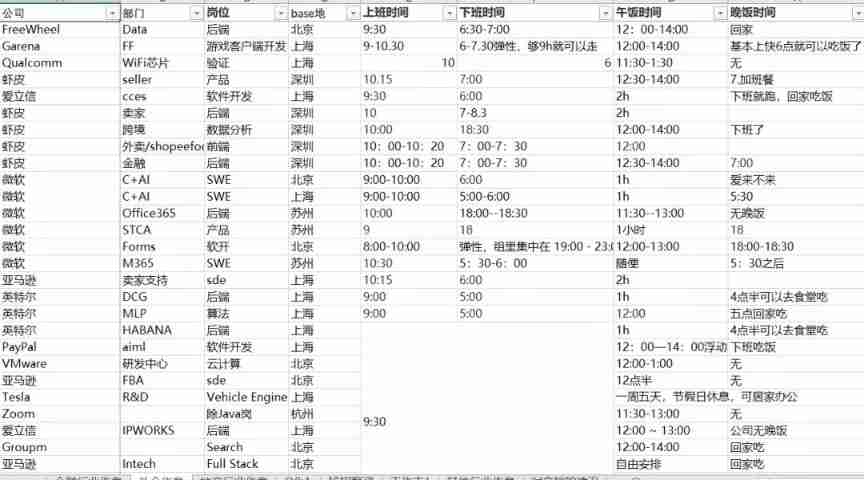

Crazy, thousands of netizens are exploding the company's salary

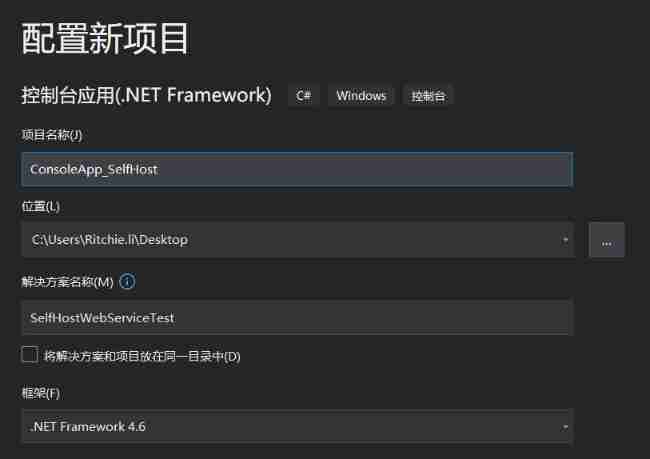

C # create self host webservice

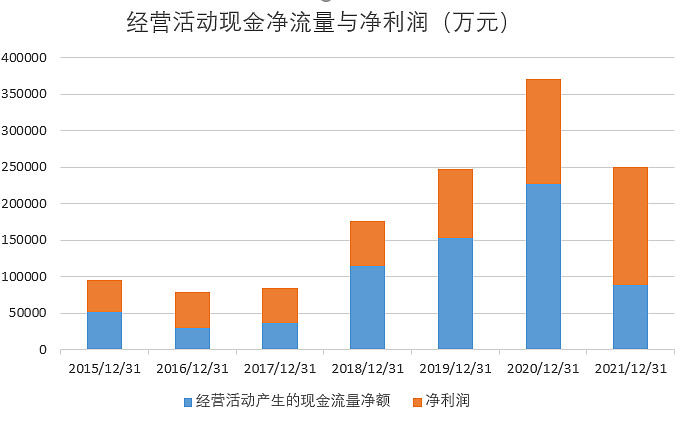

What is the investment value of iFLYTEK, which does not make money?

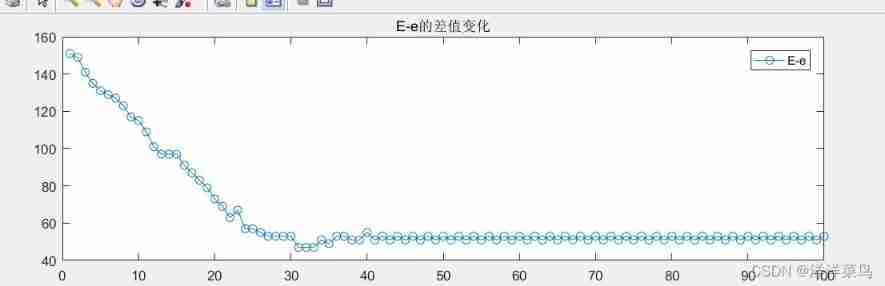

Game theory matlab

八道超经典指针面试题(三千字详解)

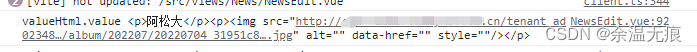

JS regular filtering and adding image prefixes in rich text

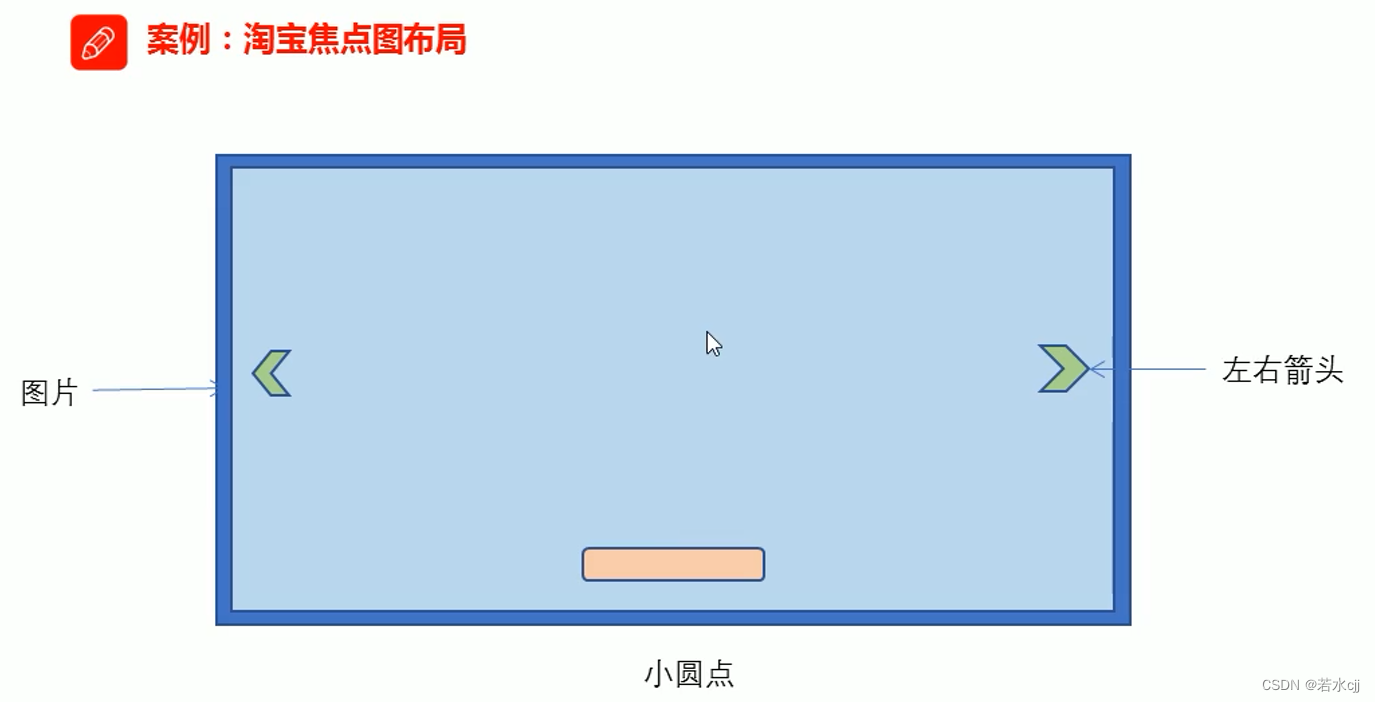

Taobao focus map layout practice

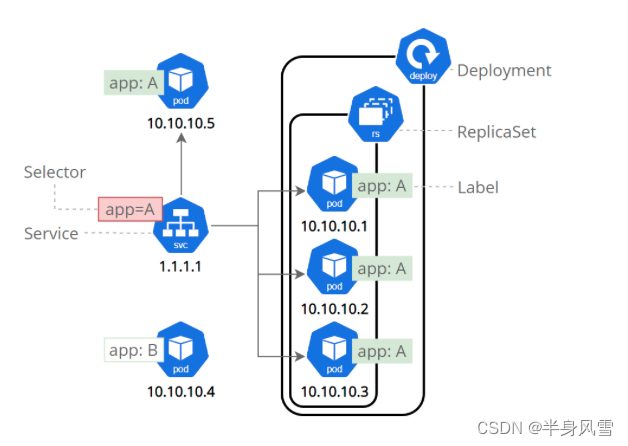

【Kubernetes 系列】一文學會Kubernetes Service安全的暴露應用

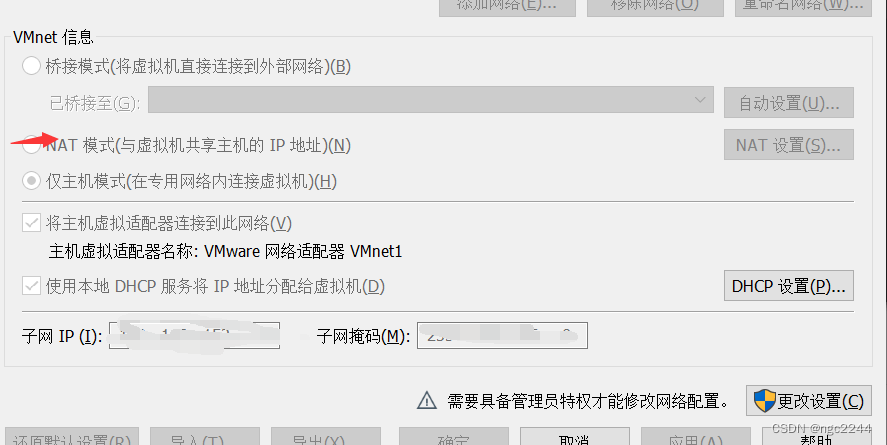

真机无法访问虚拟机的靶场,真机无法ping通虚拟机
