
Meta-Learning in Brief

2022-07-02 07:57:00 【MezereonXP】


Let's first review the traditional machine learning (or deep learning) workflow:

- Determine the training and test datasets

- Determine the model structure

- Initialize the model parameters (usually by sampling from a commonly used random distribution)

- Choose the optimizer type and its hyperparameters

- Train until convergence
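The five steps above can be sketched on a toy problem. This is a minimal illustration that assumes a hypothetical 1-D linear regression dataset, a Gaussian initialization, and plain full-batch gradient descent. The names `make_dataset`, `train`, `init_std`, and `lr` are invented for the example. Steps 2 to 4 appear here as hand-picked choices (the linear model, `init_std`, `lr`), and these are the choices meta-learning aims to learn instead.

```python
import random


def make_dataset(n, slope, intercept, noise, rng):
    """Hypothetical toy data: y = slope * x + intercept + Gaussian noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, slope * x + intercept + rng.gauss(0.0, noise)))
    return data


def predict(theta, x):
    # Step 2: model structure, a 1-D linear model f(x; theta) = w * x + b
    w, b = theta
    return w * x + b


def mse(theta, data):
    return sum((predict(theta, x) - y) ** 2 for x, y in data) / len(data)


def train(data, init_std, lr, tol=1e-9, max_steps=10_000, seed=0):
    rng = random.Random(seed)
    # Step 3: draw the initial parameters from N(0, init_std^2)
    theta = [rng.gauss(0.0, init_std), rng.gauss(0.0, init_std)]
    prev = mse(theta, data)
    for _ in range(max_steps):
        # Step 4: optimizer, plain full-batch gradient descent with step size lr
        n = len(data)
        gw = sum(2 * (predict(theta, x) - y) * x for x, y in data) / n
        gb = sum(2 * (predict(theta, x) - y) for x, y in data) / n
        theta = [theta[0] - lr * gw, theta[1] - lr * gb]
        # Step 5: stop once the training loss has essentially stopped changing
        cur = mse(theta, data)
        if abs(prev - cur) < tol:
            break
        prev = cur
    return theta


rng = random.Random(42)
data = make_dataset(200, slope=2.0, intercept=-1.0, noise=0.1, rng=rng)
train_set, test_set = data[:150], data[150:]  # Step 1: train/test split
theta = train(train_set, init_std=0.1, lr=0.1)
print("theta =", theta, "test MSE =", mse(theta, test_set))
```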

The goal of meta-learning is to learn the choices made in steps 2, 3, and 4: the model structure, the parameter initialization, and the optimizer configuration. We call these choices meta-knowledge.

Let us formalize this.

Suppose the dataset is $D = \{(x_1, y_1), \dots, (x_N, y_N)\}$, where $x_i$ is an input and $y_i$ is its output label.

Our goal is to obtain a prediction model $\hat{y} = f(x; \theta)$, where $\theta$ denotes the model parameters, $x$ is the input, and $\hat{y}$ is the predicted output.

The optimization problem takes the form:

$$\theta^* = \arg\min_{\theta} \mathcal{L}(D; \theta, \omega)$$

where $\omega$ is the meta-knowledge, which includes:

- Optimizer type

- Model structure

- Initial distribution of model parameters

- …
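To show concretely how $\theta^*$ depends on $\omega$, the sketch below bundles the three kinds of meta-knowledge listed above into one object. The `Omega` dataclass, its fields, and the polynomial model are hypothetical choices made for illustration. The inner $\arg\min$ is only approximated by a fixed number of gradient steps.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Omega:
    """Hypothetical container for the meta-knowledge omega."""
    degree: int      # model structure: degree of the polynomial f(x; theta)
    init_std: float  # initial distribution: theta_j ~ N(0, init_std^2)
    lr: float        # optimizer: gradient-descent step size
    steps: int       # optimizer: number of gradient steps


def features(x, degree):
    return [x ** k for k in range(degree + 1)]


def predict(theta, x):
    return sum(t * f for t, f in zip(theta, features(x, len(theta) - 1)))


def loss(theta, data):
    """Mean squared error L(D; theta)."""
    return sum((predict(theta, x) - y) ** 2 for x, y in data) / len(data)


def fit(data, omega, seed=0):
    """Approximate theta* = argmin_theta L(D; theta, omega) by gradient descent."""
    rng = random.Random(seed)
    theta = [rng.gauss(0.0, omega.init_std) for _ in range(omega.degree + 1)]
    for _ in range(omega.steps):
        grad = [0.0] * len(theta)
        for x, y in data:
            err = predict(theta, x) - y
            for j, f in enumerate(features(x, omega.degree)):
                grad[j] += 2 * err * f / len(data)
        theta = [t - omega.lr * g for t, g in zip(theta, grad)]
    return theta


xs = [-1.0 + 2.0 * k / 20 for k in range(21)]
D = [(x, x * x) for x in xs]  # hypothetical noise-free data: y = x^2
for omega in (Omega(degree=1, init_std=0.1, lr=0.3, steps=2000),
              Omega(degree=2, init_std=0.1, lr=0.3, steps=2000)):
    theta_star = fit(D, omega)
    print(omega.degree, loss(theta_star, D))
```

Changing only `degree`, which is part of $\omega$, changes the best loss reachable over $\theta$. A straight line cannot fit $y = x^2$, but a quadratic can.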

We split the existing dataset $D$ into multiple tasks. Each task has its own training set and validation (test) set:

$$D_{source} = \{(D^{train}_{source}, D^{val}_{source})^{(i)}\}_{i=1}^{M}$$
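Here is a minimal sketch of this split. It assumes the caller supplies a hypothetical `task_of` function that assigns each example to a task. In few-shot classification, for instance, tasks are often defined by subsets of classes. Each task's examples are shuffled and divided into a training part and a validation part.

```python
import random
from collections import defaultdict


def split_into_tasks(D, task_of, val_fraction=0.25, seed=0):
    """Build D_source = [(D_train^(i), D_val^(i)) for i = 1..M].

    task_of is a caller-supplied (hypothetical) function mapping an example
    (x, y) to a sortable task identifier; each identifier becomes one task.
    """
    groups = defaultdict(list)
    for example in D:
        groups[task_of(example)].append(example)
    rng = random.Random(seed)
    tasks = []
    for key in sorted(groups):  # deterministic task order
        examples = groups[key][:]
        rng.shuffle(examples)
        n_val = max(1, int(len(examples) * val_fraction))
        tasks.append((examples[n_val:], examples[:n_val]))  # (train, val)
    return tasks


D = [(x, 2 * x) for x in range(12)]
D_source = split_into_tasks(D, task_of=lambda ex: ex[0] % 3)
for i, (train, val) in enumerate(D_source, start=1):
    print(i, len(train), len(val))
```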

The meta-level optimization objective is:

$$\omega^* = \arg\max_{\omega} \log p(\omega \mid D_{source})$$
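The posterior form above does not say how a candidate $\omega$ is scored. A common concrete reading in the meta-learning literature is a bilevel problem. In the inner level, each task's parameters are fitted on its training split under $\omega$. In the outer level, $\omega$ is judged by the validation losses those fitted parameters achieve. The superscripts $task$ and $meta$ are notation added here for clarity and do not come from the original post.

$$
\begin{aligned}
\omega^* &= \arg\min_{\omega} \sum_{i=1}^{M} \mathcal{L}^{meta}\left(D^{val\,(i)}_{source}; \theta^{*(i)}(\omega), \omega\right) \\
\text{s.t.}\quad \theta^{*(i)}(\omega) &= \arg\min_{\theta} \mathcal{L}^{task}\left(D^{train\,(i)}_{source}; \theta, \omega\right)
\end{aligned}
$$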

That is, across the task sets we have split off, we look for a configuration (the meta-knowledge) that works best for these tasks.

This step is generally called meta-training.
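Below is a minimal meta-training sketch. It reads the objective as "minimize the mean validation loss after adapting to each task". It assumes hypothetical toy tasks $y = a x$ with different slopes $a$, meta-knowledge $\omega$ = (initial weight, learning rate), and a three-step gradient-descent inner loop. The outer search is a plain grid search over hypothetical candidates, the simplest possible choice. Gradient-based methods such as MAML instead learn the initialization by differentiating through the inner loop.

```python
from itertools import product


def make_task(slope):
    """Hypothetical toy task: noise-free y = slope * x, split into train/val."""
    xs = [-1.0 + 2.0 * k / 20 for k in range(21)]
    data = [(x, slope * x) for x in xs]
    return data[0::2], data[1::2]  # (D_train^(i), D_val^(i))


def loss(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def adapt(omega, train, steps=3):
    """Inner loop: a few gradient steps on one task, starting from omega's init."""
    init_w, lr = omega
    w = init_w
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in train) / len(train)
        w -= lr * grad
    return w


def meta_train(tasks, candidates):
    """Outer loop: pick the omega with the lowest mean validation loss."""
    def meta_loss(omega):
        return sum(loss(adapt(omega, tr), va) for tr, va in tasks) / len(tasks)
    return min(candidates, key=meta_loss)


D_source = [make_task(a) for a in (2.5, 3.0, 3.5)]
candidates = list(product([0.0, 1.5, 3.0], [0.05, 0.2]))  # (init_w, lr) pairs
omega_star = meta_train(D_source, candidates)
print(omega_star)  # (3.0, 0.2)
```

Every source task has a slope near 3. Starting from `init_w = 3.0` therefore leaves the least error after three steps, so the search selects it. That shared initialization is the meta-knowledge extracted from the tasks.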

Once $\omega^*$ has been found, it can be applied to a target task dataset $D_{target} = \{(D_{target}^{train}, D_{target}^{val})\}$.

On this dataset we carry out conventional training, i.e., we find the optimal model parameters $\theta^*$:

$$\theta^* = \arg\max_{\theta} \log p(\theta \mid \omega^*, D_{target}^{train})$$

This step is called meta-testing.
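Here is a minimal meta-testing sketch in a toy setting of noise-free linear tasks $y = a x$. The value $\omega^* = (3.0, 0.2)$ (initial weight, learning rate) is a hypothetical stand-in for a meta-training result. A few gradient steps from the learned initialization approximate the $\arg\max$ over $\theta$, with the initialization roughly playing the role of a prior on $\theta$. The target slope and the baseline $\omega$ are also hypothetical. The baseline uses an uninformed initialization, for comparison.

```python
def make_task(slope):
    """Hypothetical toy task: noise-free y = slope * x, split into train/val."""
    xs = [-1.0 + 2.0 * k / 20 for k in range(21)]
    data = [(x, slope * x) for x in xs]
    return data[0::2], data[1::2]


def loss(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def adapt(omega, train, steps=3):
    """A few gradient steps from omega's initial weight with omega's step size."""
    init_w, lr = omega
    w = init_w
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in train) / len(train)
        w -= lr * grad
    return w


omega_star = (3.0, 0.2)  # hypothetical meta-training result (init_w, lr)
baseline = (0.0, 0.2)    # uninformed initialization, same optimizer
target_train, target_val = make_task(3.2)
for name, omega in [("meta-learned", omega_star), ("baseline", baseline)]:
    theta_star = adapt(omega, target_train)  # meta-testing: fit on D_target^train
    print(name, round(theta_star, 3), round(loss(theta_star, target_val), 4))
```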

边栏推荐

- Thesis writing tip2

- 针对tqdm和print的顺序问题

- CPU的寄存器

- Installation and use of image data crawling tool Image Downloader

- Daily practice (19): print binary tree from top to bottom

- 利用Transformer来进行目标检测和语义分割

- Summary of open3d environment errors

- C#与MySQL数据库连接

- Open3d learning notes II [file reading and writing]

- 【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

猜你喜欢

【双目视觉】双目立体匹配

Command line is too long

![[learning notes] matlab self compiled image convolution function](/img/82/43fc8b2546867d89fe2d67881285e9.png)

[learning notes] matlab self compiled image convolution function

用MLP代替掉Self-Attention

应对长尾分布的目标检测 -- Balanced Group Softmax

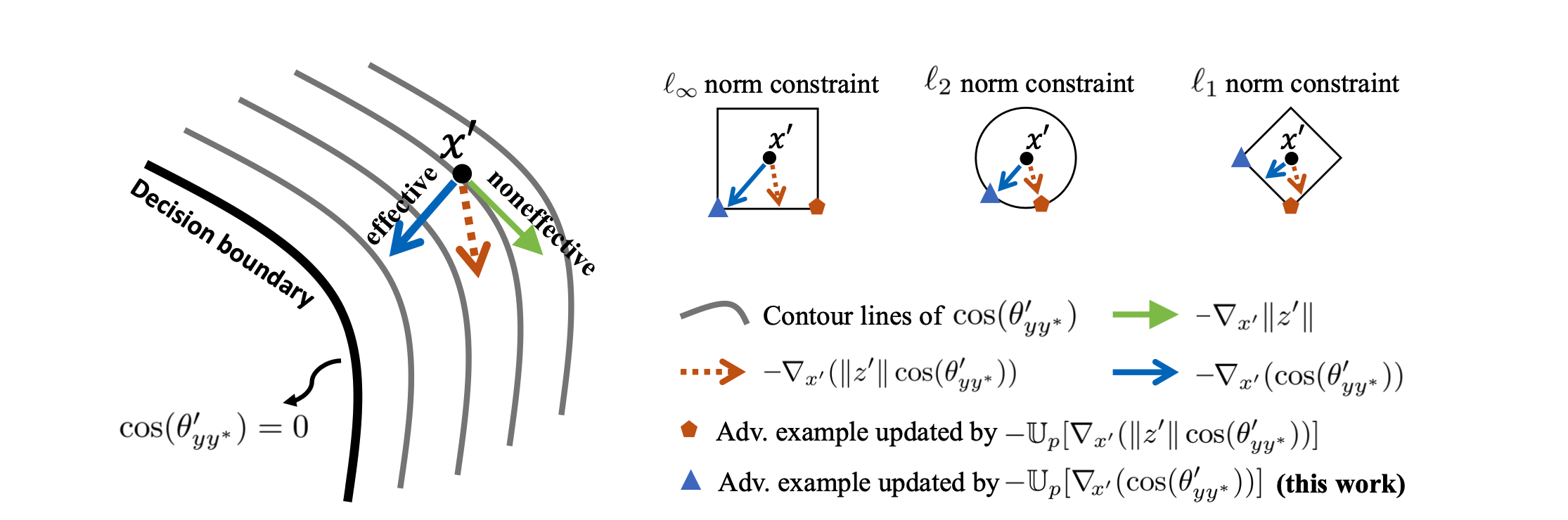

利用超球嵌入来增强对抗训练

【Random Erasing】《Random Erasing Data Augmentation》

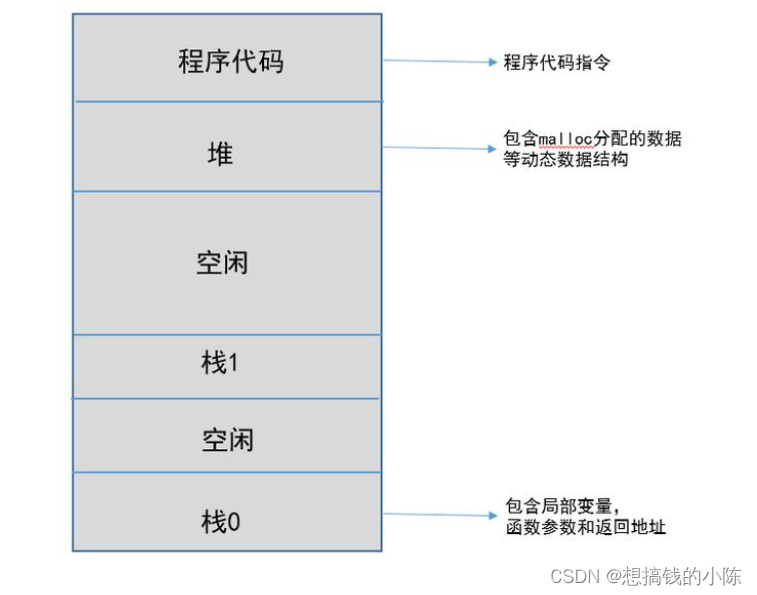

Memory model of program

Deep learning classification Optimization Practice

w10升级至W11系统,黑屏但鼠标与桌面快捷方式能用,如何解决

随机推荐

[Sparse to Dense] Sparse to Dense: Depth Prediction from Sparse Depth samples and a Single Image

One book 1078: sum of fractional sequences

Apple added the first iPad with lightning interface to the list of retro products

【Cascade FPD】《Deep Convolutional Network Cascade for Facial Point Detection》

【雙目視覺】雙目矯正

MoCO ——Momentum Contrast for Unsupervised Visual Representation Learning

CPU的寄存器

Installation and use of image data crawling tool Image Downloader

Implementation of yolov5 single image detection based on pytorch

【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

open3d学习笔记二【文件读写】

【TCDCN】《Facial landmark detection by deep multi-task learning》

解决jetson nano安装onnx错误(ERROR: Failed building wheel for onnx)总结

CONDA common commands

【DIoU】《Distance-IoU Loss:Faster and Better Learning for Bounding Box Regression》

超时停靠视频生成

【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

【MagNet】《Progressive Semantic Segmentation》

利用Transformer来进行目标检测和语义分割

PPT的技巧