Basic configuration and use of Spark

2022-07-06 17:39:00 【Bald Second Senior brother】

Contents:

Configuring Spark's three modes, and the basics of using Spark

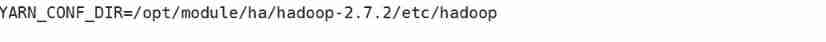

Spark's three modes

Local mode

Ways to set the master in local mode: local (a single thread by default), local[k] (a specified number of threads, k), local[*] (as many threads as the machine has CPU cores). The threads doing the work act as the workers.
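As a rough illustration of these three forms, the Scala sketch below maps a local master string to the number of threads it asks for. The names LocalMaster and threadsFor are made up for this example, and this is not Spark's own parsing code. It only assumes that local[*] corresponds to the number of cores the JVM reports.

```scala
object LocalMaster {
  // Matches strings such as "local[4]" and captures the thread count.
  private val Fixed = """local\[(\d+)\]""".r

  // Returns the number of threads a local master string requests,
  // or None if the string is not one of the three local forms.
  def threadsFor(master: String): Option[Int] = master match {
    case "local"    => Some(1)
    case "local[*]" => Some(Runtime.getRuntime.availableProcessors())
    case Fixed(k)   => Some(k.toInt)
    case _          => None
  }

  def main(args: Array[String]): Unit =
    Seq("local", "local[4]", "local[*]").foreach { m =>
      println(s"$m -> ${threadsFor(m)}")
    }
}
```

The value for local[*] depends on the machine, so the printed result for that form will vary.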

Configuration:

Local mode needs no changes to the configuration files. Once Spark is installed, you can use local mode directly to compute and analyze data.

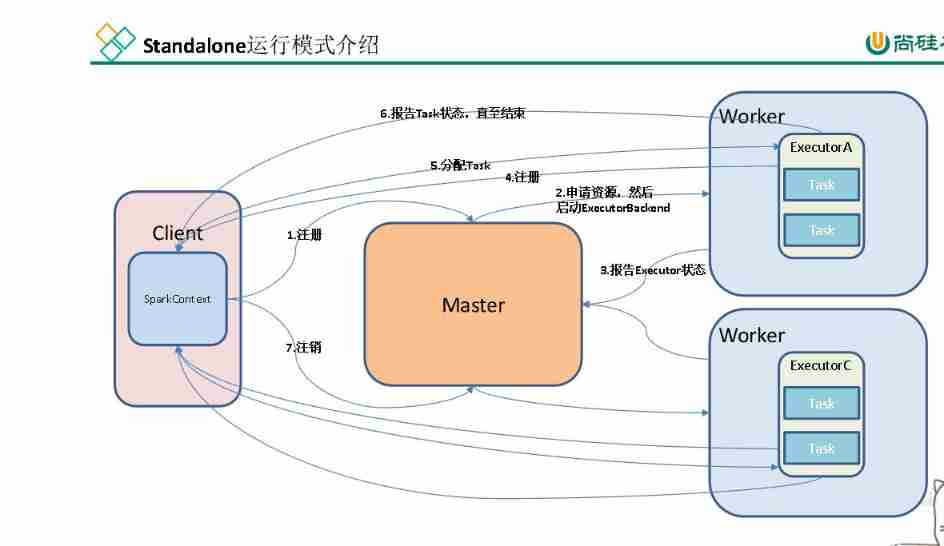

Standalone mode

The way it operates is very similar to Hadoop's ResourceManager.

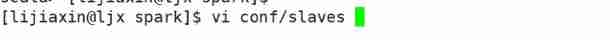

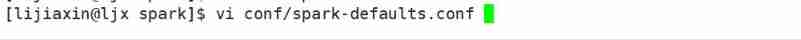

The configuration file

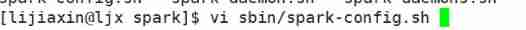

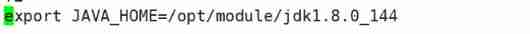

Modify this file. If it does not exist, rename its template file.

Changes:

Add the host names. As with Hadoop, they are used for the cluster services.

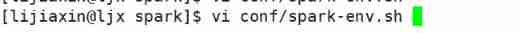

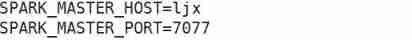

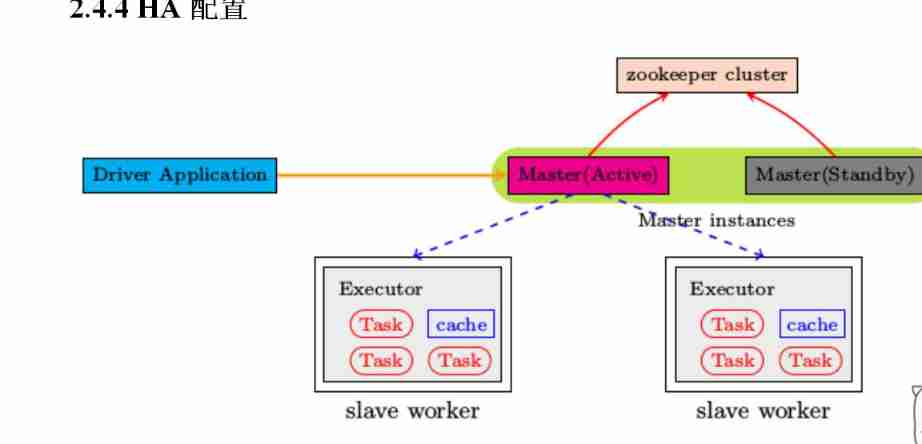

This file also needs to be modified. If it does not exist, rename its template file.

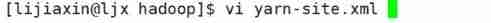

Configuration content

This specifies which machine runs the master, and the port number.

The next step is to send the configuration files to each host listed in slaves.

The change to this file specifies where Java is installed.

History server

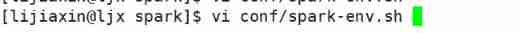

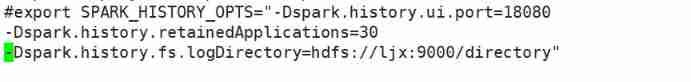

To add a history server, modify this configuration file and add the following content

Note: remove the comment markers

As above, this configuration file also needs to be modified

Create the directory in HDFS, and start HDFS

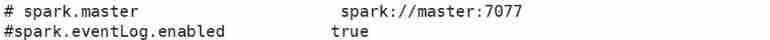

HA

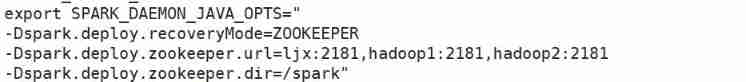

Modify the configuration file

Add content

Specify the location of ZooKeeper, and at the same time comment out the HDFS settings to avoid conflicts

Then distribute the configuration file to the other hosts
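With more than one master behind ZooKeeper, an application is normally pointed at all of them in a single master URL, so that it can find whichever one is currently active. A minimal sketch of building that URL, assuming the hypothetical host names node1 and node2 and the standalone master's default port 7077 (the name HaMasterUrl is also made up for this example):

```scala
object HaMasterUrl {
  // Joins master hosts into the comma-separated spark:// form.
  def build(hosts: Seq[String], port: Int = 7077): String =
    hosts.map(h => s"$h:$port").mkString("spark://", ",", "")

  def main(args: Array[String]): Unit =
    // node1 and node2 are placeholder host names.
    println(build(Seq("node1", "node2"))) // prints spark://node1:7077,node2:7077
}
```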

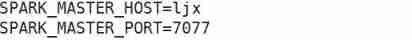

YARN mode

Benefit: you don't need to build a Spark cluster

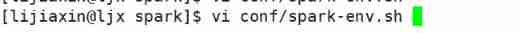

The configuration file :

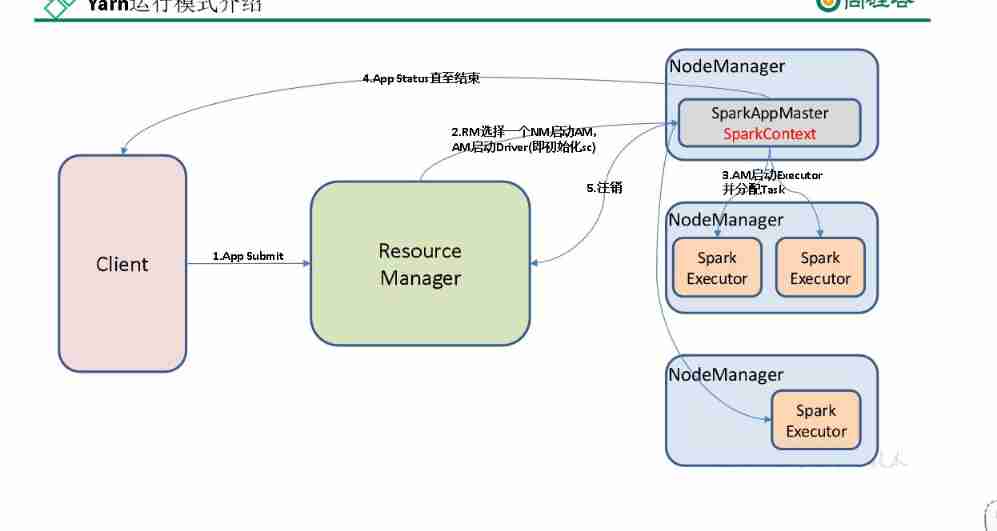

1.

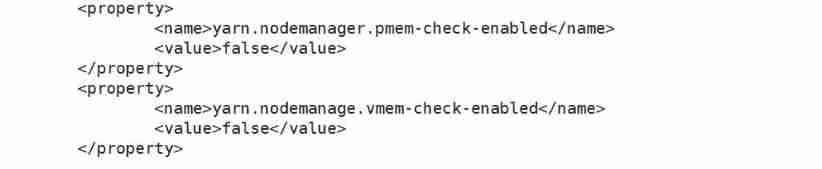

Modify the YARN configuration file in Hadoop

Changes:

This configuration turns off the check that kills tasks for using too much memory. Otherwise, when a computation's memory use exceeds a certain limit, Spark is shut down automatically.

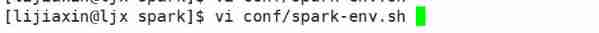

2. modify

Changes:

Note

Before using a different mode, comment out or delete the settings for the other modes in the configuration files.

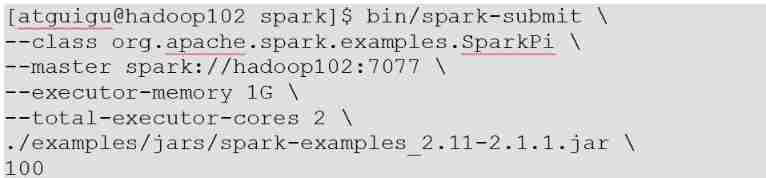

Running the official examples

Note that the master value is different in each mode, and there are some differences in other places as well.
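As a quick reference, the sketch below lists a typical master value for each mode, as it would be passed to spark-submit with --master. The host name master-host is a placeholder and MasterByMode is a name made up for this example. 7077 is the standalone master's default port, so adjust it if the cluster was configured differently.

```scala
object MasterByMode {
  // master-host is a placeholder; 7077 is the standalone master's default port.
  val masters: Seq[(String, String)] = Seq(
    "local"      -> "local[*]",
    "standalone" -> "spark://master-host:7077",
    "yarn"       -> "yarn"
  )

  def main(args: Array[String]): Unit =
    masters.foreach { case (mode, master) =>
      println(s"$mode: --master $master")
    }
}
```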

Using the API

```scala
import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    // WordCount in local mode.
    // Create the SparkConf object and set Spark's deployment environment.
    val config = new SparkConf().setMaster("local[*]").setAppName("WordCount")
    // Create the Spark context object.
    val sc = new SparkContext(config)
    // Read the input line by line (local paths use file:///xxxxx).
    val lines = sc.textFile("file:///opt/module/ha/spark/in")
    // Split each line into individual words.
    val words = lines.flatMap(_.split(" "))
    // Convert each word into a (word, 1) pair.
    val wordToOne = words.map((_, 1))
    // Group by word and sum the counts.
    val wordToSum = wordToOne.reduceByKey(_ + _)
    // Collect to the driver and print each (word, count) pair;
    // println on the array itself would only print its reference.
    wordToSum.collect().foreach(println)
    // Release the context's resources.
    sc.stop()
  }
}
```
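To see what each step of the job computes without starting Spark, the same pipeline can be sketched on an ordinary Scala collection. This is only an analogy: the input lines are made up, the name WordCountLocal is hypothetical, and groupBy followed by a sum stands in for reduceByKey, which plain collections do not have.

```scala
object WordCountLocal {
  // Counts words with the same steps as the Spark job: split, pair, sum per key.
  def count(lines: Seq[String]): Map[String, Int] = {
    val words = lines.flatMap(_.split(" "))  // one element per word
    val wordToOne = words.map((_, 1))        // (word, 1) pairs
    wordToOne
      .groupBy(_._1)                         // group the pairs by word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) }
  }

  def main(args: Array[String]): Unit =
    // Made-up input; the order of the printed pairs is not guaranteed.
    count(Seq("hello spark", "hello scala")).foreach(println)
}
```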

Summary:

Today I learned how to run Spark's official examples, along with Spark's three modes and their configuration files. The configuration files are fairly simple, but it is inconvenient that the settings for different modes cannot coexist. The commands for running the official examples are also too complex to remember easily, so I will probably still need to check the documentation later. Using Spark from Java is cumbersome too, but much simpler than the command line.

边栏推荐

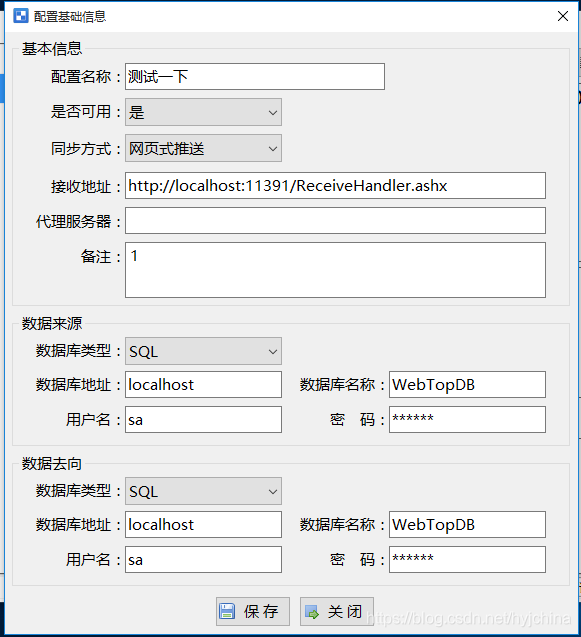

- 04 products and promotion developed by individuals - data push tool

- [VNCTF 2022]ezmath wp

- About selenium starting Chrome browser flash back

- Openharmony developer documentation open source project

- Grafana 9 正式发布,更易用,更酷炫了!

- 关于Selenium启动Chrome浏览器闪退问题

- Garbage first of JVM garbage collector

- EasyRE WriteUp

- Xin'an Second Edition: Chapter 25 mobile application security requirements analysis and security protection engineering learning notes

- 基于LNMP部署flask项目

猜你喜欢

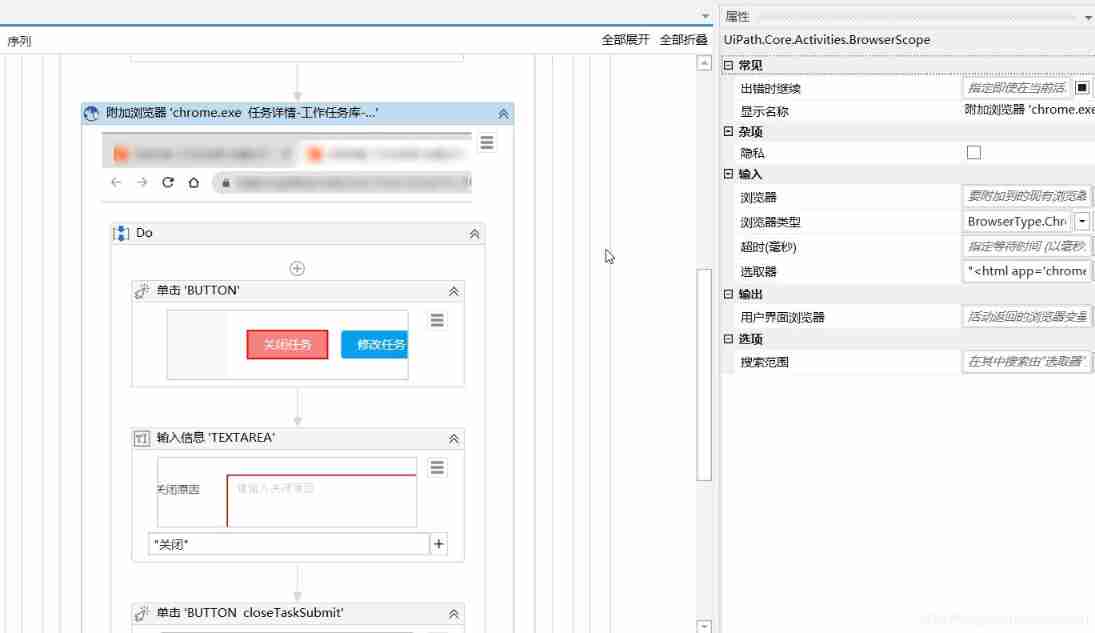

Uipath browser performs actions in the new tab

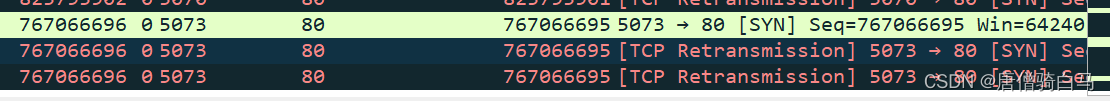

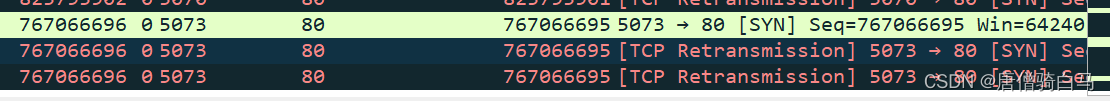

TCP连接不止用TCP协议沟通

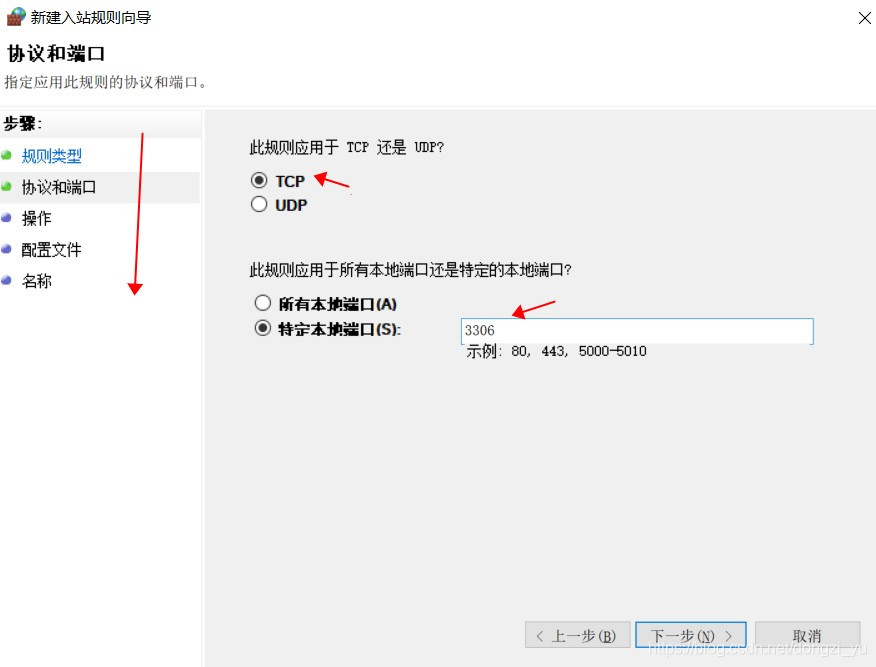

连接局域网MySql

华为认证云计算HICA

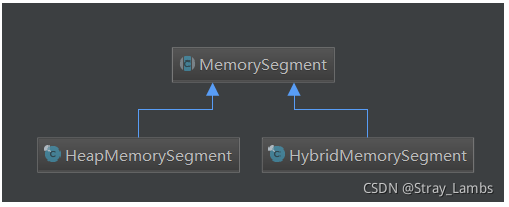

Flink parsing (III): memory management

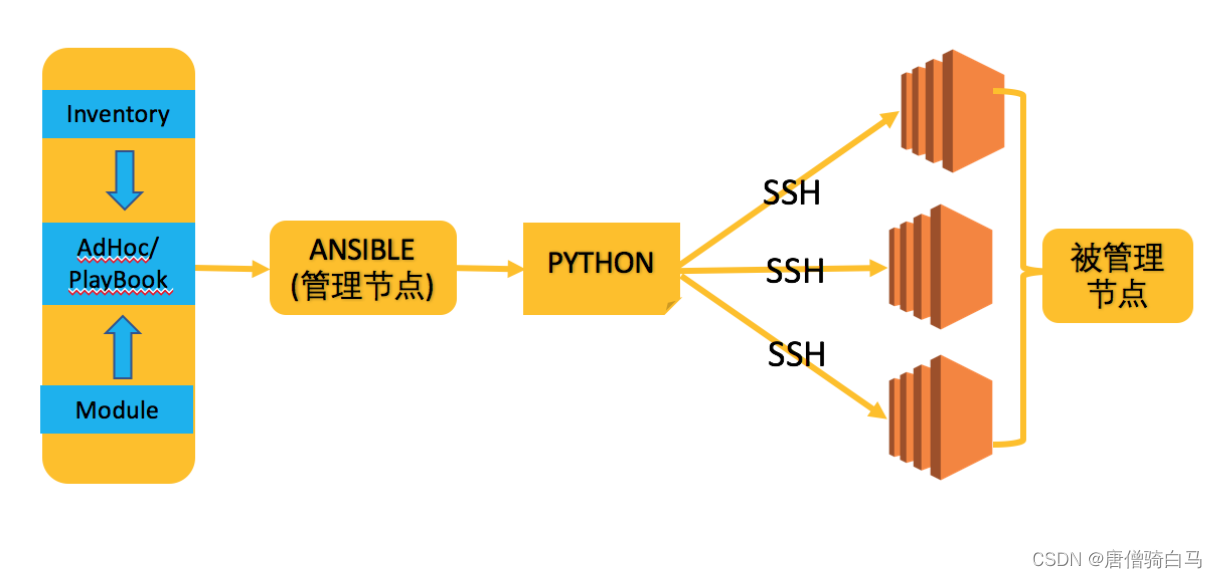

自动化运维利器ansible基础

04个人研发的产品及推广-数据推送工具

![[ASM] introduction and use of bytecode operation classwriter class](/img/0b/87c9851e577df8dcf8198a272b81bd.png)

[ASM] introduction and use of bytecode operation classwriter class

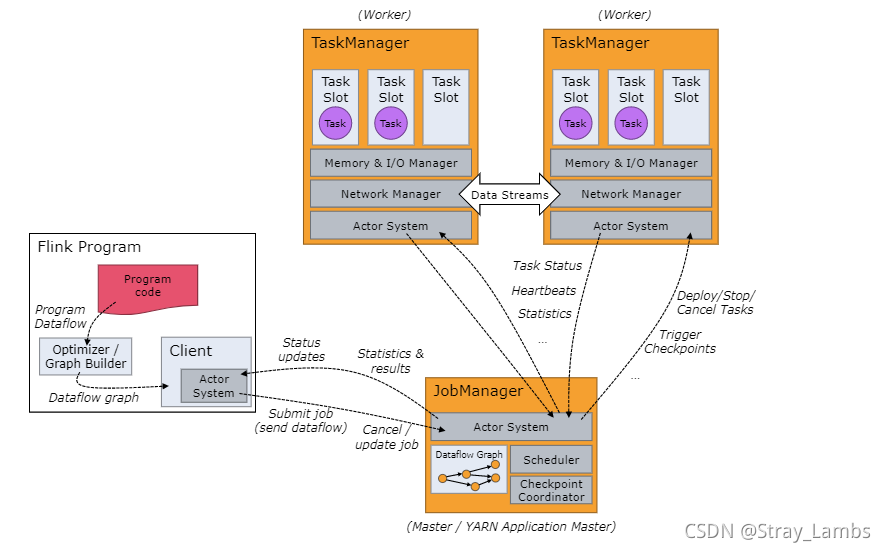

Flink analysis (I): basic concept analysis

TCP connection is more than communicating with TCP protocol

随机推荐

About selenium starting Chrome browser flash back

JVM 垃圾回收器之Garbage First

Virtual machine startup prompt probing EDD (edd=off to disable) error

Wu Jun's trilogy insight (V) refusing fake workers

MySQL Advanced (index, view, stored procedures, functions, Change password)

Kali2021 installation and basic configuration

Pyspark operator processing spatial data full parsing (5): how to use spatial operation interface in pyspark

Junit单元测试

轻量级计划服务工具研发与实践

Distributed (consistency protocol) leader election (dotnext.net.cluster implements raft election)

The art of Engineering

CTF reverse entry question - dice

Integrated development management platform

分布式(一致性协议)之领导人选举( DotNext.Net.Cluster 实现Raft 选举 )

【MySQL入门】第四话 · 和kiko一起探索MySQL中的运算符

应用服务配置器(定时,数据库备份,文件备份,异地备份)

Wu Jun's trilogy experience (VII) the essence of Commerce

1. Introduction to JVM

Redis quick start

Automatic operation and maintenance sharp weapon ansible Foundation