
If You Don't Know These Four Caching Patterns, Can You Really Say You Understand Caching?

2022-07-04 10:19:00 【New horizon of procedure】

Overview

In system architecture, caching is one of the easiest ways to improve system performance. Any developer with a little experience has dealt with caching, or has at least tried it out.

Used properly, a cache can reduce response times, lower database load, and save costs. Used improperly, it can cause puzzling problems.

Different scenarios call for different caching strategies. If, in your experience, caching is nothing more than simple queries and updates, this article is well worth reading.

Here I systematically explain four caching patterns, along with their use cases, workflows, and pros and cons.

Choosing a caching strategy

In essence, the right caching strategy depends on the data and on how it is accessed; in other words, on how the data is written and read.

For example:

- Does the system write a lot and read little? (e.g., time-based logs)

- Is the data written once and read many times? (e.g., user profiles)

- Is the returned data always unique? (e.g., search queries)

Choosing the right caching strategy is key to improving performance.

There are four common caching strategies:

- Cache-Aside Pattern: the "bypass" caching pattern

- Read-Through Cache Pattern: reads pass through the cache

- Write-Through Cache Pattern: writes pass through the cache

- Write-Behind Pattern: also called Write-Back; the cache writes to the database asynchronously

These strategies are distinguished by how data flows on reads and writes. In some, the application interacts only with the cache; in others, it interacts with both the cache and the database. Because this is a key dimension for telling the strategies apart, pay close attention to it in the workflows below.

Cache Aside

Cache Aside is the most common caching pattern: the application talks directly to both the cache and the database. Cache Aside covers both read and write operations.

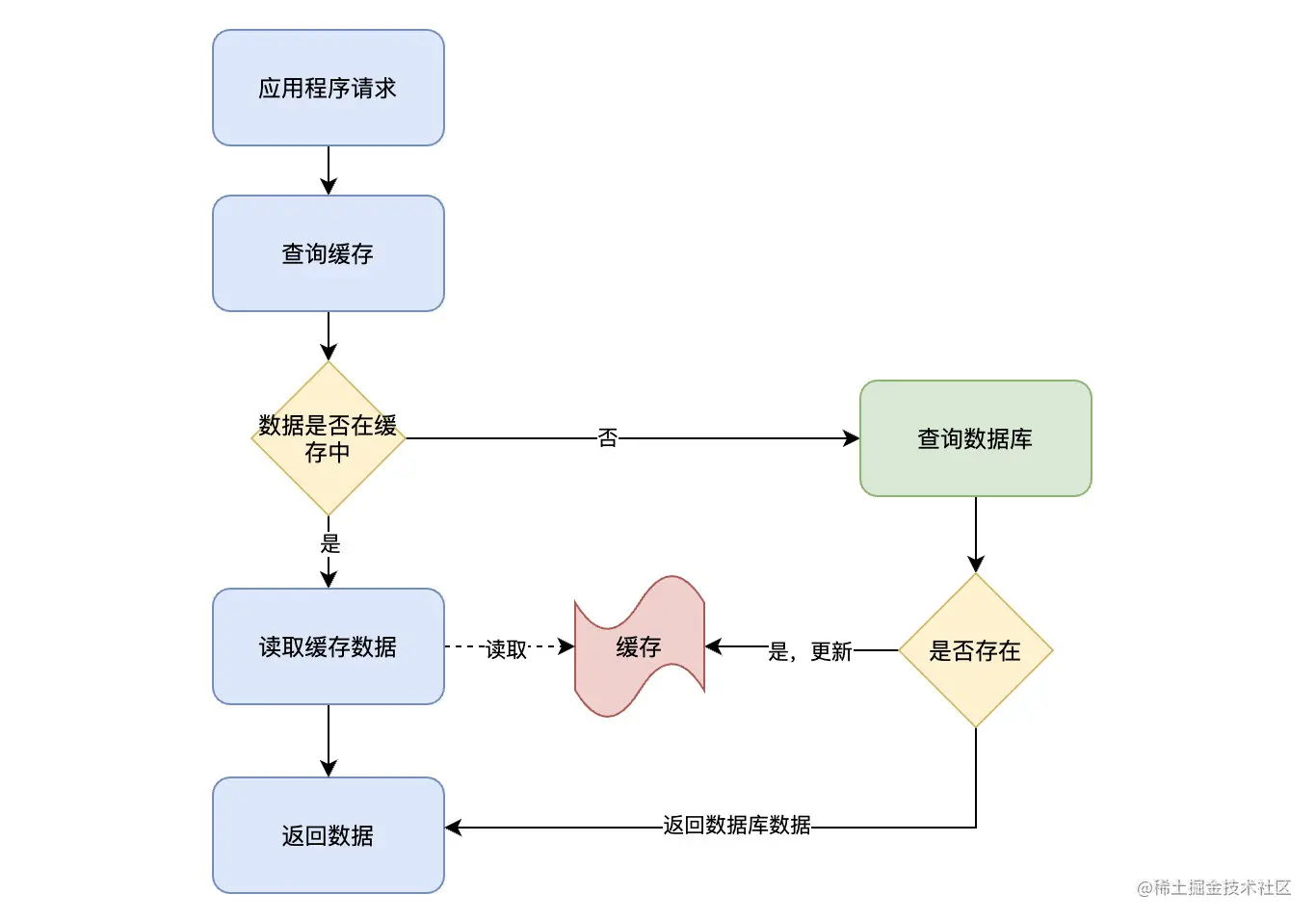

Read operation flowchart:

The read process works as follows:

The application receives a data query (read) request.

The application checks whether the requested data is in the cache:

- If it is (cache hit), the data is read from the cache and returned directly.

- If it is not (cache miss), the data is retrieved from the database, stored in the cache, and then returned.

Note the operation boundary here: both the database and the cache are accessed directly by the application.

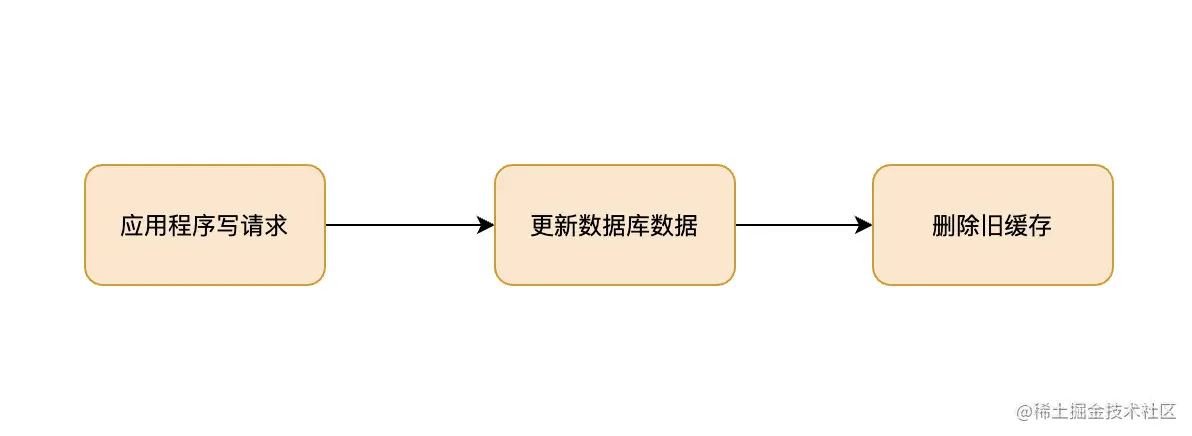

Write operation flowchart:

Write operations here include create, update, and delete. On a write, the Cache Aside pattern first updates the database (insert, delete, or update) and then simply deletes the corresponding cache entry.
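A minimal sketch of the write rule "update the database first, then delete the cache", again with hypothetical dicts standing in for the two stores and a hypothetical `save_user` helper; passing `None` models a delete.

```python
from typing import Dict, Optional

# Hypothetical stand-ins for the cache and the database.
cache: Dict[str, str] = {"user:1": "Alice"}
database: Dict[str, str] = {"user:1": "Alice"}

def save_user(key: str, value: Optional[str]) -> None:
    """Cache-aside write: change the database first, then invalidate the cache."""
    if value is None:
        database.pop(key, None)   # delete
    else:
        database[key] = value     # create or update
    cache.pop(key, None)          # drop the cached copy; the next read reloads it
```

Deleting the entry rather than overwriting it means the cache is repopulated only by the read path, so the writer never has to compute a cache value that may never be read.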

The Cache Aside pattern fits most scenarios. To handle different kinds of data, there are usually two strategies for loading the cache:

- Load on use: when cached data is needed, query it from the database; after the first query, subsequent requests get the data from the cache.

- Preload: load the cache programmatically at or after application startup, for data that rarely changes, such as country information, currency information, user information, and news.
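The load-on-use strategy is the read path shown earlier. Preloading can be sketched as a startup step that copies rarely-changing rows into the cache. The key prefixes, the sample data, and the `preload` function are all hypothetical; a real system would typically issue a database query rather than scan a dict.

```python
from typing import Dict, Iterable

# Hypothetical reference data that rarely changes, plus one user row.
database: Dict[str, str] = {
    "country:CN": "China",
    "country:US": "United States",
    "currency:CNY": "Renminbi",
    "user:1": "Alice",
}
cache: Dict[str, str] = {}

def preload(prefixes: Iterable[str]) -> int:
    """Copy every row whose key starts with one of `prefixes` into the cache,
    e.g. at application startup. Returns the number of rows loaded."""
    wanted = tuple(prefixes)
    loaded = 0
    for key, value in database.items():
        if key.startswith(wanted):   # str.startswith accepts a tuple of prefixes
            cache[key] = value
            loaded += 1
    return loaded
```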

Cache Aside suits read-heavy, write-light workloads such as user information and news articles, which are rarely modified once written to the cache. Its drawback is that the cache and the database are written separately, so the two can become inconsistent.
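One way the inconsistency can arise is the interleaving below, replayed step by step with hypothetical dicts. In a real system the reader (R) and writer (W) would run concurrently; this sketch only fixes one unlucky ordering to make the outcome visible.

```python
from typing import Dict

cache: Dict[str, str] = {}
database: Dict[str, str] = {"stock": "10"}

# One possible interleaving of a reader (R) and a writer (W).
stale = database["stock"]   # R: cache miss, reads the old value "10" from the database
database["stock"] = "9"     # W: updates the database
cache.pop("stock", None)    # W: deletes the cache entry (there is nothing to delete yet)
cache["stock"] = stale      # R: stores the value it read earlier, which is now stale

# The cache now disagrees with the database until the entry is deleted again or expires.
```

This is one reason cache-aside entries usually carry an expiration time: it bounds how long such a stale value can survive.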

Cache Aside is also a standard pattern; Facebook, for example, uses it.

Read Through

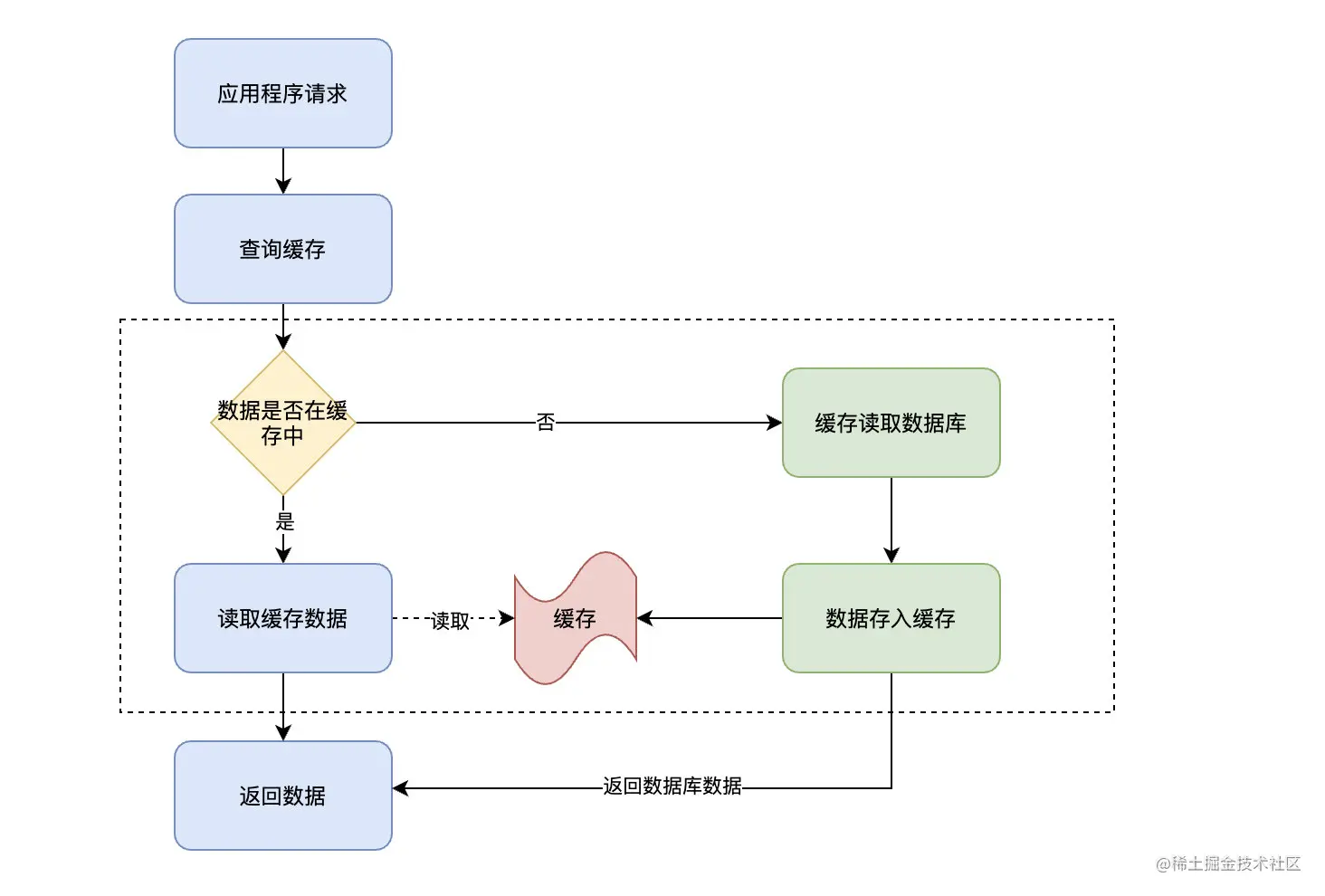

Read-Through is very similar to Cache-Aside. The difference is that the application does not need to care where the data comes from (cache or database); it simply reads from the cache, and the cache decides where its data comes from.

With Cache Aside, the caller is responsible for loading data into the cache; with Read Through, the cache service loads the data itself, so loading is transparent to the application. The advantage of Read-Through is simpler application code.

This brings up the operation boundary mentioned earlier; see the flowchart above.

In the flowchart, focus on the operations inside the dashed box: they are no longer handled by the application but by the cache itself. In other words, when the application asks the cache for a piece of data that is not there, the cache loads it and then returns the result to the application.
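A sketch of the idea, assuming a cache object that is handed a loader function when it is created. `ReadThroughCache`, `load_from_db`, and the sample data are hypothetical; the point is that callers only ever call `get()`, while the miss handling sits inside the cache.

```python
from typing import Callable, Dict, Generic, Optional, TypeVar

K = TypeVar("K")
V = TypeVar("V")

class ReadThroughCache(Generic[K, V]):
    """The cache owns the loader; callers only call get()."""

    def __init__(self, loader: Callable[[K], Optional[V]]) -> None:
        self._loader = loader            # e.g. a function that queries the database
        self._store: Dict[K, V] = {}

    def get(self, key: K) -> Optional[V]:
        if key in self._store:           # hit
            return self._store[key]
        value = self._loader(key)        # miss: the cache, not the caller, loads the data
        if value is not None:
            self._store[key] = value
        return value

database = {"news:42": "Cache patterns explained"}
calls = []  # records which keys reached the hypothetical database

def load_from_db(key: str) -> Optional[str]:
    calls.append(key)
    return database.get(key)

news_cache: ReadThroughCache[str, str] = ReadThroughCache(load_from_db)
```

Compared with the cache-aside read sketch, the hit/miss logic has moved out of the application and into the cache object.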

Write Through

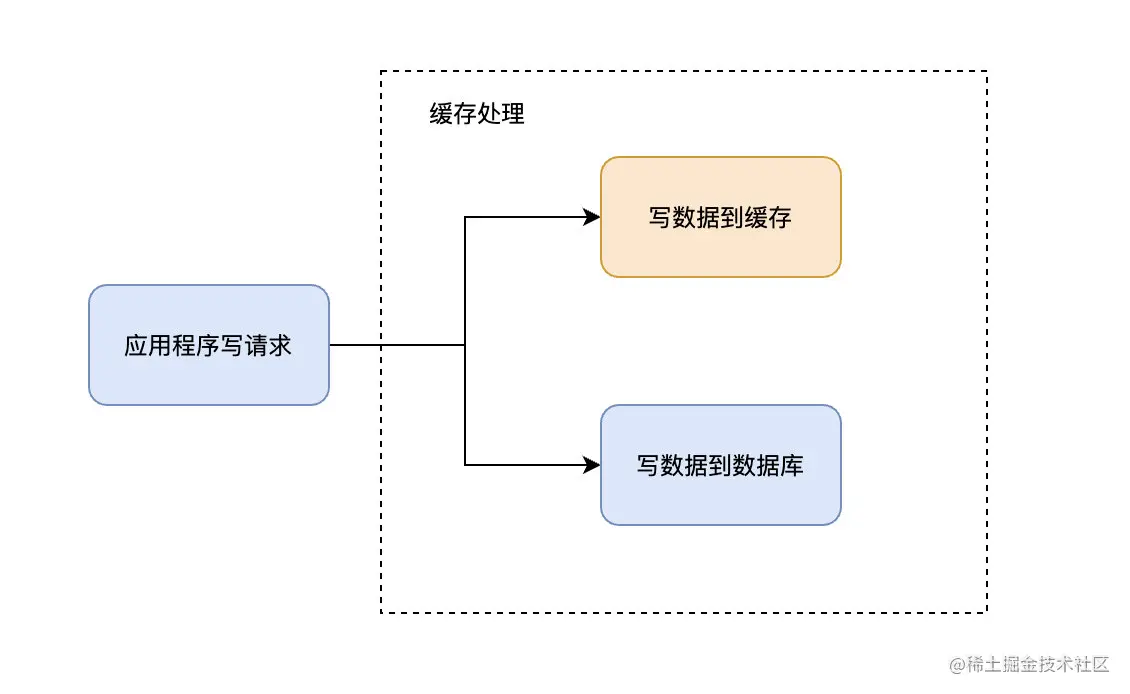

In Cache Aside, the application has to maintain two data stores, a cache and a database, which is somewhat cumbersome for the application.

In the Write-Through pattern, all writes go through the cache. Each time data is written to the cache, the cache persists it to the corresponding database, and both operations complete in a single transaction, so a write succeeds only if both steps succeed. The downside is higher write latency; the upside is data consistency.

You can think of it this way: the application sees the backend as a single store, and that store maintains its own cache.

Because the application interacts only with the cache, the code becomes simpler and cleaner, which is especially noticeable when the same logic has to be reused in several places.
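A minimal write-through sketch that also reads through, since the two are usually paired. `WriteThroughCache` and its `load`/`store` callbacks are hypothetical. Note that this is not a real transaction: it only orders the steps so that the cache is updated after the database write succeeds, and it has no rollback across the two stores.

```python
from typing import Callable, Dict, Optional

class WriteThroughCache:
    """Applications read and write only through this object; every write is
    persisted to the database before the cached copy is updated."""

    def __init__(self,
                 load: Callable[[str], Optional[str]],
                 store: Callable[[str, str], None]) -> None:
        self._load = load      # hypothetical database read function
        self._store = store    # hypothetical database write function
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:          # miss: read through to the database
            value = self._load(key)
            if value is None:
                return None
            self._data[key] = value
        return self._data[key]

    def put(self, key: str, value: str) -> None:
        # Persist first: if the database write raises, the cache is left
        # unchanged, so callers never see a value the database rejected.
        self._store(key, value)
        self._data[key] = value

database: Dict[str, str] = {}
accounts = WriteThroughCache(database.get, database.__setitem__)
```

After `accounts.put("acct:1", "100")`, both the cache and the database hold the new value; the extra database round trip on every write is the latency cost mentioned above.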

Write-Through is usually used together with Read-Through. A potential use case for Write-Through is a banking system.

Write-Through is a good fit when:

- the same data is read frequently;

- data loss (compared with Write-Behind) and data inconsistency cannot be tolerated.

When using Write-Through, pay special attention to cache expiration management; otherwise a large amount of cached data will occupy memory, and valid cache entries may even be evicted to make room for useless ones.
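One common way to keep the cache from growing without bound is to combine a time-to-live with a size limit and least-recently-used (LRU) eviction. The sketch below assumes exactly that policy; `BoundedTTLCache` is hypothetical, and the injectable `clock` exists only so expiry can be tested without waiting.

```python
import time
from collections import OrderedDict
from typing import Callable, Hashable, Optional, Tuple

class BoundedTTLCache:
    """Holds at most `max_entries` items; each expires `ttl` seconds after it
    was written, and the least recently used item is evicted when full."""

    def __init__(self, max_entries: int, ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max = max_entries
        self._ttl = ttl
        self._clock = clock
        self._items: "OrderedDict[Hashable, Tuple[object, float]]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[object]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:   # expired: drop it and report a miss
            del self._items[key]
            return None
        self._items.move_to_end(key)      # mark as most recently used
        return value

    def put(self, key: Hashable, value: object) -> None:
        self._items[key] = (value, self._clock() + self._ttl)
        self._items.move_to_end(key)
        while len(self._items) > self._max:
            self._items.popitem(last=False)   # evict the least recently used item
```

The TTL removes entries nobody refreshes, and the size limit caps memory use even when many distinct keys are written.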

Write-Behind

Write-Behind is very similar to Write-Through in that the application interacts only with the cache and writes data only through the cache. The difference is that Write-Through writes the data to the database immediately, whereas Write-Behind writes it to the database later, in bulk, after some time has passed (or when triggered in some other way). This asynchronous write is the defining feature of Write-Behind.

Database writes can be carried out in different ways. One is to collect all writes and write them in a batch at a certain point in time (for example, when database load is low). Another is to merge several writes into small batches, with the cache collecting the writes and writing them in batches.

Asynchronous writes greatly reduce request latency and lighten the load on the database, but they also widen the window of data inconsistency. For example, if someone queries the database directly while updated data has not yet been written to it, the query will not return the latest data.
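A minimal sketch of write-behind with write coalescing: writes land in the cache at once, repeated writes to the same key collapse into one pending write, and a hypothetical `flush()` (which a real system might call from a timer or a size threshold) pushes the batch to the database. `WriteBehindCache` and the dict-based database are assumptions.

```python
from typing import Dict, Optional

class WriteBehindCache:
    """Writes go to the cache immediately; the database is updated later,
    in one batch, when flush() is called."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._db = database
        self._data: Dict[str, str] = {}
        self._dirty: Dict[str, str] = {}   # pending writes, at most one per key

    def put(self, key: str, value: str) -> None:
        self._data[key] = value
        self._dirty[key] = value           # a later write to a key replaces the earlier one

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key, self._db.get(key))

    def flush(self) -> int:
        """Write all pending changes to the database in one batch."""
        batch, self._dirty = self._dirty, {}
        self._db.update(batch)             # stand-in for a single bulk write
        return len(batch)
```

Until `flush()` runs, a query that goes straight to the database sees old data, and pending writes are lost if the process crashes. These are the inconsistency and data-loss risks described above.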

Summary

Different caching patterns have different considerations and characteristics. Choose the pattern that fits your application's requirements; in practice, several patterns are often combined.

About the author: author of the technical book "SpringBoot Technology Insider"; enjoys researching technology and writing practical technical articles.

Official account: 「New perspective of procedure」, the author's official account. Follow for more.

Technical exchange: contact the author on WeChat: zhuan2quan

边栏推荐

- Reasons and solutions for the 8-hour difference in mongodb data date display

- Online troubleshooting

- Laravel文档阅读笔记-How to use @auth and @guest directives in Laravel

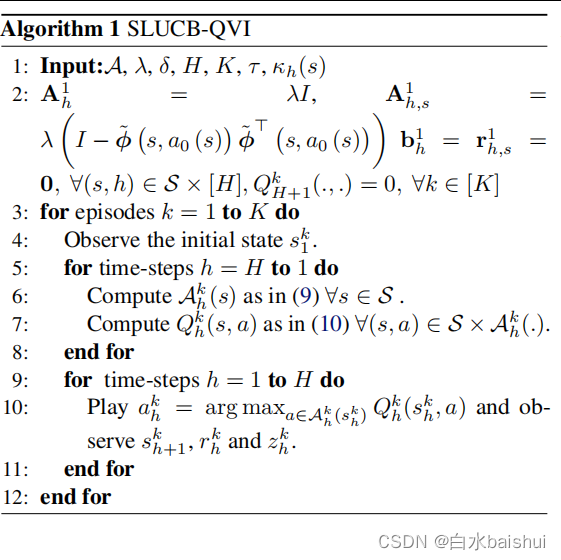

- 基于线性函数近似的安全强化学习 Safe RL with Linear Function Approximation 翻译 1

- Go context basic introduction

- System. Currenttimemillis() and system Nanotime (), which is faster? Don't use it wrong!

- Summary of small program performance optimization practice

- 智慧路灯杆水库区安全监测应用

- Exercise 9-4 finding books (20 points)

- 【Day1】 deep-learning-basics

猜你喜欢

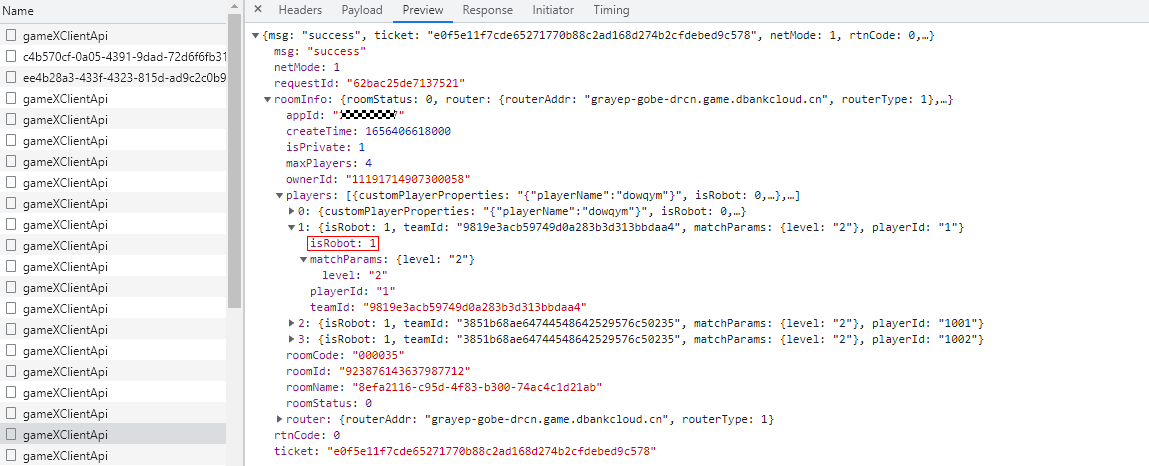

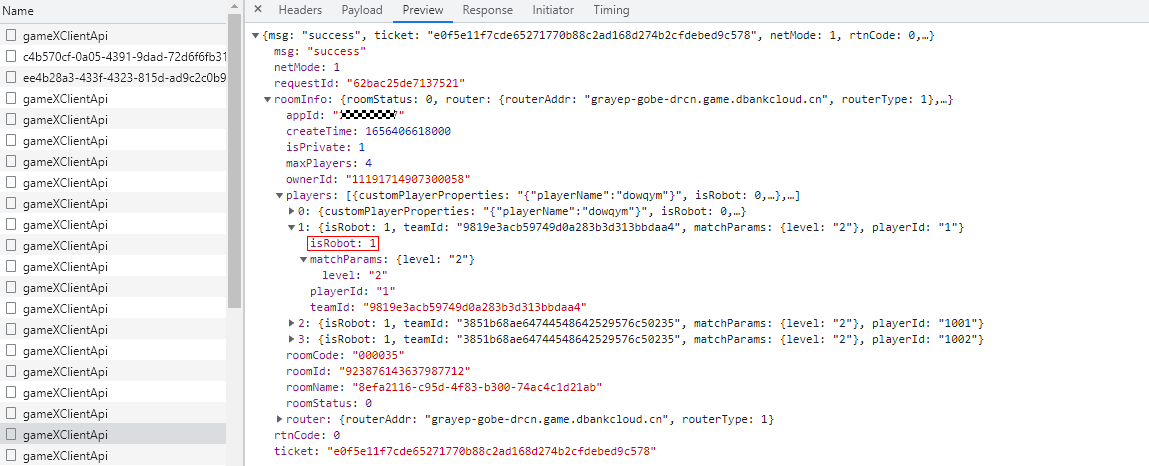

华为联机对战如何提升玩家匹配成功几率

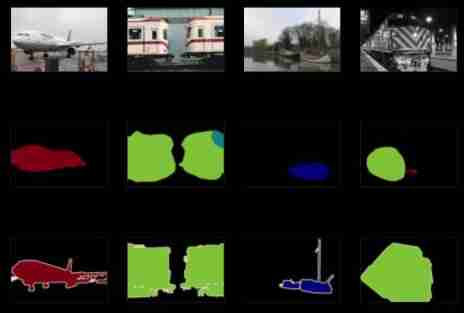

Hands on deep learning (32) -- fully connected convolutional neural network FCN

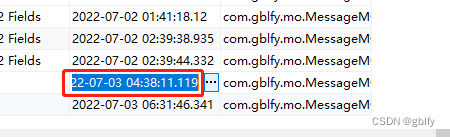

MongoDB数据日期显示相差8小时 原因和解决方案

Safety reinforcement learning based on linear function approximation safe RL with linear function approximation translation 1

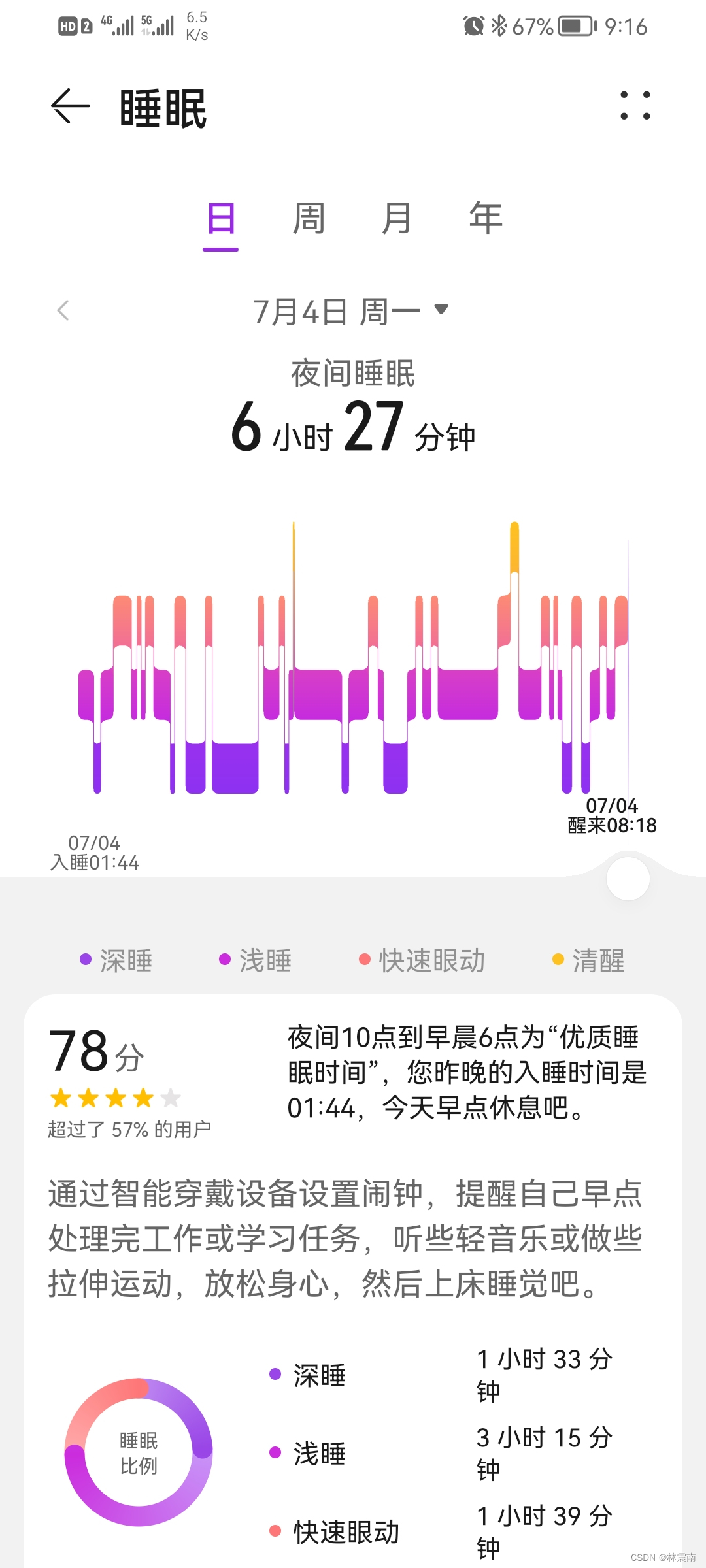

Today's sleep quality record 78 points

How can Huawei online match improve the success rate of player matching

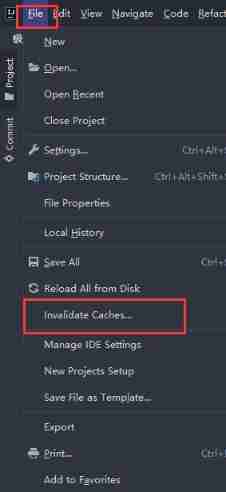

Occasional pit compiled by idea

Hands on deep learning (43) -- machine translation and its data construction

Hands on deep learning (34) -- sequence model

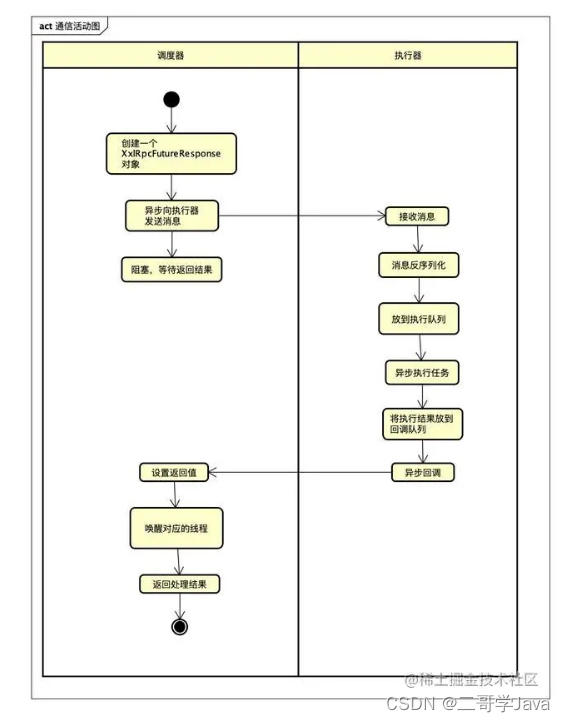

How can people not love the amazing design of XXL job

随机推荐

Use C to extract all text in PDF files (support.Net core)

leetcode1-3

直方图均衡化

Service developers publish services based on EDAs

How can Huawei online match improve the success rate of player matching

Basic principle of servlet and application of common API methods

MongoDB数据日期显示相差8小时 原因和解决方案

Hands on deep learning (36) -- language model and data set

Occasional pit compiled by idea

Tables in the thesis of latex learning

Sword finger offer 31 Stack push in and pop-up sequence

7-17 crawling worms (15 points)

Es advanced series - 1 JVM memory allocation

Latex error: missing delimiter (. Inserted) {\xi \left( {p,{p_q}} \right)} \right|}}

leetcode1-3

Exercise 9-4 finding books (20 points)

Write a mobile date selector component by yourself

品牌连锁店5G/4G无线组网方案

Es entry series - 6 document relevance and sorting

Exercise 7-4 find out the elements that are not common to two arrays (20 points)